
executor: implement disk-based hash join #12067

/run-all-tests

Codecov Report

Coverage Diff

| | master | #12067 | +/- |
|---|---|---|---|
| Coverage | 80.8753% | 80.8753% | |
| Files | 454 | 454 | |
| Lines | 99547 | 99547 | |
| Hits | 80509 | 80509 | |
| Misses | 13251 | 13251 | |
| Partials | 5787 | 5787 | |


TPC-H query 13

Run query 13 under TPC-H 10G on my laptop (MacBook Pro 15-inch, 2019, 2.6GHz).

default:

mysql> explain analyze select c_count, count(*) as custdist from ( select c_custkey, count(o_orderkey) as c_count from customer left outer join orders on c_custkey = o_custkey and o_comment not like '%pending%deposits%' group by c_custkey ) c_orders group by c_count order by custdist desc,c_count desc;

+--------------------------------+-------------+------+---------------------------------------------------------------------------------------------------+-------------------------------------------------------------------------------------------+-----------------------+

| id | count | task | operator info | execution info | memory |

+--------------------------------+-------------+------+---------------------------------------------------------------------------------------------------+-------------------------------------------------------------------------------------------+-----------------------+

| Sort_9 | 150000.00 | root | custdist:desc, c_orders.c_count:desc | time:15.838720948s, loops:2, rows:65 | 2.7578125 KB |

| └─Projection_11 | 150000.00 | root | c_count, 6_col_0 | time:15.838681959s, loops:2, rows:65 | N/A |

| └─HashAgg_14 | 150000.00 | root | group by:c_count, funcs:count(1), firstrow(c_count) | time:15.838639251s, loops:2, rows:65 | N/A |

| └─HashAgg_17 | 150000.00 | root | group by:tpch.customer.c_custkey, funcs:count(tpch.orders.o_orderkey) | time:15.837279594s, loops:1466, rows:1500000 | N/A |

| └─HashLeftJoin_20 | 2252162.08 | root | left outer join, inner:TableReader_25, equal:[eq(tpch.customer.c_custkey, tpch.orders.o_custkey)] | time:14.629918936s, loops:14982, rows:15338185 | 1.0599235333502293 GB |

| ├─TableReader_22 | 150000.00 | root | data:TableScan_21 | time:490.729044ms, loops:1466, rows:1500000 | 12.883949279785156 MB |

| │ └─TableScan_21 | 150000.00 | cop | table:customer, range:[-inf,+inf], keep order:false | proc max:369ms, min:259ms, p80:369ms, p95:369ms, rows:1500000, iters:1482, tasks:4 | N/A |

| └─TableReader_25 | 12000000.00 | root | data:Selection_24 | time:8.412349583s, loops:14492, rows:14838130 | 236.17509269714355 MB |

| └─Selection_24 | 12000000.00 | cop | not(like(tpch.orders.o_comment, "%pending%deposits%", 92)) | proc max:2.98s, min:1.359s, p80:2.63s, p95:2.966s, rows:14838130, iters:14788, tasks:31 | N/A |

| └─TableScan_23 | 15000000.00 | cop | table:orders, range:[-inf,+inf], keep order:false | proc max:2.878s, min:1.302s, p80:2.536s, p95:2.863s, rows:15000000, iters:14788, tasks:31 | N/A |

+--------------------------------+-------------+------+---------------------------------------------------------------------------------------------------+-------------------------------------------------------------------------------------------+-----------------------+

10 rows in set (15.85 sec)

(Lots of memory is used by HashLeftJoin_20, which reports 1.0599235333502293 GB.)

disk:

mysql> explain analyze select /*+ MEMORY_QUOTA(10 MB) */ c_count, count(*) as custdist from ( select c_custkey, count(o_orderkey) as c_count from customer left outer join orders on c_custkey = o_custkey and o_comment not like '%pending%deposits%' group by c_custkey ) c_orders group by c_count order by custdist desc,c_count desc;

+--------------------------------+-------------+------+---------------------------------------------------------------------------------------------------+------------------------------------------------------------------------------------------+-----------------------+

| id | count | task | operator info | execution info | memory |

+--------------------------------+-------------+------+---------------------------------------------------------------------------------------------------+------------------------------------------------------------------------------------------+-----------------------+

| Sort_9 | 150000.00 | root | custdist:desc, c_orders.c_count:desc | time:34.525661988s, loops:2, rows:65 | 2.7578125 KB |

| └─Projection_11 | 150000.00 | root | c_count, 6_col_0 | time:34.524866162s, loops:2, rows:65 | N/A |

| └─HashAgg_14 | 150000.00 | root | group by:c_count, funcs:count(1), firstrow(c_count) | time:34.524826727s, loops:2, rows:65 | N/A |

| └─HashAgg_17 | 150000.00 | root | group by:tpch.customer.c_custkey, funcs:count(tpch.orders.o_orderkey) | time:34.52306466s, loops:1466, rows:1500000 | N/A |

| └─HashLeftJoin_20 | 2252162.08 | root | left outer join, inner:TableReader_25, equal:[eq(tpch.customer.c_custkey, tpch.orders.o_custkey)] | time:33.306195067s, loops:14982, rows:15338185 | 76.69921875 KB |

| ├─TableReader_22 | 150000.00 | root | data:TableScan_21 | time:263.787279ms, loops:1466, rows:1500000 | 12.883944511413574 MB |

| │ └─TableScan_21 | 150000.00 | cop | table:customer, range:[-inf,+inf], keep order:false | proc max:252ms, min:199ms, p80:252ms, p95:252ms, rows:1500000, iters:1482, tasks:4 | N/A |

| └─TableReader_25 | 12000000.00 | root | data:Selection_24 | time:5.559257104s, loops:14492, rows:14838130 | 638.274112701416 MB |

| └─Selection_24 | 12000000.00 | cop | not(like(tpch.orders.o_comment, "%pending%deposits%", 92)) | proc max:2.072s, min:905ms, p80:1.911s, p95:1.988s, rows:14838130, iters:14788, tasks:31 | N/A |

| └─TableScan_23 | 15000000.00 | cop | table:orders, range:[-inf,+inf], keep order:false | proc max:1.969s, min:836ms, p80:1.804s, p95:1.87s, rows:15000000, iters:14788, tasks:31 | N/A |

+--------------------------------+-------------+------+---------------------------------------------------------------------------------------------------+------------------------------------------------------------------------------------------+-----------------------+

10 rows in set (34.64 sec)

(We can see the memory usage in
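The two EXPLAIN ANALYZE outputs above can be compared with a little arithmetic. The sketch below takes the total query times and the memory column of HashLeftJoin_20 from both runs. It assumes the KB and GB figures are binary units (1 KB = 1024 bytes), which the output itself does not state; the function names are illustrative only.

```go
package main

import "fmt"

// Assumed binary units; the EXPLAIN ANALYZE output does not say which it uses.
const (
	kib = 1024.0
	gib = 1024.0 * 1024.0 * 1024.0
)

// slowdown returns how many times longer the spilling run took.
func slowdown(defaultSec, spillSec float64) float64 {
	return spillSec / defaultSec
}

// memoryReduction returns how many times less memory the spilling run reported.
func memoryReduction(defaultBytes, spillBytes float64) float64 {
	return defaultBytes / spillBytes
}

func main() {
	// Total query time: 15.85 sec by default, 34.64 sec with MEMORY_QUOTA(10 MB).
	fmt.Printf("slowdown: %.2fx\n", slowdown(15.85, 34.64))

	// HashLeftJoin_20 memory column: 1.0599235333502293 GB vs 76.69921875 KB.
	fmt.Printf("HashLeftJoin_20 memory reduction: %.0fx\n",
		memoryReduction(1.0599235333502293*gib, 76.69921875*kib))
}
```

Under these assumptions the spilling run is a little over twice as slow, while the join operator's reported memory drops by roughly four orders of magnitude.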

I've moved the utility changes in this PR out to #12116. Please review it first.

/run-unit-test


LGTM


LGTM

@SunRunAway merge failed.

/run-integration-ddl-test

What problem does this PR solve?

part of #11607

What is changed and how it works?

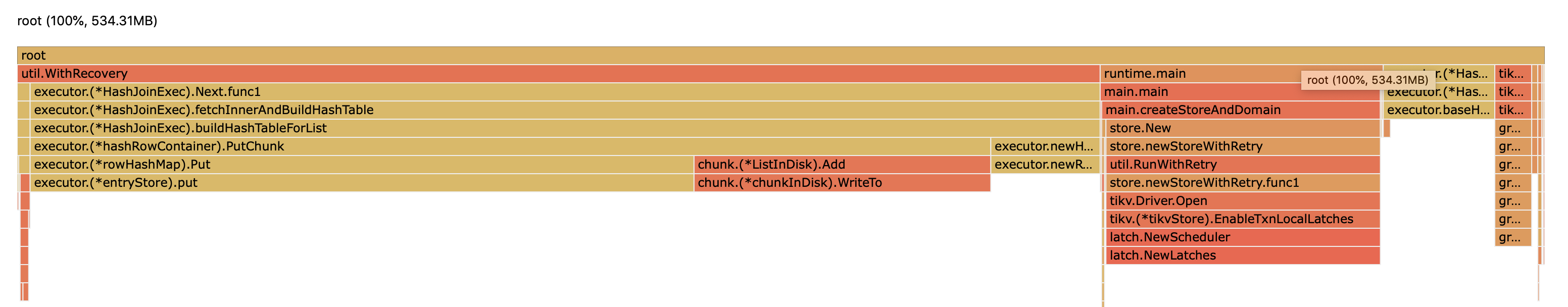

When the memory usage of a query exceeds mem-quota-query, the hash join's data is spilled to disk.

I've written a slide deck to demonstrate how spilling to disk is triggered in this PR:

https://docs.google.com/presentation/d/1Sa9xNbDTPnLwnQHLKfpwksdYXWodisXPEqp-WR5Up0U/edit?usp=sharing
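As a rough illustration of the trigger described above, here is a minimal sketch, not the code in this PR. The type rowContainer and all of its fields and methods are hypothetical: it counts the bytes it holds, and the first time the count exceeds a quota (standing in for mem-quota-query) it moves every buffered row to a temporary file and writes later rows there directly.

```go
package main

import (
	"bufio"
	"fmt"
	"os"
)

// rowContainer is a hypothetical stand-in for the storage that holds the
// hash join's build-side rows. It is not TiDB's actual type.
type rowContainer struct {
	quota   int64         // memory budget in bytes (plays the role of mem-quota-query)
	used    int64         // bytes currently held in memory
	inMem   []string      // rows kept in memory before spilling
	file    *os.File      // temporary file, non-nil once spilled
	w       *bufio.Writer // buffered writer over file
	numRows int           // total rows added, in memory or on disk
}

// Add stores one row and spills everything to disk the first time the
// tracked memory usage exceeds the quota.
func (c *rowContainer) Add(row string) error {
	c.numRows++
	if c.file != nil {
		return c.writeRow(row)
	}
	c.inMem = append(c.inMem, row)
	c.used += int64(len(row))
	if c.used > c.quota {
		return c.spill()
	}
	return nil
}

// spill creates a temporary file and moves all in-memory rows into it.
func (c *rowContainer) spill() error {
	f, err := os.CreateTemp("", "hashjoin-spill-*")
	if err != nil {
		return err
	}
	c.file, c.w = f, bufio.NewWriter(f)
	for _, r := range c.inMem {
		if err := c.writeRow(r); err != nil {
			return err
		}
	}
	c.inMem, c.used = nil, 0
	return nil
}

func (c *rowContainer) writeRow(row string) error {
	_, err := fmt.Fprintln(c.w, row)
	return err
}

// Spilled reports whether rows are now stored on disk.
func (c *rowContainer) Spilled() bool { return c.file != nil }

// NumRows returns the number of rows added so far.
func (c *rowContainer) NumRows() int { return c.numRows }

// Close flushes and removes the temporary file, if one was created.
func (c *rowContainer) Close() error {
	if c.file == nil {
		return nil
	}
	if err := c.w.Flush(); err != nil {
		return err
	}
	name := c.file.Name()
	if err := c.file.Close(); err != nil {
		return err
	}
	return os.Remove(name)
}

func main() {
	c := &rowContainer{quota: 16} // tiny quota so the example spills quickly
	for _, row := range []string{"1,alice", "2,bob", "3,carol", "4,dave"} {
		if err := c.Add(row); err != nil {
			panic(err)
		}
		fmt.Printf("rows=%d spilled=%v\n", c.NumRows(), c.Spilled())
	}
	if err := c.Close(); err != nil {
		panic(err)
	}
}
```

The sketch only covers the switch from memory to disk. Probing spilled rows during the join, and tracking memory across a whole query rather than one container, are left out.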

Here is the benchmark result:

The TPC-H query 13 result is here: #12067 (comment)

Check List

Tests

Now we can see the log in tidb-server:

Code changes

Side effects

Related changes

Release note

oom-use-tmp-storage in tidb.toml, which is true by default, now controls whether a hashJoin (and other executors that will support spilling in the future) spills over to disk. If a query with a hashJoin exceeds mem-quota-query, the hashJoin will spill over to the temporary directory (which could affect performance).

The temporary directory is determined by TMPDIR when starting tidb-server.
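On Unix systems, Go's os.TempDir returns the value of TMPDIR when it is set and non-empty, and /tmp otherwise. Assuming tidb-server resolves its temporary directory through os.TempDir (the release note does not say so explicitly), the effect of TMPDIR can be checked with a small sketch like this one. The helper tempDirWith and the path /data/tidb-tmp are hypothetical.

```go
package main

import (
	"fmt"
	"os"
)

// tempDirWith reports which directory os.TempDir selects while TMPDIR is set
// to dir, then restores the previous environment. Unix behavior is assumed.
func tempDirWith(dir string) (string, error) {
	old, had := os.LookupEnv("TMPDIR")
	if err := os.Setenv("TMPDIR", dir); err != nil {
		return "", err
	}
	defer func() {
		if had {
			os.Setenv("TMPDIR", old)
		} else {
			os.Unsetenv("TMPDIR")
		}
	}()
	return os.TempDir(), nil
}

func main() {
	fmt.Println("current temp dir:", os.TempDir())

	dir, err := tempDirWith("/data/tidb-tmp") // hypothetical spill location
	if err != nil {
		panic(err)
	}
	fmt.Println("with TMPDIR set:", dir)
}
```

Because spilling can affect performance, pointing TMPDIR at a fast disk with enough free space is a reasonable precaution.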