BUG: entropy calculations failing for large values #18093

Comments

Just a heads up that this formula is wrong. You applied the large-x expansion of gammaln, although x is defined to be not large here.
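To illustrate why this matters, here is a minimal sketch that compares a truncated Stirling series for ln Γ(x) against `math.lgamma`. The helper name `gammaln_stirling` is hypothetical; the series terms are the ones used in the sympy snippets later in this thread, and nothing here is taken from the SciPy implementation.

```python
import math

def gammaln_stirling(x):
    """Truncated large-x (Stirling) series for ln Gamma(x)."""
    return (x * math.log(x) - x - 0.5 * math.log(x) + 0.5 * math.log(2 * math.pi)
            + 1 / (12 * x) - 1 / (360 * x**3) + 1 / (1260 * x**5))

# The series is only trustworthy when x is large; the error grows quickly
# as x approaches 1.
for x in (1.0, 2.0, 10.0, 1000.0):
    err = abs(gammaln_stirling(x) - math.lgamma(x))
    print(f"x = {x:7.1f}   abs error = {err:.3e}")
```

The error at small x is many orders of magnitude larger than at large x, so the expansion should only replace `gammaln` for the argument that is actually large.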

Thanks for finding, corrected it! |

|

BTW technically these implementations don't really need to use

When this issue is ready to be closed, it would be good to take one more pass to clean up a few things:

Individually, these are mostly insignificant. But if we're going to take the time to do all this, we might as well do it right. |

|

Anyone interested is welcome to help fix this issue. |

Yes, that sounds good. I'm a bit busy right now, so I wouldn't be able to handle this for a while, anyways. |

|

Hi @dschmitz89 |

Yes definitely! I guess that |

I see thank you. I'll have a look at that then as a start. |

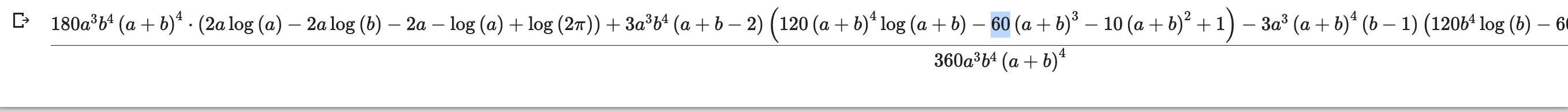

@dschmitz89 I tried using Wolfram Alpha and the output it returns is quite long. Could you kindly have a look to see whether I have entered this correctly? (Wolfram Alpha link) Also, when comparing the results with mpmath, I am not sure how we can handle shape_info.log_pdet using mpmath.

I think in your input wolfram alpha did not recognize the I would not worry about the |

|

@dschmitz89 Thank you! This certainly looks much better. |

|

Hi @dschmitz89 If we want to handle the beta entropy

    def _entropy(self, a, b):
        return (sc.betaln(a, b) - (a - 1) * sc.psi(a) -
                (b - 1) * sc.psi(b) + (a + b - 2) * sc.psi(a + b))

do we need to consider each of the three conditions separately for the expansions as mentioned in the description i.e.

|

Edit: I think we only need two cases. One if both arguments are large and one if only one argument is large. The formula is kind of "symmetric" so |

@dschmitz89 This is the code when I also add the expansion of gammaln.

Code

    import sympy
    from sympy import log, pi, sqrt, simplify, expand, Rational

    x, y, a, b = sympy.symbols("x, y, a, b")

    def digamma(x):
        return log(x) - 1/(2*x) - 1/(12*x**2) + 1/(120*x**4)

    def gammaln(x):
        return x*log(x) - x - Rational(1,2)*log(x) + Rational(1,2)*log(2*pi) + 1/(12*x) - 1/(360*x**3) + 1/(1260*x**5)

    def betaln(x, y):
        return gammaln(x) - x*log(y)

    old = betaln(a, b) - (a - 1)*digamma(a) - (b - 1)*digamma(b) + (a + b - 2)*digamma(a+b)
    ref = simplify(simplify(simplify(old)))
    ref

    import numpy as np
    import matplotlib.pyplot as plt
    from scipy import stats
    from mpmath import mp

    mp.dps = 500

    def beta_entropy_mpmath(a, b):
        a = mp.mpf(a)
        b = mp.mpf(b)
        entropy = mp.log(mp.beta(a, b)) - (a - 1) * mp.digamma(a) - (b - 1) * mp.digamma(b) + (a + b - 2) * mp.digamma(a + b)
        return float(entropy)

    def asymptotic_one_large(a, b):
        return (
            - a*np.log(b) + a*np.log(a + b) - a - a*(a + b)**-2./12 + a*(a + b)**-4./120
            - b*np.log(b) + b*np.log(a + b) - b*(a + b)**-2./12 + b*(a + b)**-4./120
            + np.log(a)/2 + np.log(b) - 2*np.log(a + b) + 1/2 + np.log(2*np.pi)/2 + 1/(a + b)
            + (a + b)**-2./6 - (a + b)**-4./60 - 5/(12*b) - b**-2./12
            - b**-3./120 + b**-4./120 - 1/(3*a) - a**-2./12 - a**-3./90
            + a**-4./120 + a**-5./1260
        )

    a, b = 1.0, 1e50
    print(asymptotic_one_large(a, b))
    print(beta_entropy_mpmath(a, b))
    >>> -114.12896691014839
    >>> -114.12925464970229

|

@OmarManzoor |

Thanks. |

I tried this out by ignoring the - (a-1)*digamma(a) in the expansion

    import sympy
    from sympy import log, pi, sqrt, simplify, expand, Rational

    x, y, a, b = sympy.symbols("x, y, a, b")

    def digamma(x):
        return log(x) - 1/(2*x) - 1/(12*x**2) + 1/(120*x**4)

    def gammaln(x):
        return x*log(x) - x - Rational(1,2)*log(x) + Rational(1,2)*log(2*pi) + 1/(12*x) - 1/(360*x**3)

    def betaln(x, y):
        return gammaln(x) - x*log(y)

    #old_1 = betaln(a, b) - (a - 1)*digamma(a) - (b - 1)*digamma(b) + (a + b - 2)*digamma(a+b)
    old = betaln(a, b) - (b - 1)*digamma(b) + (a + b - 2)*digamma(a+b)
    ref = simplify(simplify(simplify(old)))
    ref

I simplified this expression and added the - (a - 1) * psi(a) at the end

    def asymptotic_one_large(a, b):
        sm = a + b
        return (
            a*np.log(a) - a*(np.log(b) + 1) + (np.log(2*np.pi) - np.log(a)) / 2
            + (sm - 2) * (-1/(2*sm) - sm**-2.0/12 + np.log(sm) + sm**-4.0/120)
            - (b - 1) * (np.log(b) - 1/(2*b) - b**-2.0/12 + b**-4.0/120)
            + 1/(12*a) - a**-3.0/360
            - (a - 1) * psi(a)
        )

    def beta_entropy_mpmath(a, b):
        a = mp.mpf(a)
        b = mp.mpf(b)
        entropy = mp.log(mp.beta(a, b)) - (a - 1) * mp.digamma(a) - (b - 1) * mp.digamma(b) + (a + b - 2) * mp.digamma(a + b)
        return float(entropy)

    a, b = 1.0, 1e50
    print(asymptotic_one_large(a, b))
    print(beta_entropy_mpmath(a, b))
    >>> 0.08055555555555555
    >>> -114.12925464970229

The overall results seem to be really off. Could you kindly have a look?

We are confusing the arguments with each other. For large That means here we need to do: where |

|

I tried this as well but it just does not seem to match. Here is the code

    from scipy.special import psi
    from scipy.special import gammaln

    def asymptotic_one_large(a, b):
        s = a + b
        t1 = gammaln(a) - a*np.log(b) - (a - 1)*psi(a)
        t2 = np.log(b) - b*np.log(b) - 1/(2*b) + 1/(12*b) - b**-2./12
        t3 = a*np.log(s) + b*np.log(s) - 2*np.log(s) - 1/(12*s) + 1/s + s**-2./6
        return t1 + t2 + t3

    def beta_entropy_mpmath(a, b):
        a = mp.mpf(a)
        b = mp.mpf(b)
        entropy = mp.log(mp.beta(a, b)) - (a - 1) * mp.digamma(a) - (b - 1) * mp.digamma(b) + (a + b - 2) * mp.digamma(a + b)
        return float(entropy)

    a, b = 2.0, 1e20
    print(asymptotic_one_large(a, b))
    print(beta_entropy_mpmath(a, b))
    >>> 0.0
    >>> -44.47448619497938

By the way, if I define asymptotic_one_large like this, returning the value at once instead of keeping it in t1, t2, t3 and then adding, the result is completely different

    def asymptotic_one_large(a, b):
        s = a + b
        return (
            gammaln(a) - a*np.log(b) - (a - 1)*psi(a)
            + np.log(b) - b*np.log(b) - 1/(2*b) + 1/(12*b) - b**-2./12
            + a*np.log(s) + b*np.log(s) - 2*np.log(s) - 1/(12*s) + 1/s + s**-2./6
        )

    a, b = 2.0, 1e20
    print(asymptotic_one_large(a, b))
    print(beta_entropy_mpmath(a, b))
    >>> -92.10340371976183
    >>> -44.47448619497938
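A plausible reading of why the two otherwise identical definitions disagree is that floating-point addition is not associative: once a small term is added to a huge one it is absorbed, so the order of summation decides which information survives. The sketch below uses made-up magnitudes of roughly the size seen above (they are illustrative assumptions, not values extracted from the snippets).

```python
import math

# Two huge terms that cancel exactly, plus one small term that carries the answer.
small, big_neg, big_pos = -92.5, -4.6e21, 4.6e21

left_to_right = (small + big_neg) + big_pos   # small is absorbed into big_neg and lost
big_first = small + (big_neg + big_pos)       # large terms cancel before small is added
exact = math.fsum([small, big_neg, big_pos])  # correctly rounded sum of the inputs

print(left_to_right, big_first, exact)
```

Note that reordering alone cannot rescue the real computation: the large logarithmic terms are themselves only accurate to their own rounding error, which is far bigger than the final result, so the cancellation has to be removed analytically.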

|

@OmarManzoor sorry for all this back and forth. I found a way around the catastrophic cancellations that occur in the logarithmic terms finally.

    from scipy.special import psi
    from scipy.special import gammaln
    from mpmath import mp
    import numpy as np

    mp.dps = 100

    def asymptotic_one_large(a, b):
        s = a + b
        simple_terms = gammaln(a) - (a -1)*psi(a) - 1/(2*b) + 1/(12*b) - b**-2./12 - 1/(12*s) + 1/s + s**-2./6
        difficult_terms = s * np.log1p(a/b) + np.log(b) - 2 * np.log(s)  # np.log(b) * (1 - s) + (s - 2) * np.log(s)
        return simple_terms + difficult_terms

    def beta_entropy_mpmath(a, b):
        a = mp.mpf(a)
        b = mp.mpf(b)
        entropy = mp.log(mp.beta(a, b)) - (a - mp.one) * mp.digamma(a) - (b - mp.one) * mp.digamma(b) + (a + b - 2*mp.one) * mp.digamma(a + b)
        return float(entropy)

    a, b = 20, 1e20
    print(asymptotic_one_large(a, b))
    print(beta_entropy_mpmath(a, b))
    >>> -43.151773525282245
    >>> -43.15177352528225

The trick is to write

    a, b = 20., 1e20
    s = a+b
    np.log(b) * (1 - s) + (s - 2) * np.log(s), s * np.log1p(a/b) + np.log(b) - 2 * np.log(s)
    >>> (0.0, -26.051701859880907)
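The two expressions are algebraically identical, since (1 - s) ln b + (s - 2) ln s = s (ln s - ln b) + ln b - 2 ln s and ln s - ln b = ln(1 + a/b) for s = a + b. As a sanity check that needs neither SciPy nor mpmath, the sketch below evaluates the original form with Python's `decimal` module at 60 significant digits and compares both float versions against it. The function names are hypothetical and the precision setting is an assumption chosen so that a + b is represented exactly.

```python
import math
from decimal import Decimal, getcontext

getcontext().prec = 60  # enough digits to hold a + b exactly and survive the cancellation

def log_terms_naive(a, b):
    """Original form: two terms of size ~b*log(b) that nearly cancel."""
    s = a + b
    return math.log(b) * (1 - s) + (s - 2) * math.log(s)

def log_terms_log1p(a, b):
    """Rewritten form: the cancellation is done analytically via log1p(a/b)."""
    s = a + b
    return s * math.log1p(a / b) + math.log(b) - 2 * math.log(s)

def log_terms_reference(a, b):
    """Original form evaluated in high-precision decimal arithmetic."""
    a, b = Decimal(a), Decimal(b)
    s = a + b
    return float(b.ln() * (1 - s) + (s - 2) * s.ln())

a, b = 20.0, 1e20
print(log_terms_naive(a, b), log_terms_log1p(a, b), log_terms_reference(a, b))
```

In double precision `a + b` rounds to `b` for these inputs, which is why the naive form collapses while the `log1p` form keeps the contribution of `a`.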

Very nice! Thank you for this. |

|

I was curious whether anyone could share an example use of the numerical value of the entropy of a distribution. The concept of differential entropy is used all over, of course, but I haven't been able to find examples where the entropy of a named distribution (like one of those named above) is used. Thanks for considering it! |

|

Closing for some time until more reviewer capacity is available. |

Describe your issue.

For a few distributions, the entropy calculation currently fails for large values. As an example, consider the gamma distribution: for $x > 1e12$, the computed entropy starts to oscillate and, after approx. 2e16, returns just 0.

Affected distributions and current PRs that aim to fix it:

- gamma: ENH: asymptotic expansion for gamma distribution entropy #18095
- gengamma: ENH: Added asymptotic expansion for gengamma entropy #18131
- invgamma: ENH: Added asymptotic expansion for invgamma entropy (#18093) #18122
- f
- t: Added asymptotic expansion for t entropy (#18093) #18135
- beta: ENH: asymptotic expansion for beta entropy for large a and b #18499, ENH: Improve beta entropy when one argument is large #18714
- chi: ENH: improve entropy calculation of chi distribution using asymptotic expansion #18094
- chi2: ENH: use asymptotic expansions for entropy of `genlogistic` and `chi2` #17930
- genlogistic: ENH: use asymptotic expansions for entropy of `genlogistic` and `chi2` #17930
- wishart
- invwishart
- multivariate_t: ENH: asymptotic expansion for multivariate t entropy #18465

The reason for the failures is catastrophic cancellation occurring in the subtraction of the logarithmic beta/gamma functions `betaln`/`gammaln` on one side and the digamma function `psi` or simply `log` on the other side.

This can be avoided by using asymptotic expansions instead of calling the functions from `scipy.special`. See #17930 for examples.

The expansions for $\psi$ and $\ln(\Gamma)$ used in PRs such as #17929 are:
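As a sketch of how such expansions remove the cancellation, the truncated series for $\psi$ and $\ln(\Gamma)$ that appear in the code snippets of this thread can be substituted into the unit-scale gamma entropy, a + ln Γ(a) + (1 - a) ψ(a), and simplified by hand. The function names and the truncation order below are assumptions made for illustration; this is not necessarily the form used in the linked PRs.

```python
import math

def digamma_asymptotic(x):
    """Truncated large-x series for psi(x)."""
    return math.log(x) - 1 / (2 * x) - 1 / (12 * x**2) + 1 / (120 * x**4)

def gammaln_asymptotic(x):
    """Truncated large-x (Stirling) series for ln Gamma(x)."""
    return (x * math.log(x) - x - 0.5 * math.log(x) + 0.5 * math.log(2 * math.pi)
            + 1 / (12 * x) - 1 / (360 * x**3))

def gamma_entropy_naive(a):
    """Entropy formula with the series plugged in term by term.

    The a*log(a) pieces are still subtracted numerically, so this
    loses all accuracy once a is very large.
    """
    return a + gammaln_asymptotic(a) + (1 - a) * digamma_asymptotic(a)

def gamma_entropy_asymptotic(a):
    """Same series after cancelling the a*log(a) and a terms analytically."""
    return (0.5 * (1 + math.log(2 * math.pi * a))
            - 1 / (3 * a) - 1 / (12 * a**2) - 1 / (90 * a**3))

for a in (50.0, 1e12, 1e20):
    print(a, gamma_entropy_naive(a), gamma_entropy_asymptotic(a))
```

The leading term is the entropy of a normal distribution with variance a, which is the expected large-a limit; the simplified form stays smooth where the term-by-term version degrades.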

For the beta function, expansions are listed on Wikipedia:

If $x$ and $y$ are large:

If one argument is large (here `y`; the same holds for large `x`, as `betaln` is symmetric):

This issue can keep track of the necessary fixes.
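For the case where only one argument is large, the standard approximation B(x, y) ~ Γ(x) y^(-x) for large y and fixed x gives ln B(x, y) ≈ ln Γ(x) - x ln y, which matches the `betaln` helper used in the sympy snippets in the comments. The sketch below, using only the standard library and hypothetical function names, compares it with the direct difference of log-gamma values.

```python
import math

def betaln_direct(x, y):
    """ln B(x, y) as a difference of log-gamma values (cancels for huge y)."""
    return math.lgamma(x) + math.lgamma(y) - math.lgamma(x + y)

def betaln_one_large(x, y):
    """Leading-order ln B(x, y) for large y and moderate x."""
    return math.lgamma(x) - x * math.log(y)

x = 2.5
for y in (1e6, 1e20):
    print(y, betaln_direct(x, y), betaln_one_large(x, y))
```

For moderately large y the two agree up to a relative correction of order x(x - 1)/(2y); for very large y, `x + y` rounds to `y` in double precision and the direct difference no longer carries any information about `x`.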

Reproducing Code Example

Error message

SciPy/NumPy/Python version and system information
