This is a PyTorch implementation of SiamDW with training code, based mainly on deeper_wider_siamese_trackers and Siamese-RPN. I reformatted my code with reference to the author's official SiamDW code.

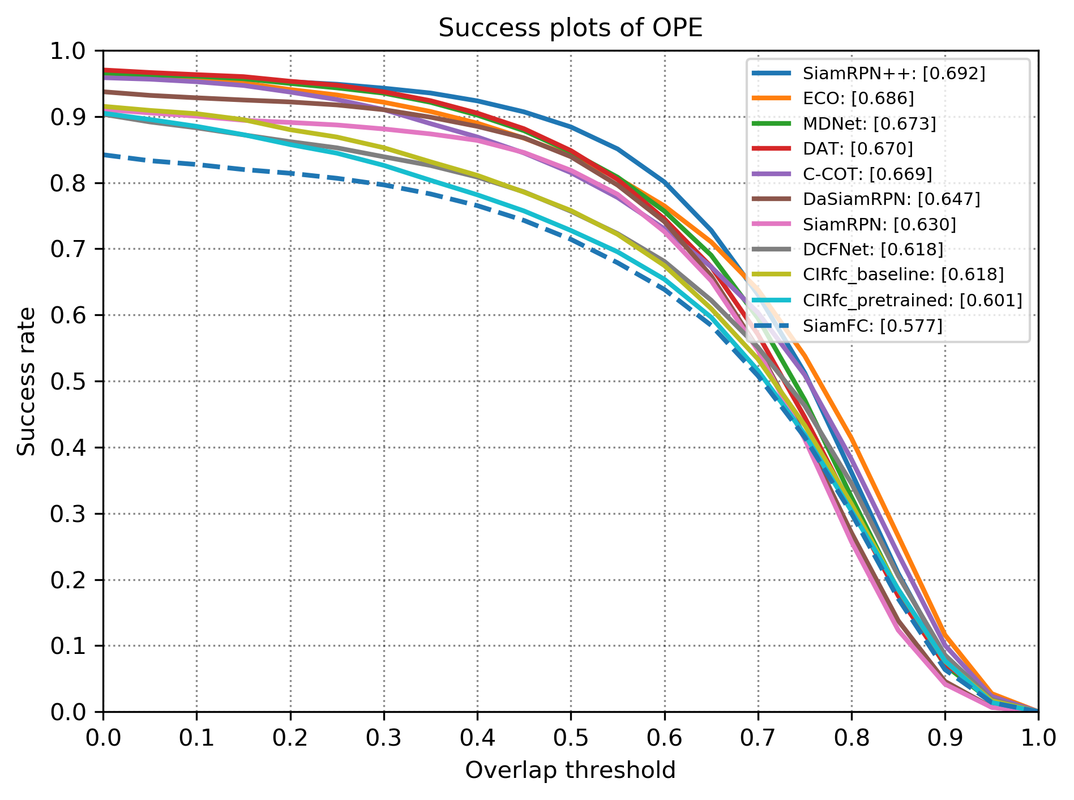

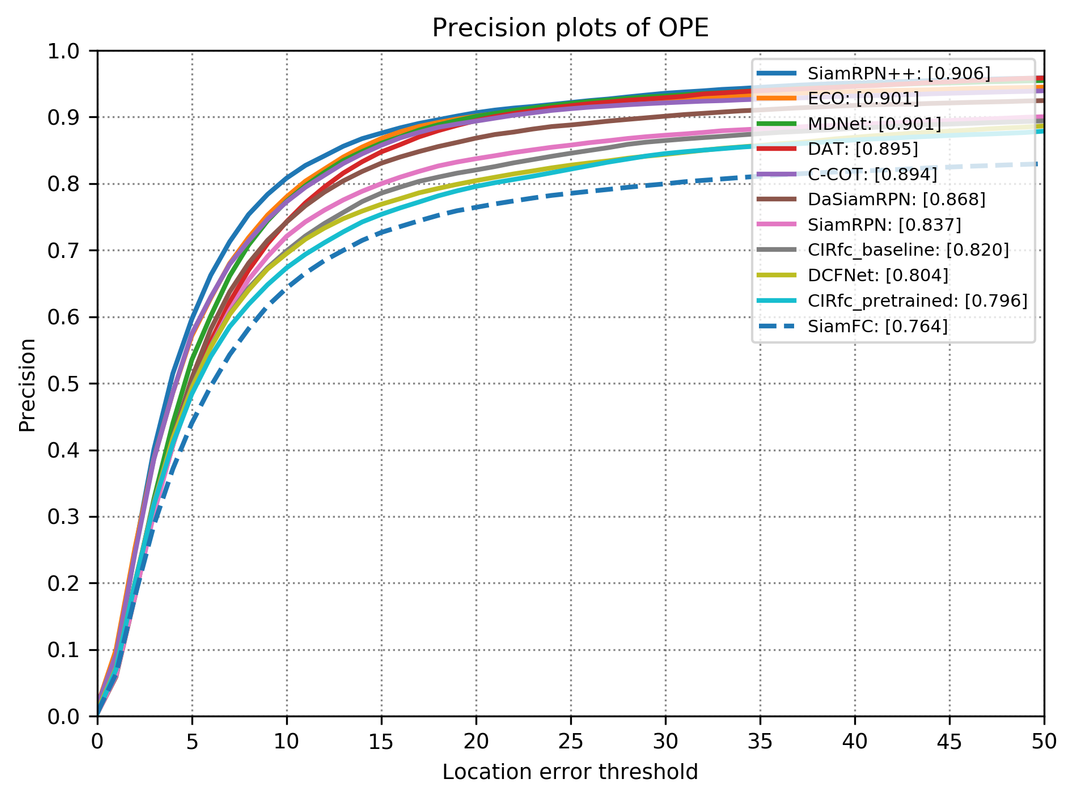

For more details about the CIR tracker, please refer to the paper Deeper and Wider Siamese Networks for Real-Time Visual Tracking by Zhipeng Zhang and Houwen Peng [1].

NOTE: The authors introduced the CIR/CIR-D units into both SiamFC and SiamRPN, denoted SiamFC+ and SiamRPN+ respectively. Currently this repo only contains SiamFC+ and SiamRPN+ with the ResNet22 backbone; the others are left as future work.

The repo is still under development.

- python == 3.6

- pytorch == 0.3.1

- numpy == 1.12.1

- opencv == 3.1.0

- data preparation

  - Follow the instructions in Siamese-RPN to curate the `VID` and `YTB` datasets. If you also want to use `GOT10K`, follow the same instructions or download the curated data provided by the author.

  - Create the soft links `data_curated` and `data_curated.lmdb` in the folder `dataset`.

- download pretrained model

  - Download the pretrained model from OneDrive, GoogleDrive or BaiduDrive. The extraction code for BaiduDrive is `7rfu`.

  - Put the downloaded files in the `models/pretrain` directory.

- choose the training dataset by setting the parameters in `lib/utils/config.py`. For example, to train `SiamRPN+` on both the `VID` and `YTB` datasets, set both `VID_used` and `YTB_used` to `True`.

- choose the model to be trained by modifying `train.sh`, e.g., to train `SiamFC+` use the command `CUDA_VISIBLE_DEVICES=0 python bin/train_siamfc.py --arch SiamFC_Res22 --resume ./models/SiamFC_Res22_mine.pth`, or to train `SiamRPN+` use `CUDA_VISIBLE_DEVICES=0 python bin/train_siamrpn.py --arch SiamRPN_Res22 --resume ./models/SiamRPN_Res22_mine.pth`.
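As a minimal sketch of the soft-link and pretrained-model steps for training, the following shell commands create the expected folders and links when run from the repository root. The source paths `/path/to/data_curated` and `/path/to/data_curated.lmdb`, and the `CURATED_DIR` / `CURATED_LMDB` variable names, are placeholders assumed for illustration; substitute wherever your curated data actually lives.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Placeholders (assumptions): point these at your own curated data.
CURATED_DIR="${CURATED_DIR:-/path/to/data_curated}"
CURATED_LMDB="${CURATED_LMDB:-/path/to/data_curated.lmdb}"

# Folders named in the steps above; mkdir -p is a no-op if they already exist.
mkdir -p dataset models/pretrain

# -s: symbolic link, -f: replace an existing link,
# -n: treat an existing link to a directory as a file instead of descending into it.
ln -sfn "$CURATED_DIR" dataset/data_curated
ln -sfn "$CURATED_LMDB" dataset/data_curated.lmdb

# Show where the links point so a wrong path is easy to spot.
ls -l dataset
```

The downloaded pretrained weights then go into `models/pretrain` by hand, since their file names depend on which archive you downloaded.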

- data preparation

  - Create the soft link `OTB2015` in the folder `dataset`.

- start tracking by modifying `test.sh`, as above

- OTB2015
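A minimal sketch of the `OTB2015` soft-link step for tracking, run from the repository root. The source path `/path/to/OTB2015` and the `OTB_DIR` variable are placeholders assumed for illustration. The final check only prints a warning if the link does not resolve to a directory.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Placeholder (assumption): where the OTB2015 benchmark was downloaded.
OTB_DIR="${OTB_DIR:-/path/to/OTB2015}"

mkdir -p dataset
ln -sfn "$OTB_DIR" dataset/OTB2015

# Warn early if the link is dangling, e.g. because OTB_DIR is still the placeholder.
if [ ! -d dataset/OTB2015 ]; then
    echo "warning: dataset/OTB2015 does not resolve to a directory" >&2
fi
```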

[1] Zhipeng Zhang, Houwen Peng. Deeper and Wider Siamese Networks for Real-Time Visual Tracking. Conference on Computer Vision and Pattern Recognition (CVPR). IEEE, 2019.