
CUDA 10 #22706

I've built TF 1.12.0rc0 with all the latest versions, and it tests well except for this silly warning:

*** WARNING *** You are using ptxas 10.0.145, which is older than 9.2.88. ptxas 9.x before 9.2.88 is known to miscompile XLA code, leading to incorrect results or invalid-address errors. You do not need to update to CUDA 9.2.88; cherry-picking the ptxas binary is sufficient.

It seems to be no problem for now. The only thing left is Python 3.7, which I haven't tried yet.

Could we expect CUDA 10 + Python 3.7 prebuilt images from 1.13rc0?

@alanpurple Ahh, I had not tried CUDA 10 with XLA yet; it is now compiled in by default. Great feedback. I will pass the warning on to the team (b/117268529); it looks like they might have a slight error in their version checker. As for Python versions, that is not under my authority (even a little). @gunan owns that part of the matrix.

This was completely broken for CUDA versions > 9 and resulted in spurious warnings. Reported in #22706#issuecomment-426861394 -- thank you! PiperOrigin-RevId: 215841354
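A spurious "10.0.145 is older than 9.2.88" warning is the classic symptom of a version checker that does not compare versions numerically. The sketch below is not TensorFlow's actual checker (the helper names are hypothetical); it only illustrates one common way such a bug arises, comparing dotted versions as text, and the usual remedy of comparing integer tuples:

```python
def parse_version(text):
    """Turn a dotted version such as '10.0.145' into a tuple of ints."""
    return tuple(int(part) for part in text.split("."))


def is_older(found, minimum):
    """True if version `found` is strictly older than `minimum`."""
    return parse_version(found) < parse_version(minimum)


if __name__ == "__main__":
    # Naive text comparison: '1' sorts before '9', so this prints True (wrong).
    print("10.0.145" < "9.2.88")
    # Numeric tuple comparison: (10, 0, 145) > (9, 2, 88), so this prints False.
    print(is_older("10.0.145", "9.2.88"))
```

Tuples compare element by element, so a major version of 10 correctly outranks 9 regardless of how many digits it has.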

@tfboyd Hey, could you kindly share the .whl of TensorFlow 1.11 with,

Updated 10-15-2018: Moved my builds into the original comment to make the thread shorter.

Updated 10-10-2018: Build is under way for Python 2.7 and compute capabilities 3.7, 5.2, 6.0, 6.1, and 7.0 (I normally only do 6.0 and 7.0, so I tried to think about what all of you might want).
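Whether a wheel built for a given list of compute capabilities runs natively on your GPU can be reasoned about with a simplified form of NVIDIA's binary-compatibility rule: a cubin built for (major, minor) runs on devices with the same major version and an equal or higher minor version. This is a sketch under that assumption only; it deliberately ignores PTX JIT compilation, which can cover newer GPUs at the cost of startup time. The helper name is hypothetical, and the build list is the one from the comment above:

```python
# Compute capabilities from the build comment above, as (major, minor).
BUILT_FOR = [(3, 7), (5, 2), (6, 0), (6, 1), (7, 0)]


def has_native_kernels(device, built=BUILT_FOR):
    """True if some built target can run natively on `device`.

    Simplified rule: a cubin for (major, minor) runs on devices with the same
    major version and an equal or higher minor version. PTX JIT is ignored.
    """
    dev_major, dev_minor = device
    return any(major == dev_major and minor <= dev_minor for major, minor in built)


if __name__ == "__main__":
    # 7.5 is what an RTX 2080 Ti reports; 3.5 is an older Kepler part.
    for cc in [(6, 1), (7, 5), (3, 5)]:
        print(cc, has_native_kernels(cc))
```

Under this rule a 7.5 card is covered by the 7.0 cubins, while a 3.5 card is not covered by the 3.7 build.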


Just wrote instructions on how to build TF from scratch. Maybe somebody will find them useful.

Here is my new tutorial for building TensorFlow 1.12 + CUDA 10.0 + cuDNN 7.3.1 + NCCL 2.3.5 + Bazel 0.17.2: https://www.python36.com/how-to-install-tensorflow-gpu-with-cuda-10-0-for-python-on-ubuntu/. You will also get a prebuilt wheel at the end.

Thanks @arunmandal53 for taking the time to write the tutorial. However, I need to install TensorFlow in the Python installation under my home account rather than in the Python that comes with Ubuntu. I think I need to wait until TensorFlow releases a version that can be installed using pip and is compatible with CUDA 10 (and cuDNN).

@ivan-marroquin In case you did not know, you can install any .whl file anywhere you want. You can use the .whl packages I created or the ones @arunmandal53 has done, which cover Python 3.6. Pretty nice selection :-). I might also not understand your issue, but you can do this:

You do not have to count on PyPI; --user would also work, as would installing in a virtual env.
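A wheel can only be installed into an interpreter whose version matches the Python tag in its filename (per the PEP 427 naming scheme), which is why the Python 3.6 builds in this thread will not install into a 3.5 or 3.7 interpreter. The sketch below parses that tag and builds, but does not run, a pip command for whichever interpreter you choose (for example the one inside a virtual env). The filename and helper names are hypothetical examples:

```python
import sys


def wheel_tags(filename):
    """Split a wheel filename into its PEP 427 parts."""
    if not filename.endswith(".whl"):
        raise ValueError("not a wheel: " + filename)
    parts = filename[: -len(".whl")].split("-")
    if len(parts) == 5:
        dist, version, py, abi, plat = parts
    elif len(parts) == 6:  # optional build tag present
        dist, version, _build, py, abi, plat = parts
    else:
        raise ValueError("unexpected wheel name: " + filename)
    return {"dist": dist, "version": version, "python": py, "abi": abi, "platform": plat}


def matches_interpreter(filename, version_info=sys.version_info):
    """True if the wheel's CPython tag (e.g. cp36) matches the interpreter."""
    want = "cp{}{}".format(version_info[0], version_info[1])
    # Python tags may be compressed with dots, e.g. "py2.py3".
    return want in wheel_tags(filename)["python"].split(".")


def pip_install_command(wheel_path, user=False, python=sys.executable):
    """Build (but do not run) a pip install command for a given interpreter."""
    cmd = [python, "-m", "pip", "install"]
    if user:
        cmd.append("--user")
    cmd.append(wheel_path)
    return cmd


if __name__ == "__main__":
    name = "tensorflow_gpu-1.12.0-cp36-cp36m-linux_x86_64.whl"  # hypothetical
    print(wheel_tags(name)["python"], matches_interpreter(name))
    print(" ".join(pip_install_command(name, user=True)))
```

Passing the command to `subprocess.run(cmd, check=True)` would perform the install; pointing `python` at a virtual env's interpreter installs into that env instead of the system Python.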

@tfboyd Thanks for the clarification, and thanks to all of you for such impressive help! I will give it a try as soon as possible.

@everyone Thanks for spilling all the bits and bytes. I have built TF with all my requirements and have been successfully running the tests for 5 days now :)

Here is my new tutorial on building TensorFlow 1.12 + CUDA 10.0 + cuDNN 7.3.1 + Bazel 0.17.2 on Windows: https://www.python36.com/how-to-install-tensorflow-gpu-with-cuda-10-0-for-python-on-windows/

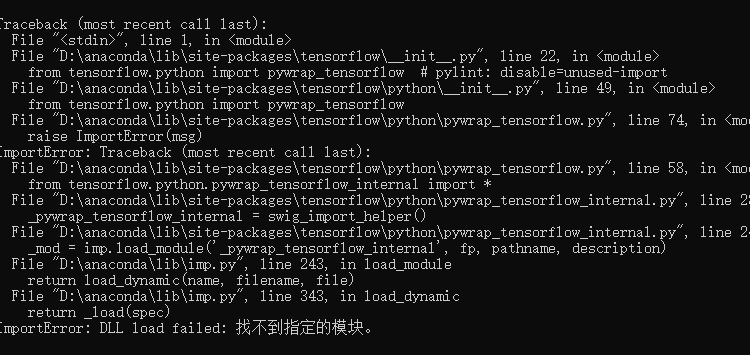

@arunmandal53 When I install CUDA 10 and tensorflow-gpu 1.11.0 with an RTX 2080, I get the error "ImportError: DLL load failed:"
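"DLL load failed" means a library the TensorFlow extension depends on could not be found. The official TF 1.11 and 1.12 GPU wheels were built against CUDA 9.0 and cuDNN 7, so a machine with only CUDA 10 installed likely lacks the CUDA 9.0 runtime they expect. This sketch probes which runtime libraries the loader can find; the library names listed are assumptions based on NVIDIA's usual naming and should be adjusted to your versions:

```python
import ctypes
import sys

# Assumed runtime library names: CUDA 9.0 runtime, CUDA 10.0 runtime, cuDNN 7.
CANDIDATES = {
    "win32": ["cudart64_90.dll", "cudart64_100.dll", "cudnn64_7.dll"],
    "linux": ["libcudart.so.9.0", "libcudart.so.10.0", "libcudnn.so.7"],
}


def probe(names):
    """Try to load each shared library; report which ones the loader finds."""
    found = {}
    for name in names:
        try:
            ctypes.CDLL(name)
            found[name] = True
        except OSError:  # missing library (FileNotFoundError is a subclass)
            found[name] = False
    return found


if __name__ == "__main__":
    # Note: on Windows with Python 3.8+, PATH is not searched by default,
    # so a library on PATH may be reported missing here.
    platform = "win32" if sys.platform == "win32" else "linux"
    for name, ok in probe(CANDIDATES[platform]).items():
        print("{:20s} {}".format(name, "found" if ok else "missing"))
```

If only the CUDA 10 runtime shows up, a wheel built against CUDA 10 (such as the ones linked in this thread) is the match.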

You will find .whl links above.

Detailed tutorial just in case :) https://medium.com/@saitejadommeti/building-tensorflow-gpu-from-source-for-rtx-2080-96fed102fcca

Ubuntu 18.04 - CUDA 10.0 - libcudnn 7.3.1 + Python 3.6. I'm trying Bazel now, but it's a pain in the ass... just to have the latest version of CUDA. :(

EDIT: There was a problem with Bazel, and to build TF I needed to add the --batch option.
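One easy mistake with --batch is where it goes: in Bazel of that era it is a startup option, so it must come before the command (`build`), not after it. The sketch below assembles the usual TF 1.x pip-package build command with that ordering; the helper name is hypothetical, and the target and `--config` values are the standard ones from TensorFlow's build-from-source instructions:

```python
PIP_TARGET = "//tensorflow/tools/pip_package:build_pip_package"


def bazel_command(batch=False, configs=("opt", "cuda")):
    """Assemble (but do not run) a Bazel build command for the TF pip package."""
    cmd = ["bazel"]
    if batch:
        # Startup options such as --batch must precede the command itself.
        cmd.append("--batch")
    cmd.append("build")
    cmd.extend("--config=" + c for c in configs)
    cmd.append(PIP_TARGET)
    return cmd


if __name__ == "__main__":
    print(" ".join(bazel_command(batch=True)))
```

Placed after `build`, the same flag would be parsed as a build option and rejected.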

Here is a Windows build of TensorFlow 1.12.0, CUDA 10, cuDNN 7.3.1, RTX 2080 Ti (compute capability 7.5).

Are nightlies CUDA 10 at the moment? Edit: nope.

Yes. If I remember correctly, I manually installed CUDA 10 with the 18.04 run file. The deb may not work?

Hi @MingyaoLiu , |

What compute level? |

Can you please share how you made the file? When I tried, I did not succeed :(

When I try to build TF on an AWS P3 Tesla V100 instance, it runs to 4000/6000 and then fails. What can I do to fix it?

.\tensorflow/core/kernels/mirror_pad_op_cpu_impl.h(31): note: see reference to class template instantiation 'tensorflow:

Later it ends with...

c:\users\administrator_bazel_administrator\xv6zejqw\execroot\org_tensorflow\external\eigen_archive\unsupported\eigen\sr
c:\users\administrator_bazel_administrator\xv6zejqw\execroot\org_tensorflow\external\eigen_archive\eigen\src/Core/Array
external/com_google_absl\absl/strings/string_view.h(496): warning: expression has no effect
external/protobuf_archive/src\google/protobuf/arena_impl.h(55): warning: integer conversion resulted in a change of sign
external/protobuf_archive/src\google/protobuf/arena_impl.h(309): warning: integer conversion resulted in a change of sig
external/protobuf_archive/src\google/protobuf/arena_impl.h(310): warning: integer conversion resulted in a change of sig
external/protobuf_archive/src\google/protobuf/map.h(1025): warning: invalid friend declaration
host_defines.h is an internal header file and must not be used directly. This file will be removed in a future CUDA rel

Hi Toby, how's your testing going? |

Should be fixed (partially at least) by 29b8d49.

Nightly builds are now CUDA 10. I tested via the Docker images from Docker Hub. I did not test Windows, as that is something I have yet to use for ML (sorry). This happened on Friday, so there may still be some issues as we roll it out. Let me know if you run into any problems. We also moved to building NCCL from source, so you no longer need to install NCCL to use it. More info in the next few weeks. I do not know if we will do an official RC before the year ends, but 1.13 is likely to go final mid/end of January, and it 100% has CUDA 10 and cuDNN 7.4 (you could always update this by just adding a new binary).

Thanks a lot @tfboyd

Well, if this route is good, how do I incorporate it into my system?

Me too. |

Thank you guys, it works for me as well with a 1080 Ti.

I installed the nightly Docker image, which works in general, but I get an error message when trying to fit a keras.layers.Conv1D layer on my GPU (RTX 2070).

Is cuDNN installed in the TensorFlow nightly containers? Or is this the wrong question?

My GPU: RTX 2070

I tried some wheels in #22706 (comment). I also built a wheel based on the NVIDIA TensorFlow Docker image 18.12 (py27), with the same error. Has anyone run into this error and solved it?

Does it include NCCL 2.3.7? I have installed NCCL, but TF cannot find it.

Perhaps you haven't run calibration, which is required for INT8 unless you manually insert dynamic ranges (see https://docs.nvidia.com/deeplearning/dgx/integrate-tf-trt/index.html#tutorial-tftrt-int8). Also, I think you are using an old TF (perhaps 1.12?) because we have renamed
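Why INT8 needs either calibration or hand-set dynamic ranges becomes clearer from the arithmetic: each tensor's range [-r, r] is mapped symmetrically onto the int8 codes [-127, 127], so nothing can be quantized until r is known. The pure-Python sketch below uses the simplest possible calibrator, the maximum absolute value seen over some sample batches; TensorRT's own calibrators use more elaborate methods (such as entropy-based ones), and none of these helper names are TF-TRT APIs:

```python
def calibrate_max(batches):
    """Max-abs calibration: the dynamic range r is the largest |activation| seen."""
    return max(abs(v) for batch in batches for v in batch)


def quantize(x, dynamic_range):
    """Map x in [-r, r] to an int8 code in [-127, 127]; values outside saturate."""
    scale = dynamic_range / 127.0
    q = round(x / scale)
    return max(-127, min(127, q))


def dequantize(q, dynamic_range):
    """Recover an approximate float from an int8 code."""
    return q * dynamic_range / 127.0


if __name__ == "__main__":
    # Hypothetical activations from two calibration batches.
    r = calibrate_max([[0.5, -2.0, 0.1], [1.0, 1.5]])
    for x in [0.0, 1.0, -2.0, 3.0]:
        q = quantize(x, r)
        print(x, "->", q, "->", round(dequantize(q, r), 4))
```

A range that is too small makes large activations saturate (3.0 above clips to 127), while one that is too large wastes int8 resolution, which is why calibration on representative data matters.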


@pooyadavoodi Thanks for responding!!

Are there any updates on the 1.13 release date?

Hey,

Hi all, I've managed to get this working and haven't experienced any issues for a while. TL;DR: Install CUDA 10 and build TensorFlow locally. Once this is running, you need to tell TF to use float16 and adjust the epsilon, or you will get NaN during training.
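Why the epsilon matters in float16 can be shown without a GPU. This sketch emulates half precision with the standard library's `struct` "e" format and evaluates an Adam-style ratio grad / (sqrt(v) + eps). Once the second-moment estimate v underflows to zero in fp16, the default Keras epsilon of 1e-7 leaves a denominator so small that the quotient exceeds fp16's maximum (65504) and becomes inf, and inf readily turns into NaN downstream (for example inf - inf). The helper names and input values are illustrative assumptions; in tf.keras the relevant knobs are `tf.keras.backend.set_floatx` and `tf.keras.backend.set_epsilon`:

```python
import math
import struct


def to_f16(x):
    """Round a Python float to IEEE half precision; overflow becomes +/-inf."""
    try:
        return struct.unpack("<e", struct.pack("<e", x))[0]
    except (OverflowError, struct.error):
        return math.copysign(math.inf, x)


def fp16_step(grad, second_moment, eps):
    """Adam-style ratio grad / (sqrt(v) + eps), with every value rounded to fp16."""
    denom = to_f16(to_f16(math.sqrt(to_f16(second_moment))) + to_f16(eps))
    return to_f16(to_f16(grad) / denom)


if __name__ == "__main__":
    # A tiny second moment underflows to 0 in fp16, leaving eps as the denominator.
    print(to_f16(1e-9))
    print(fp16_step(1.0, 1e-9, 1e-7))  # overflows to inf
    print(fp16_step(1.0, 1e-9, 1e-4))  # stays finite, roughly 1e4
```

A larger epsilon such as 1e-4 is also a normal (not subnormal) fp16 number, so it is represented with full precision.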

I've managed to build it on Ubuntu 18.04 with Python 3.6, CUDA 10.0, and cuDNN 7.4.2. GPU model: RTX 2080 Ti. Here is the .whl:

Hi @tfboyd, I have the same question as @tydlwav: any updates on the 1.13 release? I am coding in R using Keras and using an RTX 2080. Really looking forward to this release.

rc0 is already out:

All our nightlies have also had CUDA 10 support for a while now.

*** WARNING *** You are using ptxas 10.1.243, which is older than 11.1. ptxas before 11.1 is known to miscompile XLA code, leading to incorrect results or invalid-address errors.

I am facing this issue with TensorFlow 2.7.0 on Ubuntu 20.04. Please let me know how you solved your issue.
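Unlike the 2018 warning at the top of this thread, this one is not a checker bug: 10.1.243 really is older than 11.1. A quick way to see which ptxas is being picked up is to run `ptxas --version` and parse the version it prints. In the sketch below, the sample output is an assumed example of that command's format, and the helper names are hypothetical:

```python
import re
import shutil
import subprocess

MIN_PTXAS = (11, 1)  # threshold quoted in the warning above

# Assumed sample of `ptxas --version` output, for illustration only.
SAMPLE = """ptxas: NVIDIA (R) Ptx optimizing assembler
Copyright (c) 2005-2019 NVIDIA Corporation
Cuda compilation tools, release 10.1, V10.1.243
"""


def parse_ptxas_version(output):
    """Extract (major, minor, patch) from text like 'V10.1.243'."""
    match = re.search(r"V(\d+)\.(\d+)\.(\d+)", output)
    if match is None:
        raise ValueError("no version found in ptxas output")
    return tuple(int(g) for g in match.groups())


def installed_ptxas_version():
    """Run the ptxas found on PATH, or return None if there is none."""
    exe = shutil.which("ptxas")
    if exe is None:
        return None
    out = subprocess.run([exe, "--version"], capture_output=True, text=True).stdout
    return parse_ptxas_version(out)


if __name__ == "__main__":
    version = parse_ptxas_version(SAMPLE)
    print(version, "older than", MIN_PTXAS, ":", version < MIN_PTXAS)
```

Tuple comparison handles the differing lengths: (10, 1, 243) < (11, 1) because 10 < 11 is decided at the first element.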

Edited: 17-DEC-2018 Nightly builds are CUDA 10

Edited: 27-NOV-2018 Added 1.12 FINAL builds.

Edited: 29-OCT-2018 Added 1.12 RC2 builds.

Edited: 17-OCT-2018 Added 1.12 RC1 builds.

Nightly Builds are now CUDA 10 as of 16-DEC-2018

Install instructions for CUDA 10 for a fresh system, which should also work on an existing system if using apt-get.

TensorFlow will be upgrading to CUDA 10 as soon as possible. As of TF 1.11, building TensorFlow from source works just fine with CUDA 10, and possibly even before that. Nothing special is needed other than the CUDA, cuDNN, NCCL (optional), and TensorRT (optional) libraries. If people have some builds, feel free to link them here, as well as any issues (if you download them, keep in mind that you should decide what risk you are willing to take based on the source). Also, NCCL is now open source again and will soon be back to being automatically downloaded by bazel and included in the binary.

CUDA 10 would likely go into TF 1.13, which is not scheduled. I will update this post as I have more info to share. I hope to flip nightly builds to CUDA 10 in November, but the TF 1.13 release will likely push to early January.

Some really cool people below and I made some binaries (even Windows, see comments below) to help people out, along with my really bad "instructions". I suspect the instructions linked in the comments below are better.
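When building from source, the CUDA, cuDNN, NCCL, and TensorRT choices are answered in `./configure`, which in TF 1.x can also read them from environment variables. The sketch below builds such an environment for a partially non-interactive run; the variable names are the ones TF 1.x's `configure.py` reads as far as I know (treat them as assumptions and check `configure.py` in your checkout), the values mirror versions mentioned in this thread, and the helper name is hypothetical. Other prompts may still appear unless you set their variables too:

```python
import os


def configure_env(cuda="10.0", cudnn="7", compute="6.0,7.0",
                  tensorrt=False, nccl=None, base=None):
    """Return an environment dict for running TF 1.x ./configure.

    Variable names are assumed from TF 1.x configure.py; verify them locally.
    """
    env = dict(os.environ if base is None else base)
    env.update({
        "TF_NEED_CUDA": "1",
        "TF_CUDA_VERSION": cuda,
        "TF_CUDNN_VERSION": cudnn,
        "TF_CUDA_COMPUTE_CAPABILITIES": compute,
        "TF_NEED_TENSORRT": "1" if tensorrt else "0",  # TensorRT is optional
    })
    if nccl is not None:  # NCCL is optional as well
        env["TF_NCCL_VERSION"] = nccl
    return env


if __name__ == "__main__":
    env = configure_env(base={})
    for key in sorted(env):
        print("{}={}".format(key, env[key]))
```

The resulting dict would be passed as `subprocess.run(["./configure"], env=env, check=True)` from the root of a TensorFlow checkout.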

Libraries used (rough list, similar to what I listed above)

TF 1.12.0 FINAL

Python 2.7 (Ubuntu 16.04)

Python 3.5 (Ubuntu 16.04)

Dockerfiles

These are partial files, and the apt-get commands should work on non-Docker systems as well, assuming you have the NVIDIA apt-get repositories, which should be the same as listed on tf.org.
