Adds a wrapper for Weight Normalization, as requested in #14070 and #10125.

The wrapper contains optional data-dependent initialization for eager execution, and works with both `keras.layers` and `tf.layers`.
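For reviewers less familiar with the technique, here is a minimal NumPy sketch of what weight normalization computes. It is not the wrapper's TensorFlow code. The kernel is reparameterized as `w = g * v / ||v||`, with one norm per output unit. The optional data-dependent initialization runs one batch through the normalized kernel and picks `g` and the bias `b` so that each unit's pre-activation has zero mean and unit variance on that batch. The helper names `weight_norm_kernel` and `data_dependent_init` are hypothetical. The sketch also assumes a Dense-style kernel layout of `(in_dim, out_dim)`.

```python
import numpy as np

def weight_norm_kernel(v, g):
    """Hypothetical helper: w = g * v / ||v||, one L2 norm per output column."""
    # v: (in_dim, out_dim) direction parameters; g: (out_dim,) scale parameters.
    return v * (g / np.linalg.norm(v, axis=0))

def data_dependent_init(v, x, eps=1e-8):
    """Hypothetical helper: choose g and b from one batch x of shape (batch, in_dim)."""
    # Pre-activations using unit-norm directions (i.e. g = 1, b = 0).
    t = x @ (v / np.linalg.norm(v, axis=0))
    mu = t.mean(axis=0)
    sigma = t.std(axis=0)
    # Rescale and shift so each unit's output is standardized on this batch.
    g = 1.0 / (sigma + eps)
    b = -mu / (sigma + eps)
    return g, b

# Usage: initialize from a random batch, then apply the normalized layer.
rng = np.random.default_rng(0)
x = rng.normal(size=(256, 8))
v = rng.normal(size=(8, 4))
g, b = data_dependent_init(v, x)
w = weight_norm_kernel(v, g)
y = x @ w + b  # per-unit mean ~0 and std ~1 on this batch
```

Decoupling the norm `g` from the direction `v` is what lets the initialization set each unit's output scale directly, without touching the direction of its weights.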

I struggled to figure out where to place this wrapper, because no other layers in contrib appear to subclass anything from `tf.keras`. Going forward, though, I believe this is the direction TF is headed. Please advise if it should go somewhere else (maybe a wrappers module in contrib?).

Colab example:

https://colab.research.google.com/drive/1nBQSAA78oUBmi9fhnHJ_zWhHq2NXjwIc#scrollTo=au25bSP75hdr

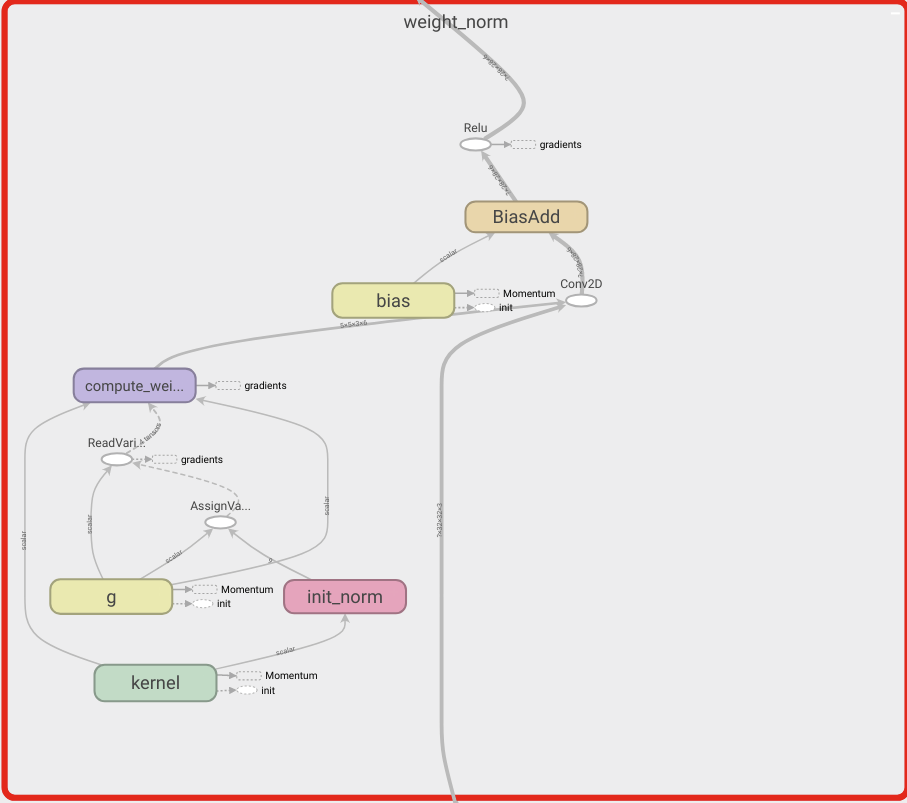

Wrapped graph: