
Different pre-processing times with PyTorch Hub and detect.py #11370

Comments


@glenn-jocher Could you perhaps briefly answer the questions above? I would be very grateful.


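@DP1701 Certainly! The pre-processing time reported with PyTorch Hub is usually higher because the two paths time different amounts of work. With PyTorch Hub, the `AutoShape` wrapper does the whole pre-processing step inside its pre-process timer: it loads the image if you pass a path or URL, letterboxes (resizes and pads) it on the CPU, and then converts it to a normalized tensor on the device. In detect.py, the image is loaded and letterboxed by the data loader before the timer starts, so the reported pre-process time covers only the tensor conversion, device transfer and normalization. Regarding the second question: the input height and width must be multiples of the model's maximum stride (32 for your model, which is why 720 became 736 = 23 × 32). YOLOv5's letterboxing fills the extra pixels with a constant gray value (114, 114, 114) rather than zeros, and splits the padding evenly between both sides, so here 8 px are added at the top and 8 px at the bottom.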
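A minimal NumPy-only sketch of why the two timings differ, assuming that detect.py letterboxes images in its data loader before starting the pre-process timer while PyTorch Hub letterboxes inside it. Both paths produce the same normalized input array; only the Hub-style path includes the padding step in the measured time. The helpers `pad_to_736` and `to_input` are hypothetical, and the PyTorch tensor conversion and device transfer are left out so the sketch runs without a GPU.

```python
import time
import numpy as np

def pad_to_736(img):
    """Hypothetical helper: pad a 720x1280 HWC image to 736x1280 with gray (114),
    8 px on top and 8 px on the bottom."""
    return np.pad(img, ((8, 8), (0, 0), (0, 0)), mode="constant", constant_values=114)

def to_input(padded_hwc):
    """Hypothetical helper: HWC uint8 -> normalized 1xCxHxW float32 array."""
    chw = np.ascontiguousarray(padded_hwc.transpose(2, 0, 1))
    return chw[None].astype(np.float32) / 255.0

img = np.random.randint(0, 256, (720, 1280, 3), dtype=np.uint8)

# detect.py-style: padding is done beforehand (in the data loader), outside the timer
padded = pad_to_736(img)
t0 = time.perf_counter()
x_detect = to_input(padded)
t_detect = time.perf_counter() - t0

# Hub-style: padding is part of the timed pre-processing block
t0 = time.perf_counter()
x_hub = to_input(pad_to_736(img))
t_hub = time.perf_counter() - t0

print(f"detect.py-style pre-process: {t_detect * 1e3:.2f} ms")
print(f"Hub-style pre-process:       {t_hub * 1e3:.2f} ms")
```

The absolute numbers depend on the machine; the point is only that the two timers wrap different spans of the same pipeline.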


@glenn-jocher Thank you for the fast answer! If I understand correctly, PyTorch Hub is not the best choice for running real-time inference on a system. I am looking for a simple way to run inference efficiently. The hardware for the system is not fixed yet, but I wanted to try it out somehow.


@DP1701 PyTorch Hub is still a good option for running inference and can achieve real-time performance, depending on the hardware. However, if you're looking for a more optimized solution, I recommend running the model with the PyTorch CUDA backend on an NVIDIA GPU, or optimizing the model architecture for your specific use case (e.g. reducing the number of layers or channels). You could also try running the model on dedicated inference hardware such as an NVIDIA Jetson device or a Google Coral board, both of which include specialized hardware for accelerating inference.



Question

Hello,

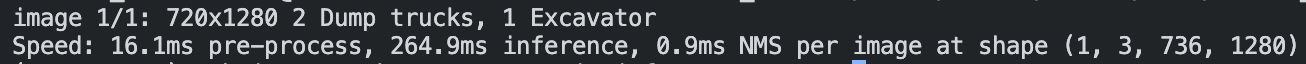

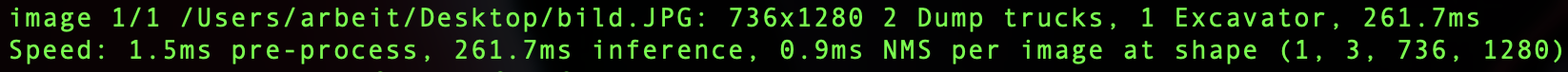

why is the pre-procssing time with PyTorch Hub higher than with detect.py?

I use a custom yolov5 model and an image of size 1280x720px

PyTorch Hub:

detect.py

Another question: when the inference size is set to 1280, the 720 px side becomes 736. Which technique is used to fill the missing pixels? Zero padding?
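A minimal sketch of the arithmetic behind 720 becoming 736, assuming YOLOv5-style "minimum rectangle" letterboxing with a stride of 32 and a gray fill value of 114 (my understanding of the `letterbox()` defaults, not something confirmed in this thread). The function names `letterbox_geometry` and `pad_constant` are hypothetical; NumPy's `np.pad` stands in for the padding step.

```python
import math
import numpy as np

def letterbox_geometry(h, w, img_size=1280, stride=32):
    """Hypothetical helper: scale the long side to img_size, then pad each side
    up to the next multiple of stride (minimum-rectangle letterboxing)."""
    r = min(img_size / h, img_size / w)                  # resize ratio
    new_h, new_w = round(h * r), round(w * r)            # resized, unpadded shape
    pad_h = math.ceil(new_h / stride) * stride - new_h   # total vertical padding
    pad_w = math.ceil(new_w / stride) * stride - new_w   # total horizontal padding
    top, left = pad_h // 2, pad_w // 2                   # split padding over both sides
    return (new_h, new_w), (top, pad_h - top), (left, pad_w - left)

def pad_constant(img, vert, horiz, value=114):
    """Pad an HWC image with a constant gray value (114 is an assumption)."""
    return np.pad(img, (vert, horiz, (0, 0)), mode="constant", constant_values=value)

img = np.zeros((720, 1280, 3), dtype=np.uint8)   # stand-in for a 1280x720 frame
(new_h, new_w), vert, horiz = letterbox_geometry(720, 1280)
# r == 1.0 here, so the resize step (e.g. cv2.resize) is skipped in this sketch
out = pad_constant(img, vert, horiz)
print(vert, horiz, out.shape)   # (8, 8) (0, 0) (736, 1280, 3)
```

Splitting the padding between top and bottom keeps the image centered, so box coordinates can later be mapped back by subtracting the same offset.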

