#INSTALL

First change the variables in example.venv to the correct paths. The variables are explained below under "ENV".

windows

python -m venv venv_bark

venv_bark/Scripts/activate.bat

git clone https://github.com/Anonym0us33/bark_storymaker

cd bark_storymaker

pip install -r freeze.txt

linux

Replace venv_bark/Scripts/activate.bat with source venv_bark/bin/activate. The other steps are the same.

NOTE: freeze.txt is simply the output of one working environment. Further steps may be required to install the pip requirements. Googling the "Module not found" error usually gives the answer in the first result. I will make a setup script if there is demand for it.

There is also a tutorial on a popular video website: /watch?v=w41-MUfxIWo

#ENV

NOTE: these haven't been checked. You may have to alter them to fit the variables you are using.

leave as "/" or "" if unsure

BARK_PATH = "path/to/folder"

path for results

OUTPUT_PATH = "/audio"

path to activate.bat

VENV_PATH = "/venv_bark/Scripts/activate.bat"

path to activate on linux. TODO: implement

VENV_PATH_LINUX = "/venv_bark/bin/activate"

how often files are saved when using chunks.

Different to chunk size, set at runtime.

CHUNK_SAVE_FREQUENCY = 10

how often files are saved when using sentences.

Sentence mode uses a token counter to cut audio at the end of a sentence. Not recommended on GPUs with <16GB VRAM. That means you need an RTX 3090, 4080 Ti or better to guarantee this will work.

SENTENCE_SAVE_FREQUENCY = 10
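One way to read the two save-frequency settings is "write a file after every N generated pieces". The sketch below assumes that interpretation; `batch_for_saving` is a hypothetical helper for illustration, not a function from this repo.

```python
from typing import Iterable, Iterator, List


def batch_for_saving(pieces: Iterable[str], save_frequency: int) -> Iterator[List[str]]:
    """Yield groups of `save_frequency` pieces; each group would become one saved file."""
    if save_frequency < 1:
        raise ValueError("save_frequency must be at least 1")
    batch: List[str] = []
    for piece in pieces:
        batch.append(piece)
        if len(batch) == save_frequency:
            yield batch
            batch = []
    if batch:  # flush whatever is left at the end of the text
        yield batch


sentences = [f"Sentence {i}." for i in range(1, 26)]
groups = list(batch_for_saving(sentences, save_frequency=10))
print([len(g) for g in groups])  # [10, 10, 5]
```

A lower value saves more often, so less work is lost if generation stops partway through.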

Not implemented. Extra value if you want to edit the code and test it.

TEST_SAVE_FREQUENCY = 1

Defaults to the first GPU. Not tested, but if you have two or more GPUs you can delete this from the code or set the value to 0, 1, 2, 3, etc. Older GPUs had cores counted individually, so this may be useful if you get this working with CUDA 11.4 or lower.

CUDA_VISIBLE_DEVICES = 0
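If you set this variable from Python rather than from the shell, it has to be in place before CUDA is initialised. A minimal sketch, assuming it runs at the very top of the script before torch or bark is imported:

```python
import os

# Must run before torch (or bark) initialises CUDA; setting it later has no effect.
# "0" exposes only the first GPU; "0,1" would expose the first two.
os.environ["CUDA_VISIBLE_DEVICES"] = "0"

visible = os.environ["CUDA_VISIBLE_DEVICES"].split(",")
print(visible)  # ['0']
```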

The full list of speakers can be displayed using commands from the original GitHub README below. This is my selection of the best ones. You can edit it to any valid value.

BEST_SPEAKERS = en_speaker_9,it_speaker_9,ja_speaker_0,en_speaker_6,de_speaker_3

The file to be read when selecting option 2.

TEXT_FILE = input.txt

Strings for testing and default string.

TEST_TEXT = "one"

TEST_TEXT2 = "1"

TEST_TEXT3 = "10 ten."

Test string from original repo. env var unused.

POTATOS = Картофель,potato,potato
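A minimal sketch of turning these settings into typed Python values, assuming they are exposed as environment variables (for example by loading the env file first). `load_settings` and its fallback values are illustrative and not taken from this repo's code.

```python
import os
from typing import Dict, List, Mapping, Union

Setting = Union[str, int, List[str]]


def load_settings(env: Mapping[str, str] = os.environ) -> Dict[str, Setting]:
    """Read the variables described above, falling back to the example values."""
    speakers = env.get("BEST_SPEAKERS", "en_speaker_9")
    return {
        "output_path": env.get("OUTPUT_PATH", "/audio"),
        "text_file": env.get("TEXT_FILE", "input.txt"),
        # Frequencies arrive as strings and must be converted to integers.
        "chunk_save_frequency": int(env.get("CHUNK_SAVE_FREQUENCY", "10")),
        "sentence_save_frequency": int(env.get("SENTENCE_SAVE_FREQUENCY", "10")),
        # BEST_SPEAKERS is a comma-separated list; drop stray spaces and empty items.
        "best_speakers": [s.strip() for s in speakers.split(",") if s.strip()],
    }


settings = load_settings({"BEST_SPEAKERS": "en_speaker_9, it_speaker_9", "CHUNK_SAVE_FREQUENCY": "5"})
print(settings["best_speakers"])         # ['en_speaker_9', 'it_speaker_9']
print(settings["chunk_save_frequency"])  # 5
```

Passing a plain dictionary instead of `os.environ` makes it easy to try different values without touching the real environment.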

#adapted from official github https://github.com/suno-ai/bark


Bark is a transformer-based text-to-audio model created by Suno. Bark can generate highly realistic, multilingual speech as well as other audio - including music, background noise and simple sound effects. The model can also produce nonverbal communications like laughing, sighing and crying. To support the research community, we are providing access to pretrained model checkpoints, which are ready for inference and available for commercial use.

Bark was developed for research purposes. It is not a conventional text-to-speech model but instead a fully generative text-to-audio model, which can deviate in unexpected ways from provided prompts. Suno does not take responsibility for any output generated. Use at your own risk, and please act responsibly.

2023.05.01

- ©️ Bark is now licensed under the MIT License, meaning it's now available for commercial use!
- ⚡ 2x speed-up on GPU. 10x speed-up on CPU. We also added an option for a smaller version of Bark, which offers additional speed-up with the trade-off of slightly lower quality.
- 📕 Long-form generation, voice consistency enhancements and other examples are now documented in a new notebooks section.
- 👥 We created a voice prompt library. We hope this resource helps you find useful prompts for your use cases! You can also join us on Discord, where the community actively shares useful prompts in the #audio-prompts channel.
- 💬 Growing community support and access to new features here:
- 💾 You can now use Bark with GPUs that have low VRAM (<4GB).

2023.04.20

- 🐶 Bark release!

from bark import SAMPLE_RATE, generate_audio, preload_models

from scipy.io.wavfile import write as write_wav

from IPython.display import Audio

# download and load all models

preload_models()

# generate audio from text

text_prompt = """

Hello, my name is Suno. And, uh — and I like pizza. [laughs]

But I also have other interests such as playing tic tac toe.

"""

audio_array = generate_audio(text_prompt)

# save audio to disk

write_wav("bark_generation.wav", SAMPLE_RATE, audio_array)

# play text in notebook

Audio(audio_array, rate=SAMPLE_RATE)

Bark supports various languages out-of-the-box and automatically determines language from input text. When prompted with code-switched text, Bark will attempt to employ the native accent for the respective languages. English quality is best for the time being, and we expect other languages to further improve with scaling.

text_prompt = """

추석은 내가 가장 좋아하는 명절이다. 나는 며칠 동안 휴식을 취하고 친구 및 가족과 시간을 보낼 수 있습니다.

"""

audio_array = generate_audio(text_prompt)

Note: since Bark recognizes languages automatically from input text, it is possible to use, for example, a German history prompt with English text. This usually leads to English audio with a German accent.

Bark can generate all types of audio, and, in principle, doesn't see a difference between speech and music. Sometimes Bark chooses to generate text as music, but you can help it out by adding music notes around your lyrics.

text_prompt = """

♪ In the jungle, the mighty jungle, the lion barks tonight ♪

"""

audio_array = generate_audio(text_prompt)
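If you generate many lyric prompts, a small helper can add the music notes for you. `as_lyrics` is a hypothetical convenience function, not part of Bark:

```python
def as_lyrics(text: str) -> str:
    """Wrap text in music notes so Bark is more likely to sing it."""
    return f"♪ {text.strip()} ♪"


text_prompt = as_lyrics("In the jungle, the mighty jungle, the lion barks tonight")
print(text_prompt)
```

The resulting string can be passed to generate_audio in the same way as the prompts above.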

Bark supports 100+ speaker presets across supported languages. You can browse the library of speaker presets here, or in the code. The community also often shares presets in Discord.

Bark tries to match the tone, pitch, emotion and prosody of a given preset, but does not currently support custom voice cloning. The model also attempts to preserve music, ambient noise, etc.

text_prompt = """

I have a silky smooth voice, and today I will tell you about

the exercise regimen of the common sloth.

"""

audio_array = generate_audio(text_prompt, history_prompt="v2/en_speaker_1")

By default, generate_audio works well with around 13 seconds of spoken text. For an example of how to do long-form generation, see this example notebook.
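The general idea behind long-form generation is to split the text into short pieces, generate each one separately, and join the audio. The sketch below covers only the splitting step, using the standard library. The regex-based sentence split and the `max_words` budget of 30 are assumptions chosen for illustration, not values from Bark or the notebook.

```python
import re
from typing import List


def split_for_bark(text: str, max_words: int = 30) -> List[str]:
    """Group whole sentences into chunks of at most `max_words` words.

    A single sentence longer than the budget is kept whole rather than cut mid-sentence.
    """
    # Naive split: break after ., ! or ? when followed by whitespace.
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    chunks: List[str] = []
    current: List[str] = []
    count = 0
    for sentence in sentences:
        words = len(sentence.split())
        if current and count + words > max_words:
            chunks.append(" ".join(current))
            current, count = [], 0
        current.append(sentence)
        count += words
    if current:
        chunks.append(" ".join(current))
    return chunks


chunks = split_for_bark("One two three. Four five! Six seven eight nine? Ten.", max_words=5)
print(chunks)
# Each chunk would then go through generate_audio(chunk, history_prompt=...) with the
# same preset, and the resulting arrays could be joined with numpy.concatenate.
```

Using the same history_prompt for every chunk helps keep the voice consistent across the joined audio.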


pip install git+https://github.com/suno-ai/bark.git

or

git clone https://github.com/suno-ai/bark

cd bark && pip install .

Note: Do NOT use 'pip install bark'. It installs a different package, which is not managed by Suno.

Bark has been tested and works on both CPU and GPU (pytorch 2.0+, CUDA 11.7 and CUDA 12.0).

On enterprise GPUs and PyTorch nightly, Bark can generate audio in roughly real-time. On older GPUs, default colab, or CPU, inference time might be significantly slower. For older GPUs or CPU you might want to consider using smaller models. Details can be found in our tutorial sections here.

The full version of Bark requires around 12GB of VRAM to hold everything on GPU at the same time.

To use a smaller version of the models, which should fit into 8GB VRAM, set the environment flag SUNO_USE_SMALL_MODELS=True.

If you don't have hardware available or if you want to play with bigger versions of our models, you can also sign up for early access to our model playground here.

Bark is a fully generative text-to-audio model developed for research and demo purposes. It follows a GPT-style architecture similar to AudioLM and Vall-E and a quantized audio representation from EnCodec. It is not a conventional TTS model, but instead a fully generative text-to-audio model capable of deviating in unexpected ways from any given script. Unlike previous approaches, the input text prompt is converted directly to audio without the intermediate use of phonemes. It can therefore generalize to arbitrary instructions beyond speech such as music lyrics, sound effects or other non-speech sounds.

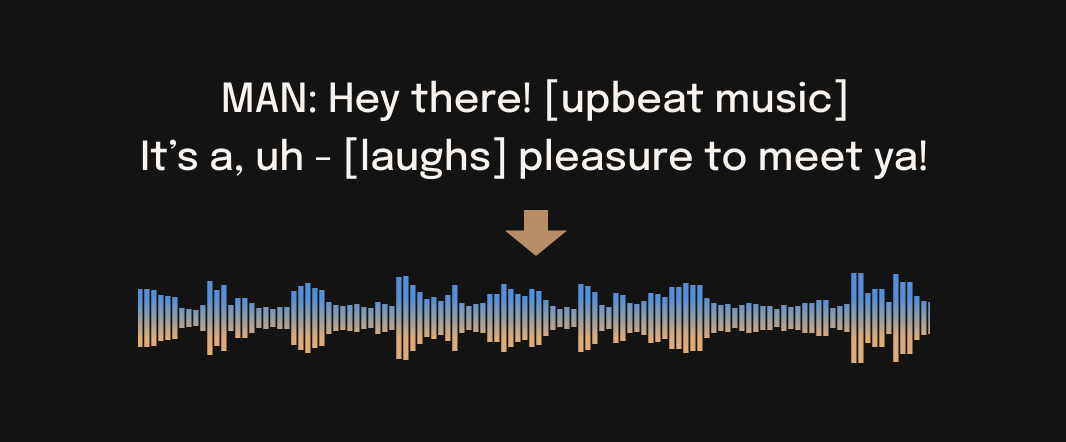

Below is a list of some known non-speech sounds, but we are finding more every day. Please let us know if you find patterns that work particularly well on Discord!

- [laughter]
- [laughs]
- [sighs]
- [music]
- [gasps]
- [clears throat]
- — or ... for hesitations
- ♪ for song lyrics
- CAPITALIZATION for emphasis of a word
- [MAN] and [WOMAN] to bias Bark toward male and female speakers, respectively

| Language | Status |
|---|---|
| English (en) | ✅ |
| German (de) | ✅ |
| Spanish (es) | ✅ |
| French (fr) | ✅ |
| Hindi (hi) | ✅ |
| Italian (it) | ✅ |
| Japanese (ja) | ✅ |
| Korean (ko) | ✅ |
| Polish (pl) | ✅ |
| Portuguese (pt) | ✅ |
| Russian (ru) | ✅ |
| Turkish (tr) | ✅ |
| Chinese, simplified (zh) | ✅ |

Requests for future language support here or in the #forums channel on Discord.

- nanoGPT for a dead-simple and blazing fast implementation of GPT-style models

- EnCodec for a state-of-the-art implementation of a fantastic audio codec

- AudioLM for related training and inference code

- Vall-E, AudioLM and many other ground-breaking papers that enabled the development of Bark

Bark is licensed under the MIT License.

Please contact us at bark@suno.ai to request access to a larger version of the model.

We’re developing a playground for our models, including Bark.

If you are interested, you can sign up for early access here.

- Bark uses Hugging Face to download and store models. You can find more info here.

- Bark is a GPT-style model. As such, it may take some creative liberties in its generations, resulting in higher-variance model outputs than traditional text-to-speech approaches.

- Bark supports 100+ speaker presets across supported languages. You can browse the library of speaker presets here. The community also shares presets in Discord. Bark also supports generating unique random voices that fit the input text. Bark does not currently support custom voice cloning.

- The full version of Bark requires around 12GB of memory to hold everything on GPU at the same time. However, even smaller cards down to ~2GB work with some additional settings. Simply add the following code snippet before your generation:

import os

# environment variable values must be strings; assigning a bool raises TypeError

os.environ["SUNO_OFFLOAD_CPU"] = "True"

os.environ["SUNO_USE_SMALL_MODELS"] = "True"