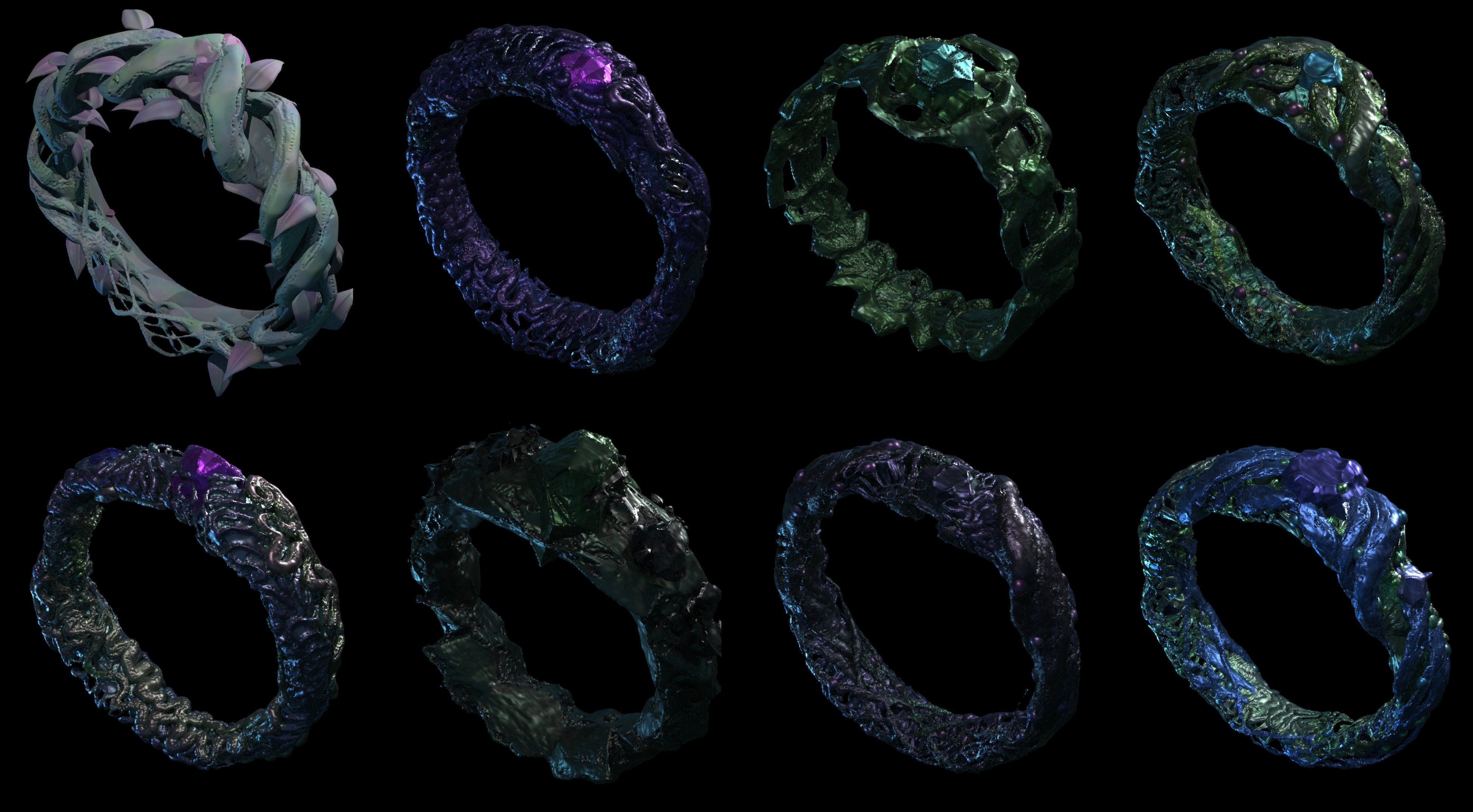

create item images through a circular workflow of refinement, using procgen augmented by neural networks.


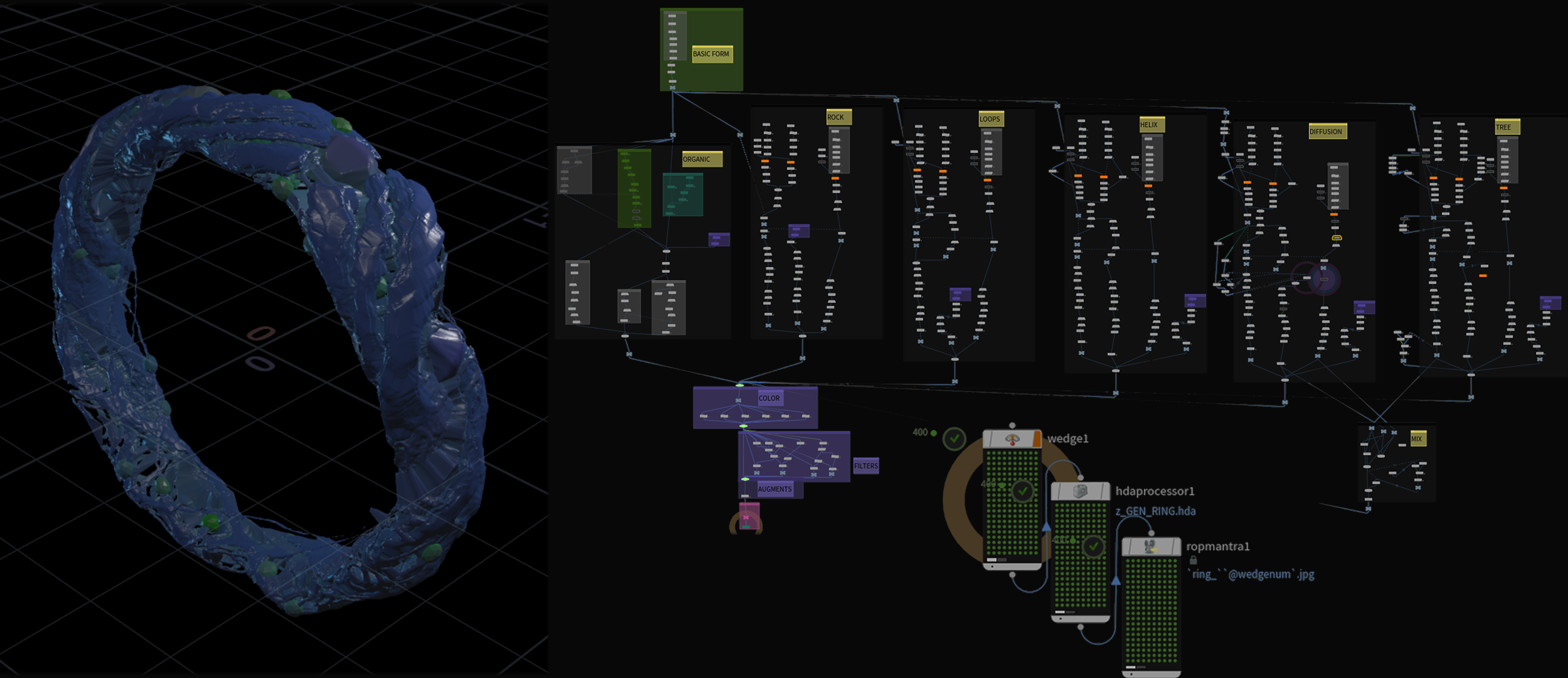

- procedurally generated 3d renders using SideFX's Houdini tools and PDG TOPs

- mutated by text-to-image guided neural networks (VQGAN+CLIP, STABLEDIFFUSION)

- a generative adversarial network (STYLEGAN2ADA) trained on the cultivated dataset generates new variants

| | | |
|---|---|---|
| ring | potion | helm |

- a synthetic image dataset of fantasy items

- a collection of favored generated items, free to all


- stylegan2 network checkpoints trained on synthetic 1024x1024 images of selected generated items

- create new seeds using these notebooks or spaces:

| item | generate | FID | dataset size | date | color distribution |
|---|---|---|---|---|---|
| ring |  | 14.9 | 3953 | 20230427 |  |
| potion |  | 9.24 | 4413 | 20230218 |  |
| helm | space | 14.7 | 2818 | 20221013 |  |
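The generate notebooks and `gen_item_stylegan2ada_generate.py` are not reproduced here. As a rough sketch, assuming they follow the conventions of the upstream stylegan2-ada-pytorch `generate.py`, a seed is turned into a latent vector `z` with NumPy's `RandomState`, and the generator output (NCHW, roughly in [-1, 1]) is mapped to 8-bit RGB. The helper names `parse_seeds`, `seed_to_z` and `to_uint8_rgb` are hypothetical; loading the checkpoint itself needs the cloned repo and is only described in a comment.

```python
import re
from typing import List

import numpy as np


def parse_seeds(spec: str) -> List[int]:
    """Parse '0-3' (inclusive range) or '1,5,9' into a list of seeds.
    Hypothetical helper, modeled on the seed syntax of stylegan2-ada-pytorch's generate.py."""
    m = re.fullmatch(r"(\d+)-(\d+)", spec)
    if m:
        return list(range(int(m.group(1)), int(m.group(2)) + 1))
    return [int(x) for x in spec.split(",")]


def seed_to_z(seed: int, z_dim: int = 512) -> np.ndarray:
    """Deterministic latent vector of shape (1, z_dim) for a seed.
    The same seed always yields the same z, so a favored item can be regenerated."""
    return np.random.RandomState(seed).randn(1, z_dim)


def to_uint8_rgb(img: np.ndarray) -> np.ndarray:
    """Convert generator output (N, C, H, W) in about [-1, 1] to (N, H, W, C) uint8."""
    img = np.transpose(img, (0, 2, 3, 1)) * 127.5 + 128
    return np.clip(img, 0, 255).astype(np.uint8)


# With the upstream repo on sys.path, generation would look roughly like:
#   with dnnlib.util.open_url(network_pkl) as f:
#       G = legacy.load_network_pkl(f)["G_ema"]
#   z = torch.from_numpy(seed_to_z(seed, G.z_dim))
#   img = G(z, torch.zeros([1, G.c_dim]), truncation_psi=1.0, noise_mode="const")
#   rgb = to_uint8_rgb(img.cpu().numpy())
if __name__ == "__main__":
    for seed in parse_seeds("0-2"):
        z = seed_to_z(seed)
        print(seed, z.shape)
```

Fixing `z` per seed is what makes seeds shareable: a seed number picked from a generated batch is enough to reproduce that exact item from the same checkpoint.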

- houdini hda tools generate 3d randomized items as a base

- the included hip files are set up with PDG TOPs, rendering randomized wedges to generate the dataset

- utilizes SideFXLabs hda tools and ZENV hda tools
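The wedging described above happens inside Houdini's PDG, where each TOPs work item carries its own attribute values. As a plain-Python illustration only (not the hou/pdg API and not the included hip files), the sketch below samples a reproducible set of randomized parameters per wedge. The ring parameter names, their ranges, and the output naming scheme are all hypothetical.

```python
import random
from dataclasses import dataclass
from typing import Dict, List, Tuple

# Hypothetical ring parameters and ranges; the actual HDA parameters are not listed here.
RING_RANGES: Dict[str, Tuple[float, float]] = {
    "band_radius": (0.8, 1.2),
    "band_thickness": (0.05, 0.2),
    "gem_scale": (0.0, 0.4),
    "metal_hue": (0.0, 1.0),
}


@dataclass
class Wedge:
    index: int
    params: Dict[str, float]
    output: str  # render path for this variant


def make_wedges(ranges: Dict[str, Tuple[float, float]], count: int,
                base_seed: int = 0, prefix: str = "ring") -> List[Wedge]:
    """Create `count` wedge variants with uniformly sampled parameter values.

    Each wedge gets its own RNG seeded from (base_seed, index), so wedge i is
    identical no matter how many wedges are generated, and a favored render
    can be reproduced later from its index alone.
    """
    wedges = []
    for i in range(count):
        rng = random.Random(base_seed * 1_000_003 + i)
        params = {name: rng.uniform(lo, hi) for name, (lo, hi) in sorted(ranges.items())}
        wedges.append(Wedge(i, params, f"{prefix}_{base_seed:03d}_{i:04d}.png"))
    return wedges


if __name__ == "__main__":
    for w in make_wedges(RING_RANGES, 3, base_seed=1):
        print(w.output, {k: round(v, 3) for k, v in w.params.items()})
```

Seeding per wedge rather than once per batch is a deliberate choice here: growing the batch later does not reshuffle the variants that were already rendered and selected.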

- with an initial set of procgen renders selected, expand and alter the dataset using multiple techniques:

  - IMAGE_COLLAGE.py - given a folder of images, randomly composites them with randomized color/brightness

  - z_MLOP_COLOR_GRADIENT_VARIANT.hda - given a folder of images, generates randomized color gradient variations

  - IMAGE_TEXTURIZER.py - overlays a texture dataset, using subcomponent datasets, for example fluids for potions and metals for shields

  - VQGAN+CLIP and STABLEDIFFUSION - text-to-image guided modification of the input images, with prompts generated from the included wildcard txt files
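IMAGE_COLLAGE.py is described only at a high level. A minimal sketch of the same idea, assuming RGBA item images already loaded as float arrays in [0, 1] and no larger than the canvas, picks random layers, jitters their brightness and per-channel color, and alpha-composites them with the standard "over" operator at random offsets. The function names and jitter ranges are hypothetical, not the script's actual parameters.

```python
import numpy as np


def jitter_color(rgb: np.ndarray, rng: np.random.Generator,
                 brightness=(0.7, 1.3), channel=(0.85, 1.15)) -> np.ndarray:
    """Randomly scale overall brightness and each RGB channel; rgb is float in [0, 1], shape (H, W, 3)."""
    gain = rng.uniform(*brightness) * rng.uniform(*channel, size=3)
    return np.clip(rgb * gain, 0.0, 1.0)


def collage(images, size: int, count: int, rng: np.random.Generator) -> np.ndarray:
    """Composite `count` randomly chosen RGBA layers onto a transparent (size, size, 4) canvas.

    Layers are float RGBA in [0, 1] and must be no larger than the canvas. Each layer
    is color-jittered, placed at a random offset, and blended with the "over" operator
    using straight (non-premultiplied) alpha.
    """
    canvas = np.zeros((size, size, 4))
    for _ in range(count):
        layer = images[int(rng.integers(len(images)))]
        h, w = layer.shape[:2]
        y = int(rng.integers(0, size - h + 1))
        x = int(rng.integers(0, size - w + 1))
        src_rgb = jitter_color(layer[..., :3], rng)
        src_a = layer[..., 3:4]
        dst_rgb = canvas[y:y + h, x:x + w, :3]
        dst_a = canvas[y:y + h, x:x + w, 3:4]
        # "over": result alpha, then alpha-weighted color divided back out
        out_a = src_a + dst_a * (1.0 - src_a)
        num = src_rgb * src_a + dst_rgb * dst_a * (1.0 - src_a)
        out_rgb = np.divide(num, out_a, out=np.zeros_like(num), where=out_a > 0)
        canvas[y:y + h, x:x + w, :3] = out_rgb
        canvas[y:y + h, x:x + w, 3:4] = out_a
    return canvas


if __name__ == "__main__":
    red = np.zeros((8, 8, 4))
    red[..., 0] = 1.0
    red[..., 3] = 1.0
    result = collage([red], size=16, count=3, rng=np.random.default_rng(0))
    print(result.shape, float(result[..., 3].mean()))
```

To run it over a folder of PNGs, each file could be loaded with Pillow as `np.asarray(Image.open(path).convert("RGBA")) / 255.0`, and the canvas scaled back to `uint8` before saving. Passing a seeded `Generator` keeps each collage reproducible.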

#open anaconda as admin

#clone gen_item

git clone "https://github.com/CorvaeOboro/gen_item"

cd gen_item

#create conda venv from included environment.yml

conda env create --prefix venv -f environment.yml

conda activate C:/FOLDER/gen_item/venv

#clone STYLEGAN2ADA

git clone "https://github.com/NVlabs/stylegan2-ada-pytorch"

#clone VQGANCLIP

git clone "https://github.com/openai/CLIP"

git clone "https://github.com/CompVis/taming-transformers"

#download VQGAN checkpoint imagenet 16k

mkdir checkpoints

curl -L -o checkpoints/vqgan_imagenet_f16_16384.yaml -C - "https://heibox.uni-heidelberg.de/d/a7530b09fed84f80a887/files/?p=%2Fconfigs%2Fmodel.yaml&dl=1"

curl -L -o checkpoints/vqgan_imagenet_f16_16384.ckpt -C - "https://heibox.uni-heidelberg.de/d/a7530b09fed84f80a887/files/?p=%2Fckpts%2Flast.ckpt&dl=1"

# generate new seeds from checkpoints

python gen_item_stylegan2ada_generate.py

stylegan2ada requires CUDA https://developer.nvidia.com/cuda-11.3.0-download-archive

- generate procgen renders from houdini, selecting favored renders

# procgen houdini pdg render, requires houdini and zenv tools

python gen_item_houdini_render.py

- generate prompts for text2image mutation

# generate text prompts from the included wildcard txt files

python prompts/text_word_combine_complex.py

- mutate those renders via text guided VQGAN+CLIP

# vqgan+clip text2image batch alter from init image set

python gen_item_vqganclip.py

python gen_item_vqganclip.py --input_path="./item/ring" --input_prompt_list="./prompts/prompts_ring.txt"

- combine the renders and mutants via random collaging

# collage from generated icon set

python gen_item_collage.py

python gen_item_collage.py --input_path="./item/ring" --resolution=1024

- select the favored icons to create a stylegan2 dataset

- train a stylegan2 network, then generate seeds from the trained checkpoint

# stylegan2ada generate from trained icon checkpoint

python gen_item_stylegan2ada_generate.py

- cultivate the complete dataset through selection and art direction adjustments

- repeat to expand and refine through additional text guided mutation, retraining, and regenerating
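The prompt step in the workflow above (`prompts/text_word_combine_complex.py` and the wildcard txt files it draws from) is not documented in detail here. A minimal sketch of wildcard-style prompt generation, assuming one entry per line in each wildcard file and a hypothetical `__name__` placeholder syntax in the template, replaces each placeholder with a random entry. The function names are hypothetical; writing the results one prompt per line is only an assumption about what `--input_prompt_list` expects.

```python
import random
import re
from pathlib import Path
from typing import Dict, List

# Hypothetical placeholder syntax: __name__ refers to the wildcard list "name".
WILDCARD = re.compile(r"__(\w+?)__")


def load_wildcards(folder: str) -> Dict[str, List[str]]:
    """Read every *.txt in `folder` as a wildcard list named after the file (one entry per line)."""
    return {
        path.stem: [line.strip() for line in path.read_text(encoding="utf-8").splitlines() if line.strip()]
        for path in sorted(Path(folder).glob("*.txt"))
    }


def fill_template(template: str, wildcards: Dict[str, List[str]], rng: random.Random) -> str:
    """Replace each __name__ placeholder with a random entry from wildcards[name]."""
    return WILDCARD.sub(lambda m: rng.choice(wildcards[m.group(1)]), template)


def make_prompts(template: str, wildcards: Dict[str, List[str]], count: int, seed: int = 0) -> List[str]:
    """Generate `count` prompts reproducibly from one template."""
    rng = random.Random(seed)
    return [fill_template(template, wildcards, rng) for _ in range(count)]


if __name__ == "__main__":
    wildcards = {"metal": ["gold", "silver", "iron"], "gem": ["ruby", "emerald", "sapphire"]}
    prompts = make_prompts("a __metal__ ring set with a __gem__, fantasy item icon", wildcards, 4)
    # one prompt per line, e.g. for a prompt list file
    print("\n".join(prompts))
```

Keeping the vocabulary in per-category files (metals, gems, fluids) lets each item type reuse shared lists while its template controls the overall phrasing.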

- 20230430 = added potion stylegan2ada checkpoint gen 5

- 20230218 = added ring stylegan2ada checkpoint gen 7

- 20221016 = added helm stylegan2ada checkpoint gen 3

many thanks to

- NVIDIA NVLabs - https://github.com/NVlabs/stylegan2-ada

- CLIP - https://github.com/openai/CLIP

- VQGAN - https://github.com/CompVis/taming-transformers

- Katherine Crowson : https://github.com/crowsonkb https://arxiv.org/abs/2204.08583

- NerdyRodent : https://github.com/nerdyrodent/VQGAN-CLIP

@inproceedings{Karras2020ada,

title = {Training Generative Adversarial Networks with Limited Data},

author = {Tero Karras and Miika Aittala and Janne Hellsten and Samuli Laine and Jaakko Lehtinen and Timo Aila},

booktitle = {Proc. NeurIPS},

year = {2020}

}

@misc{esser2020taming,

title={Taming Transformers for High-Resolution Image Synthesis},

author={Patrick Esser and Robin Rombach and Björn Ommer},

year={2020},

eprint={2012.09841},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

@misc{https://doi.org/10.48550/arxiv.2103.00020,

doi = {10.48550/ARXIV.2103.00020},

url = {https://arxiv.org/abs/2103.00020},

author = {Radford, Alec and Kim, Jong Wook and Hallacy, Chris and Ramesh, Aditya and Goh, Gabriel and Agarwal, Sandhini and Sastry, Girish and Askell, Amanda and Mishkin, Pamela and Clark, Jack and Krueger, Gretchen and Sutskever, Ilya},

keywords = {Computer Vision and Pattern Recognition (cs.CV), Machine Learning (cs.LG), FOS: Computer and information sciences, FOS: Computer and information sciences},

title = {Learning Transferable Visual Models From Natural Language Supervision},

publisher = {arXiv},

year = {2021},

copyright = {arXiv.org perpetual, non-exclusive license}

}

free to all: creative commons CC0, free to redistribute, no attribution required