This project explores the opportunities of deep learning for character animation and control as part of my Ph.D. research at the University of Edinburgh in the School of Informatics, supervised by Taku Komura. Over the last couple of years, this project has become a modular and stable framework for data-driven character animation, including data processing, network training and runtime control, developed in Unity3D / TensorFlow / PyTorch. This repository enables the use of neural networks to animate biped locomotion, quadruped locomotion, and character-scene interactions with objects and the environment. Further advances in this research will continue to be added to this project.

SIGGRAPH Asia 2019

Neural State Machine for Character-Scene Interactions

Sebastian Starke*, He Zhang*, Taku Komura, Jun Saito. ACM Trans. Graph. 38, 6, Article 178.

(*Joint First Authors)

- Video - Paper - Code (coming soon) - Demos (coming soon) -

SIGGRAPH 2018

Mode-Adaptive Neural Networks for Quadruped Motion Control

He Zhang*, Sebastian Starke*, Taku Komura, Jun Saito. ACM Trans. Graph. 37, 4, Article 145.

(*Joint First Authors)

- Video - Paper - Code - Windows Demo - Linux Demo - Mac Demo - MoCap Data - ReadMe -

SIGGRAPH 2017

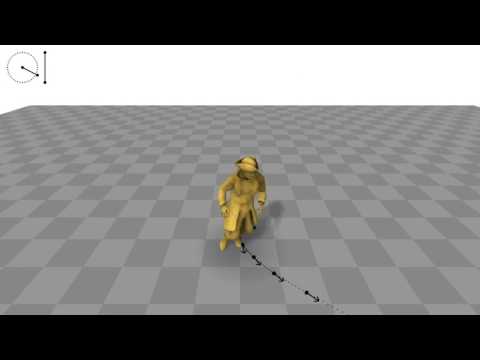

Phase-Functioned Neural Networks for Character Control

Daniel Holden, Taku Komura, Jun Saito. ACM Trans. Graph. 36, 4, Article 42.

- Video - Paper - Code (Unity) - Windows Demo - Linux Demo - Mac Demo -

In progress. More information will be added soon.

This code implementation is only for research or education purposes and is not freely available for commercial use or redistribution; this applies especially to the learned data. The intellectual property and code implementation belong to the University of Edinburgh and Adobe Systems. Licensing is possible if you want to apply this research for commercial use. For scientific use, please reference this repository together with the relevant publications listed above. In any case, please contact me if you intend to seriously use, redistribute, or publish anything related to this repository.