Created by Jiangtao Xie and Peihua Li

This repository contains the PyTorch source code, and models trained on the ImageNet 2012 dataset, for the following paper:

```
@InProceedings{Li_2018_CVPR,
  author = {Li, Peihua and Xie, Jiangtao and Wang, Qilong and Gao, Zilin},
  title = {Towards Faster Training of Global Covariance Pooling Networks by Iterative Matrix Square Root Normalization},
  booktitle = {IEEE Int. Conf. on Computer Vision and Pattern Recognition (CVPR)},
  month = {June},
  year = {2018}
}
```

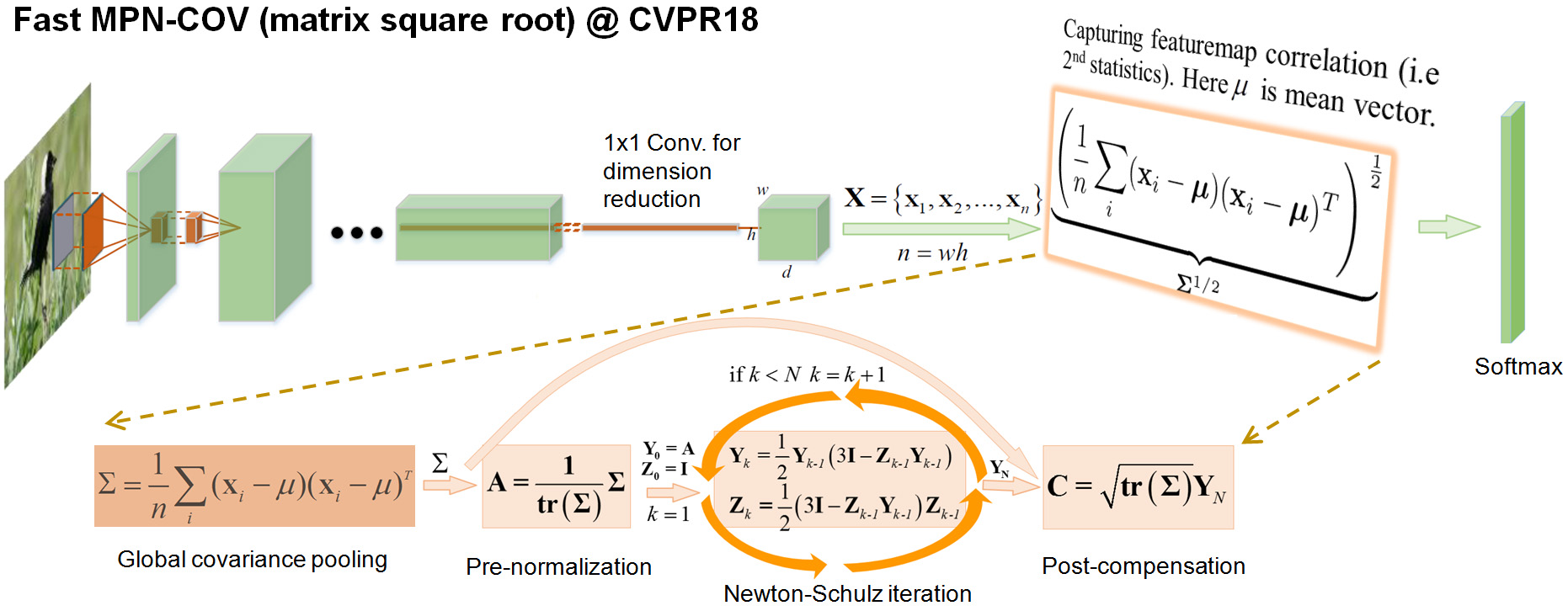

This paper concerns an iterative matrix square root normalization network (called fast MPN-COV). Unlike its predecessor, MPN-COV (ICCV 2017), which performs matrix power normalization by eigendecomposition, fast MPN-COV is very efficient and well suited to large-scale datasets. Code for bilinear CNN (B-CNN), compact bilinear pooling, global average pooling, etc. is also released, for both training from scratch and finetuning. If you use the code, please cite this fast MPN-COV work and its predecessor, MPN-COV.
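As a rough illustration of the iterative normalization, the forward computation can be sketched in a few lines. This is a minimal NumPy sketch, not the repository's PyTorch meta-layer; it assumes the pipeline as we read it from the paper: covariance pooling, pre-normalization by the trace, a coupled Newton-Schulz iteration, and post-compensation by the square root of the trace. The function names and the default `num_iter=5` are our own choices.

```python
import numpy as np


def covariance_pool(x):
    """Sample covariance of a d x M feature matrix (d channels, M spatial positions)."""
    m = x.shape[1]
    # I_bar = (1/M)(I - (1/M) 1 1^T) centres the features; Sigma = X I_bar X^T
    i_bar = (np.eye(m) - np.ones((m, m)) / m) / m
    return x @ i_bar @ x.T


def isqrt(sigma, num_iter=5):
    """Approximate matrix square root of an SPD matrix without eigendecomposition."""
    identity = np.eye(sigma.shape[0])
    trace = np.trace(sigma)
    # Pre-normalization: dividing by the trace puts the eigenvalues in (0, 1],
    # where the Newton-Schulz iteration converges
    y, z = sigma / trace, identity
    for _ in range(num_iter):
        # Coupled Newton-Schulz step: Y converges to sqrt(A), Z to A^(-1/2)
        t = 0.5 * (3.0 * identity - z @ y)
        y, z = y @ t, t @ z
    # Post-compensation: multiply by sqrt(trace) to undo the pre-normalization
    return np.sqrt(trace) * y


def triu_vectorize(c):
    """Keep the upper triangle (diagonal included) of the symmetric output: d(d+1)/2 values."""
    rows, cols = np.triu_indices(c.shape[0])
    return c[rows, cols]


rng = np.random.default_rng(0)
features = rng.standard_normal((4, 50))  # d = 4 channels, M = 50 positions
rep = triu_vectorize(isqrt(covariance_pool(features)))
print(rep.shape)  # (10,)
```

Only matrix multiplications appear in the loop, with no eigendecomposition, which is why this style of normalization maps well onto GPUs. If the backbone features are first reduced to 256 channels (an assumption here, not stated in this README), the upper triangle holds 256 * 257 / 2 = 32,896 values, in line with the 32K dimension reported for the fine-grained results.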

| Network | Top-1 Error (%), paper | Top-1 Error (%), reproduced | Top-5 Error (%), paper | Top-5 Error (%), reproduced | Pre-trained model (GoogleDrive) | Pre-trained model (BaiduCloud) |
|---|---|---|---|---|---|---|
| fast MPN-COV-ResNet50 | 22.14 | 21.71 | 6.22 | 6.13 | 217.3MB | 217.3MB |
| fast MPN-COV-ResNet101 | 21.21 | 20.99 | 5.68 | 5.56 | 289.9MB | 289.9MB |

| Backbone model | Dim. | Birds (paper) | Birds (reproduced) | Aircraft (paper) | Aircraft (reproduced) | Cars (paper) | Cars (reproduced) |
|---|---|---|---|---|---|---|---|
| ResNet-50 | 32K | 88.1 | 88.0 | 90.0 | 90.3 | 92.8 | 92.3 |
| ResNet-101 | 32K | 88.7 | TODO | 91.4 | TODO | 93.3 | TODO |

- Our method uses neither bounding boxes nor part annotations.

- The reproduced results are obtained by simply finetuning our pre-trained fast MPN-COV-ResNet models with a small learning rate; unlike the setup described in our paper, no SVM classifier is trained.

We implement our Fast MPN-COV (i.e., iSQRT-COV) meta-layer in PyTorch. Although the autograd package of PyTorch 0.4.0 or above computes the gradients of our meta-layer correctly, that of PyTorch 0.3.0 does not. We therefore implement the backpropagation of our meta-layer without the autograd package, which works well with both PyTorch 0.3.0 and 0.4.0.
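Implementing backpropagation without autograd means deriving the gradient of each iteration by hand and applying the steps in reverse order. The NumPy sketch below does this for the Newton-Schulz loop alone (pre-normalization and post-compensation are omitted) and treats the input as a general matrix; it is an illustration under those assumptions, not the repository's backward code, and `ns_forward`/`ns_backward` are hypothetical names. For one step Y' = 0.5 Y T, Z' = 0.5 T Z with T = 3I - ZY, the matrix chain rule gives dL/dT = 0.5 (Y^T dL/dY' + dL/dZ' Z^T), dL/dY = 0.5 dL/dY' T^T - Z^T dL/dT, and dL/dZ = 0.5 T^T dL/dZ' - dL/dT Y^T.

```python
import numpy as np


def ns_forward(a, num_iter):
    """Coupled Newton-Schulz iteration; caches (Y, Z, T) of every step for the backward pass."""
    identity = np.eye(a.shape[0])
    y, z = a, identity
    cache = []
    for _ in range(num_iter):
        t = 3.0 * identity - z @ y
        cache.append((y, z, t))
        y, z = 0.5 * y @ t, 0.5 * t @ z
    return y, cache


def ns_backward(grad_y, cache):
    """Hand-derived dL/dA given dL/dY_N, walking the cached steps in reverse."""
    grad_z = np.zeros_like(grad_y)  # Z_N does not feed the loss
    for y, z, t in reversed(cache):
        # Forward step was: Y' = 0.5 Y T, Z' = 0.5 T Z, with T = 3I - Z Y
        grad_t = 0.5 * (y.T @ grad_y + grad_z @ z.T)
        grad_y, grad_z = (
            0.5 * grad_y @ t.T - z.T @ grad_t,
            0.5 * t.T @ grad_z - grad_t @ y.T,
        )
    # Y_0 = A and Z_0 = I (a constant), so dL/dA is the gradient w.r.t. Y_0
    return grad_y


rng = np.random.default_rng(0)
a = rng.standard_normal((3, 3))
a = a @ a.T + 3.0 * np.eye(3)
a /= np.trace(a)  # trace-normalized SPD input
y_n, cache = ns_forward(a, num_iter=5)
grad_a = ns_backward(np.ones_like(y_n), cache)  # gradient of sum(Y_N) w.r.t. A
```

A hand-written backward like this is easy to validate against central finite differences of a scalar loss such as sum(W * Y_N). In PyTorch, the same logic would typically sit in the static `backward` method of a `torch.autograd.Function` subclass, so autograd never has to differentiate through the loop itself.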

To make it easy to add our Fast MPN-COV meta-layer to a network, we restructured the official PyTorch ImageNet example (imagenet/) and model definitions (models/). Each network is divided into three parts: 1) a feature extractor; 2) a global image representation; 3) a classifier. This lets us freely combine a network with Fast MPN-COV or other global image representation methods (e.g., global average pooling, bilinear pooling, compact bilinear pooling). On this basis, we can:

- Finetune a pre-trained model on any image classification dataset.
  - AlexNet, VGG, ResNet, Inception, etc.

- Finetune a pre-trained model with a powerful global image representation method on any image classification dataset.
  - Fast MPN-COV, Bilinear Pooling (B-CNN), Compact Bilinear Pooling (CBP), etc.

- Train a model from scratch with a powerful global image representation method on any image classification dataset.
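The three-part decomposition can be illustrated without any deep learning framework. In the NumPy sketch below, `Network`, `toy_features`, and the two pooling functions are hypothetical stand-ins, not the classes in src/network/ or src/representation/; the point is only that the backbone is reused as-is while the global representation is swapped, and that the classifier merely needs to know the representation's output size. The bilinear variant follows the usual B-CNN post-processing (signed square root, then l2 normalization).

```python
import numpy as np


def toy_features(image):
    """Stand-in for a CNN backbone: treats a (d, h, w) array as a feature map after ReLU."""
    return np.maximum(image, 0.0)


def global_average_pooling(fmap):
    """GAvP: average each of the d channels over all h * w positions -> d values."""
    return fmap.reshape(fmap.shape[0], -1).mean(axis=1)


def bilinear_pooling(fmap):
    """B-CNN style: averaged outer product, signed sqrt, l2 normalization -> d * d values."""
    x = fmap.reshape(fmap.shape[0], -1)
    b = (x @ x.T / x.shape[1]).ravel()
    b = np.sign(b) * np.sqrt(np.abs(b))
    return b / max(np.linalg.norm(b), 1e-12)


class Network:
    """Hypothetical three-part model: feature extractor -> global representation -> classifier."""

    def __init__(self, features, representation, rep_dim, num_classes, rng):
        self.features = features
        self.representation = representation
        # Only the classifier's input width depends on the chosen representation
        self.weight = 0.01 * rng.standard_normal((num_classes, rep_dim))
        self.bias = np.zeros(num_classes)

    def __call__(self, image):
        fmap = self.features(image)
        return self.weight @ self.representation(fmap) + self.bias


# Same backbone, two different global representations
rng = np.random.default_rng(0)
image = rng.standard_normal((8, 7, 7))
gap_net = Network(toy_features, global_average_pooling, 8, 10, rng)
bcnn_net = Network(toy_features, bilinear_pooling, 8 * 8, 10, rng)
print(gap_net(image).shape, bcnn_net(image).shape)  # (10,) (10,)
```

Swapping in another representation, such as the covariance-based one, would follow the same pattern: a function from feature map to vector, plus the matching `rep_dim`.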

Contributions are welcome. We will keep updating this repository with more networks and global image representation methods.

```
├── main.py
├── imagepreprocess.py
├── functions.py
├── model_init.py
├── src
│   ├── network
│   │   ├── __init__.py
│   │   ├── base.py
│   │   ├── inception.py
│   │   ├── alexnet.py
│   │   ├── mpncovresnet.py
│   │   ├── resnet.py
│   │   └── vgg.py
│   ├── representation
│   │   ├── __init__.py
│   │   ├── MPNCOV.py
│   │   ├── GAvP.py
│   │   ├── BCNN.py
│   │   ├── CBP.py
│   │   └── Custom.py
│   └── torchviz
│       ├── __init__.py
│       └── dot.py
├── trainingFromScratch
│   └── train.sh
└── finetune
    ├── finetune.sh
    └── two_stage_finetune.sh
```

- Implements functions for plotting convergence curves.

- Adopts the network visualization tool pytorchviz for plotting network structures.

- Uses shell scripts to manage the training process.

- Install PyTorch (0.4.0 or above).

- Clone the repository and install the requirements:

```
git clone https://github.com/jiangtaoxie/fast-MPN-COV
cd fast-MPN-COV
pip install -r requirements.txt
```

- Prepare the dataset as follows:

```
.
├── train
│   ├── class1
│   │   ├── class1_001.jpg
│   │   ├── class1_002.jpg
│   │   └── ...
│   ├── class2
│   ├── class3
│   ├── ...
│   └── classN
└── val
    ├── class1
    │   ├── class1_001.jpg
    │   ├── class1_002.jpg
    │   └── ...
    ├── class2
    ├── class3
    ├── ...
    └── classN
```
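Before launching training, a quick sanity check of this layout can save a failed run. The stdlib-only sketch below is a hypothetical helper, not part of the repository; it assumes main.py reads the folders the way the official PyTorch ImageNet example does (through torchvision's `ImageFolder`, which assigns labels in sorted folder-name order) and verifies that train/ and val/ contain the same class folders.

```python
from pathlib import Path


def class_index(root):
    """Check that train/ and val/ hold the same class folders; return {class_name: label}."""
    root = Path(root)
    splits = {}
    for split in ("train", "val"):
        split_dir = root / split
        if not split_dir.is_dir():
            raise FileNotFoundError(f"missing split directory: {split_dir}")
        # Only sub-directories count as classes; stray files are ignored
        splits[split] = sorted(p.name for p in split_dir.iterdir() if p.is_dir())
    if splits["train"] != splits["val"]:
        raise ValueError("train/ and val/ must contain the same class folders")
    # Labels follow sorted folder names (assumed to match ImageFolder's convention)
    return {name: idx for idx, name in enumerate(splits["train"])}
```

For example, `class_index("/path/to/your/dataset")` returns the folder-to-label mapping, or raises an error naming the missing split or reporting mismatched classes.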

Train from scratch:

```
cp trainingFromScratch/train.sh ./
# modify the dataset path in train.sh, then run:
sh train.sh
```

Finetune:

```
cp finetune/finetune.sh ./
# modify the dataset path in finetune.sh, then run:
sh finetune.sh
```

Two-stage finetune:

```
cp finetune/two_stage_finetune.sh ./
# modify the dataset path in two_stage_finetune.sh, then run:
sh two_stage_finetune.sh
```

Other implementations:

- MatConvNet implementation

- TensorFlow implementation (coming soon)

If you have any questions or suggestions, please contact me at jiangtaoxie@mail.dlut.edu.cn.