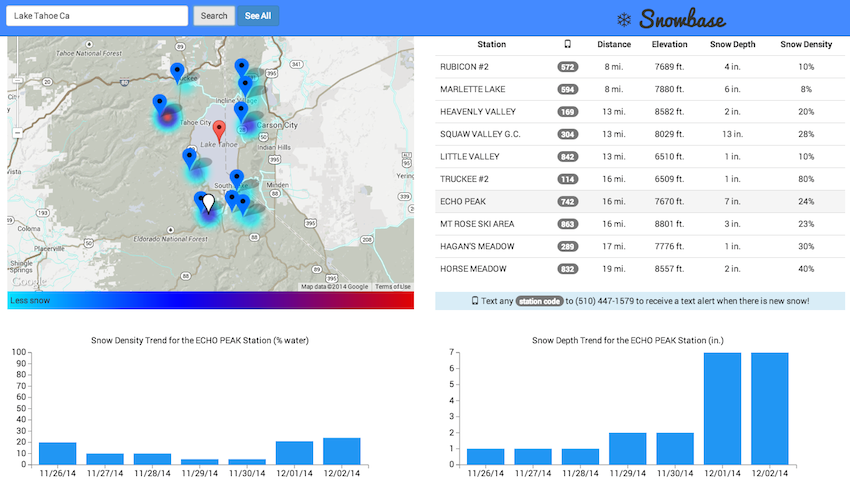

- SnowBase connects winter backcountry enthusiasts to up-to-date snowpack conditions with one click.

- For those who are eagerly awaiting new snow, easy-to-use text alerts are available for over 860 SNOTEL mountain stations.

- Data generated by the USDA's SNOTEL network is brought to life daily through data, mapping, and texting APIs plus data visualization technology.

- PostgreSQL

- Python

- Flask

- SQLAlchemy

- JavaScript

- jQuery

- d3

- Bootstrap

- HTML/CSS

- Powderlin.es SNOTEL API

- Google Maps API

- Twilio API

Data:

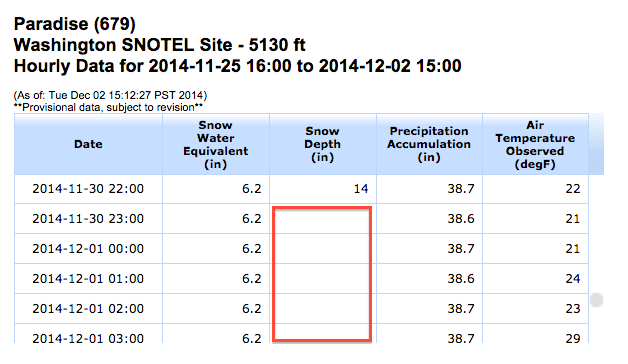

While the Powderlin.es API provides a robust service, the underlying SNOTEL stations and data system can misfire: stations sometimes serve missing data points, and the API occasionally experiences delays from the USDA server. To shield users from these delays and keep data always available, I used a relational database (PostgreSQL) to store the data points collected daily.
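A minimal sketch of the daily caching idea, assuming a simple readings table keyed by station triplet and date. It uses Python's built-in `sqlite3` as a stand-in for PostgreSQL so it runs on its own; the table, column, and function names are hypothetical, not SnowBase's actual schema. The `INSERT ... ON CONFLICT ... DO UPDATE` upsert works in both PostgreSQL and SQLite 3.24+, so re-running the daily job overwrites a day's values instead of duplicating them.

```python
import sqlite3

# Hypothetical schema: one row per station per day.
SCHEMA = """
CREATE TABLE IF NOT EXISTS readings (
    triplet    TEXT NOT NULL,   -- SNOTEL station triplet (station id:state:network)
    obs_date   TEXT NOT NULL,   -- ISO date of the observation
    snow_depth REAL,            -- inches
    swe        REAL,            -- snow water equivalent, inches
    PRIMARY KEY (triplet, obs_date)
)
"""

def store_daily_readings(conn, rows):
    """Upsert (triplet, obs_date, snow_depth, swe) tuples collected by the daily job."""
    conn.executemany(
        """
        INSERT INTO readings (triplet, obs_date, snow_depth, swe)
        VALUES (?, ?, ?, ?)
        ON CONFLICT (triplet, obs_date)
        DO UPDATE SET snow_depth = excluded.snow_depth, swe = excluded.swe
        """,
        rows,
    )
    conn.commit()

def latest_reading(conn, triplet):
    """Serve the newest cached reading, so a slow upstream API never blocks the user."""
    return conn.execute(
        "SELECT obs_date, snow_depth, swe FROM readings "
        "WHERE triplet = ? ORDER BY obs_date DESC LIMIT 1",
        (triplet,),
    ).fetchone()

conn = sqlite3.connect(":memory:")
conn.execute(SCHEMA)
```

The `?` placeholders are sqlite3 style; a PostgreSQL driver such as psycopg2 uses `%s` instead.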

To account for missing data points, I defined a minimally viable dataset and filtered out non-viable data points and datasets. Quality assurance (QA) is currently done by comparing data on the SNOTEL site against the chart and graph values on SnowBase. I expect the definition of a minimally viable dataset to evolve with user feedback.
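The filtering step can be sketched as two checks: one per reading, one per station dataset. The viability rules here (a date plus non-negative snow depth and snow water equivalent, and at least `min_readings` surviving readings per station) and the dictionary keys are hypothetical placeholders, not SnowBase's actual criteria.

```python
from math import isfinite

def to_number(value):
    """Return value as a float, or None if it is missing, non-numeric, or NaN/inf."""
    try:
        number = float(value)
    except (TypeError, ValueError):
        return None
    return number if isfinite(number) else None

def viable_reading(reading):
    """Hypothetical rule: a reading needs a date plus non-negative depth and SWE."""
    depth = to_number(reading.get("snow_depth"))
    swe = to_number(reading.get("swe"))
    return (
        bool(reading.get("date"))
        and depth is not None and depth >= 0
        and swe is not None and swe >= 0
    )

def viable_dataset(readings, min_readings=3):
    """Keep viable readings; drop the station's dataset entirely if too few remain."""
    kept = [r for r in readings if viable_reading(r)]
    return kept if len(kept) >= min_readings else []
```

Dropping a whole station, rather than showing it with gaps, keeps charts and comparisons from mixing complete and patchy data.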

Search Performance:

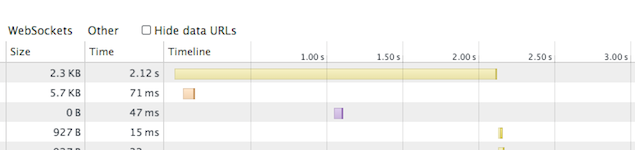

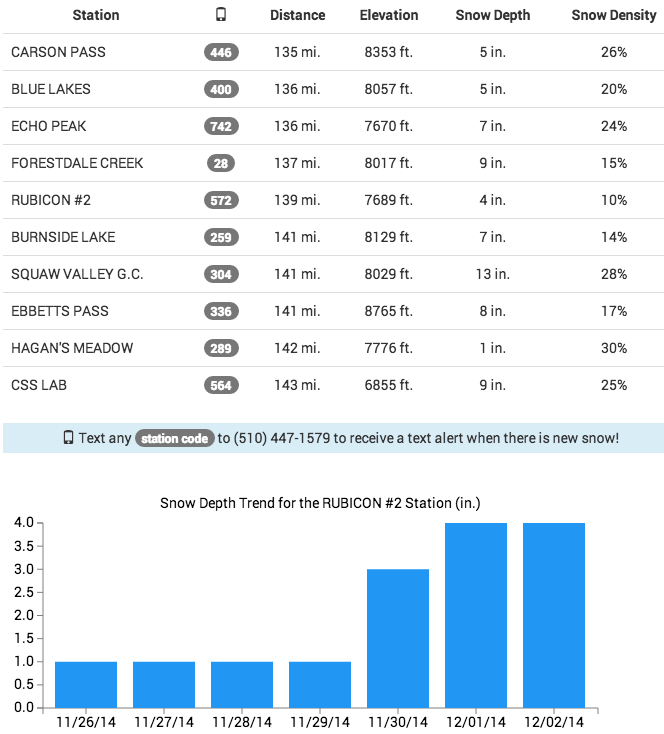

SnowBase takes user input and uses the Google Maps Geocoding API and the haversine formula to find the 10 closest stations in the database, returning the latest snow telemetry data for each station that is actively reporting data and has a snow depth > 0. This query is a classic example of the geospatial nearest neighbor search problem. Not surprisingly, the brute-force approach (calculating the distance from the input location to every station location in the database) did not perform well.

The search is optimized by storing geohashed SNOTEL station locations and geohashing the user input. Because geohashes are strings, and locations that share a geohash prefix fall in the same grid cell, geographic proximity searches can be performed with partial string match ("LIKE") queries. Nearby points can still fall on opposite sides of a cell boundary, so the geohashed user location is expanded in all directions to form a geographic "neighborhood" within which the 10 closest SNOTEL stations reporting snow can be found.

As I tested various ways to improve performance, I timed each solution against a set of known benchmark locations. Ultimately, locally hosted benchmark searches ran over 3 seconds faster than with the original brute-force algorithm. The benchmark image reflects the performance of an intermediate geofencing solution, built using latitude and longitude bounds, which also proved suboptimal.
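A minimal sketch of the geohash neighborhood search, using only the Python standard library. It assumes the user's input has already been geocoded to latitude/longitude, that stations live in a `stations` table with `name`, `lat`, `lon`, `geohash`, and `snow_depth` columns (hypothetical names, with sqlite3 standing in for PostgreSQL), and that each station's geohash is stored at a higher precision than the search prefix. The neighborhood is the user's cell plus its eight neighbors; if it holds fewer than `k` stations with snow, the prefix is shortened to widen the search, and the surviving candidates are ranked with the haversine formula.

```python
import sqlite3
from math import asin, cos, radians, sin, sqrt

_BASE32 = "0123456789bcdefghjkmnpqrstuvwxyz"  # standard geohash alphabet

def haversine_miles(lat1, lon1, lat2, lon2):
    """Great-circle distance in miles between two lat/long points."""
    phi1, phi2 = radians(lat1), radians(lat2)
    dphi, dlam = radians(lat2 - lat1), radians(lon2 - lon1)
    a = sin(dphi / 2) ** 2 + cos(phi1) * cos(phi2) * sin(dlam / 2) ** 2
    return 2 * 3958.8 * asin(sqrt(a))  # 3958.8 = mean Earth radius in miles

def geohash_encode(lat, lon, precision):
    """Interleave longitude/latitude bisection bits, 5 bits per base-32 character."""
    lat_rng, lon_rng = [-90.0, 90.0], [-180.0, 180.0]
    chars, bits, nbits, use_lon = [], 0, 0, True
    while len(chars) < precision:
        rng, val = (lon_rng, lon) if use_lon else (lat_rng, lat)
        mid = (rng[0] + rng[1]) / 2
        if val >= mid:
            bits, rng[0] = (bits << 1) | 1, mid
        else:
            bits, rng[1] = bits << 1, mid
        use_lon, nbits = not use_lon, nbits + 1
        if nbits == 5:
            chars.append(_BASE32[bits])
            bits, nbits = 0, 0
    return "".join(chars)

def geohash_bounds(gh):
    """Decode a geohash to its cell bounds (lat_min, lat_max, lon_min, lon_max)."""
    lat_rng, lon_rng, use_lon = [-90.0, 90.0], [-180.0, 180.0], True
    for ch in gh:
        val = _BASE32.index(ch)
        for shift in range(4, -1, -1):
            rng = lon_rng if use_lon else lat_rng
            mid = (rng[0] + rng[1]) / 2
            if (val >> shift) & 1:
                rng[0] = mid
            else:
                rng[1] = mid
            use_lon = not use_lon
    return lat_rng[0], lat_rng[1], lon_rng[0], lon_rng[1]

def neighborhood(gh):
    """The cell itself plus its (up to) 8 surrounding cells at the same precision."""
    lat_min, lat_max, lon_min, lon_max = geohash_bounds(gh)
    dlat, dlon = lat_max - lat_min, lon_max - lon_min
    clat, clon = (lat_min + lat_max) / 2, (lon_min + lon_max) / 2
    cells = set()
    for i in (-1, 0, 1):
        for j in (-1, 0, 1):
            lat = clat + i * dlat
            if -90 <= lat <= 90:
                lon = (clon + j * dlon + 180) % 360 - 180  # wrap across the antimeridian
                cells.add(geohash_encode(lat, lon, len(gh)))
    return cells

def closest_stations(conn, lat, lon, k=10, precision=4):
    """Prefix-match stations in the neighborhood, widening until k candidates exist."""
    while True:
        prefixes = sorted(neighborhood(geohash_encode(lat, lon, precision)))
        where = " OR ".join("geohash LIKE ?" for _ in prefixes)
        rows = conn.execute(
            f"SELECT name, lat, lon FROM stations WHERE snow_depth > 0 AND ({where})",
            [p + "%" for p in prefixes],
        ).fetchall()
        if len(rows) >= k or precision == 1:
            break
        precision -= 1  # shorter prefix = larger cells
    rows.sort(key=lambda r: haversine_miles(lat, lon, r[1], r[2]))
    return rows[:k]
```

One caveat of this design: a station just outside the 3×3 neighborhood can be closer than a candidate in a far corner of it, so a stricter version would confirm that the k-th candidate's distance lies within the neighborhood's inner radius before returning.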

Usability:

Existing SNOTEL representations require users to zoom, scroll, and click to find the data for a given SNOTEL station, and offer no way to compare data between stations. For this reason, I committed to making the interface extremely simple for the end user. I added a heatmap layer, a gradient key, and marker indicators to the map so that users can see at a glance where the deepest snow is. Data is accessed and compared instantly: as the user clicks on the data chart, jQuery and d3 render the trending data.
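On the server side, the heatmap and gradient key only need each station's position, a weight, and the overall scale. A minimal sketch of a JSON payload the Flask app could return for them, assuming snow depth is used as the heatmap weight; the input tuple shape, field names, and function name are hypothetical, and client-side JavaScript would convert each point into the weighted location format the map's heatmap layer expects.

```python
import json

def heatmap_payload(stations):
    """Build weighted heatmap points plus the max depth used to label the gradient key.

    stations: iterable of (name, lat, lng, snow_depth_inches) tuples (hypothetical shape).
    """
    points = [
        {"name": name, "lat": lat, "lng": lng, "weight": depth}
        for name, lat, lng, depth in stations
        if depth is not None and depth > 0  # only stations with snow on the ground
    ]
    max_depth = max((p["weight"] for p in points), default=0)
    return json.dumps({"points": points, "max_depth": max_depth})
```

Sending `max_depth` alongside the points lets the gradient key's top label match the deepest snow currently on the map.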

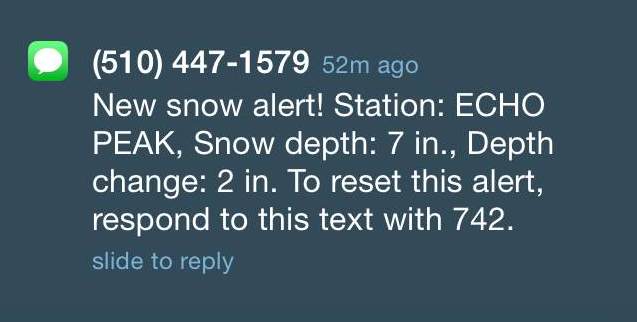

The text alert system reflects the same commitment to simplicity: having users log in to set or manage alerts felt too cumbersome for such a simple task. Instead, users just text a code to the SnowBase phone number, and the alert is set via the Twilio API. The user receives a single text alert when the station registers new snow, and the same text includes instructions for resetting the alert. Users can therefore manage their alerts entirely from their phone without visiting SnowBase. This lightweight solution uses a data table and a boolean toggle. Example text alert:
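As a sketch of the alert mechanism itself (not SnowBase's actual code), the data table plus boolean toggle can work like this: an incoming text arms an alert, and the daily scan sends one message per armed alert with new snow, then disarms it. Table and function names and the message wording are hypothetical, sqlite3 stands in for PostgreSQL, and sending is stubbed as a `send_sms(phone, text)` callable; in a real deployment that could wrap the Twilio helper library's `client.messages.create(...)`, with incoming texts arriving via Twilio's webhook `From` and `Body` parameters.

```python
import sqlite3

def init_alerts(conn):
    conn.execute(
        "CREATE TABLE IF NOT EXISTS alerts ("
        " phone TEXT NOT NULL, station_code TEXT NOT NULL,"
        " active INTEGER NOT NULL DEFAULT 1,"  # boolean toggle: 1 = waiting for new snow
        " PRIMARY KEY (phone, station_code))"
    )

def handle_incoming_sms(conn, phone, body):
    """Texting a station code sets (or re-arms) an alert for that phone number."""
    code = body.strip().upper()
    conn.execute(
        "INSERT INTO alerts (phone, station_code, active) VALUES (?, ?, 1) "
        "ON CONFLICT (phone, station_code) DO UPDATE SET active = 1",
        (phone, code),
    )
    conn.commit()
    return f"Alert set for station {code}."

def scan_alerts(conn, new_snow_by_code, send_sms):
    """Send one text per active alert whose station reports new snow, then toggle it off."""
    due = conn.execute("SELECT phone, station_code FROM alerts WHERE active = 1").fetchall()
    sent = 0
    for phone, code in due:
        inches = new_snow_by_code.get(code, 0)
        if inches > 0:
            send_sms(phone, f"{inches} in of new snow at {code}. Text {code} to reset this alert.")
            conn.execute(
                "UPDATE alerts SET active = 0 WHERE phone = ? AND station_code = ?",
                (phone, code),
            )
            sent += 1
    conn.commit()
    return sent
```

Because the toggle flips off after one message, a user gets a single alert per snowfall and re-arms it simply by texting the same code again.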

MVC: Contains the Flask app files, plus the reference files and scripts needed to create the database of stations and snow telemetry data points.

- requirements.txt: Requirements for the virtual environment.

- app.py: Flask application that captures the user's lat/long and returns the closest stations with telemetry data.

- haversine.py: Computation for distance between two points given lat/long for each.

- static and template folders: contain files for view rendering

- model.py: Creates data tables or adds to existing data tables.

- seed.py: Seeds tables using the reference file and/or by calling station APIs.

- add.py: Add snow telemetry data to existing database.

- alerts.py: Adds and updates alert data in the alerts database.

- scan.py: Scans database for alerts, sends alerts

- SnowDataParsed2014-11-08-0152Z.csv: Used to seed tables

- Snow.db: Included database, needed for running finder.py.

- geohashing.py: Geohashing algorithm

- seed_geohash.py: Seeds table with geohashed SNOTEL station locations

- create_station_json.py: Creates a csv file of all of the stations, including the station triplet needed for the API url.

- create_urls.py: Creates a file of all API URLs needed to retrieve a complete set of telemetry data.

- json_cron.py: Calls all station APIs and saves a file of json objects. Runs daily.

- parsed_cron.py: Calls all station APIs and saves a csv file of parsed data from each station. Runs daily.

- StationJSON: File of json objects from each station.

- stationsTriplets.csv: File of station triplets used to create API urls.

- APIurls.csv: csv file of API urls for all stations.

- APIurls_short.csv: short csv file of API urls for testing purposes.

- KEY: Key for parsing the output from the parsed_cron.py file.

- Various csv files are included as sample output
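The triplet-to-URL step of the data pipeline above can be sketched as follows. SNOTEL station triplets take the form station ID:state:network; everything else here is an assumption, including the one-triplet-per-row CSV layout and especially `BASE_URL`, which is a placeholder rather than the real Powderlin.es endpoint format.

```python
import csv
import io

# Placeholder only: substitute the real Powderlin.es endpoint format.
BASE_URL = "https://api.example.invalid/station/{triplet}"

def build_api_urls(triplet_csv):
    """Read station triplets (first column of each row) and build one API URL per station."""
    urls = []
    for row in csv.reader(triplet_csv):
        if not row or not row[0].strip():
            continue  # skip blank lines
        triplet = row[0].strip()
        if triplet.count(":") != 2:
            continue  # not in id:state:network form, e.g. a header row
        urls.append(BASE_URL.format(triplet=triplet))
    return urls

def write_urls(urls, out):
    """Write one URL per row, similar in spirit to APIurls.csv."""
    writer = csv.writer(out)
    for url in urls:
        writer.writerow([url])

# Usage with an in-memory file standing in for stationsTriplets.csv:
urls = build_api_urls(io.StringIO("triplet\n1:AA:SNTL\n\n2:BB:SNTL\n"))
```

Generating the URL list once and reusing it keeps the daily cron jobs down to a simple loop over a file.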