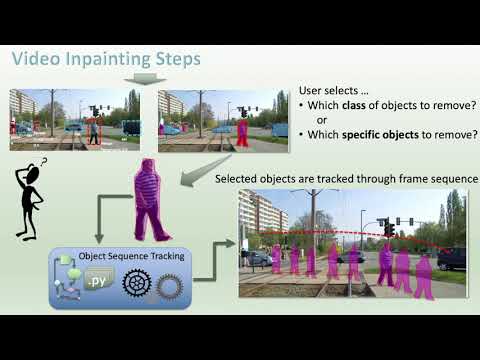

This project demonstrates a prototype video editing system based on “inpainting”. Inpainting is an image editing method that replaces masked regions of an image with a suitable background. The resulting video is thus free of the selected objects and more readily adaptable for further simulation. The system uses a three-step approach: (1) detection, (2) mask grouping, and (3) inpainting. The detection step identifies objects within the video based on a given object class definition and produces pixel-level masks. Next, the object masks are grouped and tracked through the frame sequence to determine persistence and allow correction of classification results. Finally, the grouped masks are used to target specific object instances in the video for removal by inpainting.
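The mask grouping step can be illustrated with a small sketch. It is not the project's actual tracker: it assumes each detection is reduced to a bounding box `(x1, y1, x2, y2)` and links boxes in consecutive frames by greedy intersection-over-union (IoU) matching. The names `iou`, `group_detections`, `persistent_tracks`, and the thresholds are hypothetical choices for illustration.

```python
from typing import Dict, List, Tuple

Box = Tuple[int, int, int, int]  # (x1, y1, x2, y2), assumed box format


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def group_detections(frames: List[List[Box]],
                     iou_threshold: float = 0.3) -> Dict[int, List[Tuple[int, Box]]]:
    """Greedily link each box to the best-overlapping track from the previous frame.

    Returns a mapping track_id -> list of (frame_index, box).
    """
    tracks: Dict[int, List[Tuple[int, Box]]] = {}
    last_seen: Dict[int, Tuple[int, Box]] = {}
    next_id = 0
    for t, boxes in enumerate(frames):
        # Only tracks observed in the immediately preceding frame can be extended.
        candidates = {tid: box for tid, (ft, box) in last_seen.items() if ft == t - 1}
        used = set()
        for box in boxes:
            best_id, best_score = None, iou_threshold
            for tid, prev in candidates.items():
                if tid in used:
                    continue
                score = iou(prev, box)
                if score >= best_score:
                    best_id, best_score = tid, score
            if best_id is None:  # no sufficient overlap: start a new track
                best_id = next_id
                next_id += 1
                tracks[best_id] = []
            used.add(best_id)
            tracks[best_id].append((t, box))
            last_seen[best_id] = (t, box)
    return tracks


def persistent_tracks(tracks: Dict[int, List[Tuple[int, Box]]],
                      min_frames: int = 3) -> Dict[int, List[Tuple[int, Box]]]:
    """Keep only tracks observed in at least min_frames frames."""
    return {tid: obs for tid, obs in tracks.items() if len(obs) >= min_frames}
```

Filtering by track length is one simple way to use persistence: detections that appear in only a frame or two are likely misclassifications and can be dropped before inpainting.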

The end result of this project is a video editing platform for locomotive route simulation. The final video output demonstrates the system’s ability to automatically remove moving pedestrians, which commonly appear in street tram simulations, from a video sequence. This work also addresses the limitations of the system, in particular its inability to remove quasi-stationary objects. The overall outcome of the project is a video editing system with automation capabilities rivaling those of commercial inpainting software.

| Result | Description |
|---|---|
| | Single object removal: a single vehicle is removed using a conforming mask (elliptical dilation mask of 21 pixels). Source: YouTube video 15:16-15:17 |

| Result | Description |
|---|---|
| | Multiple object removal: pedestrians are removed using bounding-box shaped masks (elliptical dilation mask of 21 pixels). Source: YouTube video 15:26-15:27 |
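Both results mention an elliptical dilation mask of 21 pixels. The following is a minimal, dependency-free sketch of what such a dilation does to a binary object mask; it is an illustration under assumptions, not the project's code. The helper names `elliptical_kernel` and `dilate` are hypothetical, and the kernel shape may differ slightly at the edges from what a library produces. In practice, OpenCV's `cv2.getStructuringElement(cv2.MORPH_ELLIPSE, (21, 21))` together with `cv2.dilate` is a common way to do this.

```python
def elliptical_kernel(size):
    """Return a size x size grid of 0/1 values approximating a filled ellipse."""
    r = (size - 1) / 2.0
    scale = r or 1.0  # avoid division by zero for size == 1
    kernel = []
    for y in range(size):
        row = []
        for x in range(size):
            dx, dy = (x - r) / scale, (y - r) / scale
            row.append(1 if dx * dx + dy * dy <= 1.0 else 0)
        kernel.append(row)
    return kernel


def dilate(mask, kernel):
    """Binary dilation: stamp the kernel onto every set pixel of the mask.

    mask is a list of rows of 0/1 values; the kernel is assumed to be
    odd-sized and symmetric. Pixels falling outside the image are ignored.
    """
    h, w = len(mask), len(mask[0])
    c = len(kernel) // 2
    offsets = [(ky - c, kx - c)
               for ky, row in enumerate(kernel)
               for kx, v in enumerate(row) if v]
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            if mask[y][x]:
                for dy, dx in offsets:
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < h and 0 <= xx < w:
                        out[yy][xx] = 1
    return out


# Example: grow a single-pixel mask with a 21-pixel elliptical kernel.
mask = [[0] * 31 for _ in range(31)]
mask[15][15] = 1
grown = dilate(mask, elliptical_kernel(21))
```

Dilating the mask before inpainting gives the object a margin, so that soft edges, shadows, or slightly misaligned detections are also covered by the region being replaced.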

- Install NVIDIA Docker, if not already installed (see setup)
- Follow the instructions from the YouTube video for the specific project setup: