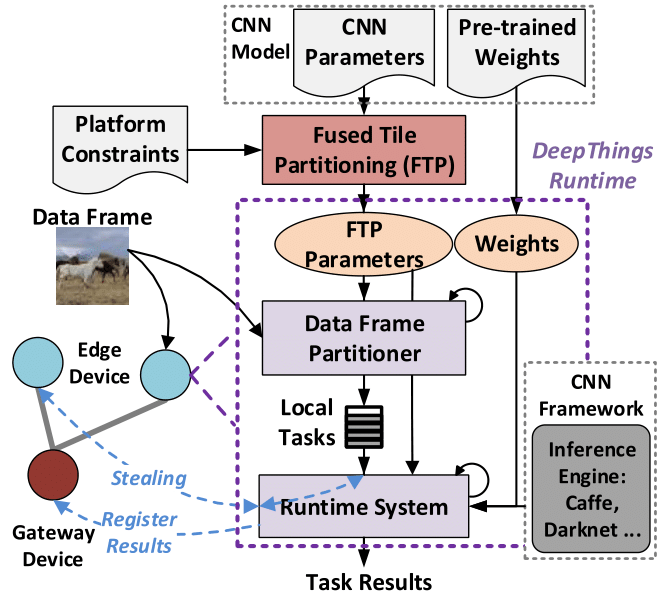

DeepThings is a framework for locally distributed and adaptive CNN inference in resource-constrained IoT edge clusters. DeepThings mainly consists of:

- A Fused Tile Partitioning (FTP) method for dividing convolutional layers into independently distributable tasks. FTP fuses layers and partitions them vertically in a grid fashion, which substantially reduces communication and task migration overhead.

- A distributed work stealing runtime system for IoT clusters to adaptively distribute FTP partitions in dynamic application scenarios.

For more details of DeepThings, please refer to [1].
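To make the grid partitioning concrete, here is a minimal sketch in C. It is not the code in this repository. It splits one axis of the last fused layer's output into grid cells and traces each cell back through the fused layers to find the input range that cell needs; the same logic would apply independently to rows and columns. The `range_t` and `layer_t` types, all function names, and the layer parameters (filter size, stride, padding) are hypothetical and chosen only for illustration.

```c
#include <assert.h>

/* Inclusive pixel range along one axis. */
typedef struct { int lo, hi; } range_t;

/* Hypothetical layer descriptor (not a DeepThings or Darknet type). */
typedef struct {
    int filter;   /* filter (kernel) size along this axis */
    int stride;   /* stride along this axis */
    int pad;      /* zero padding on each side */
    int in_size;  /* input size along this axis */
} layer_t;

/* Range covered by cell `idx` when `size` pixels are split into `parts` cells. */
static range_t grid_cell(int size, int parts, int idx) {
    assert(parts > 0 && idx >= 0 && idx < parts);
    range_t r;
    r.lo = (idx * size) / parts;
    r.hi = ((idx + 1) * size) / parts - 1;
    return r;
}

/* Input range that one layer needs in order to produce output range `out`. */
static range_t input_range(layer_t l, range_t out) {
    range_t in;
    in.lo = out.lo * l.stride - l.pad;
    in.hi = out.hi * l.stride - l.pad + l.filter - 1;
    /* Clamp to the real feature map; positions outside it are padding. */
    if (in.lo < 0) in.lo = 0;
    if (in.hi > l.in_size - 1) in.hi = l.in_size - 1;
    return in;
}

/* Trace an output range of the last fused layer back to the first layer's input. */
static range_t trace_back(const layer_t *layers, int n_layers, range_t out) {
    for (int i = n_layers - 1; i >= 0; i--) {
        out = input_range(layers[i], out);
    }
    return out;
}
```

As a worked case, take a 3x3 convolution (stride 1, padding 1) followed by a 2x2 max-pooling layer (stride 2) on an 8-pixel-wide input. Splitting the 4-pixel output into two cells gives input ranges [0, 4] and [3, 7]. The two overlapping pixels are the data that neighboring partitions both need, which is why fusing layers before partitioning lets each tile run independently.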

This repository includes a lightweight, self-contained and portable C implementation of DeepThings. It uses an NNPACK-accelerated Darknet as the default inference engine. More information on porting DeepThings to different inference frameworks and platforms can be found below.

The current implementation has been tested on Raspberry Pi 3 Model B running Raspbian.

Edit the configuration file include/configure.h according to your IoT cluster parameters, then run:

```bash
make clean_all
make
```

This will automatically compile all related libraries and generate the DeepThings executable. If you want to run DeepThings on Raspberry Pi with NNPACK acceleration, you need to install NNPACK first, before running the Makefile commands, and set the options in the Makefile as below:

```
NNPACK=1
ARM_NEON=1
```

To perform distributed inference, you need to download pre-trained CNN models and put them in the ./models folder. The current implementation is tested with YOLOv2, which can be downloaded from YOLOv2 model and YOLOv2 weights. If the link doesn't work, you can also find the weights here.

For input data, images need to be numbered (starting from 0), renamed as `<#>.jpg`, and placed in the ./data/input folder.
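The naming convention can be illustrated with a small C helper that builds the path for a given image number. This is only a sketch of the convention described above; the function name `input_image_path` is hypothetical and is not part of the DeepThings sources.

```c
#include <assert.h>
#include <stdio.h>

/*
 * Hypothetical helper: writes "./data/input/<index>.jpg" into buf.
 * Returns 0 on success, or -1 if the buffer is too small.
 */
static int input_image_path(char *buf, size_t len, int index) {
    assert(buf != NULL && index >= 0);
    int n = snprintf(buf, len, "./data/input/%d.jpg", index);
    return (n >= 0 && (size_t)n < len) ? 0 : -1;
}
```

With this convention, the first image is `./data/input/0.jpg`, the second is `./data/input/1.jpg`, and so on.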

An overview of DeepThings command line options is listed below:

```bash
#./deepthings -mode <execution mode: {start, gateway, data_src, non_data_src}>
#             -total_edge <total edge number: t>
#             -edge_id <edge device ID: {0, ... t-1}>
#             -n <FTP dimension: N>
#             -m <FTP dimension: M>
#             -l <number of fused layers: L>
```

For example, assume you have a host machine H, a gateway device G, and two edge devices E0 (data source) and E1 (idle), and you want to perform a 5x5 FTP with 16 fused layers. Then follow the steps below:

On gateway device G:

```bash
./deepthings -mode gateway -total_edge 2 -n 5 -m 5 -l 16
```

On edge device E0:

```bash
./deepthings -mode data_src -edge_id 0 -n 5 -m 5 -l 16
```

On edge device E1:

```bash
./deepthings -mode non_data_src -edge_id 1 -n 5 -m 5 -l 16
```

All the devices will now wait for a trigger signal to start. Send it from your host machine H:

```bash
./deepthings -mode start
```

To try FTP-partitioned inference on a single device first, you can use the single-device execution example in the ./examples folder. To run it:

```bash
make clean_all
make
make test
```

This first initializes a gateway context and a client context in different local threads. FTP partition inference results are then transferred between the queues associated with each context to emulate inter-device communication.
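The thread-and-queue emulation described above can be sketched roughly as follows, assuming POSIX threads. This is not the example shipped in ./examples: the `queue_t` and `ctx_t` types, the function names, and the use of plain integers in place of partition results are all hypothetical simplifications.

```c
#include <assert.h>
#include <pthread.h>
#include <stddef.h>

#define QUEUE_CAP 8

/* Hypothetical bounded FIFO guarded by a mutex and two condition variables. */
typedef struct {
    int items[QUEUE_CAP];
    int head, count;
    pthread_mutex_t lock;
    pthread_cond_t not_empty, not_full;
} queue_t;

static void queue_init(queue_t *q) {
    q->head = 0;
    q->count = 0;
    pthread_mutex_init(&q->lock, NULL);
    pthread_cond_init(&q->not_empty, NULL);
    pthread_cond_init(&q->not_full, NULL);
}

/* Blocks while the queue is full, then appends one item. */
static void queue_push(queue_t *q, int item) {
    pthread_mutex_lock(&q->lock);
    while (q->count == QUEUE_CAP) pthread_cond_wait(&q->not_full, &q->lock);
    q->items[(q->head + q->count) % QUEUE_CAP] = item;
    q->count++;
    pthread_cond_signal(&q->not_empty);
    pthread_mutex_unlock(&q->lock);
}

/* Blocks while the queue is empty, then removes the oldest item. */
static int queue_pop(queue_t *q) {
    pthread_mutex_lock(&q->lock);
    while (q->count == 0) pthread_cond_wait(&q->not_empty, &q->lock);
    int item = q->items[q->head];
    q->head = (q->head + 1) % QUEUE_CAP;
    q->count--;
    pthread_cond_signal(&q->not_full);
    pthread_mutex_unlock(&q->lock);
    return item;
}

typedef struct { queue_t *results; int n_tasks; int received; } ctx_t;

/* Client context: stands in for inference, emitting one result per partition. */
static void *client_thread(void *arg) {
    ctx_t *c = arg;
    for (int task = 0; task < c->n_tasks; task++) queue_push(c->results, task);
    return NULL;
}

/* Gateway context: collects one result per partition. */
static void *gateway_thread(void *arg) {
    ctx_t *c = arg;
    for (int i = 0; i < c->n_tasks; i++) {
        (void)queue_pop(c->results);
        c->received++;
    }
    return NULL;
}

/* Runs both contexts in local threads; returns the number of results received. */
static int run_emulation(int n_tasks) {
    assert(n_tasks >= 0);
    queue_t q;
    queue_init(&q);
    ctx_t client = { &q, n_tasks, 0 };
    ctx_t gateway = { &q, n_tasks, 0 };
    pthread_t ct, gt;
    pthread_create(&gt, NULL, gateway_thread, &gateway);
    pthread_create(&ct, NULL, client_thread, &client);
    pthread_join(ct, NULL);
    pthread_join(gt, NULL);
    return gateway.received;
}
```

Calling `run_emulation(25)` would correspond to one frame of a 5x5 FTP: the client pushes 25 partition results and the gateway receives all 25. On most toolchains this needs to be compiled with `-pthread`.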

To port DeepThings, you only need to modify the corresponding abstraction layer files. If you want to use a different CNN inference engine, modify:

If you want to port DeepThings onto a different OS (currently using UNIX pthreads), modify:

If you want to use DeepThings with different networking APIs (currently using UNIX sockets), modify:

[1] Z. Zhao, K. Mirzazad and A. Gerstlauer, "DeepThings: Distributed Adaptive Deep Learning Inference on Resource-Constrained IoT Edge Clusters," CODES+ISSS 2018, special issue of IEEE Transactions on Computer-Aided Design of Integrated Circuits and Systems (TCAD).

Zhuoran Zhao, zhuoran@utexas.edu