There are many NeRF implementations, yet the way rays are projected from the camera onto the scene is rarely explained well. This project aims to visualize exactly how rays are projected from the camera through the image plane, and how camera parameters such as focal length and sensor size affect that projection.
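For readers who want the math behind the visualization, here is a minimal Python sketch of standard pinhole ray generation as used in NeRF-style code. It is not the project's Unity (C#) implementation. The function names, the +z-forward axis convention, and placing the principal point at the image centre are assumptions. The sketch shows how a physical focal length and sensor size (in millimetres) become a focal length in pixels, which then gives a per-pixel ray direction and a field of view.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def horizontal_fov_deg(focal_mm: float, sensor_width_mm: float) -> float:
    """Horizontal field of view of a pinhole camera: 2 * atan(sensor / (2 * f))."""
    return math.degrees(2.0 * math.atan(sensor_width_mm / (2.0 * focal_mm)))


def pixel_ray_camera(i: int, j: int, width: int, height: int,
                     focal_mm: float, sensor_w_mm: float, sensor_h_mm: float) -> Vec3:
    """Unit direction, in camera space, through the centre of pixel (i, j).

    Assumed convention: x right, y up, camera looking down +z,
    principal point at the image centre, i = column, j = row counted from the top.
    """
    fx = focal_mm * width / sensor_w_mm   # focal length in pixels (horizontal)
    fy = focal_mm * height / sensor_h_mm  # focal length in pixels (vertical)
    x = (i + 0.5 - width / 2.0) / fx
    y = -(j + 0.5 - height / 2.0) / fy    # image rows grow downwards, camera y grows upwards
    z = 1.0
    norm = math.sqrt(x * x + y * y + z * z)
    return (x / norm, y / norm, z / norm)


def to_world(R: List[List[float]], position: Vec3, d: Vec3) -> Tuple[Vec3, Vec3]:
    """Rotate a camera-space direction by R (3x3, row-major); the ray origin is the camera position."""
    world_d = tuple(sum(R[r][c] * d[c] for c in range(3)) for r in range(3))
    return position, world_d
```

With a fixed sensor, increasing `focal_mm` narrows the field of view. For example, `horizontal_fov_deg(50.0, 36.0)` is about 39.6°, so every off-centre ray bends closer to the optical axis. The original NeRF code uses an OpenGL-style camera whose rays point along -z rather than +z.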

Implemented using Unity 2022.3.0f1 LTS.

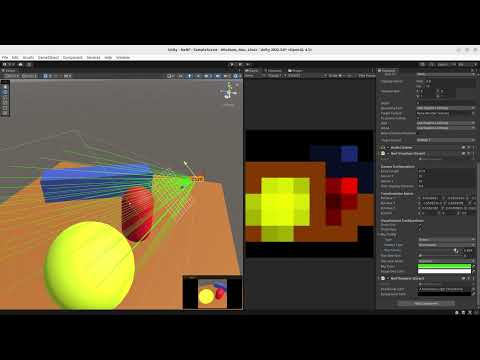

The camera in this project follows the pinhole camera model, as shown below:

- **Camera Configurations** controls the camera intrinsic parameters, as shown in the image above.
- **Transformation Matrix** shows the rotation matrix and the position of the camera.
- **Show Grid** shows the grid on the image plane.
- **Show Rays** shows the rays projected from the camera to the image plane.
- **Ray Config**
  - **Type**
    - **Scene** projects the rays from the camera until they intersect with the scene.
    - **Image** projects the rays from the camera until they intersect with the image plane.
  - **Iterator Type**
    - **Single** shows only one ray.
    - **Accumulate** shows all the rays up to the current ray.
  - **Ray Iterator** controls how many rays are projected from the camera.
  - **Max Row Grid** controls the pixel resolution of the image plane.
  - **Obj Layer Mask** controls which layer the rays intersect with. Put all the objects in the scene in this layer.
- The rest are self-explanatory.
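The Ray Config options reduce to two small pieces of logic. The first decides which pixel rays are drawn for a given Ray Iterator value. The second decides where a ray stops when Type is Image. The sketch below is hypothetical and is not taken from the project's scripts. The function names, the square `max_row_grid` × `max_row_grid` grid, the row-major visiting order, and the image plane placed at z = `plane_distance` in camera space (for a pinhole camera, typically the focal length) are all assumptions. For Type Scene, the stopping point would instead come from a raycast against the objects on the Obj Layer Mask.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def rays_to_draw(ray_iterator: int, iterator_type: str, max_row_grid: int) -> List[Tuple[int, int]]:
    """Return the (row, col) pixels whose rays are drawn.

    Hypothetical layout: a square max_row_grid x max_row_grid grid visited in row-major order.
    """
    total = max_row_grid * max_row_grid
    k = max(0, min(ray_iterator, total - 1))  # clamp the iterator to a valid ray index
    if iterator_type == "Single":
        indices = [k]                 # only the current ray
    elif iterator_type == "Accumulate":
        indices = list(range(k + 1))  # every ray up to and including the current one
    else:
        raise ValueError(f"unknown iterator type: {iterator_type}")
    return [divmod(idx, max_row_grid) for idx in indices]


def hit_image_plane(origin: Vec3, direction: Vec3, plane_distance: float) -> Vec3:
    """Intersect a camera-space ray with the plane z = plane_distance (Type = Image)."""
    if direction[2] <= 0.0:
        raise ValueError("ray does not travel towards the image plane")
    t = (plane_distance - origin[2]) / direction[2]
    return tuple(o + t * d for o, d in zip(origin, direction))
```

For example, with a 3 × 3 grid, `rays_to_draw(2, "Accumulate", 3)` returns the first row of pixels, `[(0, 0), (0, 1), (0, 2)]`, while `rays_to_draw(2, "Single", 3)` returns only `[(0, 2)]`.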

- The code is not optimized for performance, as the objective is simply to visualize the ray projection.

- All scripts are executed in edit mode, so you don't need to play the scene. However, be careful with the changes you make in the scene, as they will be saved.

- The rendered image uses simple Lambertian shading, so it does not perform the volumetric rendering described in the NeRF paper.
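To make the last note concrete, here is a minimal sketch of Lambertian shading for a single ray. It assumes a hypothetical scene made of one sphere lit by a directional light. In Unity, the hit point and normal would come from a raycast (e.g. `Physics.Raycast` filtered by the layer mask) rather than from the analytic `hit_sphere` helper used here, and all names are illustrative. Brightness depends only on the angle between the surface normal and the light direction. No density or transmittance is accumulated along the ray, unlike NeRF's volume rendering.

```python
import math
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def normalize(v: Vec3) -> Vec3:
    n = math.sqrt(dot(v, v))
    return (v[0] / n, v[1] / n, v[2] / n)


def hit_sphere(origin: Vec3, direction: Vec3, center: Vec3, radius: float) -> Optional[float]:
    """Smallest positive t with |origin + t*direction - center| = radius (direction must be unit length)."""
    oc = tuple(o - c for o, c in zip(origin, center))
    b = dot(oc, direction)
    c = dot(oc, oc) - radius * radius
    disc = b * b - c
    if disc < 0.0:
        return None  # the ray misses the sphere
    sqrt_disc = math.sqrt(disc)
    for t in (-b - sqrt_disc, -b + sqrt_disc):
        if t > 1e-6:  # nearest intersection in front of the ray origin
            return t
    return None


def lambert(albedo: float, normal: Vec3, to_light: Vec3) -> float:
    """Lambertian (diffuse) shading: albedo * max(0, n . l)."""
    return albedo * max(0.0, dot(normalize(normal), normalize(to_light)))


def shade_ray(origin: Vec3, direction: Vec3, center: Vec3, radius: float,
              to_light: Vec3, albedo: float = 1.0) -> float:
    """Shade one ray: background (0.0) on a miss, Lambertian brightness on a hit."""
    t = hit_sphere(origin, direction, center, radius)
    if t is None:
        return 0.0
    hit = tuple(o + t * d for o, d in zip(origin, direction))
    normal = tuple(h - c for h, c in zip(hit, center))  # outward normal (unnormalized)
    return lambert(albedo, normal, to_light)
```

Here `to_light` points from the surface towards the light. A surface facing the light is fully lit, and a surface facing away receives 0.0.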