Ever wanted to render a (large-scale) NeRF scene in real time on the web? You are welcome to try our code.

This repository contains the official implementation of City-on-Web and a PyTorch implementation of MERF, both built on the nerfstudio framework.

City-on-Web: Real-time Neural Rendering of Large-scale Scenes on the Web

Kaiwen Song, Xiaoyi Zeng, Chenqu Ren, Juyong Zhang

European Conference on Computer Vision (ECCV)

Project page / Paper / Twitter

If you have any questions about this repo, feel free to open an issue or contact SA21001046@mail.ustc.edu.cn.

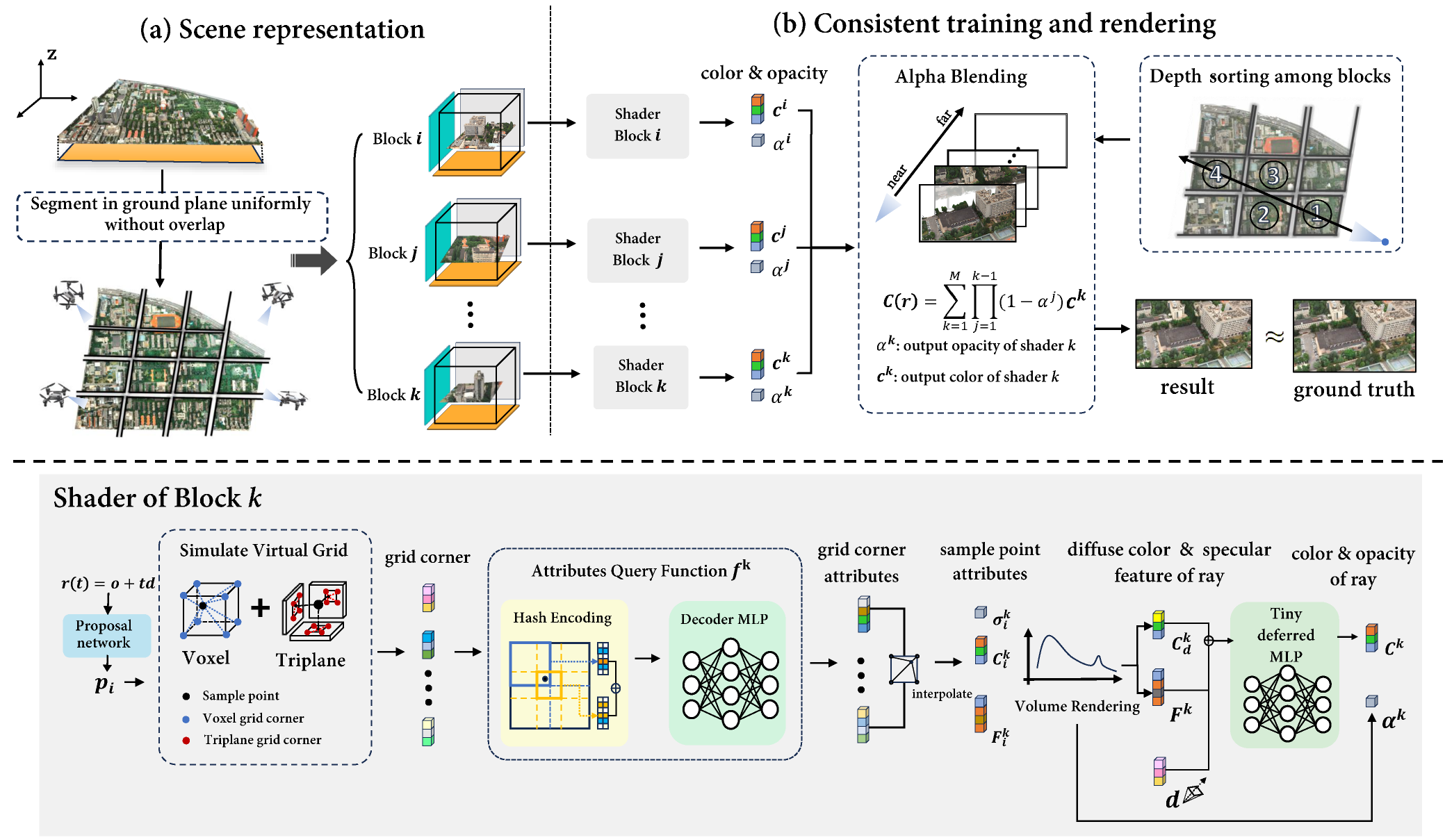

During training, we uniformly partition the scene into blocks and reconstruct it at the finest level of detail (LOD). To ensure 3D consistency, we use a resource-independent, block-based volume rendering strategy. To generate coarser LODs, we downsample the virtual grid points and retrain a coarser model. This design enables real-time rendering by allowing rendering resources to be loaded dynamically.
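The sketch below illustrates a uniform block partition and a simple LOD hierarchy. It is not the repository's code. It assumes that blocks tile the ground-plane (x, y) rectangle on a regular grid. It also assumes that each coarser LOD halves the virtual grid resolution and merges 2x2 fine blocks into one coarse block. The names `assign_to_blocks`, `lod_resolution`, and `lod_parent` are hypothetical.

```python
import numpy as np


def assign_to_blocks(points_xy, xy_min, xy_max, n_blocks_x, n_blocks_y):
    """Return the flat block index (ix * n_blocks_y + iy) of each 2D point.

    The rectangle [xy_min, xy_max] is split into a uniform n_blocks_x x n_blocks_y
    grid; points on the far border are clamped into the last row/column.
    """
    pts = np.asarray(points_xy, dtype=float)
    lo = np.asarray(xy_min, dtype=float)
    hi = np.asarray(xy_max, dtype=float)
    block_size = (hi - lo) / np.array([n_blocks_x, n_blocks_y], dtype=float)
    idx = np.floor((pts - lo) / block_size).astype(int)
    idx[:, 0] = np.clip(idx[:, 0], 0, n_blocks_x - 1)
    idx[:, 1] = np.clip(idx[:, 1], 0, n_blocks_y - 1)
    return idx[:, 0] * n_blocks_y + idx[:, 1]


def lod_resolution(finest_resolution, level):
    """Virtual grid resolution at a given LOD (assumption: halved per level)."""
    return max(1, finest_resolution >> level)


def lod_parent(ix, iy, level):
    """Coarse block containing fine block (ix, iy) (assumption: 2x2 merge per level)."""
    return ix >> level, iy >> level


if __name__ == "__main__":
    pts = [[0.5, 0.5], [3.9, 1.0], [4.0, 2.0]]
    print(assign_to_blocks(pts, [0, 0], [4, 2], 2, 1).tolist())  # [0, 1, 1]
    print(lod_resolution(512, 2), lod_parent(5, 3, 1))  # 128 (2, 1)
```

The power-of-two choice keeps the parent lookup to a bit shift. The actual downsampling factor used by City-on-Web may differ.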

Unlike existing block-based methods, which must load the resources of all blocks simultaneously in order to render, our strategy lets each block be rendered independently, using its own textures in its own shader. _This novel strategy supports asynchronous resource loading and independent rendering, so it can be applied to other resource-independent settings, paving the way for further research and applications_. We are very pleased to see this strategy applied to other large-scale scene reconstruction scenarios, such as multi-GPU parallel or distributed NeRF training, and even Gaussian splatting.

The code has been tested on an NVIDIA A100.

git clone --recursive https://github.com/Totoro97/f2-nerf.git

cd merfstudio

We recommend using conda to manage dependencies. Make sure Conda is installed before proceeding.

conda create --name merfstudio -y python=3.8

conda activate merfstudio

Install PyTorch with CUDA (this repo has been tested with CUDA 11.8) and tiny-cuda-nn.

cuda-toolkit is required for building tiny-cuda-nn.

pip install torch==2.0.1+cu118 torchvision==0.15.2+cu118 --extra-index-url https://download.pytorch.org/whl/cu118

conda install -c "nvidia/label/cuda-11.8.0" cuda-toolkit

pip install ninja git+https://github.com/NVlabs/tiny-cuda-nn/#subdirectory=bindings/torch

Our code has been tested with nerfstudio==0.3.3. Newer versions of nerfstudio may cause issues. We plan to update the code for compatibility with the latest release.
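Before going further, you may want to confirm that PyTorch, torchvision, and the tiny-cuda-nn bindings are importable in the active environment. The helper below is a minimal sketch and is not part of this repository. It relies on `tinycudann`, which is the import name of the tiny-cuda-nn PyTorch bindings. `check_modules` is a hypothetical name.

```python
import importlib.util

# Top-level import names; "tinycudann" is the module provided by tiny-cuda-nn's torch bindings.
REQUIRED_MODULES = ("torch", "torchvision", "tinycudann")


def check_modules(modules=REQUIRED_MODULES):
    """Map each module name to True if it can be found on the current Python path."""
    return {name: importlib.util.find_spec(name) is not None for name in modules}


if __name__ == "__main__":
    for name, found in check_modules().items():
        print(f"{name:12s} {'found' if found else 'MISSING'}")
    if importlib.util.find_spec("torch") is not None:
        import torch

        # torch.version.cuda is the CUDA version PyTorch was built against (e.g. "11.8").
        print("torch", torch.__version__, "| CUDA build:", torch.version.cuda,
              "| GPU available:", torch.cuda.is_available())
```

`find_spec` only locates a module without importing it. A missing CUDA-backed extension therefore shows up as MISSING instead of raising an error.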

cd nerfstudio

pip install -e .

You can then install merf with the following commands:

cd ..

pip install -e .

Then install city-on-web. Note that city-on-web requires merf to be installed first.

cd block-merf

pip install -e .

Our data format requirements follow the instant-ngp convention.
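In the instant-ngp convention, `transforms.json` contains a `frames` list. Each entry has a `file_path` and a 4x4 camera-to-world `transform_matrix`. The loader below is a minimal sketch for inspecting such a file, not the repository's own loader. It reads only those two per-frame fields and ignores the intrinsics. The function names are hypothetical.

```python
import json

import numpy as np


def parse_transforms(meta):
    """Return [(file_path, 4x4 camera-to-world matrix), ...] from an instant-ngp style dict."""
    frames = []
    for frame in meta["frames"]:
        c2w = np.asarray(frame["transform_matrix"], dtype=float)
        if c2w.shape != (4, 4):
            raise ValueError(f"{frame['file_path']}: expected a 4x4 transform_matrix, got {c2w.shape}")
        frames.append((frame["file_path"], c2w))
    return frames


def load_transforms(path):
    """Read transforms.json from disk and parse its frames."""
    with open(path, "r", encoding="utf-8") as f:
        return parse_transforms(json.load(f))


def camera_centers(frames):
    """Camera positions are the translation column of each camera-to-world matrix."""
    return np.stack([c2w[:3, 3] for _, c2w in frames])
```

For example, `camera_centers(load_transforms("path/to/data/transforms.json"))` gives an (N, 3) array that you can use to check the extent of a capture before training.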

To download the mip-NeRF 360 dataset, visit the official page. Then use ns-process-data to generate the transforms.json file.

ns-process-data images --data path/to/data --skip-colmap

To download the Matrix City dataset, visit the official page. You can opt to download the "small city" dataset to test your algorithm. This dataset follows the instant-ngp convention, so no preprocessing is required.

We highly recommend using Metashape to obtain camera poses from multi-view images. Then, use their script to convert camera poses to the COLMAP convention. Alternatively, you can use COLMAP to obtain the camera poses. After obtaining the data in COLMAP format, use ns-process-data to generate the transforms.json file.

ns-process-data images --data path/to/data --skip-colmap

Our loaders expect the following dataset structure in the source path location:

<location>
|---images
|   |---<image 0>
|   |---<image 1>
|   |---...
|---sparse (optional)
|   |---0
|       |---cameras.bin
|       |---images.bin
|       |---points3D.bin
|---transforms.json
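A quick way to catch layout mistakes before training is to check for the entries listed above. The function below is a hypothetical helper and is not shipped with the repository. It treats `sparse/0` as optional, but if that folder exists it expects all three COLMAP binary files.

```python
from pathlib import Path


def check_dataset_layout(root):
    """Return a list of problems with the dataset layout (empty if it looks fine)."""
    root = Path(root)
    problems = []
    if not (root / "images").is_dir():
        problems.append("missing images/ directory")
    if not (root / "transforms.json").is_file():
        problems.append("missing transforms.json")
    sparse = root / "sparse" / "0"
    if sparse.exists():  # optional COLMAP model; if present, it should be complete
        for name in ("cameras.bin", "images.bin", "points3D.bin"):
            if not (sparse / name).is_file():
                problems.append(f"sparse/0 exists but {name} is missing")
    return problems
```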

Our implementation of MERF is primarily based on the official implementation.

To reconstruct a small scene, such as one from mip-NeRF 360, simply use:

ns-train merf --data path/to/data

To bake a reconstructed MERF scene, run the following command, passing the config file saved in the training output folder:

ns-baking --load-config path/to/output/config
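By default, nerfstudio writes each run's `config.yml` to a timestamped folder under `outputs/`. The helper below is a hypothetical convenience, not part of this repository. It finds the most recently modified one to pass to `--load-config`, and it assumes you kept the default output directory.

```python
from pathlib import Path


def latest_config(output_root="outputs"):
    """Return the most recently modified config.yml under output_root, or None."""
    root = Path(output_root)
    if not root.is_dir():
        return None
    configs = sorted(root.glob("**/config.yml"), key=lambda p: p.stat().st_mtime)
    return configs[-1] if configs else None


if __name__ == "__main__":
    cfg = latest_config()
    print(f"ns-baking --load-config {cfg}" if cfg else "no config.yml found under outputs/")
```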

Our results are compatible with the official MERF renderer. You can follow the official guidance and place the baking results in the webviewer folder.

cd webviewer

mkdir -p third_party

curl https://unpkg.com/three@0.113.1/build/three.js --output third_party/three.js

curl https://unpkg.com/three@0.113.1/examples/js/controls/OrbitControls.js --output third_party/OrbitControls.js

curl https://unpkg.com/three@0.113.1/examples/js/controls/PointerLockControls.js --output third_party/PointerLockControls.js

curl https://unpkg.com/png-js@1.0.0/zlib.js --output third_party/zlib.js

curl https://unpkg.com/png-js@1.0.0/png.js --output third_party/png.js

curl https://unpkg.com/stats-js@1.0.1/build/stats.min.js --output third_party/stats.min.js
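If `curl` is unavailable, the same six files can be fetched with Python's standard library. This is a minimal sketch with the same files and URLs as the commands above. The helper names are hypothetical, and it assumes you run it from the directory that contains `webviewer`.

```python
import urllib.request
from pathlib import Path

# Same files and URLs as the curl commands for the webviewer's third_party folder.
THIRD_PARTY = {
    "three.js": "https://unpkg.com/three@0.113.1/build/three.js",
    "OrbitControls.js": "https://unpkg.com/three@0.113.1/examples/js/controls/OrbitControls.js",
    "PointerLockControls.js": "https://unpkg.com/three@0.113.1/examples/js/controls/PointerLockControls.js",
    "zlib.js": "https://unpkg.com/png-js@1.0.0/zlib.js",
    "png.js": "https://unpkg.com/png-js@1.0.0/png.js",
    "stats.min.js": "https://unpkg.com/stats-js@1.0.1/build/stats.min.js",
}


def missing_files(webviewer_dir="webviewer"):
    """Names of third-party scripts not yet present in <webviewer_dir>/third_party."""
    third_party = Path(webviewer_dir) / "third_party"
    return [name for name in THIRD_PARTY if not (third_party / name).is_file()]


def fetch_missing(webviewer_dir="webviewer"):
    """Download only the scripts that are missing (requires network access)."""
    third_party = Path(webviewer_dir) / "third_party"
    third_party.mkdir(parents=True, exist_ok=True)
    for name in missing_files(webviewer_dir):
        urllib.request.urlretrieve(THIRD_PARTY[name], str(third_party / name))
```

Browsers usually block pages opened via `file://` from loading local assets. Serving the folder over HTTP, for example with `python -m http.server` run inside `webviewer`, is one simple option.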

Our large-scale web viewer is located in the largeviewer folder. Note that some parameters, such as the position of each block, must be specified for rendering; these can be derived automatically from the input data. We plan to update the code so that baking results are adapted to the real-time viewer automatically.
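As an illustration of deriving block positions from the input data, the sketch below computes per-block centers and sizes from the camera centers in `transforms.json`. It makes several assumptions. It treats z as the up axis and uses a uniform grid over the cameras' ground-plane bounding box. The output format (`index`, `center`, `size`) is hypothetical and does not reflect the parameter names `largeviewer` actually expects.

```python
import numpy as np


def block_layout(camera_centers, n_blocks_x, n_blocks_y, margin=0.0):
    """Uniform grid of blocks over the (x, y) bounding box of the cameras.

    Assumes z is up; `margin` pads the bounding box on every side.
    Returns a JSON-serializable list of {"index", "center", "size"} dicts.
    """
    xy = np.asarray(camera_centers, dtype=float)[:, :2]
    lo = xy.min(axis=0) - margin
    hi = xy.max(axis=0) + margin
    size = (hi - lo) / np.array([n_blocks_x, n_blocks_y], dtype=float)
    blocks = []
    for ix in range(n_blocks_x):
        for iy in range(n_blocks_y):
            center = lo + size * (np.array([ix, iy]) + 0.5)
            blocks.append({"index": [ix, iy], "center": center.tolist(), "size": size.tolist()})
    return blocks
```

For example, cameras at (0, 0, 5) and (4, 2, 5) split into a 2x1 grid give block centers (1, 1) and (3, 1), each 2 x 2 units. The resulting list can be dumped with `json.dumps` for a viewer to read.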

To reconstruct a large-scale scene, such as the block All scene in the Matrix City dataset, simply use:

ns-train block-merf --data path/to/data

To bake a reconstructed large-scale scene, run the following command, passing the config file saved in the training output folder:

ns-train block-baking --config path/to/config

If you use this repo or find the documentation useful for your research, please consider citing:

@inproceedings{song2024city,
  title={City-on-Web: Real-time Neural Rendering of Large-scale Scenes on the Web},
  author={Kaiwen Song and Xiaoyi Zeng and Chenqu Ren and Juyong Zhang},
  booktitle={European Conference on Computer Vision (ECCV)},
  year={2024}
}

This repository's code is based on nerfstudio and MERF. We are very grateful for their outstanding work.