by Lingxiao Yang, Risheng Liu, David Zhang, Lei Zhang at The Hong Kong Polytechnic University.

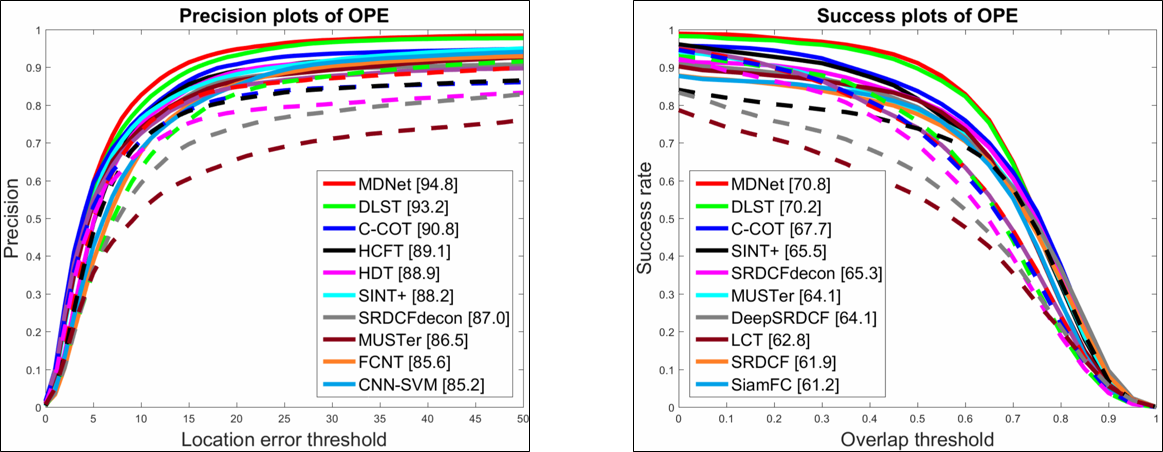

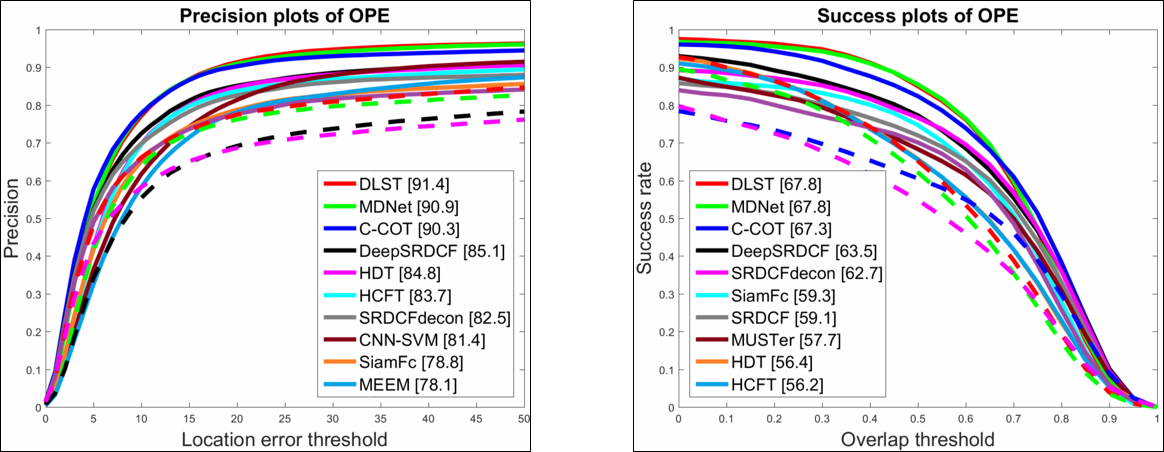

Deep Location-Specific Tracking (DLST) is a tracking framework based on deep convolutional networks that decouples the tracking problem into two sub-tasks: localization and classification. Localization is a preprocessing step that estimates the target location in the current frame. The localization output and the target position in the previous frames are both used to generate samples for classification. The classification network is built on 1x1 convolution and global average pooling to reduce overfitting during online learning. Without using any labeled tracking videos for fine-tuning, our tracker achieves competitive results on the OTB 50 & 100 and VOT 2016 datasets.
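As a rough illustration of the classification head described above, the following plain-MATLAB sketch applies a 1x1 convolution followed by global average pooling to a feature map. It is not the code from this repository: the sizes (`H`, `W`, `C`, `K`) and all variable names are assumptions chosen for the example, and the weights are random.

```matlab
% Sketch only: 1x1 convolution + global average pooling on a feature map.
% All sizes and names below are illustrative assumptions, not DLST settings.
rng(0);                                   % reproducible random inputs
H = 3; W = 3; C = 512; K = 2;             % spatial size, channels, classes (assumed)
feat    = randn(H, W, C, 'single');       % stand-in for a convolutional feature map
weights = randn(C, K, 'single');          % 1x1 filters: one C-vector per class
bias    = zeros(1, K, 'single');

% A 1x1 convolution is a per-pixel linear map over the channel dimension.
flat     = reshape(feat, H * W, C);                   % (H*W) x C
scores   = bsxfun(@plus, flat * weights, bias);       % (H*W) x K
scoreMap = reshape(scores, H, W, K);                  % H x W x K

% Global average pooling: average each class map over all spatial positions.
pooled = reshape(mean(mean(scoreMap, 1), 2), 1, K);   % 1 x K class scores
```

Because the head has only `C * K` weights plus `K` biases and no fully connected layer, it has far fewer parameters to fit online, which is the usual argument for why this design is less prone to overfitting.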

This code has been tested on 64-bit Windows 10 and Ubuntu with MATLAB 2015a/2016b.

Prerequisites

- MATLAB (2015a/2016b).
- MatConvNet (version 1.0-beta23 or above).
- For GPU support, a GPU with at least 2 GB of memory is needed.
- CUDA toolkit 7.5 or above (8.0) is required. cuDNN is optional.
- OpenCV 3.0 (3.1) and mexopencv are needed if you want faster speed.
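The MATLAB and GPU requirements above can be checked with a short script such as the one below. This is only a sketch: it assumes the Parallel Computing Toolbox is installed (needed for `gpuDeviceCount` and `gpuDevice`), and the variable name `atLeastR2015a` is made up for the example.

```matlab
% Sketch: check the MATLAB release and report GPU memory.
% MATLAB R2015a corresponds to version 8.5.
atLeastR2015a = ~verLessThan('matlab', '8.5');
if ~atLeastR2015a
    warning('This MATLAB is older than R2015a; the code was tested on R2015a/R2016b.');
end

% GPU queries need the Parallel Computing Toolbox (assumed to be installed).
if exist('gpuDeviceCount', 'file') && gpuDeviceCount > 0
    g = gpuDevice;                         % currently selected GPU
    fprintf('GPU: %s, %.1f GB total memory\n', g.Name, g.TotalMemory / 2^30);
else
    disp('No usable GPU detected; only CPU mode would be available.');
end
```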

How to run the Code

- Compile MatConvNet according to the instructions on its website.
- Compile OpenCV and mexopencv if you need faster speed. Otherwise, comment out line 98 in `utils/im_crop.m` and uncomment lines 93-94 to use the MATLAB `imresize` function (around 2x slower).
- Change the path to your local path in `setupDLST.m`, and the local tracker model path in `utils/getDefaultOpts.m` (`opts.model`).
- If you want to create your own tracker model, please see the details in `createDLST.m`. Currently, we only adopt the VGG-M model in our paper.
- For VOT2016 testing, please install the official VOT toolkit, and simply copy `DLST\VOT2016\wrapper\tracker_DLST.m` to your VOT workspace.
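For the first step, compiling MatConvNet from within MATLAB typically looks like the sketch below. The `matconvnetRoot` path and the `useGpu` flag are placeholders invented for this example; `vl_compilenn` and `vl_setupnn` are the build and setup functions shipped in MatConvNet's `matlab` folder. Consult the MatConvNet documentation for the CUDA and cuDNN options that match your system.

```matlab
% Sketch: build MatConvNet and add it to the MATLAB path.
% The path below is a placeholder; point it at your own MatConvNet checkout.
matconvnetRoot = fullfile('path', 'to', 'matconvnet');
addpath(fullfile(matconvnetRoot, 'matlab'));

useGpu = false;   % set to true if a CUDA toolkit and a supported GPU are available
if useGpu
    vl_compilenn('enableGpu', true);   % GPU build; cuDNN needs additional options
else
    vl_compilenn();                    % CPU-only build
end

vl_setupnn();     % adds the compiled MatConvNet functions to the path
```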

Running this code on the entire OTB100 and VOT2016 datasets is very time consuming. Usually it takes around 1 day for OTB100 testing (One-Pass) and 3 to 4 days for VOT2016 evaluation. You can simply download all pre-computed results from the following links.

| | DLST (Ours) | EBT | DDC | Staple | MLDF | SSAT | TCNN | C-COT |
|---|---|---|---|---|---|---|---|---|
| EAO | 0.343 | 0.291 | 0.293 | 0.295 | 0.311 | 0.321 | 0.325 | 0.331 |
| Accuracy | 0.55 | 0.44 | 0.53 | 0.54 | 0.48 | 0.57 | 0.54 | 0.52 |
| Failure rate | 0.83 | 0.90 | 1.23 | 1.35 | 0.83 | 1.04 | 0.96 | 0.85 |

If you find DLST useful in your research, please consider citing:

    @inproceedings{yang2017deep,
      title={Deep Location-Specific Tracking},
      author={Yang, Lingxiao and Liu, Risheng and Zhang, David and Zhang, Lei},
      booktitle={Proceedings of the 2017 ACM on Multimedia Conference},
      pages={1309--1317},
      year={2017},
      organization={ACM}
    }

This software is being made available for research purposes only.

We use or re-implement many functions from the MDNet and RCNN projects. Please check their licence files for details.