Home

- Team Info

- Vision Statement

- Features

- UI Sketches

- Domain Analysis

- Use-cases

- Architecture

- Machine Learning Analysis

- Current Iteration

- Next Iteration

- Long Term

- Resources and References

- Trevor Bonjour

- Srinivas Suresh Kumar

- Ankur Gupta

- Iskandar Atakhodjaev

Create a software package that provides services for people with hearing and speech impairments. The core service will be the conversion of American Sign Language (ASL), read using a Leap Motion, to text/audio. By applying machine learning classification algorithms to data obtained from a 3D Leap Motion sensor, we plan to create an ASL recognition system with instant text and audio output. For the purpose of the project, we wish to incorporate the 26 English letters. On top of the core service, the package may include:

- Assistance in learning ASL using games/quizzes/cue-cards.

- Support for simple words and phrases

- Capability to add and train on user defined gestures

- Additional training service with feedback stating how far/close the user is from the expected hand gesture

- User can login/sign-up

- User can download the software as a binary package

- Upon running the software, user shall see the welcome page with username and password text fields

- User can login

- User can edit his/her account settings

- User can delete his/her profile

- User can sign out of the software

- User can see a schematic picture of their gesture on the screen

- User can switch computers and their settings will be preserved

- Computations are done on the user's machine (locally) for fast prediction turnaround time

- User should calibrate the sensor once to adjust it to user's hand size

- Prediction Models can be updated over the internet for better experience (OTA)

- The software can be used offline, but needs internet access to train new gestures and receive updated models

- Live textual and audio responses are provided for all predictions to a gesture performed by the user

- Software allows ASL experts to make additions to the global ASL model

- (Extended) User-defined gestures

- (Extended) Tutorial/Interactive Quiz for people to quickly learn ASL

- (Extended) Prediction model can be trained to give more complex output, e.g. basic phrases, words

- (Extended) Physical ASL training provided using Leap Motion

### Website: Login, Signup, Download

Home

signup-user

user-expert-login

expert_signup

Expert-User Logged-in Screen

thankyou

### Native Binary UI

User Login Window

Leap NOT Connected

Spacebar to Start Recording

gesture-mode-binary

[gesture-mode-binary](../../wikiresources/snapshots/bin-gesture.PNG)

profile-binary

expert_login_binary

expert-training-binary

UML for UI

UML for Native Backend

UML for Server Backend

- User accesses the website

- User is presented with an introduction, a signup button and a login button

- User clicks on the Signup button on the website

- Website signup form is displayed; user fills out:

- Username

- Password

- EmailID

- Display Name

- User hits Submit

- Verification Email is sent to the user's email

- User clicks on the verification link in their inbox

- User is served an 'Email Verified' webpage

- User is now served a link to 'asla-user-application-binary' for downloading

- User is redirected to a Thank You for Downloading page

- User runs the downloaded asla-user-application-binary

- User fills in the username and password chosen while signing up

- Clicks on LogIn Button

- The application checks if user's email was verified

- If the email is verified, user can now use the application

- The Global Model is updated from the server

- User Custom Model is synced

- User's info is downloaded and synced

- User is taken to Gesture Mode by default

- User Logs In

- User clicks on the 'Profile'

- Application displays Profile Form

- User's profile is fetched from the server and pre-populated in the form

- User can Edit profile data, except username

- User clicks on 'Apply'

- Profile data is sent to the Server

- User logs in

- User selects one of the following modes:

- Gesture Mode (Default)

- Quiz Mode (Extended)

- Custom Gesture Mode (Extended)

- Learn-ASL Mode (Extended)

- User logs in

- User is asked to connect the Leap Motion sensor if not connected

- If logged in for the first time, calibration of sensor is initiated

- A skeletal rendering of the user's hand is displayed on the screen

- User hits spacebar to start the recognition process

- User makes the gesture in the sensor's view

- If recognized:

- Label is displayed on the screen

- Audio output

- If not recognized:

- User is asked to make the gesture again

- User removes the hand from the sensor's view

- User proceeds to make remaining gestures

- User hits spacebar to stop the recognition process

- User places the hand in the sensor's view and follows the directions

- If calibration is not successful, user needs to repeat the process

- If calibration is successful, user proceeds to recognition process

- User Logs in

- Application checks the latest Global Model from Server

- If Global Model has changed, update the local copy of Global Model

- User selects mode

- User logs in to the website to download the binary

- User installs the binary

- User logs in to their account from the native application

- All data is synced from the server

- Expert keys in the authentication token (sent via email), which prompts a download for the expert binary

- Expert keys in the authentication key to log in

- Expert logs in

- Expert is asked to connect the Leap Motion sensor if not connected

- If logged in for the first time, calibration of sensor is initiated

- Expert hits spacebar to start training

- Expert chooses label to train on, training process consists of:

- Expert makes a gesture in the sensor's view

- Expert holds the gesture for a predefined time

- Expert removes the hand from the sensor's view

- Expert repeats the three steps above a predefined number of times

- Expert can proceed to add another gesture

- Expert hits spacebar to stop training

- This data containing label, gesture data and authentication token is sent to the server

- Server authenticates expert token

- If the authentication token is invalid:

- Data packet is rejected

- Notification is sent to expert

- If the authentication token is valid:

- Server adds the data from expert to global data

- Success notification is sent to expert

- Server updates the Global Model

- Expert places the hand in the sensor's view and follows the directions

- If calibration is not successful, expert needs to repeat the process

- If calibration is successful, expert proceeds to training process
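The server-side part of the expert training use case (authenticate the token, then reject or accept the data packet) can be sketched as follows. This is only an illustration under stated assumptions: `GlobalStore`, `handle_expert_submission`, and the packet keys `token`, `label`, and `samples` are hypothetical names, the store is kept in memory rather than in a database, and retraining is reduced to a version counter.

```python
from dataclasses import dataclass, field


@dataclass
class GlobalStore:
    """In-memory stand-in for the server's global training data (hypothetical)."""
    valid_tokens: set
    rows: list = field(default_factory=list)
    model_version: int = 0


def handle_expert_submission(store, packet):
    """Validate the expert token, then reject or accept the data packet."""
    if packet.get("token") not in store.valid_tokens:
        # Invalid token: the packet is rejected and the expert is notified.
        return {"status": "rejected", "message": "invalid authentication token"}

    # Valid token: add the labelled gesture data to the global data.
    for sample in packet["samples"]:
        store.rows.append((packet["label"], sample))

    # Placeholder for updating the Global Model: only a counter is bumped here.
    store.model_version += 1
    return {"status": "accepted", "model_version": store.model_version}
```

The returned dictionary plays the role of the notification sent back to the expert in both branches.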

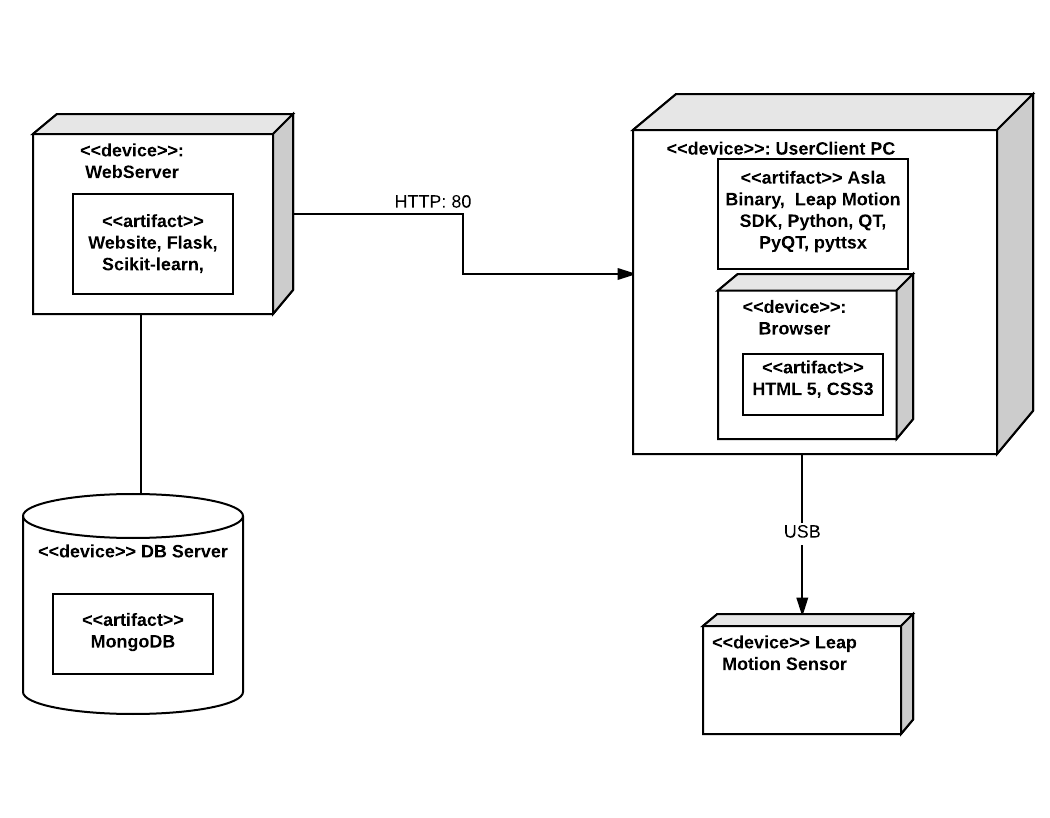

Our project predominantly uses HTTP for communication between the client binary and the server. The potential use cases include updating the global models and training the custom gesture model. These requests are HTTP POST requests that include a JSON payload containing authentication tokens and either raw Leap Motion data or a trained model.

e.g.:

    {
      "user": "Batman",
      "userid": "80085",
      "model": [a1, a2, a3]
    }
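A minimal sketch of how such a request could be assembled on the client, using only the Python standard library. The function name `build_model_request` and the endpoint URL are assumptions for illustration; the actual server routes are not given on this page, and the request is built but not sent so the example runs offline.

```python
import json
import urllib.request


def build_model_request(url, user, userid, model):
    """Build (but do not send) an HTTP POST request carrying a JSON payload."""
    payload = {"user": user, "userid": userid, "model": model}
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical endpoint; the real server URL is an assumption.
request = build_model_request(
    "http://localhost:5000/model", "Batman", "80085", [0.1, 0.2, 0.3]
)
# urllib.request.urlopen(request) would send it; omitted to keep the sketch offline.
```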

The user interacts with a UI built with Qt and driven by Python. The binary includes the Leap Motion libraries for Python and JavaScript, which handle communication with the Leap Motion device. The JavaScript Leap Motion library, in conjunction with Three.js, powers a visualizer that renders a live skeletal view of the user's ongoing interaction with the Leap Motion. The skeletal structure of the hand is rendered by performing calculations on the orientation of the hand and applying the Phong shading model. The Python portion of the binary is responsible for (i) exchanging JSON data over HTTP as outlined in the paragraph above, (ii) interfacing with the Leap Motion to capture data for gesture prediction, and (iii) predicting labels for the performed gestures by processing live Leap Motion data with numpy and scipy and passing it to the model.

The server persists the raw data it receives in a MongoDB database. This data is then parsed by the machine learning toolkit (scikit-learn) with the help of data processing libraries such as numpy and scipy, and a model is created. The model is persisted in the database and sent out to users so that they have access to the latest gestures. All of this goes through the communication protocol outlined in the first paragraph of this section.

All website communication also happens via the methods outlined in the first paragraph of this section.
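One way the client could decide whether to fetch a new model is to compare timestamps, as sketched below. The dictionary keys `timestamp` and `blob`, and the functions `needs_update` and `sync_model`, are hypothetical names chosen for illustration; the real model file format and transport are not specified here.

```python
def needs_update(local_timestamp, server_timestamp):
    """Return True when the server's model is newer than the local copy."""
    if local_timestamp is None:
        # No local model yet, so the first download is always needed.
        return True
    return server_timestamp > local_timestamp


def sync_model(local, remote_timestamp, download):
    """Replace the local model only if the server copy is newer.

    `local` is a dict with the assumed keys "timestamp" and "blob";
    `download` is a caller-supplied function that fetches the model bytes.
    """
    if needs_update(local.get("timestamp"), remote_timestamp):
        return {"timestamp": remote_timestamp, "blob": download()}
    return local
```

Passing the download step in as a function means the model is only transferred when the timestamp comparison says it is stale.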

- Native UI Toolkits: Qt, PyQt, Three.js for skeletal visualizer

- Website UI Toolkits: Twitter Bootstrap, JavaScript, HTML 5, CSS 3

- Machine Learning Toolkits: scikit-learn, scipy, numpy

- Device Interface Library: [Leap Motion SDK for Python and JavaScript](https://developer.leapmotion.com/documentation/python/api/Leap_Classes.html)

- Communication Protocols and Libraries: JSON - support native to Python, HTTP - Beautiful Soup

- Text to Speech Library: [pyttsx](https://pypi.python.org/pypi/pyttsx)

- Web Server: [Flask](http://flask.pocoo.org/)

- Database: [MongoDB](https://www.mongodb.com/)

The data for training was collected from all of the team members. Each member used the expert module to collect the data.

Data collection steps:

1. Choose a letter

2. Make the respective sign in front of the Leap Motion

   2.1 If satisfied by the visualizer's feedback, continue holding the sign for 5 seconds

   2.2 If not, remove the hand from the view of the Leap Motion. This flushes the data collected for this iteration.

3. Repeat the process for a fixed number of iterations

In total 2080 data points were collected, with each letter having 80 rows (26 letters × 80 rows).
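The hold-or-flush logic of the collection steps can be sketched as below. This is an illustration, not the expert module's actual code: frames are represented by arbitrary values, `None` stands for "no hand in view", and the 5-second hold is expressed as an assumed number of consecutive frames, `hold_frames`.

```python
def collect_samples(frames, hold_frames):
    """Group a stream of frames into samples, one per completed hold.

    `frames` is an iterable where `None` means no hand is visible and any
    other value is one frame of (hypothetical) feature data. A sample is
    kept only when the hand stays visible for `hold_frames` consecutive
    frames; removing the hand earlier flushes the partial buffer.
    """
    samples, buffer, done = [], [], False
    for frame in frames:
        if frame is None:
            # Hand removed: flush the current attempt and re-arm.
            buffer, done = [], False
        elif not done:
            buffer.append(frame)
            if len(buffer) == hold_frames:
                samples.append(buffer)
                # Hold completed: ignore frames until the hand leaves the view.
                buffer, done = [], True
    return samples
```

For example, with `hold_frames=3` the stream `[1, 2, None, 1, 2, 3, 4, None, 5, 6, 7]` discards the first attempt and keeps two samples.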

The following Machine Learning algorithms were used:

- k-Nearest Neighbor

- Linear SVM

- Decision Trees

- Random Forest

We tried several other models, but their performance was not on par with these.

8.3.1 Leave One Group Out Cross-Validation

For model selection, we used Leave One Group Out cross-validation accuracy as the driving factor. Each group corresponds to one team member's data. The model was trained on data from three members and tested on the fourth member's data. This was repeated with each of the four members' data as the test set, and the average cross-validation accuracy was calculated.
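The splitting scheme can be sketched in plain Python as follows. scikit-learn provides this as `sklearn.model_selection.LeaveOneGroupOut`; the version below is a dependency-free illustration, and the names `leave_one_group_out`, `logo_accuracy`, `fit`, and `predict` are placeholders rather than the project's actual code.

```python
def leave_one_group_out(groups):
    """Yield (held_out_group, train_indices, test_indices) for each group."""
    for held_out in sorted(set(groups)):
        train = [i for i, g in enumerate(groups) if g != held_out]
        test = [i for i, g in enumerate(groups) if g == held_out]
        yield held_out, train, test


def logo_accuracy(X, y, groups, fit, predict):
    """Average accuracy over folds, holding out one group per fold.

    `fit(X_train, y_train)` returns a model and `predict(model, X_test)`
    returns predicted labels; both are supplied by the caller.
    """
    scores = []
    for _, train, test in leave_one_group_out(groups):
        model = fit([X[i] for i in train], [y[i] for i in train])
        predicted = predict(model, [X[i] for i in test])
        correct = sum(p == y[i] for p, i in zip(predicted, test))
        scores.append(correct / len(test))
    return sum(scores) / len(scores)
```

With four team members as groups, this produces four folds, each testing on data from a person the model has not seen during training.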

8.3.2 Parameter Tuning

Parameter tuning was performed using grid search cross-validation over a range of parameter values. The parameter values that gave the lowest cross-validation error were then used for model selection.

- k-Nearest Neighbor

- Random Forest

- Linear SVM

- Decision Trees
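The idea behind the grid search described in this section can be sketched as an exhaustive loop over parameter combinations. scikit-learn offers this as `sklearn.model_selection.GridSearchCV`; the plain-Python version below is only an illustration, and `grid_search`, `param_grid`, and `cv_error` are hypothetical names.

```python
from itertools import product


def grid_search(param_grid, cv_error):
    """Return the parameter combination with the lowest cross-validation error.

    `param_grid` maps parameter names to candidate values; `cv_error(params)`
    is a caller-supplied function returning the error for one combination.
    """
    names = sorted(param_grid)
    best_params, best_error = None, float("inf")
    for values in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        error = cv_error(params)
        if error < best_error:
            best_params, best_error = params, error
    return best_params, best_error
```

In practice `cv_error` would train the classifier with the given parameters and return one minus its cross-validation accuracy.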

8.3.3 Model Comparison

The best accuracy (~84%) was obtained with a Random Forest model. However, we went with the Linear SVM classifier, mainly because of its stability and its shorter training time compared to Random Forest. The bar plot below shows the comparison based on cross-validation accuracy.

<img src="https://github.com/ankurgupta7/asla-pub/blob/master/ml_analysis/plot/class_comp.png" width="800">

The final model used for prediction was trained on all 2080 data points.

The following table gives the F1 score for each letter:

| Letter | F1 Score | Letter | F1 Score |
|---|---|---|---|
| A | 0.91 | N | 0.38 |
| B | 0.96 | O | 0.34 |
| C | 0.77 | P | 0.94 |
| D | 0.93 | Q | 1.00 |
| E | 0.48 | R | 0.76 |
| F | 1.00 | S | 0.64 |
| G | 0.99 | T | 0.36 |
| H | 0.99 | U | 0.60 |
| I | 0.99 | V | 0.86 |
| J | 0.94 | W | 0.99 |
| K | 0.89 | X | 0.92 |
| L | 0.99 | Y | 1.00 |
| M | 0.20 | Z | 0.95 |

The scores are high for most signs that are easily distinguished, while signs that resemble each other score lower. This is a limitation of the Leap Motion controller, which has difficulty distinguishing between similar signs such as 'M', 'N' and 'T'.
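For reference, a per-letter F1 score combines precision and recall as F1 = 2·TP / (2·TP + FP + FN). The following sketch computes it from true and predicted labels in plain Python; `f1_per_label` is an illustrative helper, not the project's analysis code.

```python
def f1_per_label(y_true, y_pred):
    """Compute the F1 score of each label from true and predicted labels."""
    scores = {}
    for label in sorted(set(y_true) | set(y_pred)):
        tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
        fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        # F1 = 2*TP / (2*TP + FP + FN); the guard avoids division by zero.
        scores[label] = 2 * tp / denom if denom else 0.0
    return scores
```

A letter that is often mistaken for a similar sign accumulates false negatives, and the sign it is mistaken for accumulates false positives, so both scores drop together.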

Confusion matrix for specific letters with low accuracy using Linear SVM

| Letter | Classified as |
|---|---|
| E | E(0.60), O(0.30) |
| M | M(0.15), N(0.50), S(0.25), O(0.10) |
| N | M(0.20), N(0.65), T(0.10), O(0.05) |
| O | E(0.40), N(0.10), O(0.50) |
| T | M(0.10), N(0.50), T(0.40) |
| U | R(0.30), U(0.70) |
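Rows like these can be produced by normalizing each row of a confusion matrix by the number of samples of the true letter. A minimal sketch, assuming lists of true and predicted labels (`confusion_rows` is a hypothetical helper name):

```python
from collections import Counter, defaultdict


def confusion_rows(y_true, y_pred):
    """Return, per true label, the fraction of samples given each predicted label."""
    counts = defaultdict(Counter)
    for truth, predicted in zip(y_true, y_pred):
        counts[truth][predicted] += 1
    return {
        truth: {label: n / sum(row.values()) for label, n in row.items()}
        for truth, row in counts.items()
    }
```

Each inner dictionary sums to 1, so a value such as `R: 0.3` under `U` reads as "30% of the U samples were classified as R".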

Signs with low scores

| Group 1 | Group 2 | Group 3 |
|---|---|---|
| <img src="https://github.com/ankurgupta7/asla-pub/blob/master/wikiresources/signs/group1.png" width="170"> | <img src="https://github.com/ankurgupta7/asla-pub/blob/master/wikiresources/signs/group2.png" width="100"> | <img src="https://github.com/ankurgupta7/asla-pub/blob/master/wikiresources/signs/group3.png" width="100"> |

All the code related to the analysis is placed here.

- Fixed the thread-related bugs to show correct messages in Qt windows

- The model files with time stamps are fetched and updated correctly

- Full ML analysis was carried out and presented. The accuracy of the various classification algorithms was calculated along with the respective cross-validation curves (see Machine Learning Analysis)

- Use cases of the app were tested and confirmed from both the user and the expert standpoint

- Implement the 'User defined signs' feature

- Collect more data from 'ASL experts'

- Store the model file securely; it is currently a pickle file, which is prone to security concerns

- More stringent testing and fixing of minor bugs

- Make the application cross-platform; it currently runs only on Linux

- Implement for words/phrases

- Incorporate the use of a web camera along with the leap motion controller