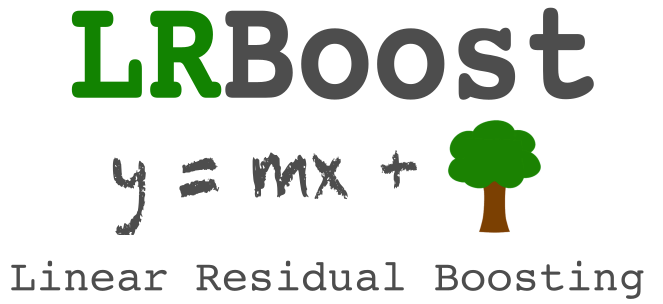

lrboost is a scikit-learn compatible package for linear residual boosting. lrboost first fits a linear estimator to remove any linear trends from the data, and then fits a separate non-linear estimator to model the remaining non-linear trends. We find that the best use cases are extrapolation tasks and data with both linear and non-linear components. Not every modeling task will benefit from lrboost, but we use it in our own work and wanted to share something that makes the approach easy to use.
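The two-stage idea can be sketched with plain scikit-learn estimators. This is a minimal illustration and not lrboost's actual implementation: the synthetic data and the choice of `LinearRegression` and `RandomForestRegressor` are assumptions made for the example.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.linear_model import LinearRegression

# Synthetic data (an assumption for illustration): a linear trend plus a sine wave.
rng = np.random.default_rng(0)
X = rng.uniform(-3, 3, size=(300, 1))
y = 2.0 * X[:, 0] + np.sin(3.0 * X[:, 0]) + rng.normal(scale=0.1, size=300)

# Step 1: the primary (linear) model captures the linear trend.
primary = LinearRegression().fit(X, y)
primary_pred = primary.predict(X)

# Step 2: the secondary (non-linear) model is fit on what the linear model missed.
residuals = y - primary_pred
secondary = RandomForestRegressor(n_estimators=100, random_state=0).fit(X, residuals)
secondary_pred = secondary.predict(X)

# Step 3: the final prediction is the sum of the two parts.
final_pred = primary_pred + secondary_pred

# Compare in-sample R2 of the linear model alone with the combined prediction.
r2_primary = primary.score(X, y)
r2_final = 1.0 - np.sum((y - final_pred) ** 2) / np.sum((y - y.mean()) ** 2)
print(f"linear only R2: {r2_primary:.3f}")
print(f"linear + residual model R2: {r2_final:.3f}")
```

Both scores here are computed on the training data, so they show how the two stages combine rather than how well the model generalizes.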

For a stable version, install using pip:

    pip install lrboost

lrboost was inspired by 'Regression-Enhanced Random Forests' by Haozhe Zhang, Dan Nettleton, and Zhengyuan Zhu. An excellent PyData talk by Gabby Shklovsky explaining the intuition underlying the approach may also be found here: 'Random Forest Best Practices for the Business World'.

- `LRBoostRegressor` can be used like any other sklearn estimator and is built off a sklearn template.
- `predict(X)` returns an array-like of final predictions.
- Adding `predict(X, detail=True)` returns a dictionary with primary, secondary, and final predictions.

    from sklearn.datasets import load_diabetes
    from lrboost import LRBoostRegressor

    X, y = load_diabetes(return_X_y=True)
    lrb = LRBoostRegressor().fit(X, y)
    predictions = lrb.predict(X)
    detailed_predictions = lrb.predict(X, detail=True)

    print(lrb.primary_model.score(X, y))  # R2
    print(lrb.score(X, y))  # R2

    >>> 0.512
    >>> 0.933