
AKS Hybrid Small topology 1.3 AHR Manual

What to expect:

- detailed instructions;

- no steps skipped or redirected;

- no GUI for actionable tasks, for better scriptability/automation (the GUI is fine for checks and validations);

- interim validation steps;

- tidying up afterwards (deleting all created resources).

Duration: 60m

Prerequisites:

- an Azure subscription and a resource group;

- a GCP project.

Both clouds provide a browser-based shell. You can run the installation from either one: in Azure Cloud Shell you can set up gcloud, and in GCP Cloud Shell you can install az.

Both shells are free resources that are recycled after an idle timeout, and only the home directory (~) is persisted. As a result, the only way to set up gcloud in Azure Cloud Shell, or az in GCP Cloud Shell, is to install it into your home directory.

Both shells have tmux preinstalled, but it does not help with long-running operations, because the shell VM itself has such a short time-to-live.

Azure Cloud Shell: 20 min idle timeout

GCP Cloud Shell: 60 min idle timeout

That said, GCP Cloud Shell's one-hour idle timeout makes it practical for most situations without requiring you to set up a bastion host.

The timeout also means you should keep your environment variables in configuration files, so that you can restore your session state after a reconnect. That is what we are going to do.
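The following is a minimal sketch of one way to persist session state, assuming a Bash login shell in Cloud Shell. The helper `ensure_line` is a hypothetical name and is not part of AHR. It appends a line to a file such as `~/.bashrc` only if that exact line is not already there. As a result, re-running your setup never duplicates entries, and a fresh shell re-creates your variables after a timeout.

```shell
# Hypothetical helper: append LINE to FILE unless FILE already contains
# exactly that line, so re-running the setup never duplicates entries.
ensure_line() {
  local file="$1" line="$2"
  touch "$file"
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Example usage (illustrative):
# ensure_line "$HOME/.bashrc" 'export HYBRID_HOME=~/apigee-hybrid-install'
```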

?. Open a GCP Cloud Shell in your browser

https://ssh.cloud.google.com/

?. Execute az installation script from https://docs.microsoft.com/en-us/cli/azure/install-azure-cli-linux

Accept the defaults (install directory, executable directory, and permission to modify your .bashrc profile).

curl -L https://aka.ms/InstallAzureCli | bash

NOTE: az completion is configured automatically. Still, make a note of the completion file location: ~/lib/azure-cli/az.completion.

?. Either re-open the shell or start another bash instance to activate the .bashrc changes.

bash

?. Log in to your Azure account; authenticate using the link and code that are displayed.

az login

?. Test your connection

az account show

If you logged in successfully, az account show prints your account details as JSON.

Let's keep everything nice and tidy: we are going to organize all dependencies and configuration files in a single hybrid install directory.

?. Configure the hybrid install environment location

export HYBRID_HOME=~/apigee-hybrid-install

?. Create $HYBRID_HOME directory, if it does not exist.

mkdir -p $HYBRID_HOME

?. Change to the hybrid install home directory, if you are not already there

cd $HYBRID_HOME

?. Clone the ahr git repository

git clone https://github.com/apigee/ahr.git

?. Define the AHR_HOME variable and add its bin directory to the PATH

export AHR_HOME=$HYBRID_HOME/ahr

export PATH=$AHR_HOME/bin:$PATH
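You can check that the AHR tools, and the other CLIs used below, are now on your PATH. Here is a small sketch: `require_cmds` is a hypothetical helper that relies only on the standard `command -v` builtin.

```shell
# Hypothetical helper: report required commands that are not on PATH and
# return non-zero if any are missing.
require_cmds() {
  local missing=0 cmd
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "missing: $cmd" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage:
# require_cmds az gcloud kubectl ahr-verify-ctl ahr-cluster-ctl ahr-runtime-ctl ahr-sa-ctl
```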

?. Define the hybrid configuration file name

export HYBRID_ENV=$HYBRID_HOME/hybrid-1.3.env

?. Use the provided example configuration for a small-footprint hybrid runtime cluster.

cp $AHR_HOME/examples/hybrid-sz-s-1.3.sh $HYBRID_ENV

?. Inspect the $HYBRID_ENV configuration file.

vi $HYBRID_ENV

Observe:

- the sourced ahr-lib.sh file, which contains useful functions such as token() and is required to correctly generate the names of the ASM and apigeectl distributions;

- we control the versions of cert-manager, ASM, Kubernetes and Apigee hybrid here;

- the example contains GCP project variables; we are going to add Azure variables as well;

- cluster parameters are defined, including a cluster template and a name for a cluster config file, which are used to create a cluster on GKE. We are going to use the az utility to create an AKS cluster instead;

- the last section defines default values describing hybrid properties, as well as the HYBRID_CONFIG variable that configures a hybrid configuration file;

- the RUNTIME_IP variable is commented out, as it is treated differently depending on your circumstances.

?. Right after the GCP Project variable group, add environment variables that describe the following Azure project properties:

#

# Azure Project

#

export RESOURCE_GROUP=yuriyl

export AZ_REGION=westeurope

export AZ_VNET=$RESOURCE_GROUP-vnet-$AZ_REGION

export AZ_VNET_SUBNET=$RESOURCE_GROUP-vnet-$AZ_REGION-subnet

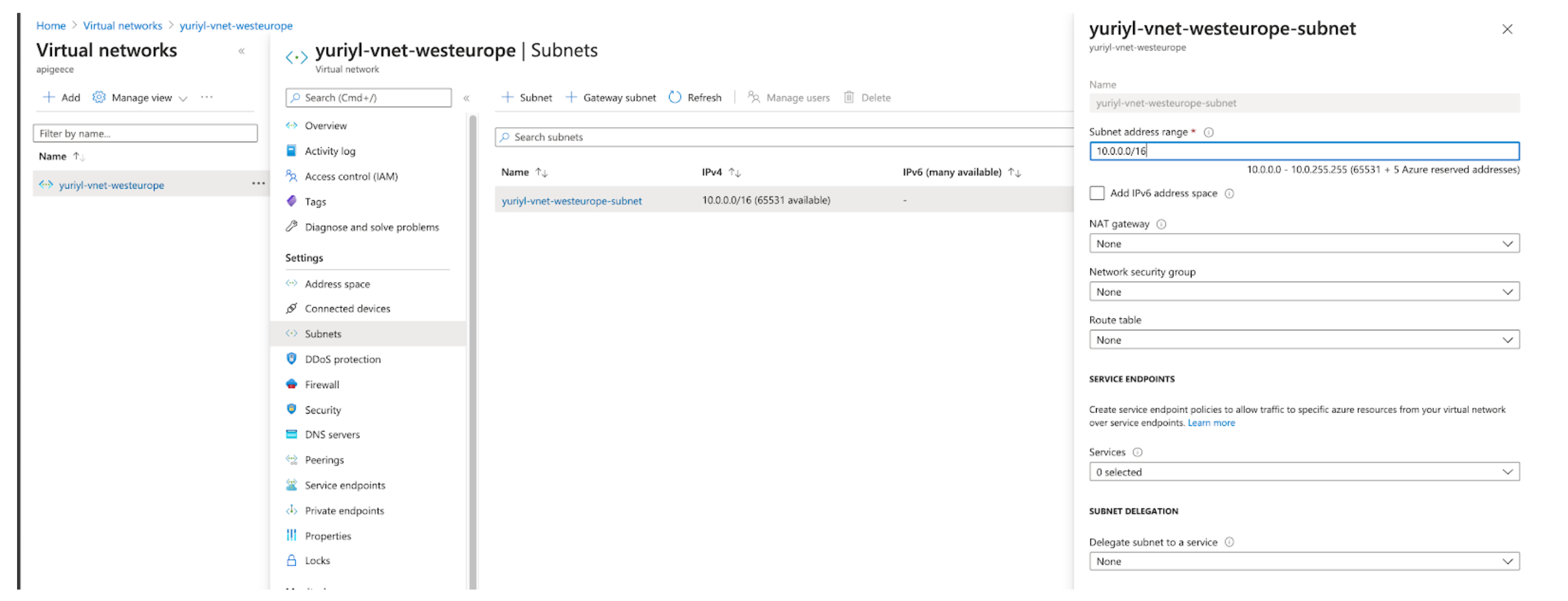

?. Define the network layout.

In the region AZ_REGION where we create our AKS cluster, we need a virtual network (VNet). We will define a subnet in it for the cluster nodes. Because we are going to use Azure CNI, we also need to allocate a separate address range for Kubernetes services, as well as a Docker bridge address pool and a DNS service IP.

These address prefixes, in CIDR notation, are:

For the VNet create command:

- --address-prefixes $AZ_VNET_CIDR: the VNet address space;

- --subnet-prefix $AZ_VNET_SUBNET_CIDR: the subnet for cluster nodes.

For the AKS create command:

- --service-cidr $AKS_SERVICE_CIDR: a CIDR notation IP range from which to assign service cluster IPs;

- --docker-bridge-address $AKS_DOCKER_CIDR: a specific IP address and netmask for the Docker bridge, in standard CIDR notation;

- --dns-service-ip $AKS_DNS_SERVICE_IP: an IP address assigned to the Kubernetes DNS service.

Of course, with /14 (262,144 addresses) and /16 (65,536 addresses) masks we are very generous, not to say wasteful. We can afford it, though, and more importantly, these values are kept in variables, so you can change them to suit your needs.

- AZ_VNET_CIDR 10.0.0.0/14: IP range 10.0.0.0 - 10.3.255.255

- AZ_VNET_SUBNET_CIDR 10.0.0.0/16: IP range 10.0.0.0 - 10.0.255.255

- AKS_SERVICE_CIDR 10.1.0.0/16: IP range 10.1.0.0 - 10.1.255.255
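These ranges follow from simple bit arithmetic. A /n prefix leaves 32 - n host bits, so the block holds 2^(32-n) addresses. The following is a small shell sketch that reproduces the ranges; the helper names are hypothetical and use only POSIX shell arithmetic.

```shell
# Hypothetical helper: convert a dotted-quad IPv4 address (no leading zeros)
# to a 32-bit integer.
ip_to_int() {
  local old_ifs="$IFS"
  IFS=.
  set -- $1            # split "a.b.c.d" into $1..$4
  IFS="$old_ifs"
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Hypothetical helper: convert a 32-bit integer back to dotted-quad notation.
int_to_ip() {
  echo "$(( ($1 >> 24) & 255 )).$(( ($1 >> 16) & 255 )).$(( ($1 >> 8) & 255 )).$(( $1 & 255 ))"
}

# Hypothetical helper: print the first and last address of a CIDR block and its size.
cidr_range() {
  local bits="${1#*/}"
  local size="$(( 1 << (32 - bits) ))"
  local start="$(( $(ip_to_int "${1%/*}") & ~(size - 1) ))"
  echo "$(int_to_ip "$start") - $(int_to_ip "$(( start + size - 1 ))") ($size addresses)"
}

cidr_range 10.0.0.0/14   # 10.0.0.0 - 10.3.255.255 (262144 addresses)
cidr_range 10.0.0.0/16   # 10.0.0.0 - 10.0.255.255 (65536 addresses)
cidr_range 10.1.0.0/16   # 10.1.0.0 - 10.1.255.255 (65536 addresses)
```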

?. Add network definition variables to the HYBRID_ENV file

# network layout

export AZ_VNET_CIDR=10.0.0.0/14

export AZ_VNET_SUBNET_CIDR=10.0.0.0/16

export AKS_SERVICE_CIDR=10.1.0.0/16

export AKS_DNS_SERVICE_IP=10.1.0.10

export AKS_DOCKER_CIDR=172.17.0.1/16
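Before creating anything, you may want to sanity-check the layout. The sketch below uses hypothetical helper names and plain shell arithmetic. It tests three things:

- the node subnet sits inside the VNet;
- the DNS service IP sits inside the service CIDR;
- the service CIDR does not overlap the node subnet.

It checks only these relationships and does not replicate every rule Azure enforces.

```shell
# Hypothetical helper: dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local old_ifs="$IFS"
  IFS=.
  set -- $1
  IFS="$old_ifs"
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Hypothetical helper: print "first last" (as integers) for a CIDR block;
# a bare IP address is treated as /32.
cidr_bounds() {
  local cidr="$1"
  case $cidr in */*) ;; *) cidr="$cidr/32" ;; esac
  local bits="${cidr#*/}"
  local size="$(( 1 << (32 - bits) ))"
  local start="$(( $(ip_to_int "${cidr%/*}") & ~(size - 1) ))"
  echo "$start $(( start + size - 1 ))"
}

# Succeed if block $1 lies entirely inside block $2.
cidr_within() {
  set -- $(cidr_bounds "$1") $(cidr_bounds "$2")
  [ "$1" -ge "$3" ] && [ "$2" -le "$4" ]
}

# Succeed if blocks $1 and $2 share at least one address.
cidr_overlap() {
  set -- $(cidr_bounds "$1") $(cidr_bounds "$2")
  [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
}

# Usage with the variables defined above:
# cidr_within "$AZ_VNET_SUBNET_CIDR" "$AZ_VNET_CIDR" || echo "node subnet is outside the VNet"
# cidr_within "$AKS_DNS_SERVICE_IP" "$AKS_SERVICE_CIDR" || echo "DNS IP is outside the service CIDR"
# cidr_overlap "$AKS_SERVICE_CIDR" "$AZ_VNET_SUBNET_CIDR" && echo "service CIDR overlaps the node subnet"
```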

We will set the RUNTIME_IP address after we provision a static IP address. We are going to use the default values for all other variables.

?. Define the PROJECT variable

export PROJECT=<gcp-project-id>

NOTE: For a Qwiklabs account, you can use:

export PROJECT=$(gcloud projects list|grep qwiklabs-gcp|awk '{print $1}')

?. Set it as the gcloud config default project

gcloud config set project $PROJECT
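$HYBRID_ENV relies on PROJECT and AHR_HOME being set, and a Cloud Shell timeout clears them. A quick guard can catch this before you source the file. `check_vars` below is a hypothetical helper.

```shell
# Hypothetical helper: fail with a message if any named variable is unset or empty.
check_vars() {
  local name value rc=0
  for name in "$@"; do
    eval "value=\${$name:-}"     # indirect lookup of the variable called $name
    if [ -z "$value" ]; then
      echo "variable $name is not set" >&2
      rc=1
    fi
  done
  return "$rc"
}

# Usage:
# check_vars PROJECT AHR_HOME && source "$HYBRID_ENV"
```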

?. Now that we have set up the variables the env file depends on (PROJECT, AHR_HOME), we can source the HYBRID_ENV file

source $HYBRID_ENV

?. We need a number of Google APIs enabled for the correct operation of GKE Hub, the Apigee organization, ASM, etc.

Let's enable them:

ahr-verify-ctl api-enable

We are going to create Azure objects using CLI commands and use the Azure portal to verify their creation state.

NOTE: If you haven't done so yet, create a resource group.

az group create --name $RESOURCE_GROUP --location $AZ_REGION

?. Create an Azure virtual network and a subnet

REF: az network * https://docs.microsoft.com/en-us/cli/azure/network/vnet?view=azure-cli-latest

az network vnet create \
  --name $AZ_VNET \
  --resource-group $RESOURCE_GROUP \
  --location $AZ_REGION \
  --address-prefixes $AZ_VNET_CIDR \
  --subnet-name $AZ_VNET_SUBNET \
  --subnet-prefix $AZ_VNET_SUBNET_CIDR

?. Get the subnet resource ID, which we need to supply as a parameter when creating the AKS cluster

export AZ_VNET_SUBNET_ID=$(
  az network vnet subnet show \
    --resource-group $RESOURCE_GROUP \
    --vnet-name $AZ_VNET \
    --name $AZ_VNET_SUBNET \
    --query id \
    --output tsv )


?. Verify the value. The resource ID is long and involved, which is why we query it rather than type it by hand.

echo $AZ_VNET_SUBNET_ID

/subscriptions/07e07c10-acbd-417b-b4b0-3aca8ce79b38/resourceGroups/yuriyl/providers/Microsoft.Network/virtualNetworks/yuriyl-vnet-eastus/subnets/yuriyl-vnet-eastus-subnet
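The subnet ID follows the Azure resource ID layout shown above. As an optional sanity check, the sketch below splits it on `/` so you can confirm that it points at your $RESOURCE_GROUP and $AZ_VNET. `parse_subnet_id` is a hypothetical helper, and it assumes exactly this layout.

```shell
# Hypothetical helper: split a subnet resource ID of the form
# /subscriptions/<sub>/resourceGroups/<rg>/providers/Microsoft.Network/virtualNetworks/<vnet>/subnets/<subnet>
parse_subnet_id() {
  local old_ifs="$IFS"
  IFS=/
  set -f               # disable globbing while splitting
  set -- $1            # $1 is empty because the ID starts with "/"
  set +f
  IFS="$old_ifs"
  echo "subscription=$3 resourceGroup=$5 vnet=$9 subnet=${11}"
}

# Usage:
# parse_subnet_id "$AZ_VNET_SUBNET_ID"
```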

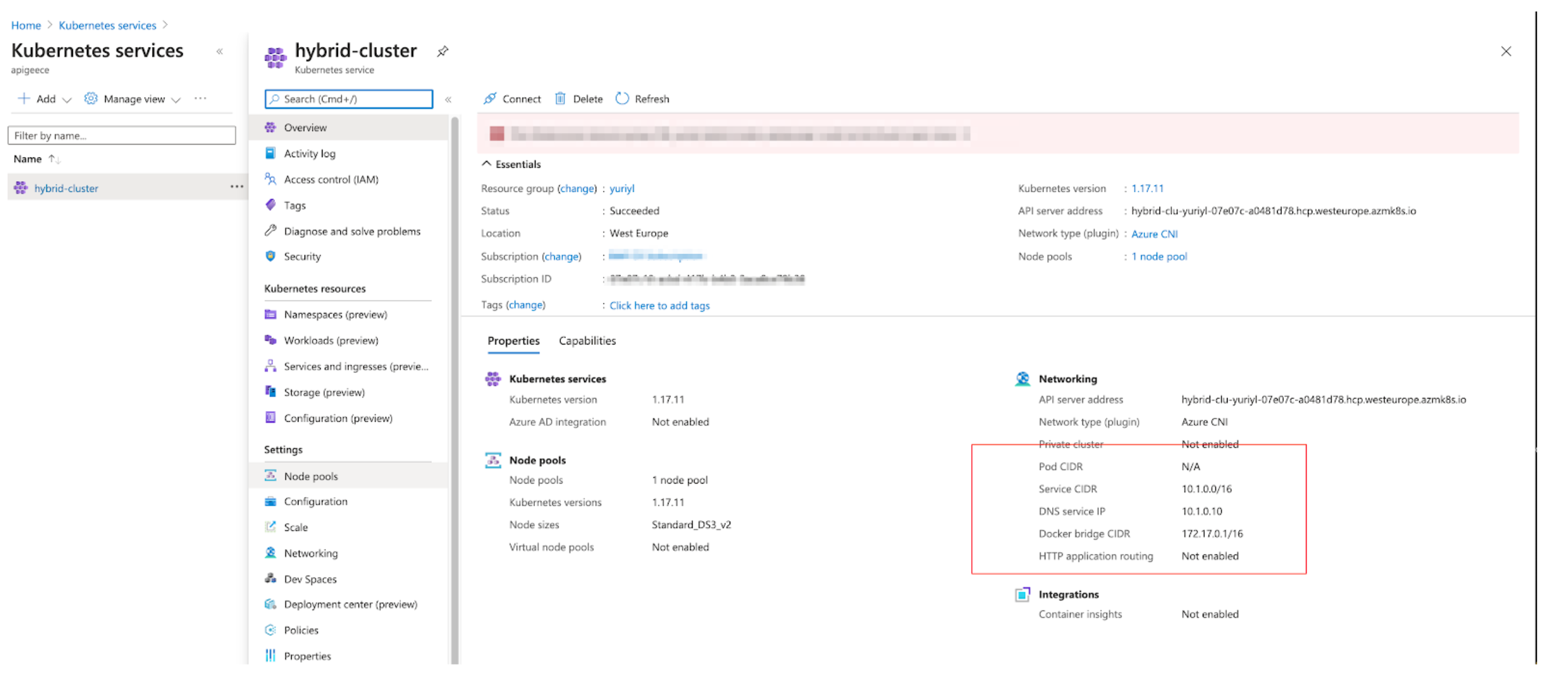

?. Create an AKS cluster

az aks create \
  --resource-group $RESOURCE_GROUP \
  --name $CLUSTER \
  --location $AZ_REGION \
  --kubernetes-version 1.17.11 \
  --nodepool-name hybridpool \
  --node-vm-size Standard_DS3_v2 \
  --node-count 4 \
  --network-plugin azure \
  --vnet-subnet-id $AZ_VNET_SUBNET_ID \
  --service-cidr $AKS_SERVICE_CIDR \
  --dns-service-ip $AKS_DNS_SERVICE_IP \
  --docker-bridge-address $AKS_DOCKER_CIDR \
  --generate-ssh-keys \
  --output table

Output:

SSH key files '/home/student_00_638a7fc61408/.ssh/id_rsa' and '/home/student_00_638a7fc61408/.ssh/id_rsa.pub' have been generated under ~/.ssh to allow SSH access to the VM. If using machines without permanent storage like Azure Cloud Shell without an attached file share, back up your keys to a safe location

DnsPrefix                 EnableRbac    Fqdn                                                    KubernetesVersion    Location    MaxAgentPools    Name            NodeResourceGroup                ProvisioningState    ResourceGroup
------------------------  ------------  ------------------------------------------------------  -------------------  ----------  ---------------  --------------  -------------------------------  -------------------  ---------------
hybrid-clu-yuriyl-07e07c  True          hybrid-clu-yuriyl-07e07c-d17b2653.hcp.eastus.azmk8s.io  1.17.11              eastus      10               hybrid-cluster  MC_yuriyl_hybrid-cluster_eastus  Succeeded            yuriyl

?. You can now open the Azure portal and see the created cluster there.

?. Get cluster credentials

az aks get-credentials --resource-group $RESOURCE_GROUP --name $CLUSTER

Merged "hybrid-cluster" as current context in /home/student_00_638a7fc61408/.kube/config

NOTE: Make a note of the kube config file location if you want to copy/move those credentials to another server.

?. Verify kubectl communication with the cluster by listing the deployed pods

kubectl get pods -A

NAMESPACE     NAME                             READY   STATUS    RESTARTS   AGE
kube-system   azure-cni-networkmonitor-2kcjs   1/1     Running   0          2m47s
kube-system   azure-cni-networkmonitor-k8pjf   1/1     Running   0          2m48s
...
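Rather than eyeballing the list, you can filter it. This sketch assumes the default `kubectl get pods -A` column layout (NAMESPACE, NAME, READY, STATUS, ...). It prints pods whose STATUS is neither Running nor Completed and does not check the READY column. `not_running_pods` is a hypothetical helper.

```shell
# Hypothetical helper: read "kubectl get pods -A" output on stdin and print
# namespace/name and STATUS for pods that are not Running or Completed.
not_running_pods() {
  awk 'NR > 1 && $4 != "Running" && $4 != "Completed" { print $1 "/" $2 " " $4 }'
}

# Usage (no output means every pod is Running or Completed):
# kubectl get pods -A | not_running_pods
```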

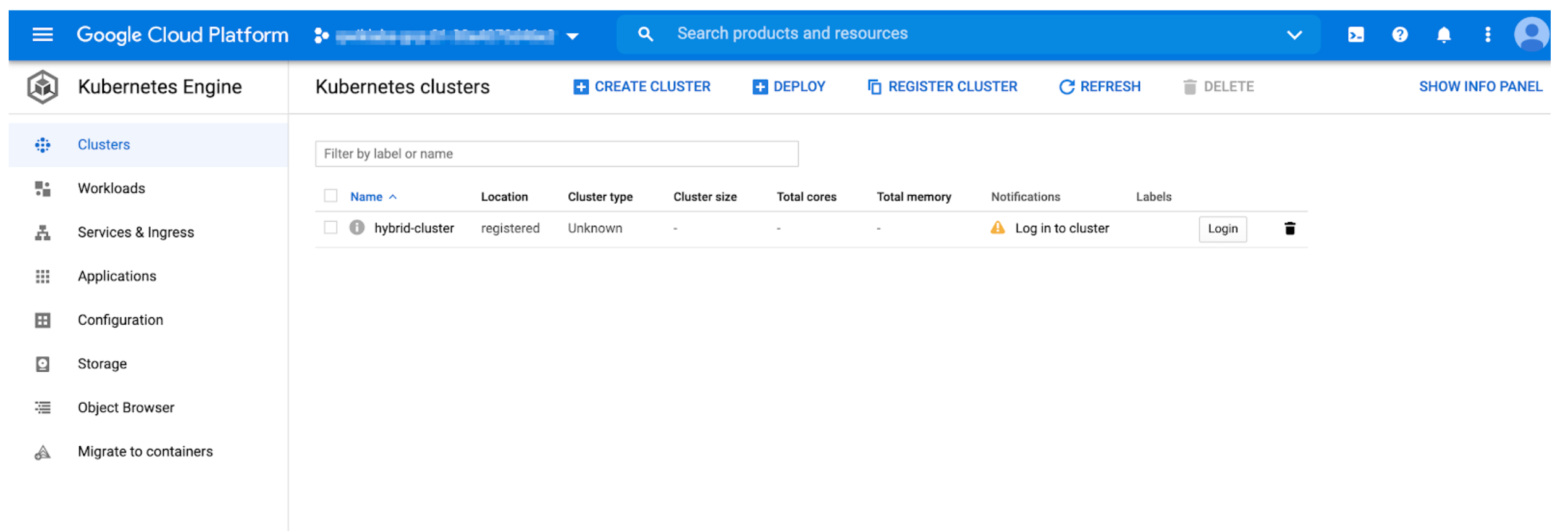

We use Anthos attached clusters to manage the AKS cluster from GCP. For this we need to:

- register the cluster with Anthos;

- create a Kubernetes service account;

- log in to the cluster via the GCP Console.

NOTE: The following roles are required for GKE Hub membership management:

- roles/gkehub.admin

- roles/iam.serviceAccountAdmin

- roles/iam.serviceAccountKeyAdmin

- roles/resourcemanager.projectIamAdmin

?. Create a new service account for cluster registration

gcloud iam service-accounts create anthos-hub --project=$PROJECT

?. Grant the GKE Hub Connect role to the service account

gcloud projects add-iam-policy-binding $PROJECT \
  --member="serviceAccount:anthos-hub@$PROJECT.iam.gserviceaccount.com" \
  --role="roles/gkehub.connect"

?. Create a key and download its JSON file

gcloud iam service-accounts keys create $HYBRID_HOME/anthos-hub-$PROJECT.json \
  --iam-account=anthos-hub@$PROJECT.iam.gserviceaccount.com --project=$PROJECT

?. Register the AKS cluster in GKE Hub

gcloud container hub memberships register $CLUSTER \
  --context=$CLUSTER \
  --kubeconfig=$HOME/.kube/config \
  --service-account-key-file=$HYBRID_HOME/anthos-hub-$PROJECT.json

Waiting for membership to be created...done.
Created a new membership [projects/qwiklabs-gcp-00-27dddd013336/locations/global/memberships/hybrid-cluster] for the cluster [hybrid-cluster]
Generating the Connect Agent manifest...
Deploying the Connect Agent on cluster [hybrid-cluster] in namespace [gke-connect]...
Deployed the Connect Agent on cluster [hybrid-cluster] in namespace [gke-connect].

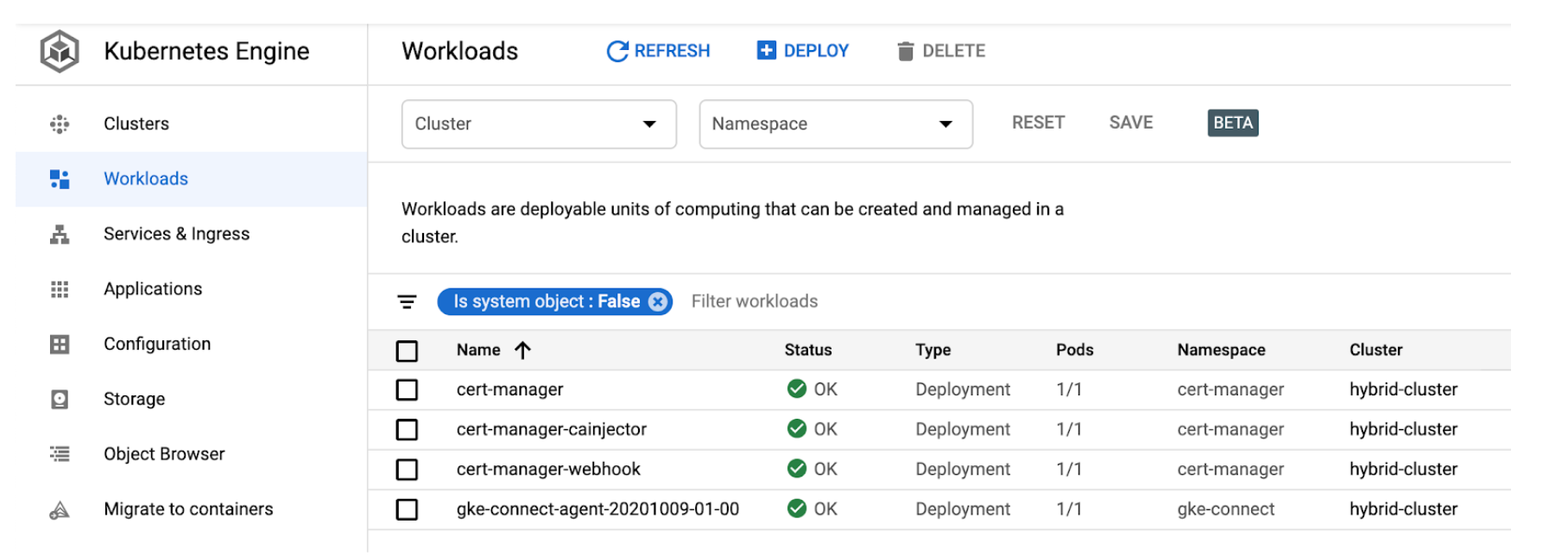

In the output, observe that besides registering the cluster membership, the command deployed the GKE Connect Agent into the target cluster.

At this point, we can see our cluster in the GCP Console.

?. In the GCP Console, open the Kubernetes Engine / Clusters page

NOTE: If something went wrong and you need to remove the cluster membership, list the memberships and unregister the cluster:

gcloud container hub memberships list

NAME                   EXTERNAL_ID
apigee-hybrid-cluster  5bbb2607-4266-40cd-bf86-22fdecb21137

gcloud container hub memberships unregister $CLUSTER \
  --context=$CLUSTER \
  --kubeconfig=$HOME/.kube/config

?. Create a Kubernetes service account

kubectl create serviceaccount anthos-user

kubectl create clusterrolebinding aksadminbinding --clusterrole view --serviceaccount default:anthos-user

kubectl create clusterrolebinding aksadminnodereader --clusterrole node-reader --serviceaccount default:anthos-user

kubectl create clusterrolebinding aksclusteradminbinding --clusterrole cluster-admin --serviceaccount default:anthos-user

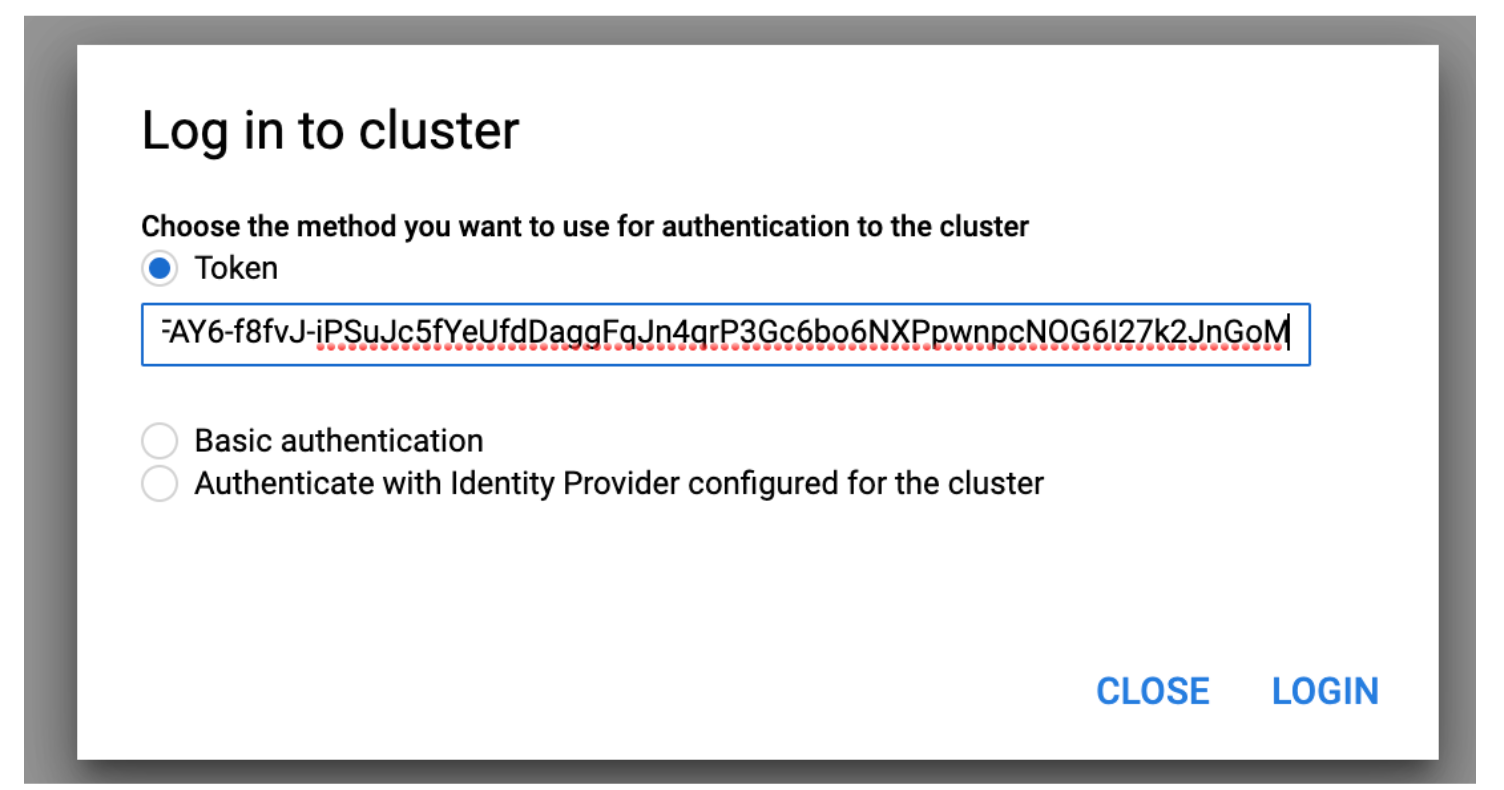

?. Get the token for the service account

CLUSTER_SECRET=$(kubectl get serviceaccount anthos-user -o jsonpath='{$.secrets[0].name}')

kubectl get secret ${CLUSTER_SECRET} -o jsonpath='{$.data.token}' | base64 --decode

?. In the GCP Console, open the Kubernetes Engine / Clusters page and click the LOGIN button

?. Choose the Token method, paste the token and click LOGIN

NOTE: Whichever select-and-copy technique you are using, make sure the token is a single line of characters with no end-of-line characters.
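One way to guarantee a single-line token is to strip carriage returns and line feeds before copying. Here is a minimal sketch using `tr`; the `to_single_line` name is hypothetical.

```shell
# Hypothetical helper: remove CR and LF characters so the token is one line.
to_single_line() {
  tr -d '\r\n'
}

# Usage (the trailing echo only moves the prompt to a new line):
# kubectl get secret ${CLUSTER_SECRET} -o jsonpath='{$.data.token}' | base64 --decode | to_single_line; echo
```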

The cluster is now attached.

NOTE: From here on, you can use kubectl and the cluster config file from either Azure Cloud Shell or GCP Cloud Shell.

?. Download and install cert-manager

kubectl apply --validate=false -f $CERT_MANAGER_MANIFEST

For details on using a static public IP address with AKS, see: https://docs.microsoft.com/en-us/azure/aks/static-ip

?. Create a public IP address

az network public-ip create --resource-group $RESOURCE_GROUP --name $CLUSTER-public-ip --location $AZ_REGION --sku Standard --allocation-method static --query publicIp.ipAddress -o tsv

The provisioned IPv4 address is displayed. Make a note of it.

?. Grant the cluster's service principal Network Contributor permissions on the resource group.
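If you capture the address into a variable instead of copying it, a quick shape check helps catch an empty or malformed value. This sketch assumes the command returns a single IPv4 address; `is_ipv4` is a hypothetical helper.

```shell
# Hypothetical helper: succeed if $1 is a dotted-quad IPv4 address with octets 0-255.
is_ipv4() {
  printf '%s\n' "$1" | grep -Eq '^[0-9]{1,3}(\.[0-9]{1,3}){3}$' || return 1
  local old_ifs="$IFS" octet
  IFS=.
  set -- $1
  IFS="$old_ifs"
  for octet in "$@"; do
    [ "$octet" -le 255 ] || return 1   # test(1) compares in base 10
  done
}

# Usage:
# RUNTIME_IP=$(az network public-ip show --resource-group $RESOURCE_GROUP --name $CLUSTER-public-ip --query ipAddress --output tsv)
# is_ipv4 "$RUNTIME_IP" || echo "unexpected IP value: '$RUNTIME_IP'" >&2
```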

export SP_PRINCIPAL_ID=$(az aks show --name $CLUSTER --resource-group $RESOURCE_GROUP --query servicePrincipalProfile.clientId -o tsv)

export SUBSCRIPTION_ID=$(az account show --query id -o tsv)

az role assignment create --assignee $SP_PRINCIPAL_ID --role "Network Contributor" --scope /subscriptions/$SUBSCRIPTION_ID/resourcegroups/$RESOURCE_GROUP

?. Edit $HYBRID_ENV to configure RUNTIME_IP (at the end of the file):

vi $HYBRID_ENV

export RUNTIME_IP=<insert-the-ip-address-from-the-previous-step>

NOTE: Alternatively, use the following az and sed commands to do it programmatically. The sed pattern also matches the commented-out line in the example file.

export RUNTIME_IP=$(az network public-ip show --resource-group $RESOURCE_GROUP --name $CLUSTER-public-ip --query ipAddress --output tsv)

sed -i -E "s/^#? *(export RUNTIME_IP=).*/\1$RUNTIME_IP/" $HYBRID_ENV
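If you prefer to script the edit, note that the RUNTIME_IP line may be commented out in the example file, or missing altogether. The hypothetical `upsert_export` helper below handles both cases. It replaces an existing `export NAME=` line (commented out or not) or appends a new one. This is a sketch that relies on GNU `sed -i`, as found in Cloud Shell.

```shell
# Hypothetical helper: set "export NAME=VALUE" in FILE. An existing line for
# NAME, commented out or not, is replaced; otherwise a new line is appended.
# Assumes GNU sed (-i) and a VALUE without "|", "&", backslashes or newlines.
upsert_export() {
  local name="$1" value="$2" file="$3"
  if grep -Eq "^#?[[:space:]]*export ${name}=" "$file"; then
    sed -i -E "s|^#?[[:space:]]*export ${name}=.*|export ${name}=${value}|" "$file"
  else
    printf 'export %s=%s\n' "$name" "$value" >> "$file"
  fi
}

# Usage:
# upsert_export RUNTIME_IP "$RUNTIME_IP" "$HYBRID_ENV"
```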

?. Add a profile variable for the on-prem/multi-cloud ASM configuration:

vi $HYBRID_ENV

export ASM_PROFILE=asm-multicloud

?. Re-source $HYBRID_ENV to apply the changed values to the current session

source $HYBRID_ENV

?. Prepare session variables to create an IstioOperator manifest file.

NOTE: These are 'derived' variables that appear in the template file and are replaced by the ahr-cluster-ctl template command. Therefore, we don't need them in $HYBRID_ENV.

export PROJECT_NUMBER=$(gcloud projects describe ${PROJECT} --format="value(projectNumber)")

export ref=\$ref

export ASM_RELEASE=$(echo "$ASM_VERSION"|awk '{sub(/\.[0-9]+-asm\.[0-9]+/,"");print}')
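The awk expression strips the patch number and the `-asm.N` suffix, leaving the major.minor release used in the template file names. Below is a small sketch wrapping the same expression. The helper name is hypothetical, and the version strings in the test are illustrative.

```shell
# Hypothetical helper: derive the ASM release (major.minor) from a full ASM
# version string, using the same awk substitution as the ASM_RELEASE line.
asm_release() {
  printf '%s\n' "$1" | awk '{ sub(/\.[0-9]+-asm\.[0-9]+/, ""); print }'
}

# Usage:
# ls "$AHR_HOME/templates/istio-operator-$(asm_release "$ASM_VERSION")-$ASM_PROFILE.yaml"
```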

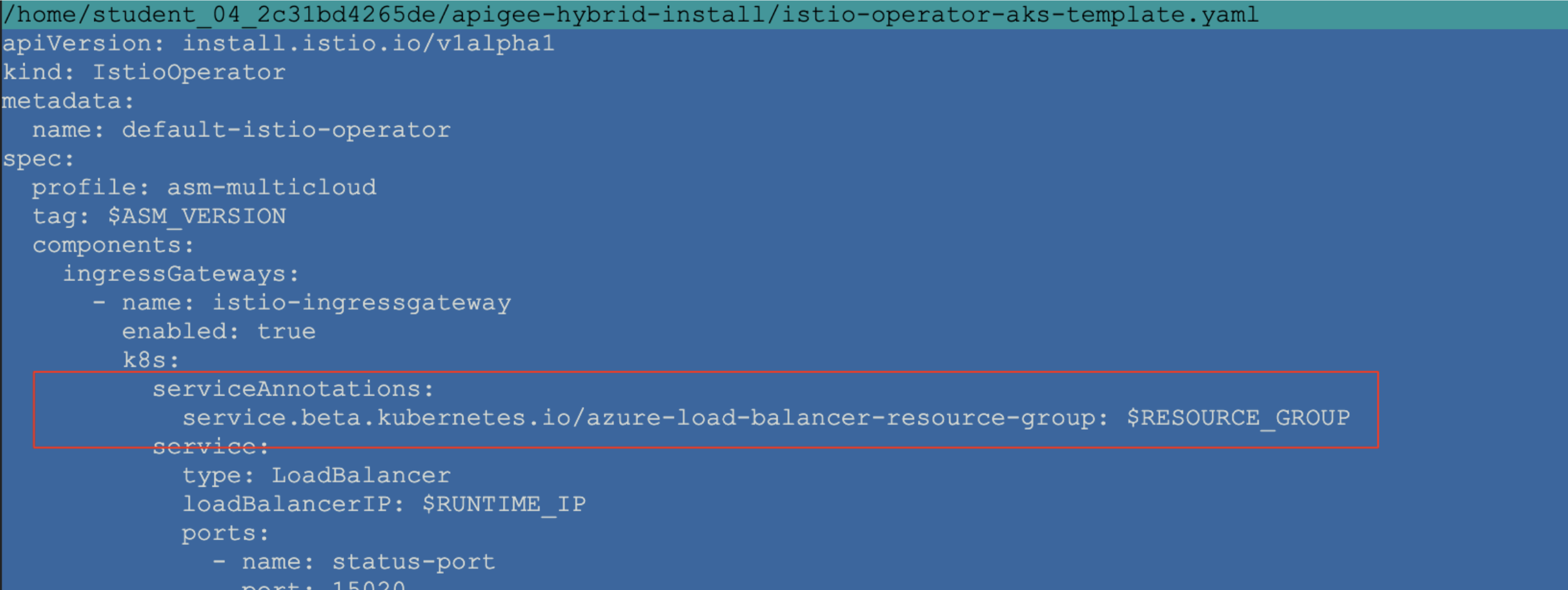

?. As is usual with IstioOperator, some annotations and/or parameters need fine-tuning on a platform-by-platform basis.

For AKS, we need to add a resource group annotation so that the static IP address is correctly identified.

Copy the provided IstioOperator template for on-prem/multi-cloud installation.

cp $AHR_HOME/templates/istio-operator-$ASM_RELEASE-$ASM_PROFILE.yaml $HYBRID_HOME/istio-operator-aks-template.yaml

?. Edit $HYBRID_HOME/istio-operator-aks-template.yaml to add the necessary service annotation

We need to add a service.beta.kubernetes.io/azure-load-balancer-resource-group: $RESOURCE_GROUP annotation to the spec.components.ingressGateways[name=istio-ingressgateway].k8s.serviceAnnotations property.

NOTE: We use a variable name here because we are going to process this template and resolve the variable in the next step.

vi $HYBRID_HOME/istio-operator-aks-template.yaml

service.beta.kubernetes.io/azure-load-balancer-resource-group: $RESOURCE_GROUP

The result should look like this:

WARNING: If you are using Azure DNS for your service, you need to apply a DNS label to the service.

?. Process the template to obtain $ASM_CONFIG file suitable for on-prem ASM installation

ahr-cluster-ctl template $HYBRID_HOME/istio-operator-aks-template.yaml > $ASM_CONFIG

?. Inspect the $ASM_CONFIG file

vi $ASM_CONFIG

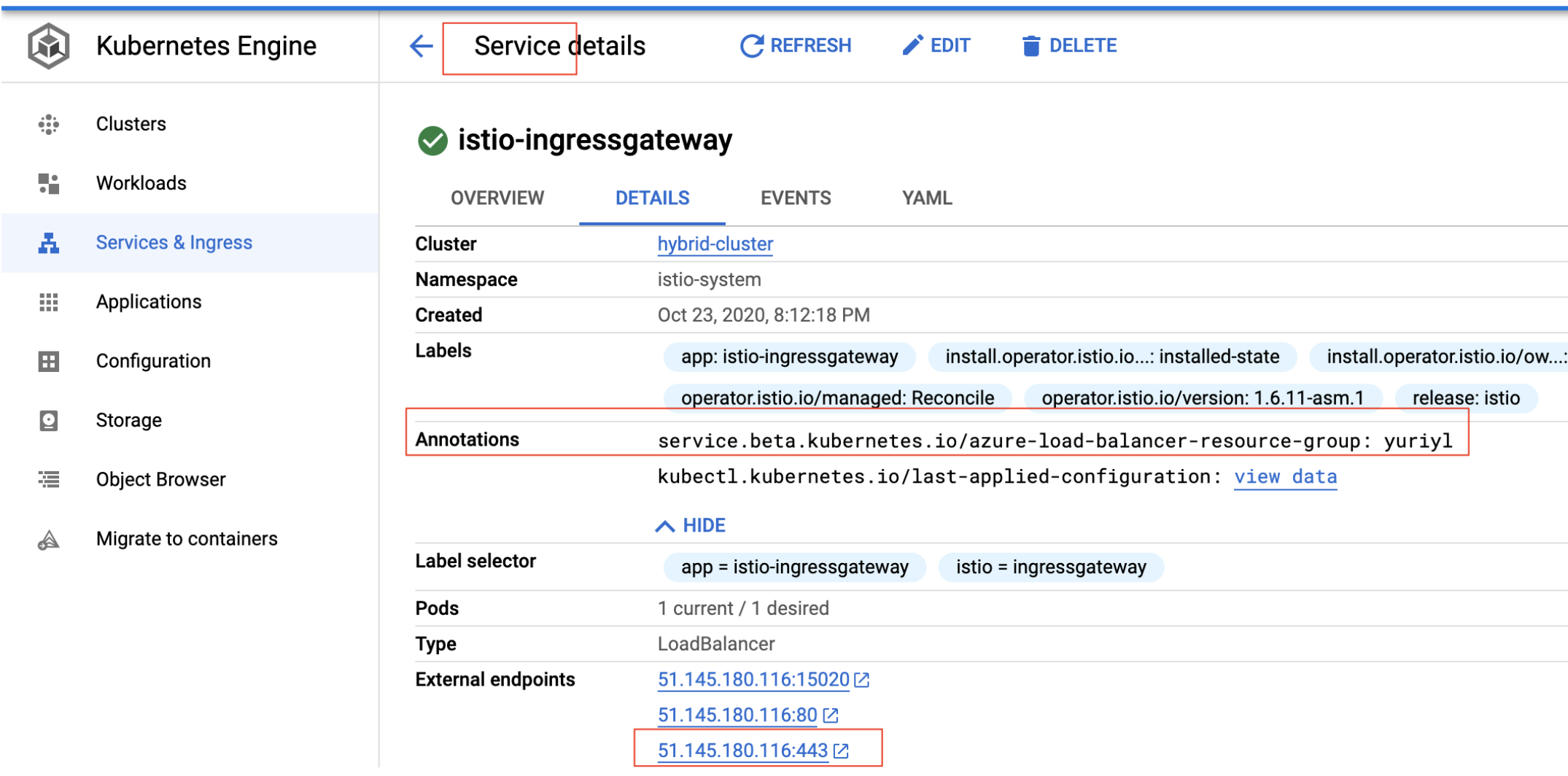

Observe:

- the AKS service annotation contains the correct value for your $RESOURCE_GROUP;

- the spec.components.ingressGateways[name=istio-ingressgateway].k8s.service.loadBalancerIP property is configured;

- ports 443 and 80 are opened for HTTPS and HTTP traffic; we are not going to serve HTTP, only redirect it to HTTPS;

- meshConfig contains the Envoy access log configuration as per https://cloud.google.com/apigee/docs/hybrid/v1.3/install-download-cert-manager-istio-aks
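To double-check that template processing resolved every variable, you can scan the generated file for leftover `$NAME` or `${NAME}` references. The sketch below uses a hypothetical `unresolved_vars` helper. It ignores `$ref`, which the `export ref=\$ref` line above sets to its own literal text. It may also flag legitimate dollar signs if your file contains any.

```shell
# Hypothetical helper: list $NAME / ${NAME} references left in a processed file,
# ignoring $ref; no output means nothing is left unresolved.
unresolved_vars() {
  grep -oE '\$\{?[A-Za-z_][A-Za-z0-9_]*\}?' "$1" \
    | grep -vE '^\$\{?ref\}?$' \
    | LC_ALL=C sort -u
}

# Usage:
# unresolved_vars "$ASM_CONFIG"
```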

?. Get ASM installation files

ahr-cluster-ctl asm-get $ASM_VERSION

?. Define ASM_HOME and add the ASM bin directory to the PATH by copying and pasting the export statements provided in the previous command's output.

export ASM_HOME=$HYBRID_HOME/istio-$ASM_VERSION

export PATH=$ASM_HOME/bin:$PATH

?. Install ASM into our cluster

istioctl install -f $ASM_CONFIG

! global.mtls.enabled is deprecated; use the PeerAuthentication resource instead
✔ Istio core installed
✔ Istiod installed
✔ Ingress gateways installed
✔ Addons installed
✔ Installation complete

?. On the Services & Ingress page of the Kubernetes Engine section, observe the configured Ingress IP address.

?. Drill down into the istio-ingressgateway Service configuration and observe the created annotation:

?. Create the hybrid organization, environment, and environment group.

ahr-runtime-ctl org-create $ORG --ax-region $AX_REGION

ahr-runtime-ctl env-create $ENV

ahr-runtime-ctl env-group-create $ENV_GROUP $RUNTIME_HOST_ALIAS

ahr-runtime-ctl env-group-assign $ORG $ENV_GROUP $ENV

?. Create service accounts (SAs) and their keys

ahr-sa-ctl create-sa all

ahr-sa-ctl create-key all

?. Set up the synchronizer service account identifier (email)

ahr-runtime-ctl setsync $SYNCHRONIZER_SA_ID

?. Configure a self-signed certificate for the Ingress Gateway

ahr-verify-ctl cert-create-ssc $RUNTIME_SSL_CERT $RUNTIME_SSL_KEY $RUNTIME_HOST_ALIAS

?. Get apigeectl and the hybrid distribution

ahr-runtime-ctl get

?. Configure the APIGEECTL_HOME variable

export APIGEECTL_HOME=$HYBRID_HOME/$(tar tf $HYBRID_HOME/$HYBRID_TARBALL | grep VERSION.txt | cut -d "/" -f 1)

?. Add the apigeectl directory to your PATH

export PATH=$APIGEECTL_HOME:$PATH
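The APIGEECTL_HOME expression takes the top-level directory of the tarball entry that contains VERSION.txt. Below is a sketch of the same pipeline as a function. It uses `grep -F` for a literal match and `head -n 1` to guard against multiple matches. The function name and the directory name in the test are hypothetical.

```shell
# Hypothetical helper: print the top-level directory of the first tarball
# entry whose path contains VERSION.txt.
tarball_version_dir() {
  tar tf "$1" | grep -F 'VERSION.txt' | head -n 1 | cut -d / -f 1
}

# Usage:
# export APIGEECTL_HOME=$HYBRID_HOME/$(tarball_version_dir "$HYBRID_HOME/$HYBRID_TARBALL")
```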

?. Generate the runtime configuration YAML file

ahr-runtime-ctl template $AHR_HOME/templates/overrides-small-1.3-template.yaml > $RUNTIME_CONFIG

?. Inspect generated runtime configuration file

vi $RUNTIME_CONFIG


?. Deploy the Apigee hybrid init components

ahr-runtime-ctl apigeectl init -f $RUNTIME_CONFIG

?. Wait until all containers are ready

ahr-runtime-ctl apigeectl wait-for-ready -f $RUNTIME_CONFIG

?. Deploy hybrid components

ahr-runtime-ctl apigeectl apply -f $RUNTIME_CONFIG

?. Wait until they are ready

ahr-runtime-ctl apigeectl wait-for-ready -f $RUNTIME_CONFIG

?. Deploy the provided ping proxy

$AHR_HOME/proxies/deploy.sh

?. Execute a test request

curl --cacert $RUNTIME_SSL_CERT https://$RUNTIME_HOST_ALIAS/ping -v --resolve "$RUNTIME_HOST_ALIAS:443:$RUNTIME_IP" --http1.1
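A freshly deployed proxy may not answer immediately. Below is a generic retry sketch built around a hypothetical `retry` helper. With `curl --fail`, HTTP error responses count as failures, so they are retried too.

```shell
# Hypothetical helper: run a command up to ATTEMPTS times, sleeping DELAY
# seconds between failed attempts. Returns 0 on first success, 1 otherwise.
retry() {
  local attempts="$1" delay="$2" i=1
  shift 2
  while [ "$i" -le "$attempts" ]; do
    "$@" && return 0
    [ "$i" -lt "$attempts" ] && sleep "$delay"
    i=$((i + 1))
  done
  return 1
}

# Usage:
# retry 10 15 curl --fail --cacert $RUNTIME_SSL_CERT https://$RUNTIME_HOST_ALIAS/ping \
#   --resolve "$RUNTIME_HOST_ALIAS:443:$RUNTIME_IP" --http1.1
```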

?. Delete the cluster

az aks delete \
  --name $CLUSTER \
  --resource-group $RESOURCE_GROUP \
  --yes

?. Delete the public IP address

az network public-ip delete \
  --name $CLUSTER-public-ip \
  --resource-group $RESOURCE_GROUP

?. Delete the network

az network vnet delete \
  --name $AZ_VNET \
  --resource-group $RESOURCE_GROUP
