
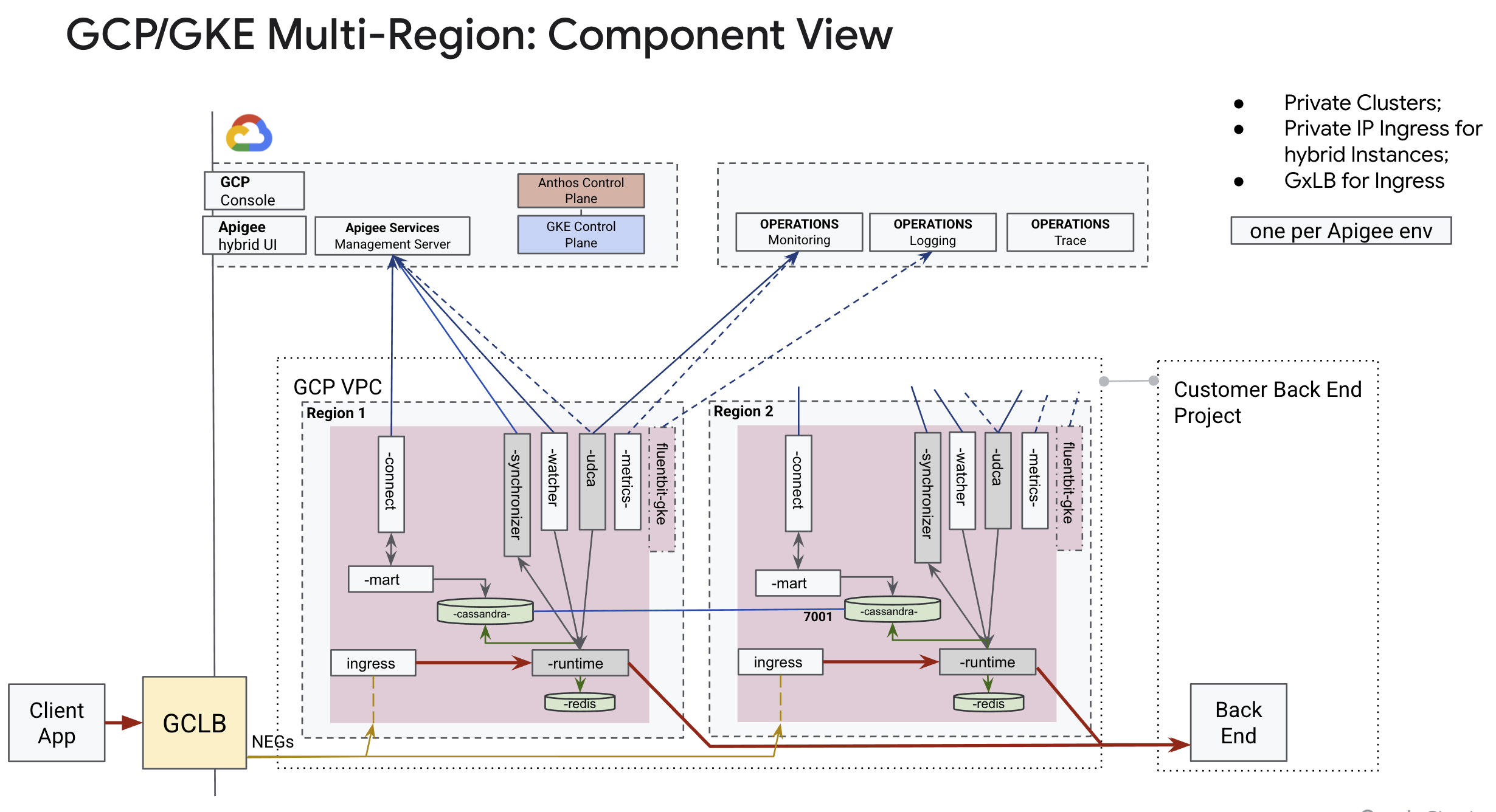

GKE Multi Region 1.5

This walkthrough is a 4-in-1:

- Detailed command-line installation of two-region small Hybrid topology with GTM configuration (GXLB);

- Script-based installation;

- Large size topology;

- Custom subnet mode networking.

It is easy to create a multi-region, multi-cluster Apigee Hybrid topology using the default VPC network provided by a GCP project.

The main choice is which regions to use.

There are a number of other decisions you need to take into account when coming up with the final set of installation steps.

The High-level steps of the installation:

- VPC network, provided by GCP default configuration;

- multi-zone GKE clusters;

- large footprint: 2 node-pools: apigee-runtime and apigee-data

- large footprint: 3-node-per-region Cassandra;

- Service Accounts with json key files;

- Usage of config example and template files from ahr main distribution;

- Usage of install-profile for the 1st Hybrid instance and "coarse" component-level commands for the 2nd region;

The main tasks to create a dual data centre are:

- Plan your cluster, hybrid topology layout, and required infrastructure components;

- Create clusters;

- Install Runtime in a 1st region;

- Clone cert-manager secret from 1st to 2nd region

- Configure Cassandra seed node in 2nd region Runtime Config file;

- Install Runtime in a 2nd region;

- Configure GTM.

?. Let's define configuration of the installation.

Project: $PROJECT

Cluster Type: Multi-Zonal

| config | region/zones | data x 3 | runtime x 3 | |
|---|---|---|---|---|
| common networking | mr-gcp-networking.env | e2-standard-4 | e2-standard-4 | |
| common hybrid | mr-hybrid-common.env | e2-standard-4 | e2-standard-4 | |
| r1 cluster | mr-r1-gke-cluster.env | us-central1 a, b, c | | |
| r2 cluster | mr-r2-gke-cluster.env | europe-west2 a, b, c | | |

NOTE: Keep an eye on total core count as well as mem requirements.
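The NOTE above can be made concrete with a little shell arithmetic. The sketch below is an illustration only: it assumes that "data x 3" and "runtime x 3" in the table mean three nodes per pool per region, that there are two regions, and that each e2-standard-4 node provides 4 vCPUs and 16 GB of memory. The variable names are hypothetical; adjust the numbers if your node counts are per zone rather than per region.

```shell
#!/usr/bin/env bash
# Rough capacity estimate for the two-region topology.
# All values below are assumptions for illustration; edit to match your plan.
REGIONS=2
DATA_NODES_PER_REGION=3      # apigee-data node pool
RUNTIME_NODES_PER_REGION=3   # apigee-runtime node pool
VCPU_PER_NODE=4              # e2-standard-4
MEM_GB_PER_NODE=16           # e2-standard-4

TOTAL_NODES=$(( REGIONS * (DATA_NODES_PER_REGION + RUNTIME_NODES_PER_REGION) ))
TOTAL_VCPUS=$(( TOTAL_NODES * VCPU_PER_NODE ))
TOTAL_MEM_GB=$(( TOTAL_NODES * MEM_GB_PER_NODE ))

echo "nodes=$TOTAL_NODES vcpus=$TOTAL_VCPUS memory_gb=$TOTAL_MEM_GB"
```

Compare the totals against the CPU and memory quotas of the project before creating the clusters.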

?. Configure Bastion Host using instructions from the following page: https://github.com/apigee/ahr/wiki/bastion-host

TODO: [ ] those are generic ahr usage instructions. move to a separate page.

?. Clone ahr and source ahr environment

cd ~

git clone https://github.com/apigee/ahr.git

export AHR_HOME=~/ahr

export PATH=$AHR_HOME/bin:$PATH?. ahr scripts require yq for yaml file processing. We need it also to correct template files. This command fetches and installs yq binary into a ~/bin directory.

$AHR_HOME/bin/ahr-verify-ctl prereqs-install-yq

source ~/.profile # to add ~/.bin to your PATH variable of the current session?. Create a project directory:

export HYBRID_HOME=~/apigee-hybrid-install

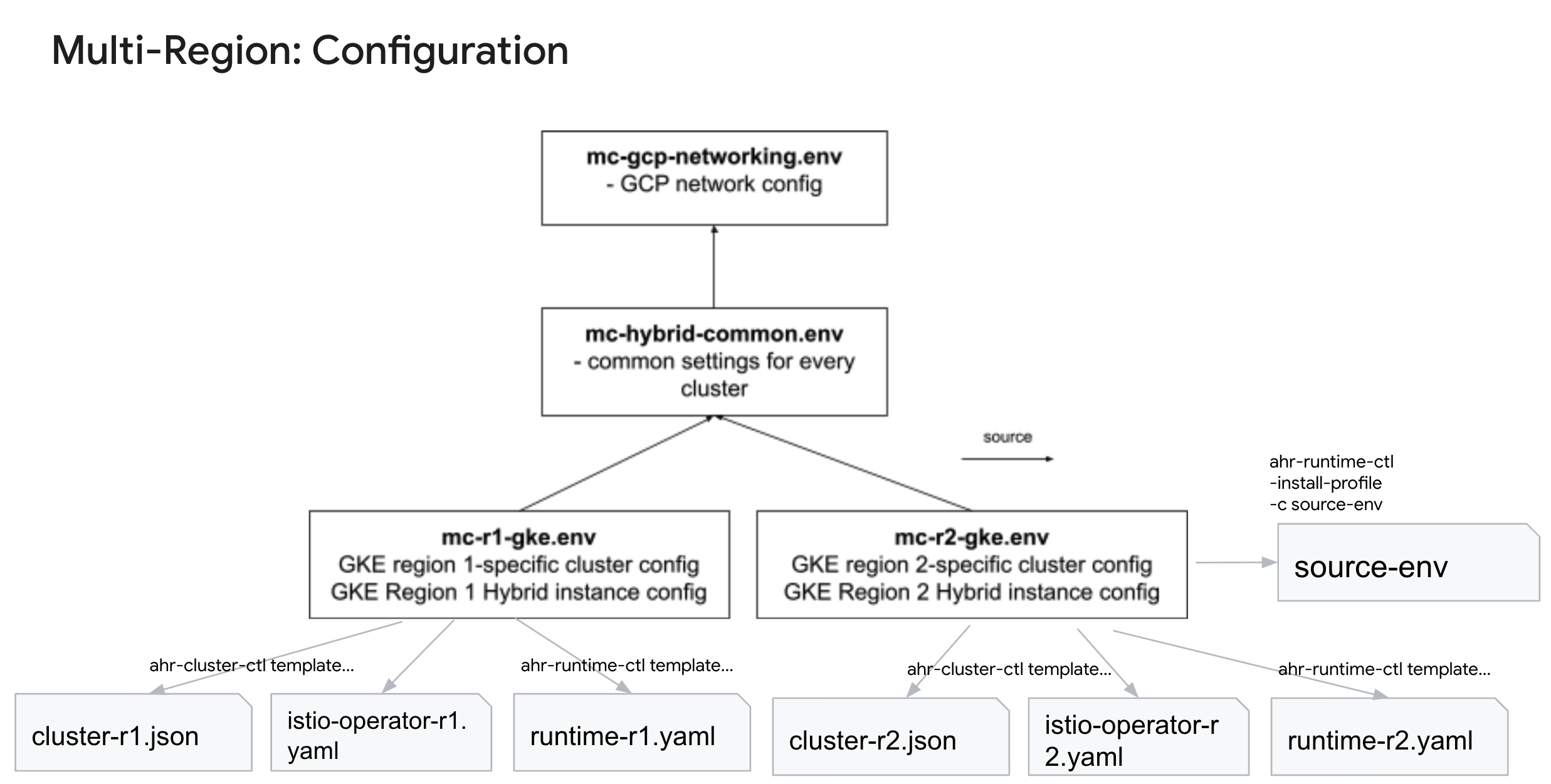

mkdir -p $HYBRID_HOME?. Study example-set of multi-region environment variable files.

cp -R $AHR_HOME/examples-mr-gcp/. $HYBRID_HOME?. You can explore now the Hybrid runtime configuration

vi $HYBRID_HOME/mr-hybrid-common.envThis .env file contains environment variables that are common to every data centre. Put them into mr-hybrid-common.env config file. Define differing elements in the each data centre file.

The four main groups of variables are:

- Hybrid version

- Project definition

- Cluster parameters

- Runtime configuration

TODO:? [ ] Expand??

Changes in this case:

export PROJECT=<project-id>

We are going to configure Internal Load Balancers. As of now, the *cluster*.env config files contain a dummy IP address, 203.0.113.10 (from a documentation IP CIDR, https://datatracker.ietf.org/doc/html/rfc5737#section-3), for the runtime IPs of each DC's regions and zones.

OPTIONAL: We use the default kubectl ~/.kube/config file. If you prefer to keep things nice and tidy while working with multiple sets of logically grouped Kubernetes clusters, define a cluster credentials config file in the project directory.

export KUBECONFIG=$PWD/mr-gcp-config

?. Configure PROJECT variable

NOTE: use this pre-canned command if you're using qwiklabs

export PROJECT=$(gcloud projects list|grep qwiklabs-gcp|awk '{print $1}')

Otherwise, set it explicitly:

export PROJECT=<project-id>

?. Check that the PROJECT variable reflects the correct project.

echo $PROJECT

NOTE: You can cd to a project directory and use relative file references; however, to keep things CI/CD-friendly, all our file invocations use full paths and are therefore independent of the current location.

?. Enable required APIs

ahr-verify-ctl api-enable

?. Source 1st region configuration into the current terminal session

source $HYBRID_HOME/mr-r1-gke-cluster.env

?. Generate cluster config for cluster-r1 in region 1 using cluster template

ahr-cluster-ctl template $CLUSTER_TEMPLATE > $CLUSTER_CONFIG

?. Inspect cluster config file.

vi $CLUSTER_CONFIG

- Verify that the $CLUSTER_CONFIG value is the $HYBRID_HOME/cluster-r1.json file;

- Verify that the region .cluster.location property value is the us-central1-a zone.

?. Create the GKE cluster in Region 1

ahr-cluster-ctl create

?. Inspect secondary subnets for pods and services that were automatically created. For GKE, run the command:

gcloud container clusters describe cluster-r1 --zone=us-central1-a |grep Cidr

Sample output:

clusterIpv4Cidr: 10.116.0.0/14
clusterIpv4Cidr: 10.116.0.0/14
clusterIpv4CidrBlock: 10.116.0.0/14
servicesIpv4Cidr: 10.120.0.0/20
servicesIpv4CidrBlock: 10.120.0.0/20
podIpv4CidrSize: 24
servicesIpv4Cidr: 10.120.0.0/20

?. Use Google Console to see the secondary ranges created for the default subnet of the default network in region $R1. Notice that those CIDRs are outside of 10.128.0.0/9, which is allocated and controlled by auto subnet mode creation. It might feel convenient. Yet it is dangerous.
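The claim that the automatically created secondary ranges fall outside of 10.128.0.0/9 can be checked with plain bash arithmetic. The following is a minimal sketch, not part of ahr: ip_to_int and cidr_within are hypothetical helper names, and the CIDRs are the ones from the sample output above plus one auto-mode subnet for contrast.

```shell
#!/usr/bin/env bash
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Return 0 when CIDR $1 lies entirely inside CIDR $2.
cidr_within() {
  local inner_ip=${1%/*} inner_len=${1#*/}
  local outer_ip=${2%/*} outer_len=${2#*/}
  # A shorter prefix can never fit inside a longer one.
  (( inner_len >= outer_len )) || return 1
  local mask=$(( (0xFFFFFFFF << (32 - outer_len)) & 0xFFFFFFFF ))
  (( ( $(ip_to_int "$inner_ip") & mask ) == ( $(ip_to_int "$outer_ip") & mask ) ))
}

for cidr in 10.116.0.0/14 10.120.0.0/20 10.128.0.0/20; do
  if cidr_within "$cidr" 10.128.0.0/9; then
    echo "$cidr is inside 10.128.0.0/9"
  else
    echo "$cidr is outside 10.128.0.0/9"
  fi
done
```

The same helper can be reused to make sure that ranges you plan to allocate explicitly do not collide with ranges GKE picked on its own.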

?. Source 2nd region configuration into the current terminal session

source $HYBRID_HOME/mr-r2-gke-cluster.env

?. Generate cluster config for cluster-r2 in region 2 using cluster template

ahr-cluster-ctl template $CLUSTER_TEMPLATE > $CLUSTER_CONFIG

You can inspect the cluster config file using the vi $CLUSTER_CONFIG command

?. Create the GKE cluster in Region 2

ahr-cluster-ctl create

?. Inspect cluster config file.

- Verify that the $CLUSTER_CONFIG value is the $HYBRID_HOME/cluster-r2.json file;

- Verify that the region .cluster.location property value is the europe-west2-a zone.

?. Inspect secondary subnets for pods and services that were automatically created. For GKE, run the command:

gcloud container clusters describe cluster-r2 --zone=europe-west2-a |grep Cidr

Sample output:

clusterIpv4Cidr: 10.76.0.0/14
clusterIpv4Cidr: 10.76.0.0/14
clusterIpv4CidrBlock: 10.76.0.0/14
servicesIpv4Cidr: 10.80.0.0/20
servicesIpv4CidrBlock: 10.80.0.0/20
podIpv4CidrSize: 24
servicesIpv4Cidr: 10.80.0.0/20

?. After the clusters are created, your ~/.kube/config contains entries for both clusters: cluster-r1 and cluster-r2.

kubectl config get-contexts

Keep in mind that only one of them is the current one. You need either to set the cluster you work with as current

kubectl config use-context $R1_CLUSTER
kubectl get nodes -o wide

or to explicitly reference the required cluster via the --context option

kubectl --context $R2_CLUSTER get nodes -o wide

Our Cassandra Pods from regional clusters should be able to communicate via port 7001.

In terms of the firewall rule, you can be as generic or as specific as your Security Department demands. For example, we are creating a rule that allows ingress from our pod IP ranges to any instance in the network. You can narrow it down further by specifying cluster node tags [tracing them via the managed instance group referenced in a cluster description, and defined in an instance template].

?. Configure a firewall rule that opens port 7001 for communication between pods in the two clusters

Using the source ranges we obtained from the gcloud commands earlier (property clusterIpv4Cidr: 10.116.0.0/14 and 10.76.0.0/14), define the firewall rule:

gcloud compute firewall-rules create allow-cs-7001 \
  --project $PROJECT \
  --network $NETWORK \
  --allow tcp:7001 \
  --direction INGRESS \
  --source-ranges 10.116.0.0/14,10.76.0.0/14

?. You need to use two ssh sessions to perform this connectivity check. To initialize session variables in the second session:

export AHR_HOME=~/ahr
export HYBRID_HOME=~/apigee-hybrid-install
source $HYBRID_HOME/mr-hybrid-common.env

?. Using the following commands, validate connectivity between the two clusters on port 7001, which Cassandra will use for inter-regional communication.

# run a busybox container in cluster 1
kubectl --context $R1_CLUSTER run -i --tty busybox --image=busybox --rm --restart=Never -- sh

# run a busybox container in cluster 2
kubectl --context $R2_CLUSTER run -i --tty busybox --image=busybox --rm --restart=Never -- sh

# display IP address of the current busybox container
hostname -i

# ping
ping <ip-address-of-opposite-container>

# start nc in a listening mode
nc -l 0.0.0.0 -p 7001 -v

# probe from the opposite container
nc -v <ip-address-of-nc-listener> 7001

?. Verify that the Organization name we are going to use passes validity checks

ahr-runtime-ctl org-validate-name $ORG

?. Create Organization and define Analytics region

ahr-runtime-ctl org-create $ORG --ax-region $AX_REGION

?. Create Environment $ENV

ahr-runtime-ctl env-create $ENV

?. Define Environment Group and Host Name

ahr-runtime-ctl env-group-create $ENV_GROUP $RUNTIME_HOST_ALIASES

?. Assign environment $ENV to the environment Group $ENV_GROUP

ahr-runtime-ctl env-group-assign $ORG $ENV_GROUP $ENV

?. Create all apigee component Service Accounts in directory SA_DIR

ahr-sa-ctl create-sa all
ahr-sa-ctl create-key all

?. Configure synchronizer and apigeeconnect component

ahr-runtime-ctl setsync $SYNCHRONIZER_SA_ID

?. Verify Hybrid Control Plane Configuration

ahr-runtime-ctl org-config

?. Observe:

- check that the organization is hybrid-enabled

- check that apigee connect is enabled

- check that sync is correct

?. Source region 1 environment configuration

export HYBRID_ENV=$HYBRID_HOME/mr-r1-gke-cluster.env
source $HYBRID_ENV

?. Select cluster-r1, the current value of $CLUSTER, as kubectl's active context

kubectl config use-context $CLUSTER

?. Install Certificate Manager

kubectl apply --validate=false -f $CERT_MANAGER_MANIFEST

We are using the supplied istio-operator.yaml template. ASM templates are major.minor version specific. So, first we need to calculate a couple of variables.

The IstioOperator manifest is mostly the same for both regional clusters. Yet it is slightly different from the supplied one. As we are going to provision GCP Internal Load Balancers, we need specific serviceAnnotations instructions.

Another specific stanza we need to add exposes port 15021. This is the Istio ingress service health check port. Unless we use internal load balancers that are going to be exposed via

We are going to clone the supplied template, then inject those annotations into it. We will then use the file with the injections to generate the final IstioOperator manifests.

?. Define a couple of variables that the template expects and calculate $ASM_RELEASE and $ASM_VERSION_MINOR from the $ASM_VERSION we plan to use.

export PROJECT_NUMBER=$(gcloud projects describe ${PROJECT} --format="value(projectNumber)")
export ref=\$ref
export ASM_RELEASE=$(echo "$ASM_VERSION"|awk '{sub(/\.[0-9]+-asm\.[0-9]+/,"");print}')
export ASM_VERSION_MINOR=$(echo "$ASM_VERSION"|awk '{sub(/\.[0-9]+-asm\.[0-9]+/,"");print}')

?. Copy the stock template into a $HYBRID_HOME/istio-operator-gke-template.yaml file

cp $AHR_HOME/templates/istio-operator-$ASM_RELEASE-$ASM_PROFILE.yaml $HYBRID_HOME/istio-operator-gke-template.yaml

?. Add serviceAnnotations to the ingress gateway to provision an internal load balancer.

yq merge -i $HYBRID_HOME/istio-operator-gke-template.yaml - <<"EOF"
spec:
  components:
    ingressGateways:
      - name: istio-ingressgateway
        k8s:
          serviceAnnotations:
            networking.gke.io/load-balancer-type: "Internal"
            networking.gke.io/internal-load-balancer-allow-global-access: "true"
EOF

?. As we are configuring an internal LB, expose status-port for health checks

yq write -i -s - $HYBRID_HOME/istio-operator-gke-template.yaml <<"EOF"
- command: update
  path: spec.components.ingressGateways.(name==istio-ingressgateway).k8s.service.ports[+]
  value:
    name: status-port
    port: 15021
    protocol: TCP
    targetPort: 15021
EOF

?. Get/download an ASM installation and add its _HOME value to the PATH variable

source <(ahr-cluster-ctl asm-get $ASM_VERSION)

?. Provision static private IP for Internal Load Balancer

gcloud compute addresses create runtime-ip \
  --region "$REGION" \
  --subnet "$SUBNETWORK" \
  --purpose SHARED_LOADBALANCER_VIP

?. Fetch the allocated IP into RUNTIME_IP env variable

export RUNTIME_IP=$(gcloud compute addresses describe runtime-ip --region "$REGION" --format='value(address)')

?. Persist RUNTIME_IP to the $HYBRID_ENV file

sed -i -E "s/^(export RUNTIME_IP=).*/\1$RUNTIME_IP/g" $HYBRID_ENV

?. Generate the IstioOperator for cluster_r1 into file $ASM_CONFIG

ahr-cluster-ctl template $HYBRID_HOME/istio-operator-gke-template.yaml > $ASM_CONFIG

?. ASM for GKE requires additional cluster-level configurations. Configure GKE cluster

ahr-cluster-ctl asm-gke-configure "$ASM_VERSION_MINOR"

?. Install ASM/Istio into $CLUSTER

istioctl install -f $ASM_CONFIG

?. Create Key and Self-signed Certificate for Istio Ingress Gateway

ahr-verify-ctl cert-create-ssc $RUNTIME_SSL_CERT $RUNTIME_SSL_KEY $RUNTIME_HOST_ALIAS

?. Calculate $HYBRID_VERSION_MINOR

export HYBRID_VERSION_MINOR=$(echo -n "$HYBRID_VERSION"|awk '{sub(/\.[0-9]+$/,"");print}')

?. Generate $RUNTIME_CONFIG using template $RUNTIME_TEMPLATE

ahr-runtime-ctl template $RUNTIME_TEMPLATE > $RUNTIME_CONFIG

?. Get Hybrid installation and set up APIGEECTL_HOME in PATH

source <(ahr-runtime-ctl get-apigeectl)

?. Install Hybrid Apigee Controller Manager

ahr-runtime-ctl apigeectl init -f $RUNTIME_CONFIG
ahr-runtime-ctl apigeectl wait-for-ready -f $RUNTIME_CONFIG

NOTE: When automating this process, you need to wait for ~0.5 minutes before running the wait-for-ready command. There is no practical way yet to identify whether the Controllers are deployed.
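In an automation script, the fixed delay from the NOTE above could be combined with a small retry helper. The sketch below is an illustration under assumptions: wait_until is a hypothetical helper that is not part of ahr, and whether wait-for-ready returns a non-zero status when the controllers are not yet deployed is not confirmed here, so the initial sleep is kept in the commented usage.

```shell
#!/usr/bin/env bash
# wait_until: re-run a command until it succeeds or attempts run out.
# Usage: wait_until <attempts> <delay-seconds> <command> [args...]
wait_until() {
  local attempts=$1 delay=$2 i
  shift 2
  for (( i = 1; i <= attempts; i++ )); do
    if "$@"; then
      return 0
    fi
    echo "attempt $i/$attempts failed; sleeping ${delay}s" >&2
    sleep "$delay"
  done
  return 1
}

# Possible usage in an install script (commented out, requires ahr):
#   ahr-runtime-ctl apigeectl init -f "$RUNTIME_CONFIG"
#   sleep 30
#   wait_until 10 15 ahr-runtime-ctl apigeectl wait-for-ready -f "$RUNTIME_CONFIG"

# Harmless demonstration with a command that always succeeds.
wait_until 3 0 true && echo "ready"
```

The helper returns the status of the last attempt, so a calling script can stop the installation when the controllers never become ready.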

?. Deploy Hybrid Runtime using configuration: $RUNTIME_CONFIG

ahr-runtime-ctl apigeectl apply -f $RUNTIME_CONFIG
ahr-runtime-ctl apigeectl wait-for-ready -f $RUNTIME_CONFIG

?. Generate source.env file for R1_CLUSTER

source <(ahr-runtime-ctl get-apigeectl-home $HYBRID_HOME/$APIGEECTL_TARBALL)
ahr-runtime-ctl install-profile small asm-gcp -c source-env

?. Let's deploy a proxy we can use to verify that the 1st region cluster is working correctly

$AHR_HOME/proxies/deploy.sh

?. Execute a test request for the Ping proxy

curl --cacert $RUNTIME_SSL_CERT https://$RUNTIME_HOST_ALIAS/ping -v --resolve "$RUNTIME_HOST_ALIAS:443:$RUNTIME_IP" --http1.1

A successful request should contain:

...

* SSL connection using TLSv1.3 / TLS_AES_256_GCM_SHA384

* ALPN, server accepted to use http/1.1

...

* SSL certificate verify ok.

> GET /ping HTTP/1.1

> Host: test-group-qwiklabs-gcp-03-dcb3442a67a7-us-central1.hybrid-apigee.net

> User-Agent: curl/7.64.0

> Accept: */*

...

< HTTP/1.1 200 OK

...

< server: istio-envoy

<

* Connection #0 to host test-group-qwiklabs-gcp-03-dcb3442a67a7-us-central1.hybrid-apigee.net left intact

pong

Technically, we create a multi-region topology by setting up the first region, then extending the topology to the second region, then the third, etc.

For those regions to communicate correctly, they need to share encryption secrets.

?. Set up session variables for Region 2

export HYBRID_ENV=$HYBRID_HOME/mr-r2-gke-cluster.env
source $HYBRID_ENV

?. Set cluster-r2 as active context

kubectl config use-context $CLUSTER

?. Propagate the encryption secret that Cassandra will use during communication from Region 1 to Region 2

kubectl create namespace cert-manager
kubectl --context=$R1_CLUSTER get secret apigee-ca --namespace=cert-manager -o yaml | kubectl --context=$R2_CLUSTER apply --namespace=cert-manager -f -

?. Install Certificate Manager to cluster-r2

kubectl apply --validate=false -f $CERT_MANAGER_MANIFEST

?. Provision static private IP for Internal Load Balancer for cluster-r2

gcloud compute addresses create runtime-ip \
  --region "$REGION" \
  --subnet "$SUBNETWORK" \
  --purpose SHARED_LOADBALANCER_VIP

?. Fetch the allocated IP into RUNTIME_IP env variable

export RUNTIME_IP=$(gcloud compute addresses describe runtime-ip --region "$REGION" --format='value(address)')

?. Persist RUNTIME_IP to the $HYBRID_ENV file

sed -i -E "s/^(export RUNTIME_IP=).*/\1$RUNTIME_IP/g" $HYBRID_ENV

As the ASM IstioOperator template and env variables are set and are correct [unless you lost your session; then you need to re-initialize it], we can go straight to processing the template, configuring the GKE cluster, and installing ASM.
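Because the next steps silently depend on variables set earlier in the session, a quick guard can tell you whether the session needs to be re-initialized. This is a minimal sketch: require_vars is a hypothetical helper, not an ahr command, and the variable list in the commented usage is only a guess at what the following steps read.

```shell
#!/usr/bin/env bash
# require_vars: report every named variable that is unset or empty.
# Returns 0 when all are set, 1 otherwise.
require_vars() {
  local name missing=0
  for name in "$@"; do
    # ${!name} is bash indirect expansion: the value of the variable named by $name.
    if [ -z "${!name:-}" ]; then
      echo "ERROR: $name is not set" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Possible usage before generating the IstioOperator manifest (commented out):
#   require_vars HYBRID_HOME HYBRID_ENV CLUSTER REGION SUBNETWORK RUNTIME_IP \
#     ASM_CONFIG ASM_VERSION_MINOR || echo "re-source $HYBRID_ENV and retry"

# Harmless demonstration with a variable defined on the spot.
DEMO_VALUE=example
require_vars DEMO_VALUE && echo "all required variables are set"
```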

?. Generate the IstioOperator for cluster_r2 into file $ASM_CONFIG

ahr-cluster-ctl template $HYBRID_HOME/istio-operator-gke-template.yaml > $ASM_CONFIG

?. ASM for GKE requires additional cluster-level configurations. Configure GKE cluster

ahr-cluster-ctl asm-gke-configure "$ASM_VERSION_MINOR"

?. Install ASM/Istio into $CLUSTER

istioctl install -f $ASM_CONFIG

To configure Cassandra for the 2nd region, we nominate a seed host from the first region, then create the Cassandra StatefulSet. Afterwards, we rebuild nodes in the new datacenter and tidy up the config file by removing that temporary seed node property.

?. Set up variables for Cassandra credentials. Those are defaults. Correct as appropriate if you changed the password.

export CS_USERNAME=jmxuser
export CS_PASSWORD=iloveapis123

?. Fetch the status of the Cassandra ring

CS_STATUS=$(kubectl --context $R1_CLUSTER -n apigee exec apigee-cassandra-default-0 -- nodetool -u $CS_USERNAME -pw $CS_PASSWORD status)

Variable CS_STATUS has one or a list of Cassandra nodes. We need to pick up the IP of any of them, for example the first one.
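Picking an address out of nodetool status output can be tried offline before touching the cluster. The sketch below runs against a hard-coded sample in the same layout as nodetool status prints for a single-node dc-1 ring; the sample text and the variable names are assumptions for illustration. It shows a fixed-offset awk extraction (skip five lines after the dc-1 header) and, as an alternative that does not depend on the header line count, a state-based one.

```shell
#!/usr/bin/env bash
# Hypothetical sample of `nodetool status` output for a one-node dc-1 ring.
CS_STATUS_SAMPLE=$(cat <<'EOF'
Datacenter: dc-1
================
Status=Up/Down
|/ State=Normal/Leaving/Joining/Moving
--  Address      Load        Tokens  Owns (effective)  Host ID                               Rack
UN  10.116.0.12  420.28 KiB  256     100.0%            a9f1b84a-ac70-43ef-b17e-25b843d57dd6  ra-1
EOF
)

# Offset-based: the first node line is the fifth line after the dc-1 header.
SEED_BY_OFFSET=$(echo "$CS_STATUS_SAMPLE" \
  | awk '/dc-1/{getline;getline;getline;getline;getline; print $2}')

# State-based: first node reported as Up/Normal (UN) inside datacenter dc-1.
SEED_BY_STATE=$(echo "$CS_STATUS_SAMPLE" \
  | awk '/^Datacenter:/{dc=$2} dc=="dc-1" && $1=="UN"{print $2; exit}')

echo "offset-based: $SEED_BY_OFFSET"
echo "state-based:  $SEED_BY_STATE"
```

Both variants yield the same address for this sample; the state-based one also skips nodes that are down or still joining.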

echo -e "$CS_STATUS"

?. Not a lot of choice in our case, as we have configured a single-node Cassandra ring so far. Let's nominate the first node's IP as the seed one.

export DC1_CS_SEED_NODE=$(echo "$CS_STATUS" | awk '/dc-1/{getline;getline;getline;getline;getline; print $2}')

?. Check it

echo $DC1_CS_SEED_NODE
10.116.0.12

?. Create Key and Self-signed Certificate for Istio Ingress Gateway. This time it's for Region 2

ahr-verify-ctl cert-create-ssc $RUNTIME_SSL_CERT $RUNTIME_SSL_KEY $RUNTIME_HOST_ALIAS

?. Generate $RUNTIME_CONFIG by processing template $RUNTIME_TEMPLATE for Region 2

ahr-runtime-ctl template $RUNTIME_TEMPLATE > $RUNTIME_CONFIG

?. Edit $RUNTIME_CONFIG to add the cassandra.multiRegionSeedHost property.

yq m -i $RUNTIME_CONFIG - <<EOF
cassandra:
  multiRegionSeedHost: $DC1_CS_SEED_NODE
  datacenter: "dc-2"
  rack: "ra-1"
EOF

Observe that besides adding a seed host instruction, we also configure the datacenter and rack properties of the 2nd region Cassandra instance.

?. Install Hybrid Apigee Controller Manager

WARNING: remember about the half-minute delay when using the following lines in an automation scenario.

ahr-runtime-ctl apigeectl init -f $RUNTIME_CONFIG
ahr-runtime-ctl apigeectl wait-for-ready -f $RUNTIME_CONFIG

NOTE: When automating this process, you need to wait for ~0.5 minutes before running the wait-for-ready command. There is no practical way yet to identify whether the Controllers are deployed.

?. Deploy Hybrid Runtime using configuration: $RUNTIME_CONFIG

ahr-runtime-ctl apigeectl apply -f $RUNTIME_CONFIG
ahr-runtime-ctl apigeectl wait-for-ready -f $RUNTIME_CONFIG

?. Run the nodetool status command to verify the health of our two-datacenter Cassandra ring

kubectl --context $R1_CLUSTER -n apigee exec apigee-cassandra-default-0 -- nodetool -u $CS_USERNAME -pw $CS_PASSWORD status

Expected Output:

Datacenter: dc-1

================

Status=Up/Down

|/ State=Normal/Leaving/Joining/Moving

-- Address Load Tokens Owns (effective) Host ID Rack

UN 10.116.0.12 420.28 KiB 256 100.0% a9f1b84a-ac70-43ef-b17e-25b843d57dd6 ra-1

Datacenter: dc-2

================

Status=Up/Down

|/ State=Normal/Leaving/Joining/Moving

-- Address Load Tokens Owns (effective) Host ID Rack

UN 10.76.0.12 130.36 KiB 256 100.0% 1d543816-2e35-4f07-85e4-2a9c6dce69fd ra-1

?. Rebuild node(s) in the new datacenter

kubectl --context $R2_CLUSTER exec apigee-cassandra-default-0 -n apigee -- nodetool -u $CS_USERNAME -pw $CS_PASSWORD rebuild -- dc-1

?. Reset the seed node property in the $RUNTIME_CONFIG file and apply the change to the --datastore component (a.k.a. Apigee Hybrid Cassandra)

yq d -i $RUNTIME_CONFIG cassandra.multiRegionSeedHost
ahr-runtime-ctl apigeectl apply --datastore -f $RUNTIME_CONFIG

?. Execute a test request for the Ping proxy against the Hybrid endpoint in region 2

curl --cacert $RUNTIME_SSL_CERT https://$RUNTIME_HOST_ALIAS/ping -v --resolve "$RUNTIME_HOST_ALIAS:443:$RUNTIME_IP" --http1.1

You should receive pong in the output.

?. Here are the contents of the $HYBRID_HOME directory so far:

~/apigee-hybrid-install$ ls -go

total 52300

drwxr-xr-x 7 4096 Jul 27 18:27 anthos-service-mesh-packages

drwxr-xr-x 7 4096 Jul 27 19:49 apigeectl_1.5.1-64c047b_linux_64

-rw-r--r-- 1 5611185 Jul 27 19:49 apigeectl_linux_64.tar.gz

-rw-r--r-- 1 2175 Jul 27 17:15 cluster-r1.json

-rw-r--r-- 1 2178 Jul 27 17:21 cluster-r2.json

-rw-r--r-- 1 1200 Jul 27 20:53 hybrid-cert-europe-west2.pem

-rw-r--r-- 1 1200 Jul 27 20:00 hybrid-cert-us-central1.pem

-rw------- 1 1704 Jul 27 20:53 hybrid-key-europe-west2.pem

-rw------- 1 1708 Jul 27 20:00 hybrid-key-us-central1.pem

drwxr-x--- 6 4096 Oct 21 2020 istio-1.7.3-asm.6

-rw-r--r-- 1 47857860 Jul 27 18:27 istio-1.7.3-asm.6-linux-amd64.tar.gz

-rw-r--r-- 1 3159 Jul 27 18:16 istio-operator-gke-template.yaml

-rw-r--r-- 1 3296 Jul 27 18:34 istio-operator-r1-gke.yaml

-rw-r--r-- 1 3297 Jul 27 20:39 istio-operator-r2-gke.yaml

-rw-r--r-- 1 607 Jul 27 17:14 mr-gcp-networking.env

-rw-r--r-- 1 2776 Jul 27 19:10 mr-hybrid-common.env

-rw-r--r-- 1 844 Jul 27 18:33 mr-r1-gke-cluster.env

-rw-r--r-- 1 844 Jul 27 20:35 mr-r2-gke-cluster.env

-rw-r--r-- 1 2148 Jul 27 19:11 runtime-sz-r1-gke.yaml

-rw-r--r-- 1 2189 Jul 27 21:09 runtime-sz-r2-gke.yaml

drwx------ 2 4096 Jul 27 17:34 service-accounts

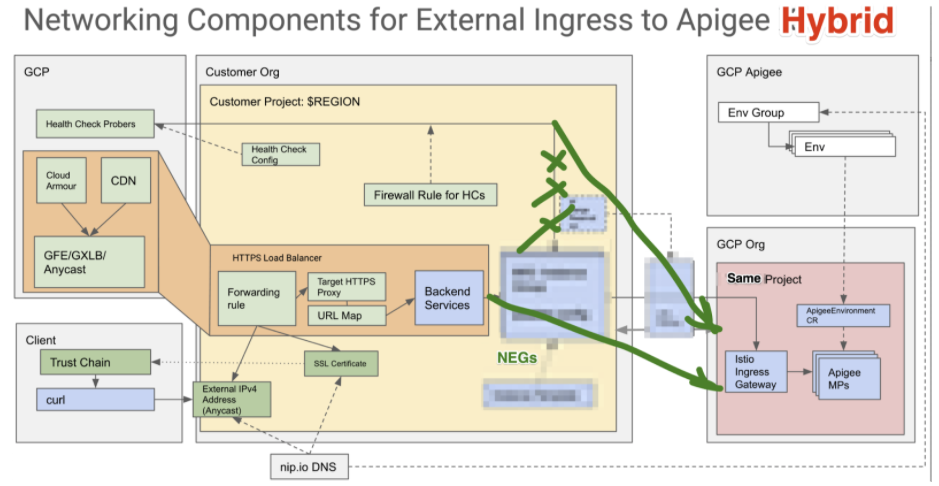

-rw-r--r-- 1 851 Jul 27 20:14 source.envGoogle Load Balancer is a powerful but complex product. Mastering details could be daunting. Versatility of your requirements is one of the reasons why there is no single best implementation scenario. Versatility of G*LB product sets, luckily, allows you to implement any scenario.

In the given scenario assumptions are:

- Google global https external load balancer is used for the benefit of CloudArmour;

- Native GKE/NEG for better performance;

- Explicit Load Balancer configuration is used to front multi-region Hybrid topology.

High-level Installation steps:

- Deploy non-SNI Apigee Route os t TODO: xxxx

Doc REF: https://cloud.google.com/kubernetes-engine/docs/how-to/standalone-neg#create_a_service

?. Source configuration and make $R1_CLUSTER the active context for Region 1

source $HYBRID_HOME/source.env
source $HYBRID_HOME/mr-r1-gke-cluster.env
kubectl config use-context $CLUSTER

?. Extract cluster node tags. We will need them later

export R1_CLUSTER_TAG=$(gcloud compute instances describe "$(kubectl --context $R1_CLUSTER get nodes -o jsonpath='{.items[0].metadata.name}')" --zone $R1_CLUSTER_ZONE --format="value(tags.items[0])")
export R2_CLUSTER_TAG=$(gcloud compute instances describe "$(kubectl --context $R2_CLUSTER get nodes -o jsonpath='{.items[0].metadata.name}')" --zone $R2_CLUSTER_ZONE --format="value(tags.items[0])")
export CLUSTER_NETWORK_TAGS=$R1_CLUSTER_TAG,$R2_CLUSTER_TAG

?. Define firewall for Google Health probers

gcloud compute firewall-rules create fw-allow-health-check-and-proxy-hybrid \
  --network=$NETWORK \
  --action=allow \
  --direction=ingress \
  --target-tags=$CLUSTER_NETWORK_TAGS \
  --source-ranges=130.211.0.0/22,35.191.0.0/16 \
  --rules=tcp:8443

This operation will take time, up to 60 minutes. Yet we don't need to wait till it finishes. We can continue with the rest of the setup.

?. Provision GTM's VIP:

export GTM_VIP=hybrid-vip
gcloud compute addresses create $GTM_VIP \
  --ip-version=IPV4 \
  --global

?. We are going to use the nip.io proof-of-ownership service for our provisioned certificate.

export GTM_IP=$(gcloud compute addresses describe $GTM_VIP --format="get(address)" --global --project "$PROJECT")
echo $GTM_IP
export GTM_HOST_ALIAS=$(echo "$GTM_IP" | tr '.' '-').nip.io
echo "INFO: External IP: Create Google Managed SSL Certificate for FQDN: $GTM_HOST_ALIAS"

?. Add $GTM_HOST_ALIAS to the $ENV_GROUP

HOSTNAMES=$(ahr-runtime-ctl env-group-config $ENV_GROUP|jq '.hostnames' | jq '. + ["'$GTM_HOST_ALIAS'"]'|jq -r '. | join(",")' )
ahr-runtime-ctl env-group-set-hostnames "$ENV_GROUP" "$HOSTNAMES"

?. Provision Google-managed certificate

gcloud compute ssl-certificates create apigee-ssl-cert \
  --domains="$GTM_HOST_ALIAS" --project "$PROJECT"

?. TCP Health Check configuration

gcloud compute health-checks create tcp https-basic-check \
  --use-serving-port

?. Backend Service configuration

gcloud compute backend-services create hybrid-bes \
  --port-name=https \
  --protocol HTTPS \
  --health-checks https-basic-check \
  --global

?. URL map

gcloud compute url-maps create hybrid-web-map \
  --default-service hybrid-bes

?. Target https proxy

gcloud compute target-https-proxies create hybrid-https-lb-proxy \
  --ssl-certificates=apigee-ssl-cert \
  --url-map hybrid-web-map

?. Forwarding Rule

gcloud compute forwarding-rules create hybrid-https-forwarding-rule \
  --address=$GTM_VIP \
  --global \
  --target-https-proxy=hybrid-https-lb-proxy \
  --ports=443

?. Define a secret for non-SNI ApigeeRoute

export TLS_SECRET=$ORG-$ENV_GROUP

?. Manifest for apigee-route-non-sni ApigeeRoute

Doc REF: https://cloud.google.com/apigee/docs/hybrid/v1.5/enable-non-sni

cat <<EOF > $HYBRID_HOME/apigeeroute-non-sni.yaml
apiVersion: apigee.cloud.google.com/v1alpha1
kind: ApigeeRoute
metadata:
  name: apigee-route-non-sni
  namespace: apigee
spec:
  enableNonSniClient: true
  hostnames:
  - "*"
  ports:
  - number: 443
    protocol: HTTPS
    tls:
      credentialName: $TLS_SECRET
      mode: SIMPLE
      minProtocolVersion: TLS_AUTO
  selector:
    app: istio-ingressgateway
EOF

kubectl apply -f $HYBRID_HOME/apigeeroute-non-sni.yaml

?. Reconfigure virtual host in Region 1 with .virtualhosts.additionalGateways array

yq merge -i $RUNTIME_CONFIG - <<EOF
virtualhosts:
- name: $ENV_GROUP
  additionalGateways: ["apigee-route-non-sni"]
EOF

ahr-runtime-ctl apigeectl apply -f $RUNTIME_CONFIG --settings virtualhosts --env $ENV

?. Define region's NEG name

export NEG_NAME=hybrid-neg-$REGION

?. Edit istio operator to add NEG annotation

yq merge -i $ASM_CONFIG - <<EOF
spec:
  components:
    ingressGateways:
      - name: istio-ingressgateway
        k8s:
          serviceAnnotations:
            cloud.google.com/neg: '{"exposed_ports": {"443":{"name": "$NEG_NAME"}}}'
EOF

istioctl install -f $ASM_CONFIG

?. Choose your capacity setting wisely

export MAX_RATE_PER_ENDPOINT=100

?. Add region 1 backend to the load balancer

gcloud compute backend-services add-backend hybrid-bes --global \
  --network-endpoint-group $NEG_NAME \
  --network-endpoint-group-zone $CLUSTER_ZONE \
  --balancing-mode RATE --max-rate-per-endpoint $MAX_RATE_PER_ENDPOINT

?. Switch current env variable config to region 2

source $HYBRID_HOME/mr-r2-gke-cluster.env
kubectl config use-context $CLUSTER

?. Define region's NEG name

export NEG_NAME=hybrid-neg-$REGION

?. Edit istio operator to add NEG annotation

yq merge -i $ASM_CONFIG - <<EOF
spec:
  components:
    ingressGateways:
      - name: istio-ingressgateway
        k8s:
          serviceAnnotations:
            cloud.google.com/neg: '{"exposed_ports": {"443":{"name": "$NEG_NAME"}}}'
EOF

istioctl install -f $ASM_CONFIG

?. Create non-SNI ApigeeRoute

kubectl apply -f $HYBRID_HOME/apigeeroute-non-sni.yaml

?. Add additionalGateways value to the virtual host $ENV_GROUP

yq merge -i $RUNTIME_CONFIG - <<EOF
virtualhosts:
- name: $ENV_GROUP
  additionalGateways: ["apigee-route-non-sni"]
EOF

ahr-runtime-ctl apigeectl apply -f $RUNTIME_CONFIG --settings virtualhosts --env $ENV

?. Add region 2 backend to the load balancer

gcloud compute backend-services add-backend hybrid-bes --global \
  --network-endpoint-group $NEG_NAME \
  --network-endpoint-group-zone $CLUSTER_ZONE \
  --balancing-mode RATE --max-rate-per-endpoint $MAX_RATE_PER_ENDPOINT

?. After the certificate is finally provisioned, we can send a test request from anywhere to call our API Proxy

curl https://$GTM_HOST_ALIAS/ping

?. Verify svc, svcneg and/or SNEG configuration

kubectl get svc istio-ingressgateway -o yaml -n istio-system |less
kubectl get svcneg $NEG_NAME -n istio-system -o yaml |less
kubectl describe service istio-ingressgateway -n istio-system |less
kubectl get ServiceNetworkEndpointGroup $NEG_NAME -n istio-system -o yaml |less

?. Inspect NEGs directly

gcloud compute network-endpoint-groups list
gcloud compute network-endpoint-groups list-network-endpoints $NEG_NAME --zone $CLUSTER_ZONE

?. Get NEG name and location

gcloud compute network-endpoint-groups list
gcloud compute backend-services describe hybrid-bes --global

?. Check the health of the individual endpoints:

gcloud compute backend-services get-health hybrid-bes --global

?. My favorite D&T container

kubectl run -it --rm --restart=Never multitool --image=praqma/network-multitool -- sh

?. Non-SNI call to a NEG endpoint

curl https://$GTM_HOST_ALIAS/ping -k -v -H "Host: 34-149-100-82.nip.io"

?. Poor man's performance load-testing tool

for i in {1..100}; do curl https://$GTM_HOST_ALIAS/ping; done

Install script expects AHR to be installed and PROJECT variable to be configured.

# Configure PROJECT variable [for qwiklabs; edit as appropriate otherwise]

export PROJECT=$(gcloud projects list|grep qwiklabs-gcp|awk '{print $1}')

# Clone ahr and source ahr environment

cd ~

git clone https://github.com/apigee/ahr.git

export AHR_HOME=~/ahr

export PATH=$AHR_HOME/bin:$PATH

# Install yq binary into a ~/bin directory

$AHR_HOME/bin/ahr-verify-ctl prereqs-install-yq

source ~/.profile

# Create a project directory:

export HYBRID_HOME=~/apigee-hybrid-install

mkdir -p $HYBRID_HOME

# Prepare config files

cp -R $AHR_HOME/examples-mr-gcp/. $HYBRID_HOME

# Execute install scripts

cd $HYBRID_HOME

time ./install-mr-gcp.sh |& tee mr-gcp-install-`date -u +"%Y-%m-%dT%H:%M:%SZ"`.log

time ./install-mr-gtm.sh |& tee mr-gtm-install-`date -u +"%Y-%m-%dT%H:%M:%SZ"`.log

[ ] clear setsync

[ ] remove SAs

[ ] delete clusters

[ ] remove PVCs

(

source $HYBRID_HOME/dc1-cluster-l-1.1.0.sh

source <(ahr-runtime-ctl home)

ahr-cluster-ctl delete

)

(

source $HYBRID_HOME/dc2-cluster-l-1.1.0.sh

source <(ahr-runtime-ctl home)

ahr-cluster-ctl delete

)

;;;;;;;;;; '' ;;; scratch pad for custom network explicit subnet allocation '''

?. Calculate CIDR allocations for pods and services Secondary Ranges

Region: us-central1 default 10.128.0.0/20

Subnet: 10.128.0.0/20

first last Count

10.128.0.0 10.128.15.255 4,096

Services: 10.128.240.0/20

10.128.240.0 10.128.255.255 4,096

Pods: 10.129.0.0/16

10.129.0.0 10.129.255.255 65,536

Region: us-west2 default 10.168.0.0/20

Services: 10.168.240.0/20

10.168.240.0 10.168.255.255 4,096

Pods: 10.169.0.0/16

10.169.0.0 10.169.255.255 65,536

>> common

## for default/default, us-central1: 10.128.0.0/20

#export R1_SUBNET_PODS=$SUBNETWORK-pods

#export R1_SUBNET_PODS_CIDR=10.129.0.0/16

#export R1_SUBNET_SERVICES=$SUBNETWORK-services

#export R1_SUBNET_SERVICES_CIDR=10.128.240.0/20

# for default/default, europe-west2: 10.168.0.0/20

#export R2_SUBNET_PODS=$SUBNETWORK-pods

#export R2_SUBNET_PODS_CIDR=10.169.0.0/16

#export R2_SUBNET_SERVICES=$SUBNETWORK-services

#export R2_SUBNET_SERVICES_CIDR=10.168.240.0/20

> r1

#export SUBNET_PODS=$R1_SUBNET_PODS

#export SUBNET_PODS_CIDR=$R1_SUBNET_PODS_CIDR

#export SUBNET_SERVICES=$R1_SUBNET_SERVICES

#export SUBNET_SERVICES_CIDR=$R1_SUBNET_SERVICES_CIDR

r2

#export SUBNET_PODS=$R2_SUBNET_PODS

#export SUBNET_PODS_CIDR=$R2_SUBNET_PODS_CIDR

#export SUBNET_SERVICES=$R2_SUBNET_SERVICES

#export SUBNET_SERVICES_CIDR=$R2_SUBNET_SERVICES_CIDR

?. Source hybrid environment configuration where CLUSTER_TEMPLATE is defined.

source $HYBRID_HOME/mr-hybrid-common.env

?. Generate cluster config for cluster-r1 in region 1 using cluster template

ahr-cluster-ctl template $CLUSTER_TEMPLATE > $CLUSTER_CONFIG

?. Duplicate the provided $CLUSTER_TEMPLATE into the $HYBRID_HOME/cluster_template.yaml file while adding a secondary ranges template fragment to the .cluster.ipAllocationPolicy stanza to define secondary ranges explicitly.

NOTE: We need to edit the original provided template and add the following lines with env variable references in the .cluster.ipAllocationPolicy stanza after the "useIpAliases": true, line.

"clusterSecondaryRangeName": "$SUBNET_PODS",
"servicesSecondaryRangeName": "$SUBNET_SERVICES"

Of course, you can always use an editor of your choice to do this manipulation, but in this wiki we always stay automation-conscious. Therefore the following snippet uses the CLI (awk/bash) to achieve the same effect.

export SECONDARY_RANGES=$( cat <<"EOT"
"clusterSecondaryRangeName": "$SUBNET_PODS",
"servicesSecondaryRangeName": "$SUBNET_SERVICES"
EOT
)

awk -v ranges="$SECONDARY_RANGES" '//;/useIpAliases/{print ranges}' $CLUSTER_TEMPLATE > $HYBRID_HOME/cluster_template.yaml

?. Verify that $HYBRID_HOME/cluster_template.yaml contains the added lines in the correct position.
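The injection technique can be rehearsed on a throwaway file before applying it to the real template. The sketch below is an illustration under assumptions: the sample template fragment is hypothetical (the real $CLUSTER_TEMPLATE is larger), and the fragment is passed to awk through ENVIRON rather than -v, a variant chosen here because some awk implementations reject a literal newline in a -v value.

```shell
#!/usr/bin/env bash
# Offline rehearsal of the secondary-range injection on a sample file.
workdir=$(mktemp -d)

# Hypothetical template fragment, for illustration only.
cat > "$workdir/sample_template.json" <<'EOT'
{
  "cluster": {
    "ipAllocationPolicy": {
      "useIpAliases": true,
    }
  }
}
EOT

# Quoted EOT keeps the $SUBNET_* references unexpanded, as the template expects.
SECONDARY_RANGES=$(cat <<"EOT"
"clusterSecondaryRangeName": "$SUBNET_PODS",
"servicesSecondaryRangeName": "$SUBNET_SERVICES"
EOT
)

# Print every line; right after the useIpAliases line also print the fragment.
RANGES="$SECONDARY_RANGES" awk '{print} /useIpAliases/{print ENVIRON["RANGES"]}' \
  "$workdir/sample_template.json" > "$workdir/cluster_template.json"

# Show the anchor line together with the two injected lines.
grep -A2 'useIpAliases' "$workdir/cluster_template.json"
```

Running the same grep against $HYBRID_HOME/cluster_template.yaml is one way to perform the verification step above. Whether the result is valid JSON depends on the commas in the actual template, which this sketch does not check.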

?. Source 1st region configuration into the current terminal session

source $HYBRID_HOME/mr-r1-gke-cluster.env

?. Generate cluster config file from a cluster template using environment variables.

ahr-cluster-ctl template $HYBRID_HOME/cluster_template.yaml > $CLUSTER_CONFIG

You can inspect the cluster config file using the vi $CLUSTER_CONFIG command

?. Create secondary subnets for pods and services

gcloud compute networks subnets update $SUBNETWORK \
  --region=$REGION \
  --add-secondary-ranges=$SUBNET_PODS=$SUBNET_PODS_CIDR,$SUBNET_SERVICES=$SUBNET_SERVICES_CIDR

?. Create the GKE cluster

ahr-cluster-ctl create

?. S
