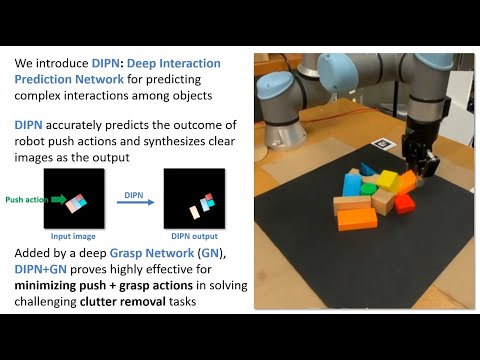

We propose a Deep Interaction Prediction Network (DIPN) for learning to predict complex interactions that ensue as a robot end-effector pushes multiple objects, whose physical properties, including size, shape, mass, and friction coefficients, may be unknown a priori. DIPN "imagines" the effect of a push action and generates an accurate synthetic image of the predicted outcome. DIPN is shown to be sample efficient when trained in simulation or with a real robotic system. The high accuracy of DIPN allows direct integration with a Grasp Network (GN), yielding a robotic manipulation system capable of executing challenging clutter removal tasks while being trained in a fully self-supervised manner. The overall network demonstrates intelligent behavior in selecting proper actions between push and grasp for completing clutter removal tasks and significantly outperforms the previous state-of-the-art VPG.

Videos:

Paper: https://arxiv.org/abs/2011.04692

Citation: If you use this code in your research, please cite the paper:

@inproceedings{huang2020dipn,
  title={Dipn: Deep interaction prediction network with application to clutter removal},
  author={Huang, Baichuan and Han, Shuai D and Boularias, Abdeslam and Yu, Jingjin},
  booktitle={2021 IEEE International Conference on Robotics and Automation (ICRA)},
  year={2021}
}

Demo videos of a real robot in action can be found here (no editing).

This implementation requires the following dependencies (tested on Ubuntu 18.04.5 LTS, GTX 2080 Ti / GTX 1070):

- Python 3

- NumPy, SciPy, OpenCV-Python, Matplotlib, PyTorch==1.6.0, torchvision==0.7.0, Shapely, Tensorboard, Pillow, imutils, scikit-image, pycocotools (use pip or conda to install)

conda create --name dipn python=3.8.5

conda activate dipn

pip install matplotlib numpy scipy pillow scikit-image opencv-python shapely tensorboard imutils pycocotools

conda install pytorch==1.6.0 torchvision==0.7.0 cudatoolkit=10.2 -c pytorch

Conda failed to resolve dependency conflicts, so we used pip. The code was also tested on Windows (GTX 2070) with the pre-trained models; it works as long as you change the model paths to Windows syntax. On Windows, replace pip install pycocotools with pip install pycocotools-windows.

- CoppeliaSim, the simulation environment

- GPU, 8GB memory is tested.
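The Windows note above mentions changing model paths to Windows syntax. One way to avoid editing separators by hand is to build paths with pathlib. The sketch below is only an illustration, not code from this repository: the helper name model_path is hypothetical, and the example path is the pre-trained Mask R-CNN location listed later in this README.

```python
from pathlib import PurePosixPath, PureWindowsPath


def model_path(relative: str, windows: bool) -> str:
    """Render a forward-slash relative path in the syntax of the target OS.

    `relative` uses forward slashes, as the paths in this README do.
    """
    parts = PurePosixPath(relative).parts
    path_cls = PureWindowsPath if windows else PurePosixPath
    return str(path_cls(*parts))


if __name__ == "__main__":
    rel = "logs/real-data/data/maskrcnn.pth"
    print(model_path(rel, windows=False))
    print(model_path(rel, windows=True))
```

In a script that runs on the target machine, pathlib.Path picks the right flavor automatically, so the explicit windows switch is only needed for illustration.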

Note: Pre-training the Grasp Network requires pytorch==1.5.1 and torchvision==0.6.1 because of a bug in torchvision.
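Because two different PyTorch/torchvision pairs are needed, it can help to check which versions are active before starting a step. The following is a minimal sketch using only the standard library; it is not part of the repository, the names DEFAULT_PINS, PRETRAIN_PINS, and check_pins are made up for illustration, and it assumes the packages are registered under the distribution names torch and torchvision.

```python
from importlib import metadata

# Version pins taken from this README; grasp pre-training needs the older pair.
DEFAULT_PINS = {"torch": "1.6.0", "torchvision": "0.7.0"}
PRETRAIN_PINS = {"torch": "1.5.1", "torchvision": "0.6.1"}


def installed_version(package):
    """Return the installed version string, or None if the package is missing."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


def check_pins(pins, lookup=installed_version):
    """Return {package: (wanted, found)} for every package that does not match."""
    mismatches = {}
    for package, wanted in pins.items():
        found = lookup(package)
        # Ignore local build tags such as "+cu102" when comparing.
        if found is None or found.split("+")[0] != wanted:
            mismatches[package] = (wanted, found)
    return mismatches


if __name__ == "__main__":
    for name, (wanted, found) in check_pins(PRETRAIN_PINS).items():
        print(f"{name}: need {wanted}, found {found}")
```

An empty result from check_pins means the active environment matches the requested pins.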

- Download this repo

git clone https://github.com/rutgers-arc-lab/dipn.git

- Download models (download folders and unzip) from Google Drive and put them in the dipn folder

- Navigate to the folder which contains CoppeliaSim and run

bash coppeliaSim.sh ~/dipn/simulation/simulation_new_4.1.0.ttt

- Run

bash run.sh

Data from each test case will be saved into a session directory in the logs folder. To report the average testing performance over a session, run the following:

python utils/evaluate.py --session_directory 'logs/YOUR-SESSION-DIRECTORY-NAME-HERE' --method 'reinforcement' --num_obj_complete N

We used the simulation to generate two types of data: scenes with randomly dropped objects, and randomly generated "hard" cases.

The "hard" cases are mainly used for fine-tuning, so that Mask R-CNN can distinguish packed objects of the same color.

random-maskrcnn and random-maskrcnn-hard contain objects whose shapes and colors differ from those of the final objects used for training and evaluation.

python train_maskrcnn.py --dataset_root 'logs/random-maskrcnn/data'

cp logs/random-maskrcnn/data/maskrcnn.pth logs/random-maskrcnn-hard/data

python train_maskrcnn.py --dataset_root 'logs/random-maskrcnn-hard/data' --epochs 10 --resume

We count the actions in the push dataset as training actions; we used 1500 actions, hence --cutoff 15.

To mimic the real-world setup, for each 10 cm push we used only the first 5 cm (0 to 5 cm) to train the model.

The random dataset could also be used. It gives slightly worse prediction accuracy, but it requires no prior knowledge about the objects, and because it is collected in simulation, we can generate as much data as we want for free.
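The idea of training on only the 0 to 5 cm portion of a 10 cm push can be pictured with a small sketch. This is an illustration under assumptions, not the repository's data format: the function name truncate_push and the (distance_cm, observation) sample layout are hypothetical.

```python
def truncate_push(samples, max_distance_cm=5.0):
    """Keep only the samples recorded within the first `max_distance_cm` of a push.

    `samples` is a list of (distance_cm, observation) pairs; this layout is
    hypothetical and only serves the illustration.
    """
    return [(d, obs) for d, obs in samples if 0.0 <= d <= max_distance_cm]


if __name__ == "__main__":
    # Placeholder observations recorded along one 10 cm push.
    push = [(0.0, "img_0"), (2.5, "img_1"), (5.0, "img_2"), (7.5, "img_3"), (10.0, "img_4")]
    print(truncate_push(push))
```

In the training command, the corresponding setting is the --distance 5 flag.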

python train_push_prediction.py --dataset_root 'logs_push/push/data' --distance 5 --epoch 50 --cutoff 15 --batch_size 8

Switch to pytorch==1.5.1 and torchvision==0.6.1.

random-pretrain contains objects whose shapes and colors differ from those of the final objects used for training and evaluation.

random-pretrain could be replaced with random-maskrcnn; they are collected in essentially the same way.

You may need to restart the training several times until the loss goes down to around 60.
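The restart advice above could be automated along these lines. This is a sketch only: train_once is a hypothetical callable that runs one complete training attempt and returns its final loss, and the threshold of 60 comes from the sentence above; the repository's training script does not necessarily expose such a function.

```python
def train_until(train_once, target_loss=60.0, max_restarts=5):
    """Call `train_once()` until it returns a loss at or below `target_loss`.

    `train_once` is a hypothetical callable that runs one full training
    attempt and returns its final loss. Returns (attempts_used, best_loss).
    """
    best = float("inf")
    for attempt in range(1, max_restarts + 1):
        loss = train_once()
        best = min(best, loss)
        if loss <= target_loss:
            return attempt, best
    return max_restarts, best


if __name__ == "__main__":
    # Stand-in for real training runs: three attempts with made-up losses.
    fake_losses = iter([120.0, 95.0, 58.0])
    print(train_until(lambda: next(fake_losses)))
```

Capping the number of restarts keeps the loop from running indefinitely if the loss never reaches the target.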

python train_foreground.py --dataset_root 'logs/random-pretrain/data'

To use DIPN+GN, we just need to train the Grasp Network in simulation.

python main.py --is_sim --grasp_only --experience_replay --explore_rate_decay --save_visualizations --load_snapshot --snapshot_file 'logs/random-pretrain/data/foreground_model.pth'

The following command trains DQN grasp and push at the same time, which is one of the baselines in the paper.

python main.py --is_sim --push_rewards --experience_replay --explore_rate_decay --save_visualizations --load_snapshot --snapshot_file 'logs/random-pretrain/data/foreground_model.pth'

The dataset we used can be downloaded from Google Drive.

The Push prediction related files are located in logs_push

The Mask R-CNN, Grasp Network related files are located in logs

Collect push prediction data (only for sim now).

python collect_data.py --is_sim

Collect the dataset for Mask R-CNN and pre-training; --is_mask collects data for Mask R-CNN.

You need to drag the Box object to the center in CoppeliaSim.

python create_mask_rcnn_image.py --is_sim --is_mask

To create a dataset like random-maskrcnn-hard, first run python utils/generate_hard_case.py, then

python create_mask_rcnn_image.py --is_sim --is_mask --test_preset_cases

To design your own challenging test case:

- Open the simulation environment in CoppeliaSim (navigate to your CoppeliaSim directory and run bash coppeliaSim.sh ~/dipn/simulation/simulation_new_4.1.0.ttt).

- In another terminal window, navigate to this repository and run the following:

python create.py

- In the CoppeliaSim window, use the CoppeliaSim toolbar (object shift/rotate) to move objects to the desired positions and orientations.

- In the terminal window, type the name of the text file in which to save the test case, then press Enter.

- Try it out: run a trained model on the test case by running push_main.py just as in the demo, but with the flag --test_preset_file pointing to the location of your test case text file.
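As a rough illustration of the last step, the command line could be assembled as below. The flags are the ones that appear in this README; the helper name build_test_command and both file paths are hypothetical, and the exact flag set used by the demo (for example whether a simulation flag is also required) is not confirmed here.

```python
import shlex


def build_test_command(preset_file, snapshot_file):
    """Assemble the argv list for running push_main.py on a saved test case."""
    return [
        "python", "push_main.py",
        "--save_visualizations",
        "--is_testing",
        "--load_snapshot",
        "--snapshot_file", snapshot_file,
        "--test_preset_file", preset_file,
    ]


if __name__ == "__main__":
    # Both paths are placeholders; substitute your own files.
    argv = build_test_command("my-test-case.txt", "path/to/snapshot.pth")
    print(" ".join(shlex.quote(a) for a in argv))
```

Building the command as a list keeps paths with spaces intact if it is later passed to subprocess.run.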

Change is_real in constants.py to True

- Download and install librealsense SDK 2.0

- Download the chilitags library, replace chilitags/samples/detection/detect-from-file.cpp with TODO, and compile. Replace real/detect-from-live with the compiled detect-from-file executable.

- Generate 4 chilitag markers and paste them outside of the workspace but inside the camera's view. Put the tag info and their center 2D positions in the robot's frame into the tag_loc_robot variables.
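Since the markers must sit outside the workspace, a quick sanity check on the entered tag positions may catch mistakes early. The sketch below is hypothetical throughout: the dictionary layout (tag id mapped to an (x, y) center in the robot's frame), the workspace limits, and all numbers are invented for illustration and do not reflect the actual tag_loc_robot format in the code.

```python
def tags_inside_workspace(tag_centers, x_range, y_range):
    """Return the ids of tags whose centers fall inside the workspace rectangle.

    `tag_centers` maps a tag id to its (x, y) center in the robot's frame.
    An empty result means every tag lies outside the workspace, as required.
    """
    (x_min, x_max), (y_min, y_max) = x_range, y_range
    return sorted(
        tag_id
        for tag_id, (x, y) in tag_centers.items()
        if x_min <= x <= x_max and y_min <= y <= y_max
    )


if __name__ == "__main__":
    # Made-up tag centers and workspace limits, in meters.
    tags = {0: (-0.80, -0.30), 1: (-0.80, 0.30), 2: (-0.20, -0.30), 3: (-0.50, 0.00)}
    print(tags_inside_workspace(tags, x_range=(-0.72, -0.28), y_range=(-0.22, 0.22)))
```

With these invented numbers, tag 3 would be flagged because its center lies inside the workspace rectangle.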

The workflow is the same as in the simulation. The software version of UR5e is URSoftware 5.1.1.

- Use touch.py and debug.py to test the robot first.

- Download the dataset from Google Drive.

- Put the downloaded folders into dipn. Change the model paths in push_main.py, lines 83 and 88. The pre-trained DIPN is in logs_push/push/data/15_push_prediction_model.pth; the Mask R-CNN is in logs/real-data/data/maskrcnn.pth.

- Place your objects on the workspace.

- Run

python push_main.py --save_visualizations --is_testing --load_snapshot --snapshot_file 'logs/real-GN/model/models/snapshot-000500.reinforcement.pth'

- Fine-tune the Mask R-CNN with the real data in real-data.

- Fine-tune the pre-training of the Grasp Network with the real data in real-data.

- Train the Grasp Network with the real robot.

- Use touch.py to test the calibrated camera extrinsics: it provides a UI where the user can click a point on the RGB-D image, and the robot moves its end-effector to the 2D location of that point.

- Use debug.py to test robot communication and primitive actions.

- Use utils/range-detector.py to find the color range.

- Use utils/mask-auto-annotate.py to automatically annotate objects by color.
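To give a feel for what annotating by color range means, here is a dependency-free sketch of HSV range thresholding. It is not the code in utils/range-detector.py or utils/mask-auto-annotate.py (those are likely built on OpenCV); the function name color_mask, the image layout, and the example bounds are assumptions made for illustration.

```python
import colorsys


def color_mask(image, lower_hsv, upper_hsv):
    """Return a 0/1 mask of pixels whose HSV value lies within the given range.

    `image` is a list of rows of (r, g, b) tuples with channels in 0..255.
    The bounds are (h, s, v) tuples with every component in 0..1.
    """
    mask = []
    for row in image:
        mask_row = []
        for r, g, b in row:
            hsv = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
            inside = all(lo <= c <= hi for lo, c, hi in zip(lower_hsv, hsv, upper_hsv))
            mask_row.append(1 if inside else 0)
        mask.append(mask_row)
    return mask


if __name__ == "__main__":
    # A tiny 2x2 image: red, green, blue, and a slightly darker red.
    image = [[(255, 0, 0), (0, 255, 0)], [(0, 0, 255), (250, 10, 10)]]
    print(color_mask(image, lower_hsv=(0.0, 0.5, 0.5), upper_hsv=(0.05, 1.0, 1.0)))
```

Note that red hues wrap around the end of the hue axis, so a real red range usually needs a second interval near 1.0 as well.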

This code builds on https://github.com/andyzeng/visual-pushing-grasping.

The code contains DIPN and DQN + GN.