
WIP/Feedback: X-Ray daemon & service integrations. #737

Conversation


Hey! Thanks for sending this pull request :) Definitely an interesting feature to surface to customers. We will review the code shortly and help you with open questions.

@Tanbouz Awesome! I am going to start looking through this PR now. We appreciate the time you have put into this! Would you be willing to create a design doc for this? It will help capture the change and give everyone a way to digest what is going on. We are trying to do this for all new features.

@Tanbouz I am having a hard time understanding the use-case for supporting AWS X-Ray locally. X-Ray is great for profiling your production (even pre-production) Lambda Functions but why would you want to profile locally? What is the benefit of doing this locally vs doing this on the environment directly (aka AWS Lambda)? I think a design doc for this would go a long way. Good questions to cover:

@thesriram noticed that AWS X-Ray only supports tracing for applications that are written in Node.js, Java, and .NET (https://aws.amazon.com/xray/features/). This leaves out many other languages that we currently support.

First of all, sorry, I had an incident updating my branch from upstream. Design doc 👍, just to quickly answer these for now...

Who would this be valuable to?

@jfuss I'm curious too about other use cases in #217.

How would this be valuable to customers?

My own use case in bold:

(-1 for PR) X-Ray is already awesome and supports patching HTTP clients or SQL connectors, which I guess doesn't require much work from a developer or require SAM supporting X-Ray locally during development.

(+1 for PR) My intent was not to profile locally but to develop custom X-Ray subsegments that wrap critical parts of my code that I would like to profile later when it goes into production.

How does this work with Lambda to Lambda calls locally?

If we decide to move forward, it is something I would like to explore along with supporting it across SAM CLI (single lambda invokes, etc.), not only in

Supported Languages:

@thesriram Not sure why that document mentions only 3 languages. Conflicts with:

I'm also testing against a live Python Lambda function with active tracing enabled.

The team appreciates the time and effort you put into this @Tanbouz. At this time, we want to find a better way to support X-Ray related use cases. The team understands this is a customer pain point in developing and running locally but we want to ensure we can maintain the solution (keeping this updated as X-Ray updates, performance impacts with needing to start multiple containers, etc). Please add further thoughts, ideas, use-cases in the tracking issue: #217

Issue: [Feature Request] Add support for X-Ray on SAM Local #217

This does not replicate AWS X-Ray service itself. It attempts to:

The X-Ray segments will still be emitted to your AWS account if your AWS credentials allow it. Better to use a non-production AWS account!
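For readers unfamiliar with how segments reach the daemon, here is a minimal sketch of the datagram an SDK hands to it, assuming the daemon's documented defaults (UDP on 127.0.0.1:2000, a one-line JSON header followed by the segment document). It is not code from this PR: `buildSegment`, `toDatagram`, and `sendToDaemon` are hypothetical helper names, and only the minimal segment fields are filled in.

```javascript
// Sketch only: builds the UDP datagram an X-Ray SDK would hand to the daemon.
// Helper names below are hypothetical and are not part of this PR or the SDK.
const crypto = require('crypto');
const dgram = require('dgram');

// Daemon protocol header; it is followed by a newline and the segment document.
const DAEMON_HEADER = JSON.stringify({ format: 'json', version: 1 });

// Trace IDs look like 1-<8 hex digits of epoch seconds>-<24 random hex digits>.
function newTraceId(nowMs = Date.now()) {
  const epochHex = Math.floor(nowMs / 1000).toString(16).padStart(8, '0');
  return `1-${epochHex}-${crypto.randomBytes(12).toString('hex')}`;
}

// Minimal completed segment: name, 16-hex-digit id, trace id, start and end times.
function buildSegment(name, startMs, endMs) {
  return {
    name,
    id: crypto.randomBytes(8).toString('hex'),
    trace_id: newTraceId(startMs),
    start_time: startMs / 1000, // seconds since epoch, fractional
    end_time: endMs / 1000,
  };
}

// Header line + "\n" + segment JSON, encoded as bytes.
function toDatagram(segment) {
  return Buffer.from(`${DAEMON_HEADER}\n${JSON.stringify(segment)}`);
}

// Fire-and-forget send; host and port are assumed daemon defaults.
function sendToDaemon(segment, host = '127.0.0.1', port = 2000) {
  const socket = dgram.createSocket('udp4');
  socket.send(toDatagram(segment), port, host, () => socket.close());
}
```

Calling `sendToDaemon(buildSegment('Interceptor', Date.now() - 50, Date.now()))` against a running daemon would forward the segment to whichever AWS account the daemon's credentials resolve to, which is why a non-production account is the safer choice.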

Description of changes:

--xray -x only in start-api) an X-Ray daemon service in a docker container alongside other invoked Lambdas.

AWS::Lambda and AWS::Lambda::Function.

_X_AMZN_TRACE_ID and let segment = new AWSXRay.getSegment() for example)

_X_AMZN_TRACE_ID to be available inside Lambda in all runtimes. Fix only applied to nodejs8.10 for testing Tanbouz/docker-lambda@4328937

Tracing setting.

Sample

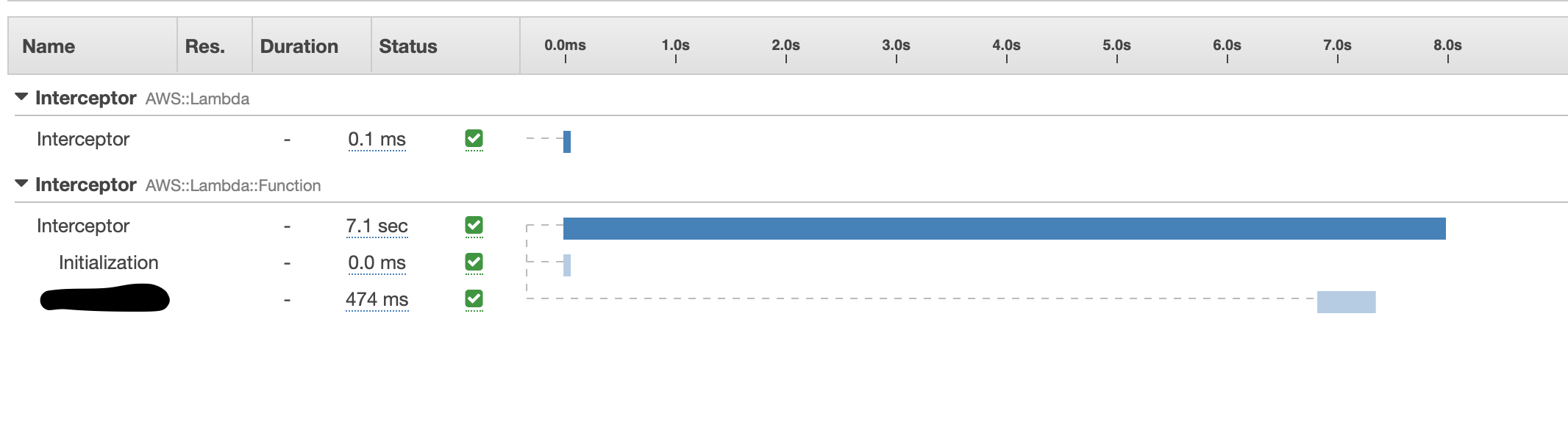

X-Ray segments submitted from SAM CLI.
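Segments and subsegments end up in one trace because they share the trace context carried in the `_X_AMZN_TRACE_ID` environment variable. As a rough illustration of what that variable holds, the sketch below parses the standard X-Ray tracing header format (`Root=...;Parent=...;Sampled=...`). `parseTraceHeader` and `currentTraceContext` are hypothetical names for illustration; the X-Ray SDK does its own parsing internally and this is not how the PR implements it.

```javascript
// Sketch only: parses an X-Ray tracing header such as
// "Root=1-5759e988-bd862e3fe1be46a994272793;Parent=53995c3f42cd8ad8;Sampled=1".
// Function names are hypothetical and not taken from the PR or the SDK.
function parseTraceHeader(header) {
  const fields = {};
  for (const part of (header || '').split(';')) {
    const eq = part.indexOf('=');
    // Skip empty or malformed parts that have no key before "=".
    if (eq > 0) fields[part.slice(0, eq).trim()] = part.slice(eq + 1).trim();
  }
  return {
    traceId: fields.Root || null,
    parentId: fields.Parent || null,
    // null means the header did not carry a sampling decision.
    sampled: fields.Sampled === undefined ? null : fields.Sampled === '1',
  };
}

// Inside Lambda the header is exposed through the _X_AMZN_TRACE_ID variable.
function currentTraceContext(env = process.env) {
  return parseTraceHeader(env._X_AMZN_TRACE_ID);
}
```

If the variable is missing, as it would be in a runtime image that has not been patched to set it, every field comes back as `null`, so there is no trace for a custom subsegment to attach to.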

Interceptor was my Lambda function name. The crossed out subsegment was added inside my Lambda function code.

Other issues:

start-api for now.

Tests:

Requesting feedback.

😞 Had a hard time figuring out where to place the code within the project structure too, feedback welcome.

By submitting this pull request, I confirm that my contribution is made under the terms of the Apache 2.0 license. 👍