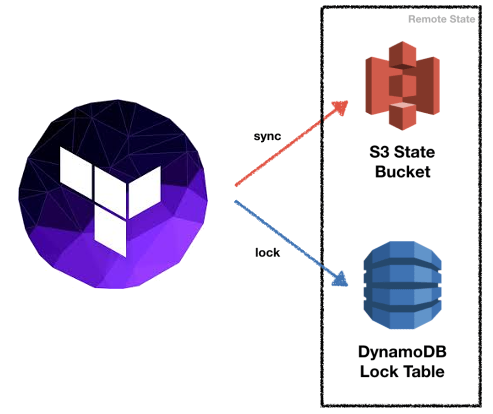

Terraform module that provisions an S3 bucket to store the `terraform.tfstate` file and a DynamoDB table to lock the state, which prevents concurrent modifications and state corruption.

We use one tfstate S3 bucket per account.

- Versions: `<= 0.x.y` (Terraform 0.11.x compatible)
- Versions: `>= 1.x.y` (Terraform 0.12.x compatible)

Requirements:

| Name | Version |
|---|---|
| terraform | >= 1.1.9 |
| aws | ~> 5.0 |
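The version constraints in the requirements table can be pinned in the calling root module with a `terraform` block. This is a minimal sketch; it assumes the AWS provider is installed from its usual registry address, `hashicorp/aws`.

```hcl
terraform {
  # Matches the "terraform >= 1.1.9" requirement
  required_version = ">= 1.1.9"

  required_providers {
    # Matches the "aws ~> 5.0" requirement (any 5.x release)
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.0"
    }
  }
}
```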

Providers:

| Name | Version |
|---|---|
| aws | 5.21.0 |
| aws.primary | 5.21.0 |
| aws.secondary | 5.21.0 |
| local | 2.4.0 |
| time | 0.9.1 |

No modules.

Inputs:

| Name | Description | Type | Default | Required |
|---|---|---|---|---|
| acl | The canned ACL to apply to the S3 bucket | `string` | `"private"` | no |
| additional_tag_map | Additional tags for appending to each tag map | `map(string)` | `{}` | no |
| attributes | Additional attributes (e.g. `state`) | `list(string)` | `[` | no |
| backend_config_filename | Name of the backend configuration file to generate. | `string` | `"backend.tf"` | no |
| backend_config_filepath | Directory where the backend configuration file should be generated. | `string` | `""` | no |
| backend_config_profile | AWS profile to use when interacting with the backend infrastructure. | `string` | `""` | no |
| backend_config_role_arn | ARN of the AWS role to assume when interacting with the backend infrastructure, if any. | `string` | `""` | no |
| backend_config_state_file | Name of the state file in the S3 bucket to use. | `string` | `"terraform.tfstate"` | no |
| backend_config_template_file | Path to the template file to use when generating the backend configuration. | `string` | `""` | no |
| billing_mode | DynamoDB billing mode. Can be `PROVISIONED` or `PAY_PER_REQUEST`. | `string` | `"PAY_PER_REQUEST"` | no |
| block_public_acls | Whether Amazon S3 should block public ACLs for this bucket. | `bool` | `true` | no |
| block_public_policy | Whether Amazon S3 should block public bucket policies for this bucket. | `bool` | `true` | no |
| bucket_lifecycle_enabled | Enable/Disable bucket lifecycle | `bool` | `true` | no |
| bucket_lifecycle_expiration | Number of days after which to expunge the objects | `number` | `90` | no |
| bucket_lifecycle_transition_glacier | Number of days after which to move the data to the GLACIER storage class | `number` | `60` | no |
| bucket_lifecycle_transition_standard_ia | Number of days after which to move the data to the STANDARD_IA storage class | `number` | `30` | no |
| bucket_replication_enabled | Enable/Disable replica for S3 bucket (for cross region replication purpose) | `bool` | `true` | no |
| bucket_replication_name | Set custom name for S3 Bucket Replication | `string` | `"replica"` | no |
| bucket_replication_name_suffix | Set custom suffix for S3 Bucket Replication IAM Role/Policy | `string` | `"bucket-replication"` | no |
| context | Default context to use for passing state between label invocations | `map(string)` | `{}` | no |
| create_kms_key | Whether to create a KMS key | `bool` | `true` | no |
| delimiter | Delimiter to be used between namespace, environment, stage, name and attributes | `string` | `"-"` | no |
| dynamodb_monitoring | DynamoDB monitoring settings. | `any` | `{}` | no |
| enable_point_in_time_recovery | Enable DynamoDB point in time recovery | `bool` | `true` | no |
| enable_server_side_encryption | Enable DynamoDB server-side encryption | `bool` | `true` | no |
| enforce_ssl_requests | Whether to enforce SSL (HTTPS) for requests to the S3 bucket | `bool` | `false` | no |
| enforce_vpc_requests | Enable/Disable VPC endpoint for S3 bucket | `bool` | `false` | no |
| environment | Environment, e.g. 'prod', 'staging', 'dev', 'pre-prod', 'UAT' | `string` | `""` | no |
| force_destroy | A boolean that indicates the S3 bucket can be destroyed even if it contains objects. These objects are not recoverable. | `bool` | `false` | no |
| ignore_public_acls | Whether Amazon S3 should ignore public ACLs for this bucket. | `bool` | `true` | no |
| kms_key_deletion_windows | The number of days after which the KMS key is deleted after destruction of the resource; must be between 7 and 30 days | `number` | `7` | no |
| kms_key_rotation | Specifies whether key rotation is enabled | `bool` | `true` | no |
| label_order | The naming order of the id output and Name tag | `list(string)` | `[]` | no |
| logging | Bucket access logging configuration. | `object({` | `null` | no |
| mfa_delete | A boolean that indicates that versions of S3 objects can only be deleted with MFA. (Terraform cannot apply changes of this value; hashicorp/terraform-provider-aws#629) | `bool` | `false` | no |
| mfa_secret | The numbers displayed on the MFA device when applying. Necessary when `mfa_delete` is true. | `string` | `""` | no |
| mfa_serial | The serial number of the MFA device to use. Necessary when `mfa_delete` is true. | `string` | `""` | no |
| name | Solution name, e.g. 'app' or 'jenkins' | `string` | `"terraform"` | no |
| namespace | Namespace, which could be your organization name or abbreviation, e.g. 'eg' or 'cp' | `string` | `""` | no |
| notifications_events | List of events to enable notifications for | `list(string)` | `[` | no |
| notifications_sns | Whether to enable SNS notifications | `bool` | `true` | no |
| notifications_sqs | Whether to enable SQS notifications | `bool` | `false` | no |
| read_capacity | DynamoDB read capacity units | `number` | `5` | no |
| regex_replace_chars | Regex to replace chars with empty string in namespace, environment, stage and name. By default only hyphens, letters and digits are allowed; all other chars are removed | `string` | `"/[^a-zA-Z0-9-]/"` | no |
| replica_logging | Bucket access logging configuration. | `object({` | `null` | no |
| restrict_public_buckets | Whether Amazon S3 should restrict public bucket policies for this bucket. | `bool` | `true` | no |
| stage | Stage, e.g. 'prod', 'staging', 'dev', OR 'source', 'build', 'test', 'deploy', 'release' | `string` | `""` | no |
| tags | Additional tags (e.g. `map('BusinessUnit','XYZ')`) | `map(string)` | `{}` | no |
| vpc_ids_list | IDs of the VPCs allowed to access the S3 bucket via a VPC endpoint. The VPC endpoint must be in the same AWS Region as the bucket. | `list(string)` | `[]` | no |
| write_capacity | DynamoDB write capacity units | `number` | `5` | no |
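As an illustration of how several of these inputs combine, the sketch below sets the S3 lifecycle transitions explicitly (to their documented defaults) and switches the DynamoDB lock table to provisioned capacity. It is a sketch, not a recommended configuration: the relative `source` path mirrors the repository example and should point at wherever you keep this module, and it assumes `aws` and `aws.secondary` provider configurations are declared in the same root module.

```hcl
module "terraform_state_backend" {
  source = "../../" # placeholder path, as in the repository example

  namespace  = "binbash"
  stage      = "test"
  name       = "terraform"
  attributes = ["state"]

  # S3 lifecycle: STANDARD_IA after 30 days, GLACIER after 60, expire after 90
  # (these are the documented default values, set explicitly for clarity)
  bucket_lifecycle_enabled                = true
  bucket_lifecycle_transition_standard_ia = 30
  bucket_lifecycle_transition_glacier     = 60
  bucket_lifecycle_expiration             = 90

  # DynamoDB lock table with fixed capacity instead of on-demand billing;
  # read_capacity/write_capacity only matter when billing_mode is PROVISIONED
  billing_mode   = "PROVISIONED"
  read_capacity  = 5
  write_capacity = 5

  # Both provider aliases must be passed, even without replication
  providers = {
    aws.primary   = aws
    aws.secondary = aws.secondary
  }
}
```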

Outputs:

| Name | Description |
|---|---|
| dynamodb_table_arn | DynamoDB table ARN |
| dynamodb_table_id | DynamoDB table ID |
| dynamodb_table_name | DynamoDB table name |
| s3_bucket_arn | S3 bucket ARN |
| s3_bucket_domain_name | S3 bucket domain name |
| s3_bucket_id | S3 bucket ID |
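These outputs can be re-exported from the calling configuration, for example to look up the bucket and lock table names after `terraform apply`. The sketch assumes a module instance named `terraform_state_backend`; the output names on the left are hypothetical and can be anything you like.

```hcl
# Hypothetical root-module outputs wrapping the module's outputs
output "tfstate_bucket_id" {
  description = "S3 bucket that stores the Terraform state"
  value       = module.terraform_state_backend.s3_bucket_id
}

output "tfstate_lock_table_name" {
  description = "DynamoDB table used to lock the Terraform state"
  value       = module.terraform_state_backend.dynamodb_table_name
}
```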

```hcl
#
# Terraform AWS tfstate backend
#
provider "aws" {
  region = "us-east-1"
}

provider "aws" {
  region = "us-west-1"
  alias  = "secondary"
}

# The following creates a Terraform state backend with bucket replication and all security and compliance enhancements enabled
module "terraform_state_backend_with_replication" {
  source = "../../"

  namespace  = "binbash"
  stage      = "test"
  name       = "terraform"
  attributes = ["state"]

  bucket_replication_enabled = true

  providers = {
    aws.primary   = aws
    aws.secondary = aws.secondary
  }
}

# The module below creates a Terraform state backend without bucket replication
module "terraform_state_backend" {
  source = "../../"

  namespace  = "binbash"
  stage      = "test"
  name       = "terraform-test"
  attributes = ["state"]

  # Replication is disabled explicitly here for the sake of the example
  bucket_replication_enabled = false

  # Note that even though replication is not enabled, a secondary provider must still be passed
  providers = {
    aws.primary   = aws
    aws.secondary = aws.secondary
  }
}
```

If you are migrating from a previous version and want to opt out of some or all of the security and compliance features, you can use the following example instead of `terraform_state_backend_with_replication` (both use the same namespace, stage, name and attributes, so declare only one of them). However, we encourage you to use these enhancements.

```hcl
module "terraform_state_backend_with_replication" {
  source = "../../"

  namespace  = "binbash"
  stage      = "test"
  name       = "terraform"
  attributes = ["state"]

  bucket_replication_enabled = true

  ## Avoid changes
  # General
  create_kms_key = false

  # S3
  block_public_acls        = false
  ignore_public_acls       = false
  block_public_policy      = false
  restrict_public_buckets  = false
  notifications_sns        = false
  notifications_sqs        = false
  bucket_lifecycle_enabled = false

  # DynamoDB
  enable_point_in_time_recovery = false
  billing_mode                  = "PROVISIONED"

  providers = {
    aws.primary   = aws
    aws.secondary = aws.secondary
  }
}
```

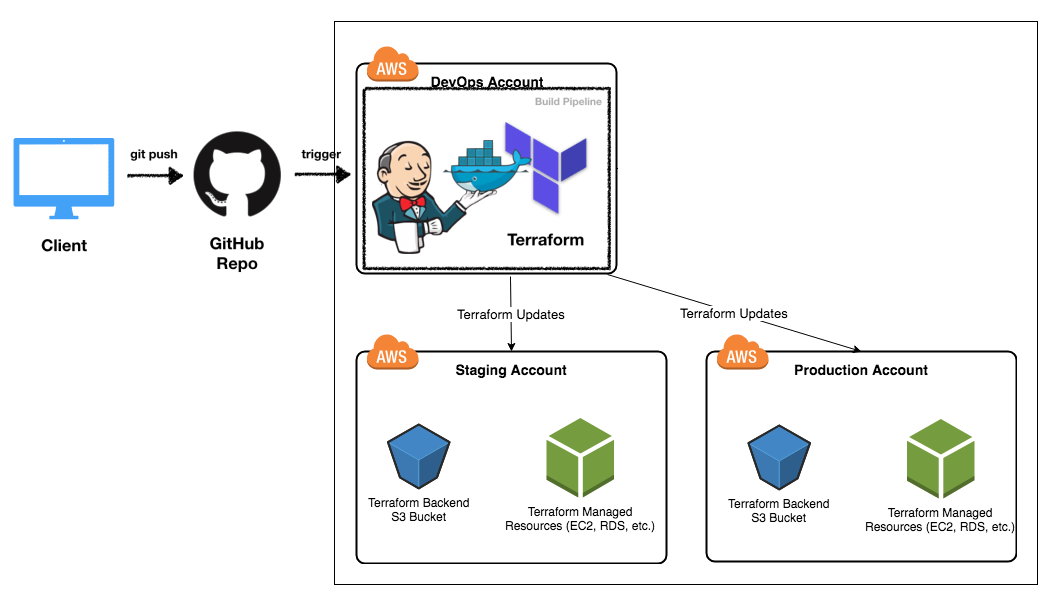

If you include this module in your own Terraform configuration to provision the supporting backend infrastructure, the module can also generate the backend configuration file for you.

To do so, use this module as usual, but provide at least the following input:

```hcl
backend_config_filepath = "."
```

With the default settings, this generates a `backend.tf` file containing the backend configuration in the current working directory. Once you have provisioned the infrastructure with `terraform init && terraform apply`, you can copy Terraform's state file to the backend bucket with the following command:

```shell
terraform init -force-copy
```

Afterwards, your Terraform state is stored in the S3 bucket and Terraform is ready to use it as a backend.
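For orientation, the generated file is an ordinary Terraform `s3` backend block. The sketch below shows roughly what one looks like for the `binbash`/`test`/`terraform`/`state` labels used in the examples. The bucket and table names are assumptions derived from those labels, and the exact fields rendered by the module's template may differ, so check the file the module actually writes.

```hcl
# Illustrative only: names below are assumed, not taken from the module's template
terraform {
  backend "s3" {
    region         = "us-east-1"
    bucket         = "binbash-test-terraform-state" # assumed bucket name
    key            = "terraform.tfstate"            # default of backend_config_state_file
    dynamodb_table = "binbash-test-terraform-state" # assumed lock table name
    encrypt        = true
  }
}
```

Backend blocks cannot reference variables, which is why generating the file with literal values is convenient.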

Refer to the list of `backend_config_*` inputs for more information on how to tailor this behavior to your use case.
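A minimal sketch of the `backend_config_*` inputs used together follows. The profile name is hypothetical, the relative `source` path mirrors the repository example, and the block assumes `aws` and `aws.secondary` provider configurations are declared in the same root module.

```hcl
module "terraform_state_backend" {
  source = "../../" # placeholder path, as in the repository example

  namespace  = "binbash"
  stage      = "test"
  name       = "terraform"
  attributes = ["state"]

  # Generate the backend configuration as ./backend.tf
  backend_config_filepath = "."
  backend_config_filename = "backend.tf"

  # Object key of the state file inside the bucket
  backend_config_state_file = "terraform.tfstate"

  # Hypothetical AWS CLI profile used to reach the backend
  backend_config_profile = "my-devops-profile"

  providers = {
    aws.primary   = aws
    aws.secondary = aws.secondary
  }
}
```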

The following inputs control access to the bucket through a VPC endpoint:

| Name | Description | Type | Default | Required |
|---|---|---|---|---|
| enforce_vpc_requests | Enable/Disable VPC endpoint for S3 bucket | `bool` | `false` | no |
| vpc_ids_list | IDs of the VPCs allowed to access the S3 bucket via a VPC endpoint. The VPC endpoint must be in the same AWS Region as the bucket. | `list(string)` | `[]` | no |

When setting `enforce_vpc_requests = true`, consider the following AWS VPC gateway endpoint limitations:

- You cannot use an AWS prefix list ID in an outbound rule in a network ACL to allow or deny outbound traffic to the service specified in an endpoint. If your network ACL rules restrict traffic, you must specify the CIDR block (IP address range) for the service instead. You can, however, use an AWS prefix list ID in an outbound security group rule. For more information, see Security groups.

- Endpoints are supported within the same Region only. You cannot create an endpoint between a VPC and a service in a different Region.

- Endpoints support IPv4 traffic only.

- You cannot transfer an endpoint from one VPC to another, or from one service to another.

- You have a quota on the number of endpoints you can create per VPC. For more information, see VPC endpoints.

- Endpoint connections cannot be extended out of a VPC. Resources on the other side of a VPN connection, VPC peering connection, transit gateway, AWS Direct Connect connection, or ClassicLink connection in your VPC cannot use the endpoint to communicate with resources in the endpoint service.

- You must enable DNS resolution in your VPC, or if you're using your own DNS server, ensure that DNS requests to the required service (such as Amazon S3) are resolved correctly to the IP addresses maintained by AWS.
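Given the same-Region limitation, a setup might pair the module with an S3 gateway endpoint in the bucket's Region. Whether this module creates the endpoint itself is not stated here, so the sketch assumes you manage it yourself; the VPC and route table IDs are hypothetical, and the `aws` and `aws.secondary` providers are assumed to be declared as in the example (with `aws` in `us-east-1`).

```hcl
# Hypothetical S3 gateway endpoint in the same Region as the state bucket
resource "aws_vpc_endpoint" "s3" {
  vpc_id            = "vpc-0123456789abcdef0"
  service_name      = "com.amazonaws.us-east-1.s3"
  vpc_endpoint_type = "Gateway"
  route_table_ids   = ["rtb-0123456789abcdef0"]
}

module "terraform_state_backend" {
  source = "../../" # placeholder path, as in the repository example

  namespace  = "binbash"
  stage      = "test"
  name       = "terraform"
  attributes = ["state"]

  # Restrict bucket access to requests coming through the listed VPCs
  enforce_vpc_requests = true
  vpc_ids_list         = [aws_vpc_endpoint.s3.vpc_id]

  providers = {
    aws.primary   = aws
    aws.secondary = aws.secondary
  }
}
```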

To get the full automation potential of the Binbash Leverage DevOps Automation Code Library, you should initialize all the necessary helper Makefiles.

You must run the `make init-makefiles` command at the root context:

```shell
╭─delivery at delivery-I7567 in ~/terraform/terraform-aws-backup-by-tags on master✔ 20-09-17
╰─⠠⠵ make
Available Commands:
 - init-makefiles     initialize makefiles
```

You will then get all the necessary commands to operate this module automatically via a dockerized approach, as shown below:

```shell
╭─delivery at delivery-I7567 in ~/terraform/terraform-aws-backup-by-tags on master✔ 20-09-17
╰─⠠⠵ make
Available Commands:
 - circleci-validate-config  ## Validate A CircleCI Config (https
 - format-check              ## The terraform fmt is used to rewrite tf conf files to a canonical format and style.
 - format                    ## The terraform fmt is used to rewrite tf conf files to a canonical format and style.
 - tf-dir-chmod              ## run chown in ./.terraform to gran that the docker mounted dir has the right permissions
 - version                   ## Show terraform version
 - init-makefiles            ## initialize makefiles

╭─delivery at delivery-I7567 in ~/terraform/terraform-aws-backup-by-tags on master✔ 20-09-17
╰─⠠⠵ make format-check
docker run --rm -v /home/delivery/Binbash/repos/Leverage/terraform/terraform-aws-backup-by-tags:"/go/src/project/":rw -v :/config -v /common.config:/common-config/common.config -v ~/.ssh:/root/.ssh -v ~/.gitconfig:/etc/gitconfig -v ~/.aws/bb:/root/.aws/bb -e AWS_SHARED_CREDENTIALS_FILE=/root/.aws/bb/credentials -e AWS_CONFIG_FILE=/root/.aws/bb/config --entrypoint=/bin/terraform -w "/go/src/project/" -it binbash/terraform-awscli-slim:0.12.28 fmt -check
```

- pipeline-job (NOTE: Will only run after merged PR)
- releases
- changelog