Significant performance boost on some environments. #21

Add this suggestion to a batch that can be applied as a single commit.

This suggestion is invalid because no changes were made to the code.

Suggestions cannot be applied while the pull request is closed.

Suggestions cannot be applied while viewing a subset of changes.

Only one suggestion per line can be applied in a batch.

Add this suggestion to a batch that can be applied as a single commit.

Applying suggestions on deleted lines is not supported.

You must change the existing code in this line in order to create a valid suggestion.

Outdated suggestions cannot be applied.

This suggestion has been applied or marked resolved.

Suggestions cannot be applied from pending reviews.

Suggestions cannot be applied on multi-line comments.

Suggestions cannot be applied while the pull request is queued to merge.

Suggestion cannot be applied right now. Please check back later.

Hi CJ,

After I issued the last pull-request with setNoDelay() call, I checked the beanstalkc library (https://github.com/earl/beanstalkc/) and the redis-py if they also set the TCP_NODELAY flag.

To my surprise neither of those libraries set that flag. This sparked my interest to understand why this is a problem in fivebeans but not in the other clients.

We are using beanstalkd to push messages, rather than jobs, so performance is important.

Ok, time for some data.

Disclamer: results may vary, this is the worst offender: Ubuntu 12.10 quantal 64-bit on my ASUS UX32 laptop.

My Ubuntu 10.10 32-bit desktop does not perform as bad with v1.0.3, but the code in this PR does increase performance there too.

Using this code:

https://gist.github.com/jtsoi/7788515

I get following output with v1.0.3:

This is very very slow. And notice that there seems to be 40ms delay between each "event", this is key. =)

BTW, the numbers are per second.

Ok same machine with v1.0.4: (And the setNoDelay() flag)

Significantly better!

Now with this PR code:

Even better! And this, without the setNoDelay() code.

The reason for this is that the original code calls stream.write() several times during a put. (any commands with payload) Once for the command itself and then the data. This causes at least 2 TCP packets to go out.

The problem occurs on hosts where the server socket has "Delayed ack" enabled and the client socket has "Nagle's algorithm" enabled. Delayed ack causes the server socket to delay the ACK until the second packet arrives OR 40ms timer expires (can be up to 200ms on some hosts). And the client socket won't send the second packet, (data payload) until it sees the ACK for packet 1.

More on this: http://youtu.be/2CMueBcQNtk

So the solution, is to call stream.write() ONCE per command and include data in the write.

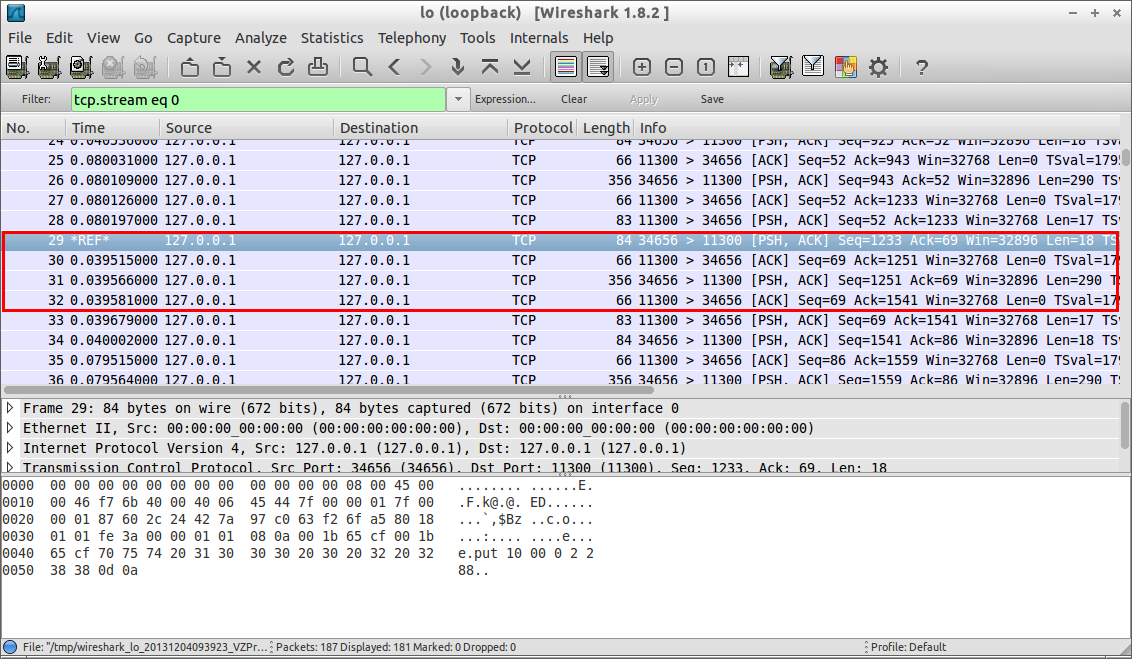

The difference in traffic: v1.0.3:

The red box is one put command roundtrip, notice the 40ms delay between the first packet and the server ACK.

After the ACK the second packet is sent with the data payload.

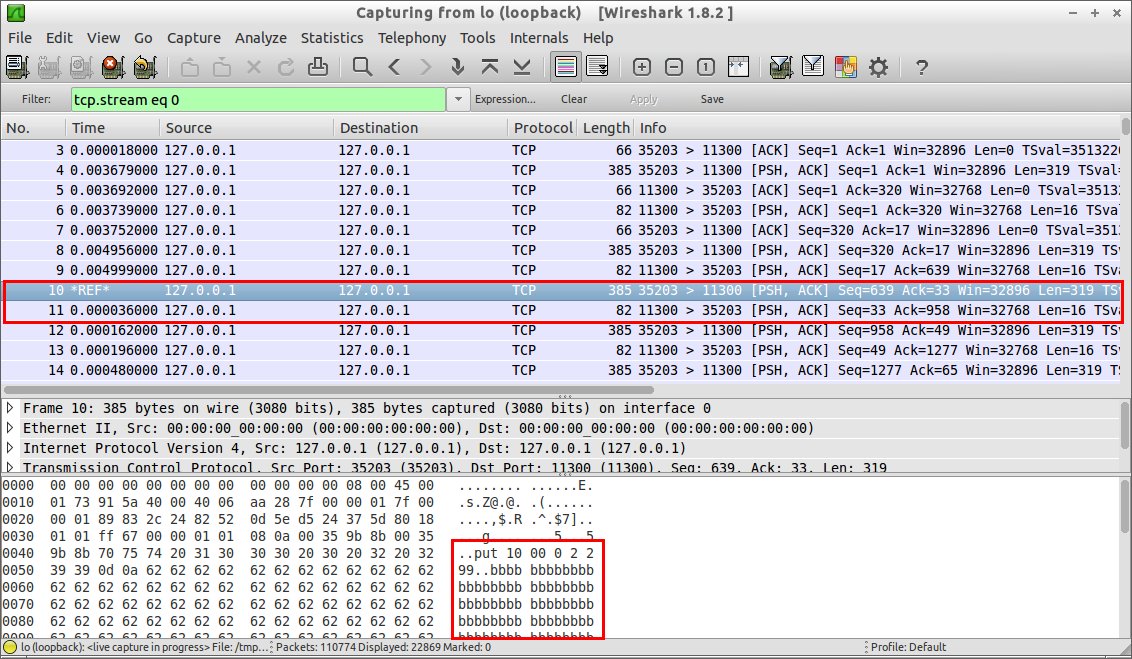

This is how traffic looks with this PR:

Notice how the command and data are sent in one packet, and the ACK for this packet piggybacks on the server reply containing "INSERTED...", this way, it really does not matter it Nagle's algorithm is enabled or not, as the packets are ACKed as soon as server replies. And we also reduced the number of packets from 4 to 2!

This is how beanstalkc lib and redis-py do this as well.

PS.

Before pulling this in, can you run the tests on your machine? They run fine and pass on my desktop, but are flaky on the laptop sometimes (timeouts.)

PPS.

The Buffer.concat() has a dependency on node >= 0.8 hope this is OK.