This project explores the opportunities of deep learning for character animation and control as part of my Ph.D. research at the University of Edinburgh in the School of Informatics, supervised by Taku Komura. Over the last couple of years, this project has become a modular and stable framework for data-driven character animation, including data processing, network training and runtime control, developed in Unity3D / TensorFlow / PyTorch. This repository enables using neural networks for animating biped locomotion, quadruped locomotion, and character-scene interactions with objects and the environment, as well as sports games. Further advances in this research will continue to be added to this project.

SIGGRAPH 2020

Local Motion Phases for Learning Multi-Contact Character Movements

Sebastian Starke, Yiwei Zhao, Taku Komura, Kazi Zaman.

ACM Trans. Graph. 39, 4, Article 54.

Not sure how to align complex character movements? Tired of phase labeling? Unclear how to squeeze everything into a single phase variable? Don't worry, a solution exists!

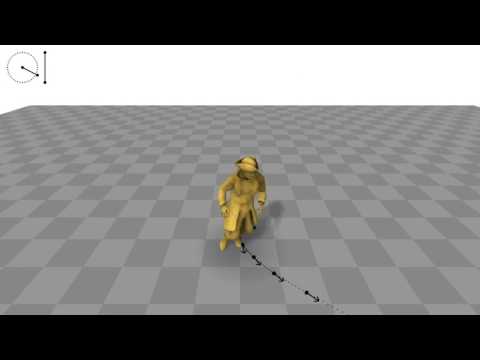

Controlling characters to perform a large variety of dynamic, fast-paced and quickly changing movements is a key challenge in character animation. In this research, we present a deep learning framework to interactively synthesize such animations in high quality, both from unstructured motion data and without any manual labeling. We introduce the concept of local motion phases, and show our system being able to produce various motion skills, such as ball dribbling and professional maneuvers in basketball plays, shooting, catching, avoidance, multiple locomotion modes as well as different character and object interactions, all generated under a unified framework.

- Video - Paper - Code (coming soon) -

SIGGRAPH Asia 2019

Neural State Machine for Character-Scene Interactions

Sebastian Starke, He Zhang, Taku Komura, Jun Saito.

ACM Trans. Graph. 38, 6, Article 178.

(*Joint First Authors)

- Video - Paper - Code & Data -

SIGGRAPH 2018

Mode-Adaptive Neural Networks for Quadruped Motion Control

He Zhang, Sebastian Starke, Taku Komura, Jun Saito.

ACM Trans. Graph. 37, 4, Article 145.

(*Joint First Authors)

- Video - Paper - Code - Mocap Data - Windows Demo - Linux Demo - Mac Demo - ReadMe -

SIGGRAPH 2017

Phase-Functioned Neural Networks for Character Control

Daniel Holden, Taku Komura, Jun Saito.

ACM Trans. Graph. 36, 4, Article 42.

- Video - Paper - Code (Unity) - Windows Demo - Linux Demo - Mac Demo -

In progress. More information will be added soon.

This project is for research or educational purposes only, and is not freely available for commercial use or redistribution. The intellectual property for the different scientific contributions belongs to the University of Edinburgh, Adobe Systems and Electronic Arts. Licensing is possible if you want to use the code commercially. For scientific use, please reference this repository together with the relevant publications listed above.

The motion capture data is available only under the terms of the Attribution-NonCommercial 4.0 International (CC BY-NC 4.0) license.