This repository contains implementations of reinforcement learning algorithms based on:

* DDPG (Deep Deterministic Policy Gradient) with parallel agents, to solve the Unity Reacher environment

* Proximal Policy Optimization (PPO), with a critic network as a baseline and Generalized Advantage Estimation (GAE)
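
As a rough illustration of how a critic baseline and GAE fit together, the sketch below computes advantages for one agent's rollout with the recursion δ_t = r_t + γ·V(s_{t+1})·(1 − d_t) − V(s_t) and A_t = δ_t + γλ·(1 − d_t)·A_{t+1}, where V is the critic and d_t marks the end of an episode. The function name `compute_gae` and the default values of `gamma` and `lam` are assumptions for illustration, not necessarily what ppo.py uses.

```python
from typing import List, Tuple


def compute_gae(
    rewards: List[float],
    values: List[float],
    dones: List[bool],
    last_value: float,
    gamma: float = 0.99,
    lam: float = 0.95,
) -> Tuple[List[float], List[float]]:
    """Return (advantages, returns) for one rollout using GAE (illustrative sketch).

    values[t] is the critic's estimate V(s_t); last_value is V(s_T) for the
    state reached after the final step, used to bootstrap the tail.
    """
    T = len(rewards)
    advantages = [0.0] * T
    gae = 0.0
    next_value = last_value
    # Iterate backwards so each advantage can reuse the one after it.
    for t in reversed(range(T)):
        not_done = 0.0 if dones[t] else 1.0
        # TD error: how much better the step was than the critic expected.
        delta = rewards[t] + gamma * next_value * not_done - values[t]
        # Exponentially weighted sum of future TD errors (weight gamma * lam).
        gae = delta + gamma * lam * not_done * gae
        advantages[t] = gae
        next_value = values[t]
    # Critic targets: the advantage plus the baseline it was measured against.
    returns = [a + v for a, v in zip(advantages, values)]
    return advantages, returns
```

With `lam=1.0` the advantages reduce to bootstrapped discounted returns minus the baseline, and with `lam=0.0` they reduce to one-step TD errors; λ trades variance against bias between these two extremes.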

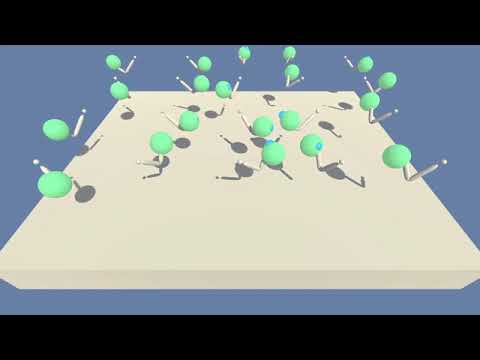

The environment contains 20 double-jointed arms, each of which has to reach its own target. Whenever an arm reaches its target, a reward of up to +0.1 is received. This environment is similar to the Reacher environment of Unity ML-Agents.

The action space is continuous: each action consists of 4 values in [-1.0, +1.0], corresponding to the torques applied to the two joints.
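
With 20 arms, one step needs a batch of 20 actions of 4 values each, and any exploration noise added to the actor's output can push values outside [-1.0, +1.0]. The minimal sketch below clips noisy actions back into range; it assumes NumPy, uses plain Gaussian noise as a stand-in for the repository's noise classes, and `NUM_AGENTS`, `ACTION_SIZE` and `noisy_actions` are hypothetical names.

```python
import numpy as np

NUM_AGENTS = 20   # one row per arm
ACTION_SIZE = 4   # torques for the two joints


def noisy_actions(actor_output: np.ndarray, sigma: float, rng: np.random.Generator) -> np.ndarray:
    """Add Gaussian exploration noise, then clip every value to [-1, 1]."""
    noise = rng.normal(0.0, sigma, size=actor_output.shape)
    return np.clip(actor_output + noise, -1.0, 1.0)


rng = np.random.default_rng(0)
# Stand-in for the actor's output: tanh keeps each value in (-1, 1).
actor_output = np.tanh(rng.normal(size=(NUM_AGENTS, ACTION_SIZE)))
actions = noisy_actions(actor_output, sigma=0.5, rng=rng)
```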

The environment is considered solved when the average score of the 20 agents, taken over 100 consecutive episodes, is at least +30.
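
The solving criterion can be checked with a rolling window over per-episode scores, where each episode's score is the mean of the 20 agents' total rewards. This is a minimal sketch; `SolvedTracker` is a hypothetical helper, not a class from this repository.

```python
from collections import deque
from typing import List


class SolvedTracker:
    """Track the 100-episode moving average of the mean score over all agents."""

    def __init__(self, window: int = 100, target: float = 30.0):
        self.scores = deque(maxlen=window)  # the oldest episode drops out automatically
        self.window = window
        self.target = target

    def add_episode(self, agent_scores: List[float]) -> bool:
        """Record one episode (one total reward per agent); return True once solved."""
        episode_score = sum(agent_scores) / len(agent_scores)  # mean over the agents
        self.scores.append(episode_score)
        # Only a full window of 100 episodes can satisfy the criterion.
        return len(self.scores) == self.window and self.moving_average() >= self.target

    def moving_average(self) -> float:
        return sum(self.scores) / len(self.scores) if self.scores else 0.0
```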

A video of a trained agent can be found by clicking on the image below.

The repository contains the following files and folders:

- report.pdf: describes the implementation details of DDPG, along with ideas for future work

- report-ppo.pdf: describes the implementation details of PPO

- folder agents: contains the implementation of:
  - a parallel DDPG using one network shared by all agents
  - a parallel DDPG with multiple networks
  - an Actor-Critic network model using tanh as activation
  - a ReplayBuffer
  - an ActionNoise that perturbs the output of the actor network to promote exploration
  - a ParameterNoise that perturbs the weights of the actor network to promote exploration
  - an Ornstein-Uhlenbeck noise generator
  - an implementation of Proximal Policy Optimization
  - a Gaussian Actor-Critic network for PPO

- folder started_to_converge: weights of a network that started to converge, but only slowly

- folder weights:
  - weights of the network trained with DDPG that solved this environment, along with the history of the weights
  - weights of the Gaussian Actor-Critic network that solved this environment with PPO

- folder research:
  - Cozmo25 customized the source code of ShangTong to solve the Reacher environment using PPO
  - all.py: contains only the part of that code needed by PPO
  - compare.ipynb: compares the performance of that implementation with ppo.py of this repository

- Jupyter notebooks:
  - Continuous_Control.ipynb: run this notebook to train the agents using DDPG
  - noise.ipynb: use this notebook to tune the hyperparameters of the noise generator and check that its output does not limit exploration
  - view.ipynb: loads the different saved network weights trained with DDPG and visualizes the agents
  - Continuous_Control-PPO.ipynb: trains an agent using PPO and then displays the trained agent
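
The Ornstein-Uhlenbeck noise generator listed under the agents folder produces temporally correlated noise: each sample drifts back toward a mean `mu` at rate `theta` while being perturbed by Gaussian noise scaled by `sigma`. The sketch below assumes NumPy and the common discretization x ← x + θ(μ − x) + σ·N(0, 1); the class name and the default values θ = 0.15 and σ = 0.2 are assumptions, not necessarily the settings used in this repository.

```python
import numpy as np


class OUNoise:
    """Ornstein-Uhlenbeck process: x <- x + theta * (mu - x) + sigma * N(0, 1)."""

    def __init__(self, size: int, mu: float = 0.0, theta: float = 0.15,
                 sigma: float = 0.2, seed: int = 0):
        self.mu = mu * np.ones(size)
        self.theta = theta
        self.sigma = sigma
        self.rng = np.random.default_rng(seed)
        self.reset()

    def reset(self) -> None:
        """Restart the process at its mean, e.g. at the start of each episode."""
        self.state = self.mu.copy()

    def sample(self) -> np.ndarray:
        """Advance the process by one step and return the new state."""
        dx = self.theta * (self.mu - self.state) + self.sigma * self.rng.standard_normal(self.state.shape)
        self.state = self.state + dx
        return self.state.copy()


noise = OUNoise(size=4)
samples = np.array([noise.sample() for _ in range(1000)])
```

Because consecutive samples are correlated, an arm keeps pushing in one direction for several steps instead of jittering. For this discretization the stationary standard deviation is σ / √(1 − (1 − θ)²), about 0.38 with the defaults above; if that is large relative to the action range, clipping to [-1.0, +1.0] can saturate the actions, which is presumably the kind of effect noise.ipynb is meant to check.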

To run the code, follow these steps:

- Create a new environment:
  - Linux or Mac:
    ```
    conda create --name ddpg python=3.6
    source activate ddpg
    ```
  - Windows:
    ```
    conda create --name ddpg python=3.6
    activate ddpg
    ```

- Perform a minimal install of OpenAI Gym:
  - If using Windows:
    - download SWIG for Windows and add it to the Windows PATH
    - install Microsoft Visual C++ Build Tools
  - Then run these commands:
    ```
    pip install gym
    pip install gym[classic_control]
    pip install gym[box2d]
    ```


- Install the dependencies located in the python/ folder:
  ```
  cd python
  pip install .
  ```

- Fix an issue in PyTorch 0.4.1 so that backpropagation through `torch.distributions.Normal` reaches its standard deviation parameter:

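  - Change line 69 of `Anaconda3\envs\drlnd\Lib\site-packages\torch\distributions\utils.py` (replace `drlnd` with the name of your conda environment, e.g. `ddpg`):
    ```python
    # old line: only matches objects whose class is named exactly 'Tensor'
    # tensor_idxs = [i for i in range(len(values)) if values[i].__class__.__name__ == 'Tensor']

    # new line: also matches subclasses of torch.Tensor, such as torch.nn.Parameter
    tensor_idxs = [i for i in range(len(values)) if isinstance(values[i], torch.Tensor)]
    ```

- Create an IPython kernel for the `ddpg` environment:
  ```
  python -m ipykernel install --user --name ddpg --display-name "ddpg"
  ```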

- Download the Unity environment (thanks to Udacity) that matches your operating system.

- Start Jupyter Notebook from the root folder of this repository:
  ```
  jupyter notebook
  ```

- Once started, change the kernel through the menu Kernel > Change kernel > ddpg.

- If necessary, change the path to the Unity environment inside the .ipynb files.
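
The PyTorch 0.4.1 fix in the setup steps matters because the original check compares the class *name* with `'Tensor'`, so subclasses of `torch.Tensor` such as `torch.nn.Parameter` (whose class name is `'Parameter'`) are not recognized as tensors, whereas `isinstance` accepts them. Presumably the Gaussian Actor-Critic network stores its standard deviation as a learnable `nn.Parameter`, which is what triggers the issue. The sketch below reproduces the difference with mock classes so that it runs without PyTorch; `Tensor`, `Parameter` and the helper functions are hypothetical stand-ins, and `all_recognized` is only a simplified mirror of the argument check, as far as we understand it.

```python
from numbers import Number


class Tensor:
    """Mock stand-in for torch.Tensor."""


class Parameter(Tensor):
    """Mock stand-in for torch.nn.Parameter, which subclasses torch.Tensor."""


def tensor_idxs_old(values):
    # Original check: exact class-name comparison, so subclasses are missed.
    return [i for i in range(len(values)) if values[i].__class__.__name__ == 'Tensor']


def tensor_idxs_new(values):
    # Patched check: any instance of Tensor, including subclasses.
    return [i for i in range(len(values)) if isinstance(values[i], Tensor)]


def all_recognized(values, tensor_check):
    # Simplified mirror (assumption): every argument must be a number or a tensor.
    scalar_idxs = [i for i in range(len(values)) if isinstance(values[i], Number)]
    return len(scalar_idxs) + len(tensor_check(values)) == len(values)


# e.g. a mean tensor, a learnable standard deviation, and a plain number
values = [Tensor(), Parameter(), 0.5]
```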