Disk space usage of old Files API nodes #3254

Comments

|

Possibly. You might also have old files, or files that are no longer reachable, lying around. You might want to make sure that all of your important files are pinned or reachable from the Files API root and run |

|

The script only adds files, it doesn't delete them, so there shouldn't be any old files. With that being the case, is a gc safe, as all files are reachable from root? Thanks |

|

It should be on 0.4.3. |

All data on the device is from IPFS, is there a way to get around this? |

|

@lgierth, @whyrusleeping: I think you have recovered from a situation like this in the past, so you might be of more help. |

|

You'll have to free up a few kilobytes somehow, go-ipfs is currently not able to start if there's no space left. You could also move some subdirectory of the repo to a ramdisk or similar. |

|

Moving the datastore folder worked and gc ran successfully. Is it recommended to run gc regularly via cron for my use case? The problem I see is that it requires an exclusive lock, so the daemon can't run. Ideally old roots would be automatically cleaned up by the files API; is this planned? Thanks |

|

You can have the daemon itself trigger a gc automatically by starting it like this Edit:

You should be able to initiate a gc while the daemon is running or not so long as it's not already locked. I tend to do mine manually while it is up. You can also prune specific hashes with the |

|

Edit: s/Duplicate/Possible duplicate/ |

|

I know the files are being added with the files API, but they are also pinned, and the extra storage is likely due to the same cause as #3621 regardless of how the files are being added (the nearly twofold increase in storage might be a coincidence). This can be quickly tested though. |

|

They don't have to be pinned and files API doesn't use pinset for pinning. |

|

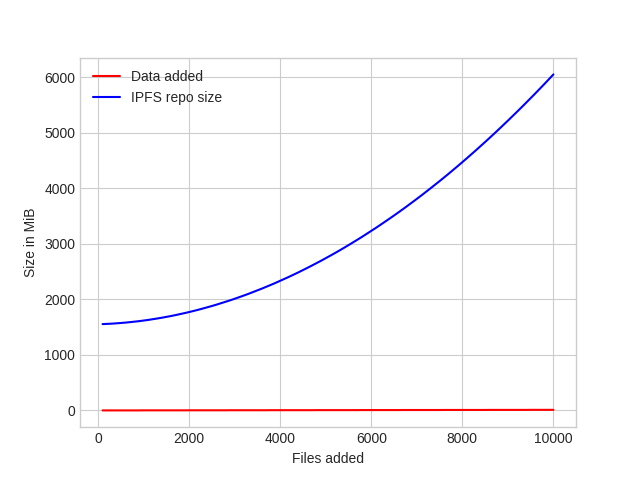

Confirmed there is an additional storage explosion coming from Tested on #3640 (even after deterministic pin sharding). |

|

The trick is to use |

Version information: Official 0.4.3 64 bit Linux binary

Type: Files API

Priority: P3

Description:

I've been adding a large amount of small files, using the files API, and on a cronjob using "ipfs name publish $(ipfs files stat / | head -n 1)" to publish to IPNS.

The disk is now full. The disk IPFS is using is 250GB of Digital Ocean Block storage:

    root@ipfs:~# df -h | grep ipfs
    Filesystem      Size  Used Avail Use% Mounted on
    /dev/sda        246G  246G     0 100% /root/.ipfs

I tried to run "ipfs files stat /", but it failed due to no disk space being available (is this a bug?), so I instead did this to get the root object's stats:

    root@ipfs:~# ipfs resolve /ipns/QmekbrSJGBAJy6Yzbj5e2pJh61nxWsTwpx88FraUVHwq8x
    /ipfs/QmTgJ1ZWcGDhyyAnvMn3ggrQntrR6eVrhMmuVxjcT7Ct3D
    root@ipfs:~# ipfs object stat /ipfs/QmTgJ1ZWcGDhyyAnvMn3ggrQntrR6eVrhMmuVxjcT7Ct3D
    NumLinks: 1
    BlockSize: 55
    LinksSize: 53
    DataSize: 2
    CumulativeSize: 124228002182

The useful data is ~124GB, so almost twice the amount of storage is being used as there is added data. Is this because of old root objects hanging around?

Over 99% of disk usage is in .ipfs/blocks
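For reference, the comparison between reachable data and disk usage can be checked with a few lines of shell arithmetic. This is only a sketch using the two figures reported above; the variable names are illustrative, and it assumes `df -h` reports sizes in 1024-based units (GiB), which is the usual GNU coreutils behaviour.

```shell
# Figures copied from the report above (variable names are illustrative).
cumulative_bytes=124228002182   # CumulativeSize from `ipfs object stat`
disk_used_gib=246               # "Used" column from `df -h` (assumed to be GiB)

# Convert the cumulative size to whole GiB (integer division, rounds down).
cumulative_gib=$(( cumulative_bytes / 1024 / 1024 / 1024 ))

# Disk usage expressed as a percentage of the reachable data.
ratio_pct=$(( disk_used_gib * 100 / cumulative_gib ))

echo "reachable data: ${cumulative_gib} GiB"
echo "disk used:      ${disk_used_gib} GiB (${ratio_pct}% of reachable data)"
```

Under those assumptions the repo occupies roughly twice the space of the data reachable from the root, which matches the estimate in the description.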
