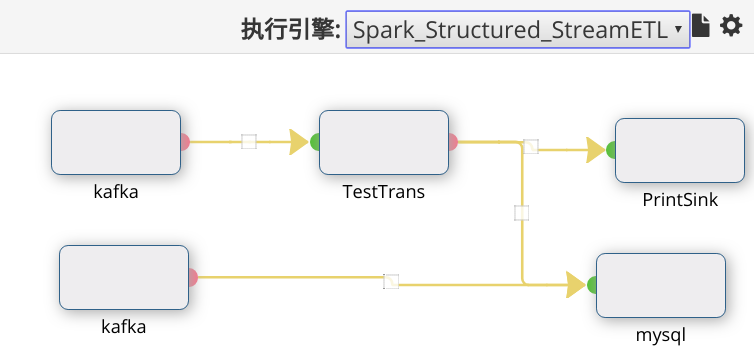

Sylph is a stream job management platform. Its core idea is to build distributed applications through workflow descriptions. It supports:

- spark1.x Spark-Streaming

- spark2.x Structured-Streaming

- flink stream

StreamSql example:

    create function get_json_object as 'ideal.sylph.runner.flink.udf.UDFJson';

    create source table topic1(
        key varchar,
        message varchar,
        event_time bigint
    ) with (
        type = 'ideal.sylph.plugins.flink.source.TestSource'
    );

    -- Define where the data stream is written
    create sink table event_log(
        key varchar,
        user_id varchar,
        event_time bigint
    ) with (
        type = 'hdfs',   -- write to HDFS
        hdfs_write_dir = 'hdfs:///tmp/test/data/xx_log',
        eventTime_field = 'event_time',
        format = 'parquet'
    );

    insert into event_log
    select key,get_json_object(message, 'user_id') as user_id,event_time
    from topic1

Custom functions are registered the same way as in Hive:

    create function get_json_object as 'ideal.sylph.runner.flink.udf.UDFJson';

Supported engines: flink-stream, spark-streaming, and spark-structured-streaming (Spark 2.2.x).
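To make the query above more concrete, the following plain-Java sketch shows what a call such as `get_json_object(message, 'user_id')` might compute for each row. It is not the source of `ideal.sylph.runner.flink.udf.UDFJson`, whose actual behavior is not described here. The class `JsonFieldSketch` and its method are hypothetical, and the extractor is deliberately naive: it assumes a flat JSON object and does not unescape string values.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical stand-in for a JSON-extracting UDF; not Sylph's implementation.
final class JsonFieldSketch {

    // Returns the value of a top-level field as a string, or null if absent.
    // Assumption: the input is a flat JSON object (no nesting, no arrays).
    static String getJsonObject(String json, String field) {
        if (json == null || field == null) {
            return null;
        }
        // Group 1: contents of a quoted string value.
        // Group 2: an unquoted scalar such as a number or boolean.
        Pattern pattern = Pattern.compile(
                "\"" + Pattern.quote(field) + "\"\\s*:\\s*"
                + "(?:\"((?:[^\"\\\\]|\\\\.)*)\"|([^,}\\s]+))");
        Matcher matcher = pattern.matcher(json);
        if (!matcher.find()) {
            return null;
        }
        return matcher.group(1) != null ? matcher.group(1) : matcher.group(2);
    }

    public static void main(String[] args) {
        String message = "{\"user_id\": \"u42\", \"n\": 7}";
        System.out.println(getJsonObject(message, "user_id"));
    }
}
```

A production function would use a real JSON parser rather than a regular expression. In Flink, a scalar UDF is normally a class extending `ScalarFunction` with an `eval` method, but whether Sylph's function is built that way is an assumption.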

Sylph builds with Gradle and requires Java 8.

    # Build and install distributions
    ./gradlew clean assemble

After building Sylph for the first time, you can load the project into your IDE and run the server. We recommend using IntelliJ IDEA.

After opening the project in IntelliJ, double check that the Java SDK is properly configured for the project:

- Open the File menu and select Project Structure

- In the SDKs section, ensure that a 1.8 JDK is selected (create one if none exist)

- In the Project section, ensure the Project language level is set to 8.0 as Sylph makes use of several Java 8 language features

- Set the HADOOP_HOME (Hadoop 2.6.x+), SPARK_HOME (Spark 2.3.x+), and FLINK_HOME (Flink 1.5.x+) environment variables
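Before starting the server, it can help to confirm that the three home directories listed above are actually set. The sketch below is one possible check, written against Java 8. The class `EnvCheckSketch` is hypothetical and not part of Sylph, and it only tests that each variable is present and non-blank, not that the installed version meets the minimum.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;

// Hypothetical helper; not part of the Sylph code base.
final class EnvCheckSketch {

    // Variable names taken from the requirements listed above.
    static final List<String> REQUIRED =
            Arrays.asList("HADOOP_HOME", "SPARK_HOME", "FLINK_HOME");

    // Returns the required variables that are unset or blank, in declaration order.
    static List<String> missing(Map<String, String> env) {
        List<String> result = new ArrayList<>();
        for (String name : REQUIRED) {
            String value = env.get(name);
            if (value == null || value.trim().isEmpty()) {
                result.add(name);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        List<String> missing = missing(System.getenv());
        if (missing.isEmpty()) {
            System.out.println("All required variables are set.");
        } else {
            System.out.println("Missing or empty: " + missing);
        }
    }
}
```

Passing the environment in as a map keeps the check easy to exercise without changing the real process environment.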

Sylph comes with sample configuration that should work out-of-the-box for development. Use the following options to create a run configuration:

- Main Class: ideal.sylph.main.SylphMaster

- VM Options: -Dconfig=etc/sylph/sylph.properties -Dlog4j.file=etc/sylph/sylph-log4j.properties

- ENV Options: FLINK_HOME= HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

- Working directory: sylph-dist/build

- Use classpath of module: sylph-main
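The VM options above are ordinary JVM system properties, so `-Dconfig=etc/sylph/sylph.properties` becomes readable through `System.getProperty("config")`. The sketch below shows one way a launcher could resolve and load such a file. It is not Sylph's startup code; the class `ConfigOptionSketch` is hypothetical, and treating the file as a standard `java.util.Properties` file is an assumption based on its extension.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Properties;

// Hypothetical illustration of reading a -D option; not Sylph's implementation.
final class ConfigOptionSketch {

    // Loads the properties file whose path is stored in the given system property.
    static Properties loadFromSystemProperty(String key) {
        String location = System.getProperty(key);
        if (location == null) {
            throw new IllegalStateException("Missing JVM option -D" + key + "=<path>");
        }
        // A relative path is resolved against the JVM working directory.
        Path path = Paths.get(location);
        Properties props = new Properties();
        try (InputStream in = Files.newInputStream(path)) {
            props.load(in);
        } catch (IOException e) {
            throw new UncheckedIOException("Cannot read " + path.toAbsolutePath(), e);
        }
        return props;
    }

    public static void main(String[] args) {
        Properties props = loadFromSystemProperty("config");
        System.out.println("Loaded " + props.size() + " entries");
    }
}
```

Because relative paths resolve against the working directory, a path such as `etc/sylph/sylph.properties` is only found when the run configuration's working directory contains that `etc` folder, which is presumably why it is set to `sylph-dist/build`.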

- yezhixinghai@gmail.com - For discussions about code, design and features

- lydata_jia@163.com - For discussions about code, design and features

- jeific@outlook.com - For discussions about code, design and features

We need more help improving the view layer. If you are interested, please get in touch by email.

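- Sylph is designed for distributed real-time ETL, real-time StreamSql computation, and the monitoring and hosting of distributed programs.

- Join the QQ group: 438625067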