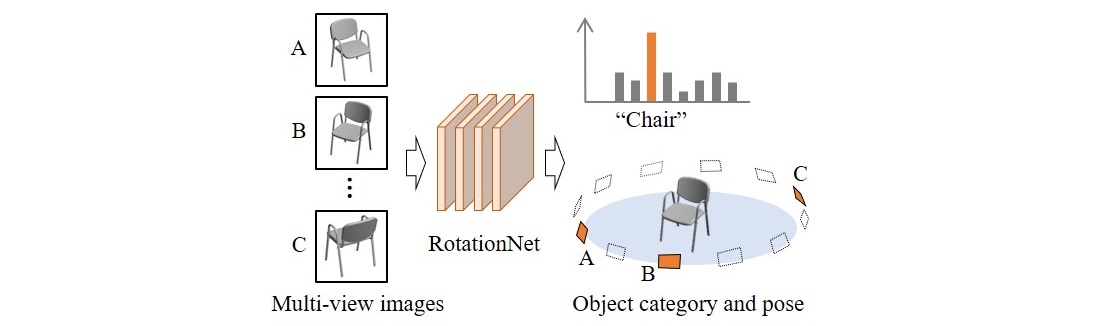

RotationNet takes multi-view images of an object as input and jointly estimates its pose and object category.

Asako Kanezaki, Yasuyuki Matsushita, and Yoshifumi Nishida. RotationNet: Joint Object Categorization and Pose Estimation Using Multiviews from Unsupervised Viewpoints. CVPR, pp. 5010-5019, 2018.

[2018.08.10] We uploaded the scripts and pre-trained models that reproduce our BEST results on ModelNet10 and ModelNet40.

[2017.02] We won first prize in the SHREC2017 RGB-D Object-to-CAD Retrieval Contest!

[2017.02] We won first prize in Task 1 of the SHREC2017 Large-scale 3D Shape Retrieval from ShapeNet Core55 Challenge!

Please see the SHREC2017_track3 repository to reproduce our results on SHREC2017 track3.

1. Prepare caffe-rotationnet2

$ git clone https://github.com/kanezaki/caffe-rotationnet2.git

$ cd caffe-rotationnet2

Prepare your Makefile.config and compile.

$ make; make pycaffe

$ git clone https://github.com/kanezaki/rotationnet.git

$ cd rotationnet

Models trained on ModelNet10 and ModelNet40 (full set)

$ wget https://data.airc.aist.go.jp/kanezaki.asako/pretrained_models/rotationnet_modelnet10_case2_ori2.caffemodel

$ wget https://data.airc.aist.go.jp/kanezaki.asako/pretrained_models/rotationnet_modelnet40_case2_ori4.caffemodel

Models trained on ModelNet40 (subset)

$ wget https://data.airc.aist.go.jp/kanezaki.asako/pretrained_models/rotationnet_modelnet40_case1.caffemodel

$ wget https://data.airc.aist.go.jp/kanezaki.asako/pretrained_models/rotationnet_modelnet40_case2.caffemodel
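Interrupted downloads tend to surface later as confusing Caffe errors, so it can help to confirm that the files arrived before running the demo. The following is a minimal sketch, assuming the models were downloaded into the current directory; the helper name `missing_models` is hypothetical and not part of this repository.

```python
import os

# File names copied from the wget commands above.
PRETRAINED_MODELS = [
    "rotationnet_modelnet10_case2_ori2.caffemodel",
    "rotationnet_modelnet40_case2_ori4.caffemodel",
    "rotationnet_modelnet40_case1.caffemodel",
    "rotationnet_modelnet40_case2.caffemodel",
]


def missing_models(directory="."):
    """Return the expected model files that are absent or empty in `directory`."""
    missing = []
    for name in PRETRAINED_MODELS:
        path = os.path.join(directory, name)
        # A zero-byte file usually means the download was interrupted.
        if not os.path.isfile(path) or os.path.getsize(path) == 0:
            missing.append(name)
    return missing


if __name__ == "__main__":
    for name in missing_models():
        print("missing or empty:", name)
```

If you only downloaded some of the models, the remaining names will be reported; that is expected.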

Set 'caffe_root' in save_scores.py to the path of your caffe-rotationnet2 repository.
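A wrong 'caffe_root' typically shows up as an import error for the `caffe` module. The sketch below checks a candidate path before you edit the script. It assumes that caffe-rotationnet2 keeps the standard Caffe layout, in which the Python package lives under `python/caffe`; the function name `looks_like_caffe_root` and the example path are placeholders.

```python
import os


def looks_like_caffe_root(caffe_root):
    """Return True if `caffe_root` contains the python/caffe package directory.

    Assumes the standard Caffe source layout; this is a sanity check only and
    does not verify that `make pycaffe` has been run successfully.
    """
    return os.path.isdir(os.path.join(caffe_root, "python", "caffe"))


if __name__ == "__main__":
    candidate = "/path/to/caffe-rotationnet2"  # placeholder, replace with your path
    if looks_like_caffe_root(candidate):
        print("caffe_root looks plausible:", candidate)
    else:
        print("python/caffe not found under:", candidate)
```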

Run the demo script.

$ bash demo.sh

This predicts the category of the test images. To test pose estimation, see the "demo2.sh" step below.

$ bash get_full_modelnet_png.sh

$ bash test_full_modelnet10.sh

$ bash test_full_modelnet40.sh

$ bash get_modelnet_png.sh

[Su et al. 2015] H. Su, S. Maji, E. Kalogerakis, E. Learned-Miller. Multi-view Convolutional Neural Networks for 3D Shape Recognition. ICCV2015.

$ bash test_modelnet40.sh

$ bash get_modelnet_png.sh

Please download the file "ilsvrc_2012_train_iter_310k" as described in the R-CNN repository.

This can be done with the following commands:

$ wget http://www.cs.berkeley.edu/~rbg/r-cnn-release1-data.tgz

$ tar zxvf r-cnn-release1-data.tgz

$ ./caffe-rotationnet2/build/tools/caffe train -solver Training/rotationnet_modelnet40_case2_solver.prototxt -weights caffe_nets/ilsvrc_2012_train_iter_310k 2>&1 | tee log.txt

$ ./caffe-rotationnet2/build/tools/caffe train -solver Training/rotationnet_modelnet40_case1_solver.prototxt -weights caffe_nets/ilsvrc_2012_train_iter_310k 2>&1 | tee log.txt
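To follow training progress, the loss values can be pulled out of `log.txt`. The sketch below assumes the usual Caffe solver log lines of the form `Iteration N, loss = X` (some Caffe versions insert timing information in parentheses after the iteration number, which the pattern also tolerates). The function name `parse_losses` is hypothetical.

```python
import re

# Matches e.g. "... Iteration 100, loss = 1.25" and
# "... Iteration 100 (2.5 iter/s, 8s/20 iters), loss = 1.25".
LOSS_RE = re.compile(r"Iteration (\d+).*?, loss = ([0-9.eE+-]+)")


def parse_losses(lines):
    """Return a list of (iteration, loss) pairs found in Caffe log lines."""
    losses = []
    for line in lines:
        match = LOSS_RE.search(line)
        if match:
            losses.append((int(match.group(1)), float(match.group(2))))
    return losses


if __name__ == "__main__":
    with open("log.txt") as log_file:
        for iteration, loss in parse_losses(log_file):
            print(iteration, loss)
```

Both training commands above pipe through `tee log.txt`, which overwrites the file, so the log reflects whichever run was started last.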

$ bash get_modelnet_png.sh

$ bash make_reference_poses.sh case1 # for case (1)

$ bash make_reference_poses.sh case2 # for case (2)

This predicts the viewpoints of training images and writes the image file paths in the predicted order.
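As an illustration of what "in the predicted order" can mean, the sketch below places each image path in the slot given by its predicted viewpoint index. This is one plausible reading, not the logic of `make_reference_poses.sh`; the function name, the zero-based indices, and the file names are all assumptions made for the example.

```python
def order_by_predicted_view(paths, predicted_views):
    """Return `paths` rearranged so that position v holds the image whose
    predicted viewpoint index is v.

    `predicted_views[i]` is the (hypothetical, zero-based) viewpoint index
    predicted for `paths[i]`; each index must be used exactly once.
    """
    if sorted(predicted_views) != list(range(len(paths))):
        raise ValueError("predicted_views must be a permutation of 0..len(paths)-1")
    ordered = [None] * len(paths)
    for path, view in zip(paths, predicted_views):
        ordered[view] = path
    return ordered


if __name__ == "__main__":
    # Placeholder file names for a three-view object.
    example_paths = ["chair_001.png", "chair_002.png", "chair_003.png"]
    print(order_by_predicted_view(example_paths, [2, 0, 1]))
```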

$ bash demo2.sh

This predicts the category and viewpoints of testing images, and then displays 10 training objects in the predicted category seen from the predicted viewpoints.
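To give a feel for how category and viewpoints can be predicted together, here is a toy sketch of joint scoring over multiple views. It is a simplified illustration, not this repository's code: it assumes each image yields a probability for every (viewpoint, category) pair, stored as `probs[i][v][c]`, and that the candidate poses are cyclic shifts of the view order. The function name and data layout are hypothetical.

```python
import math


def joint_category_and_shift(probs):
    """Pick the (category, shift) pair with the highest summed log-probability.

    probs[i][v][c] is the probability that image i shows category c from
    viewpoint v. A shift s assumes image i was taken from viewpoint
    (i + s) % M, where M is the number of views.
    """
    num_views = len(probs)
    num_classes = len(probs[0][0])
    best_score, best_class, best_shift = -math.inf, None, None
    for shift in range(num_views):
        for category in range(num_classes):
            # Small epsilon avoids log(0) for zero probabilities.
            score = sum(
                math.log(probs[i][(i + shift) % num_views][category] + 1e-12)
                for i in range(num_views)
            )
            if score > best_score:
                best_score, best_class, best_shift = score, category, shift
    return best_class, best_shift


if __name__ == "__main__":
    # Toy input: 3 views, 2 categories; the true category is 1 and the shift is 2.
    toy = [
        [[0.9 if (v == (i + 2) % 3 and c == 1) else 0.1 for c in range(2)]
         for v in range(3)]
        for i in range(3)
    ]
    print(joint_category_and_shift(toy))
```

Scoring all views jointly means that a single ambiguous image cannot decide the category on its own; every view contributes to each candidate pose.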

Please see the SHREC2017_track3 repository.

BSD