
Integers

Prev: Swift on Raspberry Pi

Swift's high-level, dynamic-language-like syntax, combined with its type inference, often confuses new developers when its statically typed, native-code compilation underpinnings shine through.

One such situation of particular interest to programming the Raspberry Pi hardware is Swift's treatment of integer types.

Swift has a large family of different integers covering a range of sizes in both signed and unsigned variants:

Signed: Int, Int8, Int16, Int32, Int64

Unsigned: UInt, UInt8, UInt16, UInt32, UInt64

However, unlike other languages, Swift's strict type handling treats each integer type as a distinct type. This, along with some other differences in behavior, can cause confusion for new developers.

Part of the confusion stems from a key difference between integer literals in Swift and other languages.

As we'd expect, we can initialize an integer from a literal:

let x: Int = 42

And we can initialize integers of different types from literals as well:

let y: UInt = 42

But initializing one integer from another of a different type fails:

let x: Int = 42

let y: UInt = x

🛑 Cannot convert value of type 'Int' to specified type 'UInt'

If we're used to C-based languages, we would find it surprising that initializing from the literal worked, while initializing from the variable did not. In C the literal 42 has a type of int, so it's not obviously different from initializing from a variable of that same type.

In Swift, literals do not implicitly have a type. Instead the type is dictated by the context. When initializing a UInt, the literal is inferred to be of that type, and no type conversion is performed.
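As a small sketch of context-driven literal typing, the same literal below takes on three different types depending on where it appears. The `writeRegister` function is a hypothetical helper invented for this illustration, not part of any library.

```swift
// Hypothetical helper: the parameter type supplies the context for a literal argument.
func writeRegister(_ value: UInt8) -> String {
    return "\(type(of: value))"
}

// The literal 42 is typed as UInt8 because that is what the parameter expects.
let viaParameter = writeRegister(42)

// Each literal is typed as UInt16 because of the array's declared element type.
let levels: [UInt16] = [1, 2, 3]

// Both literals are typed as Int64 because of the declared type of the result.
let sum: Int64 = 40 + 2
```

In none of these cases is a conversion performed; the literal is simply created as the type that the context asks for.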

But when Swift cannot determine the literal's type from context, it will have an inferred type of Int, which is why this works:

let x = 42 // Int

In many situations this doesn't cause any problems since Swift can infer the correct type for the literal based on the type it's being assigned to, or the type of the function or closure parameter, etc. But it does cause gotchas in a few places when Swift has to fall back on inferring the type as Int.

The most common example is when a literal is required that would exceed the capacity of the Int type, such as a full 32-bit mask on a platform where Int is 32 bits wide:

let x = 0xffffffff

🛑 integer literal '4294967295' overflows when stored into 'Int'

The correct fix depends on the exact situation; where a variable is being initialized directly, usually the right fix is to simply provide the type of the variable:

let x: UInt = 0xffffffff

But other circumstances occur when you're providing the literal to a function that has multiple overloads, or to a generic function. Swift will default to inferring Int if that is a permitted option, even when there are larger capacity options available.
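The following sketch illustrates that default. The `typeName` and `width` functions are hypothetical names made up for this example; the point is only that a bare literal ends up as Int in both the generic and the overloaded case.

```swift
// Hypothetical generic function: reports the type Swift chose for its argument.
func typeName<T: BinaryInteger>(_ value: T) -> String {
    return String(describing: T.self)
}

// Hypothetical overloads: one takes Int, the other a larger unsigned type.
func width(_ value: Int) -> String { return "Int" }
func width(_ value: UInt64) -> String { return "UInt64" }

// With no other context, the literal is inferred as Int in both calls.
let genericChoice = typeName(42)
let overloadChoice = width(42)
```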

In those situations we can coerce the value of the literal:

0xffffffff as UInt

Since implicit conversions between integer types are not allowed, when we do need to convert integers from one type to another, we need to use explicit conversions.

The type-casting operator in Swift is as, but it only works on types within a hierarchy, for types that can be bridged between other languages, and for literal coercion. The various integer types, while conforming to the same set of protocols, are not otherwise related to each other, so we cannot use this operator to convert them.

Instead the correct way is to use the various constructors available on the integer types. Opening the library reference may surprise you by just how many variations there are.

It's important to use the right one, which takes a little thought.

Remember that even the signed and unsigned types of the same size are considered distinct types in Swift.

First, let's consider the conversion from an Int32 to an Int. This is the easiest example because we know that Int will be either 32 bits or 64 bits wide, so the conversion is guaranteed to have at least the same number of bits available.

We can use the simplest constructor:

let fixedValue: Int32 = 42

let value = Int(fixedValue)

This constructor is available for all type conversions, however it has a literal trap for the unwary. If it is not possible to perform the conversion, the program will stop with a runtime error:

let largerValue: Int64 = Int64.max

let value = Int32(largerValue)

🛑 Fatal error: Not enough bits to represent a signed value

So how do we safely perform integer conversions between types of different sizes?

Since any conversion between an integer of a larger size to a lesser one is a potentially lossy one, it actually depends on exactly what we want to preserve.

When the integer value itself matters most, we want to perform an exact conversion.

This is a conversion that succeeds when the value fits into the new integer type, or fails in a manner we can detect when it does not:

if let value = Int(exactly: largerValue) {
    // Fits
} else {
    // Does not fit
}

When the scale of the conversion matters, and we want to still obey mathematical rules, a clamping conversion is the most appropriate.

Clamping conversions always succeed. When the value fits into the new integer type, the result has the same mathematical value as the original. If the value does not fit, the result is the largest or smallest value the new type can represent, as appropriate.

let largerValue: Int64 = Int64.max

let value = Int32(clamping: largerValue) // == Int32.max

A clamping conversion always preserves the sign of the input.

A similar type of conversion is a truncating one. These are used when the mathematical value of the integer isn't relevant, but the pattern of bits within it is.

As with a clamping conversion, a truncating conversion always succeeds, but with different rules.

When the value does not fit into the new integer type, only the least significant bits are retained:

let largerValue: Int16 = 0b01010111_11110101

let value = Int8(truncatingIfNeeded: largerValue) // == 0b11110101

Note that in this case the least significant eight bits had a bit pattern that changed the sign of the number from a positive one to a negative one.

When the value does fit, the new value is sign-extended if necessary to fill the new type:

let expandValue = Int16(truncatingIfNeeded: value) // == 0b11111111_11110101

Thus truncating conversions preserve the sign, and thus the mathematical value, when moving to a larger type, but do not necessarily preserve the sign when moving to a smaller type.
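To compare the three behaviors side by side, here is a minimal sketch that runs the same Int16 inputs through each constructor. The `convert` function is a hypothetical helper written for this comparison.

```swift
// Hypothetical helper: applies all three conversions to the same input.
func convert(_ input: Int16) -> (exact: Int8?, clamped: Int8, truncated: Int8) {
    return (
        exact: Int8(exactly: input),                // nil when the value does not fit
        clamped: Int8(clamping: input),             // pinned to Int8.min or Int8.max
        truncated: Int8(truncatingIfNeeded: input)  // keeps only the low eight bits
    )
}

let fits = convert(100)       // (100, 100, 100)
let tooBig = convert(300)     // (nil, 127, 44): 300 is 0x012C, low byte 0x2C
let tooSmall = convert(-200)  // (nil, -128, 56): -200 is 0xFF38, low byte 0x38
```

When the value fits, all three agree; they only differ in how they handle a value that is out of range.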

The final type of conversion is a nuanced version of the truncating conversion.

Unlike the others, it is not defined in the BinaryInteger protocol, but is defined on each integer type directly.

It is the conversion between a signed and unsigned type of the same width, while retaining exactly the same bit pattern:

let unsignedValue: UInt16 = 0b11110000_10101100

let signedValue = Int16(bitPattern: unsignedValue)

This conversion can also be used as a way to initialize an integer from a literal bit pattern that would otherwise not fit due to the sign bit:

let largeConstant = Int(bitPattern: 0xffffffff)

In practice this has the same effect as a truncating conversion, since the types have the same size, but it better documents the intent of the conversion.
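As a hedged illustration, suppose a byte read from a device is documented as a signed two's-complement value. The value 0xF5 below is an arbitrary example, not taken from any particular hardware.

```swift
// An example raw byte, as it might arrive from a device register.
let rawByte: UInt8 = 0xF5

// Reinterpret the same eight bits as a signed value: 0xF5 becomes -11.
let signedReading = Int8(bitPattern: rawByte)

// The conversion is reversible, since no bits are changed in either direction.
let backAgain = UInt8(bitPattern: signedReading)

// A truncating conversion between same-width types gives the same result.
let viaTruncation = Int8(truncatingIfNeeded: rawByte)
```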

Swift's rules for the size of these types are relatively simple, and another source of surprise for C programmers.

On 32-bit platforms (such as x86 and ARM) the size of Int and UInt is 32 bits.

On 64-bit platforms (such as x86-64/AMD64 and ARM64) the size of Int and UInt is 64 bits.

This differs from the rules of C, where int often remains 32 bits on modern 64-bit platforms. On Linux and macOS, the Swift Int type in fact has the size of the C long type.

Swift also considers the Int and Int32 or Int64 types to be distinct, even when they have the same width. So conversions need to be performed between them, even on platforms where they are the same width.

While this seems like a needless chore, in fact it improves portability. For example, if you regularly code and test on a Mac, while deploying to a Raspberry Pi running a 32-bit version of Linux, this is something you will have to care about since the Mac has a 64-bit Int while the Raspberry Pi would have a 32-bit Int.

Additionally while you might use a 32-bit version of Linux, the newer Raspberry Pi boards are capable of operating in 64-bit mode, and there are 64-bit Debian images available; so your code might be compiled by users intending to run in the 64-bit environment where Int will have a different size.
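If code needs to know the width it is actually running with, it can ask rather than assume. The sketch below uses the standard `bitWidth` and `MemoryLayout` properties; `fitsIn32Bits` is a hypothetical helper showing one way to check a value before passing it to something that requires an Int32.

```swift
// The width of Int on the platform this code was compiled for: 32 or 64.
let intBits = Int.bitWidth

// The same information expressed as a size in bytes.
let intBytes = MemoryLayout<Int>.size

// Hypothetical helper: true when the value survives a conversion to Int32,
// regardless of whether Int is 32 or 64 bits wide on this platform.
func fitsIn32Bits(_ value: Int) -> Bool {
    return Int32(exactly: value) != nil
}

let smallFits = fitsIn32Bits(42)
let maxFits = fitsIn32Bits(Int.max)  // true only where Int is 32 bits wide
```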

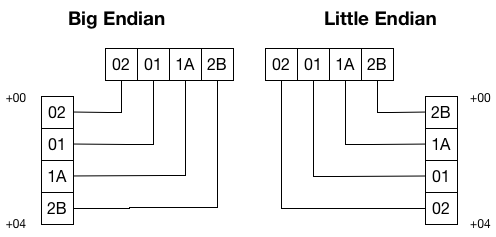

Endianness refers to the ordering of bytes in memory for larger values. For example, a 32-bit integer occupies 4 bytes in memory; to represent the number 0x02011A2B the four bytes 0x02, 0x01, 0x1A, and 0x2B have to be stored and there are two reasonable ways of doing so:

When a number is stored big-endian, the most significant byte goes first in memory. This is the same way that we write decimal numbers, so it's pretty reasonable for humans to read.

When a number is stored little-endian, the least significant byte goes first. If you were to read the bytes individually, as a human, they would appear backwards.

There are all sorts of practical reasons for both orderings, and the most common for computer architectures is actually little-endian, while so-called "network byte order" is big-endian.
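The two layouts can be inspected directly. This sketch uses the standard `bigEndian` and `littleEndian` properties together with `withUnsafeBytes(of:)` to list the bytes of the example number in memory order; it gives the same result whatever the endianness of the machine running it.

```swift
let number: UInt32 = 0x02011A2B

// Bytes in memory order when the value is laid out big-endian.
let bigEndianBytes = withUnsafeBytes(of: number.bigEndian) { Array($0) }
// [0x02, 0x01, 0x1A, 0x2B]

// Bytes in memory order when the value is laid out little-endian.
let littleEndianBytes = withUnsafeBytes(of: number.littleEndian) { Array($0) }
// [0x2B, 0x1A, 0x01, 0x02]
```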

For the most part this is not anything you need to worry about since Swift handles the endianness of integers for you, and its truncating conversion constructors are not endian-specific in behavior; the same byte is the least significant, whether it comes at the higher or lower memory address.

The only time we have to care is when the local rules of the land are broken, for example when transmitting data over a network.

If we know that a value is in big-endian format, and we want it converted to our local endianness, there is a constructor for that:

let value = Int(bigEndian: networkValue)

On big-endian architectures, this would not change the value.

Likewise we can take a value in our local endianness, and convert it into big-endian format for another platform:

let networkValue = value.bigEndian

Since most network traffic is transmitted in big-endian order (also known as network byte order), these are common conversions.
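As a sketch of where this comes up, consider a two-byte field received in network byte order. The `packet` contents below are made-up example data. Assembling the value with shifts works on the mathematical value, so it needs no endian conversion, while the `bigEndian` property and constructor round-trip a whole integer.

```swift
// Example data: a 16-bit field as received, most significant byte first.
let packet: [UInt8] = [0x01, 0x2C]

// Build the value from its bytes; shifts are independent of host endianness.
let reading = UInt16(packet[0]) << 8 | UInt16(packet[1])  // 0x012C == 300

// Convert to big-endian layout for sending, then back to the local layout.
let wireValue = reading.bigEndian
let roundTrip = UInt16(bigEndian: wireValue)
```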

Fortunately the rules on conversions are only necessary when storing values, and when performing mathematical operations on them.

For the common case of comparing values, like most languages, Swift allows comparison between integers of different types:

let signedValue: Int = 42

let unsignedValue: UInt = 42

signedValue == unsignedValue // true

Another trap to fall into with Swift is that its behavior on mathematical overflow is to produce a runtime error:

let value = Int.max

value + 1

🛑 Fatal error: Overflow

This is usually the appropriate behavior, since overflow almost always ends up with undefined results.

If it's acceptable for the overflowing bits to be discarded, an alternate family of operators is provided: &*, &+, and &-.
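A brief sketch of the overflow operators on small types, where the wrap-around is easy to see. The last line uses `addingReportingOverflow`, a standard library method not covered above, which returns the wrapped result along with a flag instead of stopping the program.

```swift
let top: UInt8 = UInt8.max
let zero: UInt8 = 0

// Unsigned values wrap around in both directions.
let wrappedUp = top &+ 1     // 0
let wrappedDown = zero &- 1  // 255

// Signed values wrap from the maximum to the minimum.
let signedWrapped = Int8.max &+ 1  // Int8.min, which is -128

// Detect the overflow rather than trapping or silently wrapping.
let report = top.addingReportingOverflow(1)  // (partialValue: 0, overflow: true)
```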

Swift provides the common << and >> shift operators, however the rules are a little different than in some languages, and these are known as smart shift operators.

The key difference is that a negative shift value results in the opposite shift being performed. The following are equivalent:

value << 2

value >> -2

The left shift operator always fills with zeros, so an overshift gives a result of zero. The right shift operator fills with the sign bit, so an overshift gives a result of either zero or -1, depending on whether the input was positive or negative.

When right shifting unsigned types, the sign bit is considered to be zero.

Alternative masking shift operators &<< and &>> are also provided. These first bitmask the shift value into the appropriate range for the bit width, and then perform the shift as normal.
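The shift rules above can be sketched on eight-bit values, where an overshift is anything of eight bits or more. The shift amounts are held in variables here purely for illustration.

```swift
let bits: UInt8 = 0b0001_0000
let two = 2
let eight = 8

// A negative right shift is the same as a left shift.
let left = bits << two       // 0b0100_0000
let alsoLeft = bits >> -two  // 0b0100_0000

// Overshifting left discards every bit, leaving zero.
let overshiftLeft = bits << eight  // 0

// Overshifting right fills with the sign bit.
let negative: Int8 = -8
let positive: Int8 = 8
let overshiftNegative = negative >> eight  // -1
let overshiftPositive = positive >> eight  // 0

// Masking shift: 9 is masked to 9 & 7 == 1 for an eight-bit type.
let masked = bits &<< 9  // 0b0010_0000
```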

Next: Pointers