
Decision Tree

LordBenzo edited this page Jan 30, 2023 · 2 revisions

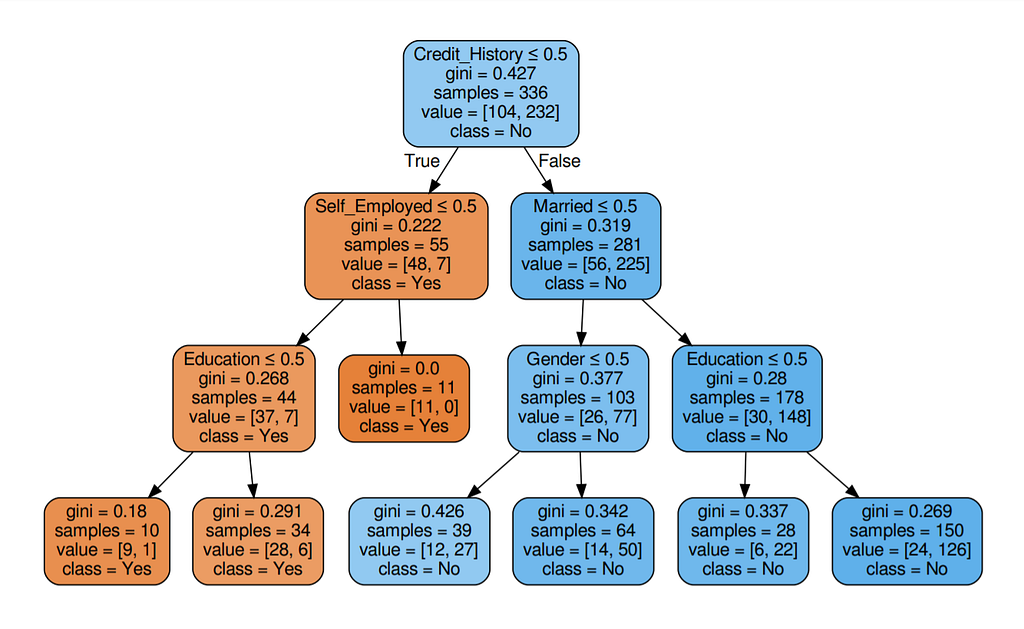

A decision tree follows a set of if-else conditions on the features to classify the data: each internal node tests a condition, and each leaf gives the predicted class.
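To make the if-else view concrete, here is a minimal hand-written sketch. The feature names (`outlook`, `humidity`, `windy`), the threshold of 70 and the function name `play_tennis` are hypothetical, chosen only for illustration; a real tree learns its conditions from data.

```python
def play_tennis(outlook: str, humidity: float, windy: bool) -> str:
    """Hand-written decision tree; features and thresholds are hypothetical."""
    if outlook == "sunny":          # root node tests 'outlook'
        if humidity > 70:           # decision node tests 'humidity'
            return "no"             # leaf
        return "yes"                # leaf
    elif outlook == "overcast":
        return "yes"                # leaf
    else:                           # remaining case: 'rain'
        return "no" if windy else "yes"  # decision node on 'windy' with two leaves


print(play_tennis("sunny", 85, False))   # no
print(play_tennis("rain", 60, False))    # yes
```

Each call follows exactly one path from the root to a leaf, which is what "classifying with if-else conditions" amounts to.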

- Root Node: The topmost node of the tree, which represents the entire dataset. Its attribute is used to divide the data into two or more sets, and it is selected using an Attribute Selection Measure.

- Branch or Sub-Tree: A part of the entire decision tree is called a branch or sub-tree.

- Splitting: Dividing a node into two or more sub-nodes based on if-else conditions.

- Decision Node: A sub-node that is split further into its own sub-nodes is called a decision node.

- Leaf or Terminal Node: A node that is not split any further; it marks the end of a path through the tree and holds the final prediction.

- Pruning: Removing sub-nodes from the tree (typically to reduce overfitting) is called pruning. It is the opposite of splitting.
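The node types above can be sketched as a small data structure. This is a minimal sketch assuming binary splits of the form `x[feature] <= threshold` on numeric features; `Node`, `predict` and `prune` are hypothetical names, not a library API. Root and decision nodes store a test, leaves store a prediction, and pruning here simply collapses a subtree into one leaf.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    # Root/decision nodes: test x[feature] <= threshold.
    feature: Optional[int] = None
    threshold: Optional[float] = None
    left: Optional["Node"] = None     # branch taken when the test is true
    right: Optional["Node"] = None    # branch taken when the test is false
    # Leaf nodes: the final prediction (None for internal nodes).
    prediction: Optional[str] = None

    def is_leaf(self) -> bool:
        return self.prediction is not None


def predict(node: Node, x: List[float]) -> str:
    # Start at the root and follow one branch per split until reaching a leaf.
    while not node.is_leaf():
        node = node.left if x[node.feature] <= node.threshold else node.right
    return node.prediction


def prune(node: Node, label: str) -> None:
    # Collapse the subtree rooted at `node` into a single leaf, in place.
    node.feature = None
    node.threshold = None
    node.left = None
    node.right = None
    node.prediction = label


root = Node(feature=0, threshold=2.5,
            left=Node(prediction="A"),
            right=Node(feature=1, threshold=1.0,      # a decision node
                       left=Node(prediction="B"),
                       right=Node(prediction="C")))
print(predict(root, [3.0, 0.5]))   # B
print(predict(root, [3.0, 2.0]))   # C
prune(root.right, "B")             # replace the decision node with a leaf
print(predict(root, [3.0, 2.0]))   # B
```

Prediction follows one branch per level, so its cost grows with the depth of the tree rather than with the total number of nodes.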

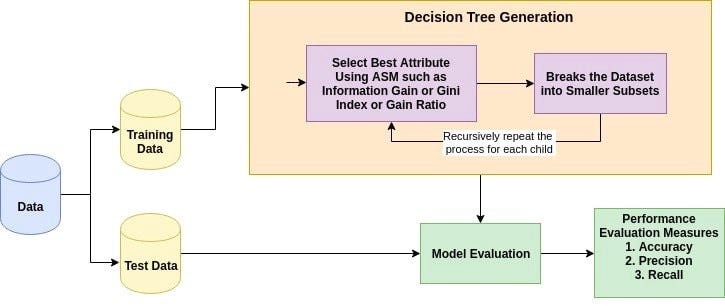

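The root node is selected based on the results of an Attribute Selection Measure (ASM), such as the Gini index, information gain (IG), or gain ratio; the same measure is then used to choose the split at each decision node.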
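The three measures named above can be computed directly from class counts. For class proportions `p` in a node, entropy is `-sum(p * log2(p))` and the Gini index is `1 - sum(p ** 2)`; information gain is the drop in entropy after a split, and gain ratio divides that gain by the split information. This sketch assumes labels are plain Python lists, and the `parent`/`children` data are made up for illustration.

```python
from collections import Counter
from math import log2


def entropy(labels):
    # Entropy: -sum(p * log2(p)) over the class proportions p.
    n = len(labels)
    return -sum((c / n) * log2(c / n) for c in Counter(labels).values())


def gini(labels):
    # Gini index: 1 - sum(p ** 2) over the class proportions p.
    n = len(labels)
    return 1 - sum((c / n) ** 2 for c in Counter(labels).values())


def information_gain(parent, children):
    # Parent entropy minus the size-weighted entropy of the child nodes.
    n = len(parent)
    weighted = sum(len(ch) / n * entropy(ch) for ch in children)
    return entropy(parent) - weighted


def gain_ratio(parent, children):
    # Information gain divided by split information, which penalises
    # splits that break the data into many small partitions.
    n = len(parent)
    split_info = -sum(len(ch) / n * log2(len(ch) / n) for ch in children if ch)
    return information_gain(parent, children) / split_info if split_info > 0 else 0.0


parent = ["yes"] * 5 + ["no"] * 5
children = [["yes"] * 4 + ["no"], ["yes"] + ["no"] * 4]
print(round(gini(parent), 3))                         # 0.5
print(round(information_gain(parent, children), 3))   # 0.278
print(round(gain_ratio(parent, children), 3))         # 0.278
```

Here the split divides the data into two equal halves, so the split information is exactly 1 and gain ratio equals information gain; the two diverge when a split produces uneven or many partitions.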

Information gain and gain ratio are commonly used for discrete (categorical) attributes, while the Gini index is often used for continuous attributes. Decision trees can overfit the training data and are very sensitive to small changes in the dataset, but they are good at capturing non-linear patterns.
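For a continuous attribute, one common approach is to sort the values, try the midpoint between each pair of adjacent distinct values as a threshold, and keep the threshold with the lowest size-weighted Gini index of the two resulting groups. This sketch assumes a single numeric feature; `best_threshold`, `ages` and `bought` are hypothetical names and data.

```python
from collections import Counter


def gini(labels):
    # Gini index: 1 - sum(p ** 2) over the class proportions p.
    n = len(labels)
    return 1 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_threshold(values, labels):
    """Return (threshold, weighted_gini) of the best binary split x <= t."""
    pairs = sorted(zip(values, labels))
    n = len(pairs)
    best = (None, float("inf"))
    for i in range(1, n):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # no threshold can separate equal values
        t = (pairs[i - 1][0] + pairs[i][0]) / 2  # midpoint candidate
        left = [y for _, y in pairs[:i]]
        right = [y for _, y in pairs[i:]]
        # Size-weighted Gini of the two groups; lower is better.
        score = (len(left) * gini(left) + len(right) * gini(right)) / n
        if score < best[1]:
            best = (t, score)
    return best


ages = [22, 25, 30, 35, 40, 52]
bought = ["no", "no", "no", "yes", "yes", "yes"]
print(best_threshold(ages, bought))   # (32.5, 0.0)
```

Because the chosen threshold depends on exactly which samples are present, adding or removing a few points can move it, which is one source of the sensitivity to dataset changes mentioned above.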

Classification trees are used when the target is discrete, while regression trees are used when the target is continuous.
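The difference between the two shows up mainly in the leaves and in the split criterion. A minimal sketch, assuming majority vote for classification leaves, the mean for regression leaves, and variance reduction as the regression split score (a common choice, swapped in here in place of Gini or entropy); all function names are hypothetical.

```python
from collections import Counter
from statistics import mean


def classification_leaf(labels):
    # Discrete target: predict the most common class in the leaf.
    return Counter(labels).most_common(1)[0][0]


def regression_leaf(targets):
    # Continuous target: predict the mean of the leaf's targets.
    return mean(targets)


def variance_reduction(parent, left, right):
    # Regression splits are commonly scored by how much they reduce the
    # variance of the target, rather than by Gini or entropy.
    def var(ys):
        m = mean(ys)
        return sum((y - m) ** 2 for y in ys) / len(ys)

    n = len(parent)
    return var(parent) - (len(left) * var(left) + len(right) * var(right)) / n


print(classification_leaf(["yes", "no", "yes"]))              # yes
print(regression_leaf([10.0, 12.0, 14.0]))                    # 12.0
print(variance_reduction([1, 2, 10, 11], [1, 2], [10, 11]))   # 20.25
```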

https://www.youtube.com/watch?v=ZVR2Way4nwQ&t=157s

https://towardsai.net/p/programming/decision-trees-explained-with-a-practical-example-fe47872d3b53