
Automatic sensor elevation measurement as part of the initial calibration component #40

Comments


The calibration utility mentioned here might also be of use.


I was thinking of a similar approach. The procedure would roughly look like this:

Question: what is the best way to store these variables and make them persistent across sessions? Should they be saved to an external *.txt file, or are there smarter approaches?
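One low-effort answer to the persistence question is a small JSON file instead of a free-form *.txt. It is still human-readable, but the values come back with their names and types. Here is a minimal sketch. The settings fields and the file location are hypothetical placeholders, not the component's actual variables.

```python
import json
import tempfile
from dataclasses import asdict, dataclass
from pathlib import Path


@dataclass
class CalibrationSettings:
    # Hypothetical fields for illustration; the real component may need others.
    sensor_elevation_mm: float
    left_column_trim: int = 0
    right_column_trim: int = 0


def save_settings(settings: CalibrationSettings, path: Path) -> None:
    """Write the settings to disk as indented, human-readable JSON."""
    path.write_text(json.dumps(asdict(settings), indent=2))


def load_settings(path: Path, default: CalibrationSettings) -> CalibrationSettings:
    """Return the saved settings, or `default` if nothing has been saved yet."""
    if not path.exists():
        return default
    return CalibrationSettings(**json.loads(path.read_text()))


if __name__ == "__main__":
    # Hypothetical location; a real setup might keep the file next to the .gh definition.
    settings_file = Path(tempfile.gettempdir()) / "sandbox_calibration.json"
    save_settings(CalibrationSettings(sensor_elevation_mm=1000.0), settings_file)
    print(load_settings(settings_file, CalibrationSettings(sensor_elevation_mm=0.0)))
```

Loading with a default keeps the very first session working before any calibration has been run.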


Another open issue is how to calibrate the projector with the sandbox. Our current approach is to maximize a floating viewport on the external screen (the projector) and adjust the zoom factor manually. This is neither precise nor fun. Ideas for automating this step are more than welcome.


Revisiting this topic. @philipbelesky, how do you align your projector with the sandbox? I am having trouble finding settings that give satisfying results across the board. I can get parts of the box aligned properly (the center, the right side, or the left side) but never the entire thing. Drawing inspiration from other domains, what we are facing is essentially a video mapping exercise. So I took vpt8 for a spin and tried out its mapping toolset. It resulted in a near-perfect alignment. See the image below for reference, where I used 5 sand balls as markers, one in each corner and one in the center. There are a few downsides to this approach:

So here is my question: do you know of a way to warp the viewport so that it matches our sandbox geometry better? @BarusXXX, is this something Horster could do?
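For a flat target, the video-mapping step described above amounts to estimating a planar homography. Four correspondences (the corner sand balls) fix its eight unknowns, and the fifth, central marker can serve as a check. Below is a minimal pure-Python sketch. It assumes model-space marker positions in millimetres and the projector pixels where each marker was observed; all coordinates are made up. It ignores lens distortion and the parallax introduced by sand height, so it only corrects the mapping onto the table plane.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def solve_linear(a: List[List[float]], b: List[float]) -> List[float]:
    """Gaussian elimination with partial pivoting (small dense systems only)."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate marker configuration")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def homography(src: Sequence[Point], dst: Sequence[Point]) -> List[float]:
    """Row-major 3x3 homography (h[8] fixed to 1) mapping 4 src points onto 4 dst points."""
    a: List[List[float]] = []
    b: List[float] = []
    for (x, y), (u, v) in zip(src, dst):
        # Each correspondence gives two linear equations in h0..h7.
        a.append([x, y, 1.0, 0.0, 0.0, 0.0, -u * x, -u * y])
        b.append(u)
        a.append([0.0, 0.0, 0.0, x, y, 1.0, -v * x, -v * y])
        b.append(v)
    return solve_linear(a, b) + [1.0]


def apply(h: Sequence[float], p: Point) -> Point:
    """Map a model-space point to projector pixels (perspective divide included)."""
    x, y = p
    w = h[6] * x + h[7] * y + h[8]
    return ((h[0] * x + h[1] * y + h[2]) / w, (h[3] * x + h[4] * y + h[5]) / w)


if __name__ == "__main__":
    box = [(0.0, 0.0), (1000.0, 0.0), (1000.0, 700.0), (0.0, 700.0)]  # hypothetical box, mm
    pixels = [(102.0, 80.0), (1850.0, 95.0), (1830.0, 1010.0), (120.0, 990.0)]  # hypothetical
    h = homography(box, pixels)
    # Compare this prediction with where the centre marker was actually seen.
    print(apply(h, (500.0, 350.0)))
```

With noisy, hand-picked marker pixels, normalizing the coordinates before solving (Hartley normalization) or using more than four markers with a least-squares fit would be more robust than this exact four-point solve.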


Rather than modifying the viewport, I tried warping the geometry instead. In the top view, one can see how much warping needs to be applied to compensate for hardware misalignment.
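Warping the geometry can be sketched in a similarly small way: map each point of the ideal, rectangular box onto the quadrilateral where the projected corners actually need to go. The sketch below uses bilinear interpolation between four measured corners. This is a swapped-in technique chosen for simplicity, not necessarily what produced the pictured warp, and the corner coordinates in the test are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def bilinear_warp(p: Point, size: Tuple[float, float], quad: Sequence[Point]) -> Point:
    """Map a point of the rectangle [0, w] x [0, h] onto a quadrilateral.

    `quad` lists the target corners in the order (0, 0), (w, 0), (w, h), (0, h).
    """
    w, h = size
    s, t = p[0] / w, p[1] / h  # normalised coordinates in [0, 1]
    (x0, y0), (x1, y1), (x2, y2), (x3, y3) = quad
    # Blend the four corners with the standard bilinear weights.
    x = (1 - s) * (1 - t) * x0 + s * (1 - t) * x1 + s * t * x2 + (1 - s) * t * x3
    y = (1 - s) * (1 - t) * y0 + s * (1 - t) * y1 + s * t * y2 + (1 - s) * t * y3
    return (x, y)


def warp_polyline(points: Sequence[Point], size: Tuple[float, float],
                  quad: Sequence[Point]) -> List[Point]:
    """Warp every vertex of a polyline (e.g. a contour line) into the measured quad."""
    return [bilinear_warp(p, size, quad) for p in points]
```

Lines parallel to the box edges stay straight under a bilinear map, but diagonals and curves are bent slightly, which is usually acceptable for small misalignments.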


Agree that box morphing and the like are not ideal. Could the alignment issues pictured be corrected in your mounting system itself, or are some unavoidable? I was assuming there would always be a degree of barrel/lens distortion with the projector, but that setting the focal length of the Rhino camera might be able to cancel it out. Generally I've been operating the sandbox without a projector, as I don't have a mobile mounting system for it. We do have a number of very large screens on wheels, though, which can be placed next to the sandbox relatively nicely. The last time I used a projector, we had it suspended from the ceiling and I didn't notice any dramatic misalignments. I'll get a projector setup going at some point, but I need to spend some time evaluating whether a smaller/pico-style projector is good enough (in which case the mounting can use a boom arm or tripod of some sort) or whether a more heavy-duty setup will be needed.
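On the lens-distortion point: in a pinhole camera model, changing the focal length scales the whole image uniformly about the centre. By itself it therefore cannot undo radial (barrel) distortion, which grows with distance from the optical centre. A common way to model that is the polynomial radial term of the Brown-Conrady model. The sketch below applies it and inverts it numerically. The coefficients `k1` and `k2` are hypothetical and would have to come from a separate calibration of the projector. If the projector lens behaves like `distort`, sending `undistort(p)` instead of `p` should land the content where it was intended.

```python
from typing import Tuple

Point = Tuple[float, float]


def distort(p: Point, k1: float, k2: float = 0.0) -> Point:
    """Radial (Brown-Conrady) distortion of a normalised image point.

    Coordinates are relative to the optical centre and divided by the focal
    length. A negative k1 pulls points inward (barrel distortion).
    """
    x, y = p
    r2 = x * x + y * y
    scale = 1 + k1 * r2 + k2 * r2 * r2
    return (x * scale, y * scale)


def undistort(p: Point, k1: float, k2: float = 0.0, iterations: int = 20) -> Point:
    """Invert distort() by fixed-point iteration (adequate for mild distortion)."""
    xd, yd = p
    x, y = xd, yd  # initial guess: the distorted point itself
    for _ in range(iterations):
        r2 = x * x + y * y
        scale = 1 + k1 * r2 + k2 * r2 * r2
        x, y = xd / scale, yd / scale
    return (x, y)
```

The fixed-point inverse converges quickly for the mild distortion typical of projector lenses. Strong distortion would call for a Newton-style solver instead.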


Calibration is done:

The point of this is for users to be able to automatically (and precisely) define the input for the

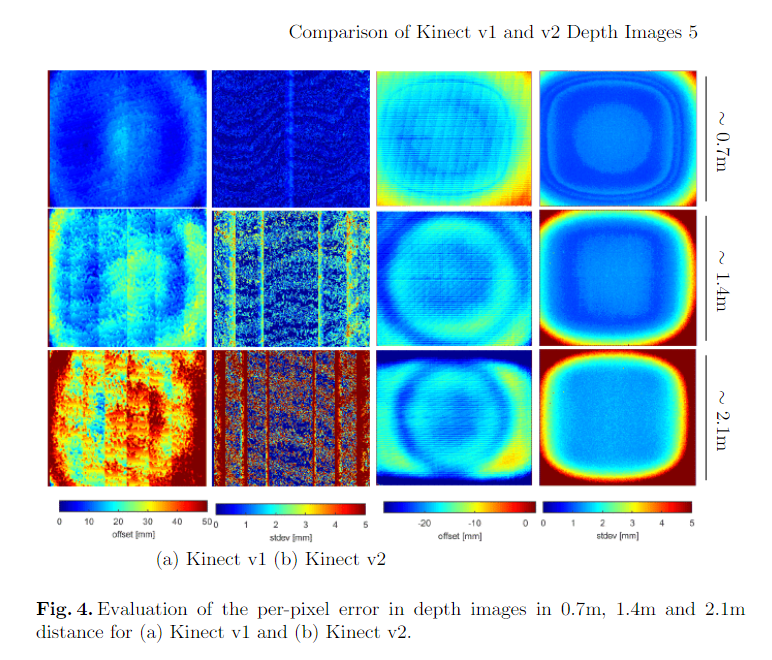

sensorElevationvariable. This logic could also output a depth scan of the flat table to correct for Kinect inaccuracies and sensor tilt.Technical background in this paper.
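The automatic `sensorElevation` measurement could be sketched as a least-squares plane fit to a depth frame of the empty, flat table. The fitted depth under the image centre gives an elevation estimate, the slopes capture tilt, and the per-pixel offsets give a correction map. This is a minimal sketch under several assumptions: the sensor looks roughly straight down at the table, the frame is a list of rows of depth values in millimetres, and invalid readings are reported as 0. The function names are hypothetical.

```python
from typing import List, Sequence, Tuple


def _det3(m: Sequence[Sequence[float]]) -> float:
    """Determinant of a 3x3 matrix."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def fit_table_plane(depth: Sequence[Sequence[float]]) -> Tuple[float, float, float]:
    """Least-squares plane z = a*x + b*y + c through all valid depth pixels.

    x and y are pixel offsets from the image centre, so c is the fitted depth
    under the centre of the sensor, and a, b are slopes (mm per pixel) caused
    by sensor tilt. Pixels with value 0 are treated as missing readings.
    """
    rows, cols = len(depth), len(depth[0])
    cx, cy = (cols - 1) / 2, (rows - 1) / 2
    # Accumulate the normal equations (A^T A) [a, b, c]^T = A^T z.
    ata = [[0.0] * 3 for _ in range(3)]
    atz = [0.0] * 3
    for r, row in enumerate(depth):
        for c, z in enumerate(row):
            if z <= 0:
                continue
            v = (c - cx, r - cy, 1.0)
            for i in range(3):
                atz[i] += v[i] * z
                for j in range(3):
                    ata[i][j] += v[i] * v[j]
    d = _det3(ata)
    if abs(d) < 1e-9:
        raise ValueError("not enough valid pixels to fit a plane")
    coeffs = []
    for k in range(3):  # Cramer's rule: replace column k with A^T z
        m = [[atz[i] if j == k else ata[i][j] for j in range(3)] for i in range(3)]
        coeffs.append(_det3(m) / d)
    return coeffs[0], coeffs[1], coeffs[2]


def correction_map(depth: Sequence[Sequence[float]], elevation: float) -> List[List[float]]:
    """Offset of each flat-table pixel from `elevation` (0 where the reading is invalid).

    Subtracting this map from later frames makes the empty table read as the
    constant `elevation`, absorbing both tilt and per-pixel bias.
    """
    return [[(z - elevation) if z > 0 else 0.0 for z in row] for row in depth]
```

Averaging several frames before fitting would reduce the frame-to-frame noise of the depth sensor.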
