This is a fully functional implementation of the work of Niklaus et al. [1] on Adaptive Separable Convolution, which reports high-quality results on the video frame interpolation task. We apply the same network structure, trained on a smaller dataset, and experiment with several loss functions to determine the optimal approach in data-scarce scenarios.
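As a rough illustration of the technique (not code from this repository), the method in [1] forms each pixel of the interpolated frame by convolving its neighborhood in the two input frames with per-pixel 2D kernels. Each 2D kernel is approximated by the outer product of a vertical and a horizontal 1D kernel, so an n-tap kernel needs 2n values per pixel and frame instead of n². The NumPy sketch below assumes the four 1D kernels have already been predicted and are passed in as arrays. The function names and the edge-replicating border handling are assumptions, and a practical implementation would run this on the GPU rather than in a Python loop.

```python
import numpy as np


def separable_local_conv(frame, k_v, k_h):
    """Filter `frame` with a different separable kernel at every pixel.

    frame: (H, W) grayscale image.
    k_v, k_h: (H, W, n) vertical and horizontal 1D kernels (n odd), one pair per pixel.
    Each output pixel is the n x n patch centred on it, weighted by outer(k_v, k_h).
    """
    h, w = frame.shape
    n = k_v.shape[-1]
    if n % 2 == 0:
        raise ValueError("kernel size must be odd")
    r = n // 2
    # Replicate border pixels so every patch is n x n (border handling is an assumption).
    padded = np.pad(frame, r, mode="edge")
    out = np.empty((h, w), dtype=np.float64)
    for y in range(h):
        for x in range(w):
            patch = padded[y:y + n, x:x + n]  # rows = vertical offsets, cols = horizontal
            # k_v @ patch @ k_h equals sum(patch * outer(k_v, k_h)) without building the 2D kernel
            out[y, x] = k_v[y, x] @ patch @ k_h[y, x]
    return out


def interpolate(frame1, frame2, k1_v, k1_h, k2_v, k2_h):
    """Middle frame = local conv of frame1 with K1 plus local conv of frame2 with K2."""
    return (separable_local_conv(frame1, k1_v, k1_h)
            + separable_local_conv(frame2, k2_v, k2_h))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    f1, f2 = rng.random((4, 5)), rng.random((4, 5))
    n = 5
    impulse = np.zeros(n)
    impulse[n // 2] = 1.0
    half = np.broadcast_to(0.5 * impulse, (4, 5, n))  # vertical: 0.5 at the centre tap
    unit = np.broadcast_to(impulse, (4, 5, n))        # horizontal: unit impulse
    mid = interpolate(f1, f2, half, unit, half, unit)
    print(np.allclose(mid, (f1 + f2) / 2))  # prints True
```

In the example, every kernel only looks at the centre pixel with total weight 0.5, so the "interpolation" degenerates to the plain average of the two frames. Wider learned kernels are what let the method account for motion between the frames.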

For detailed information, please see our report on arXiv:1809.07759.

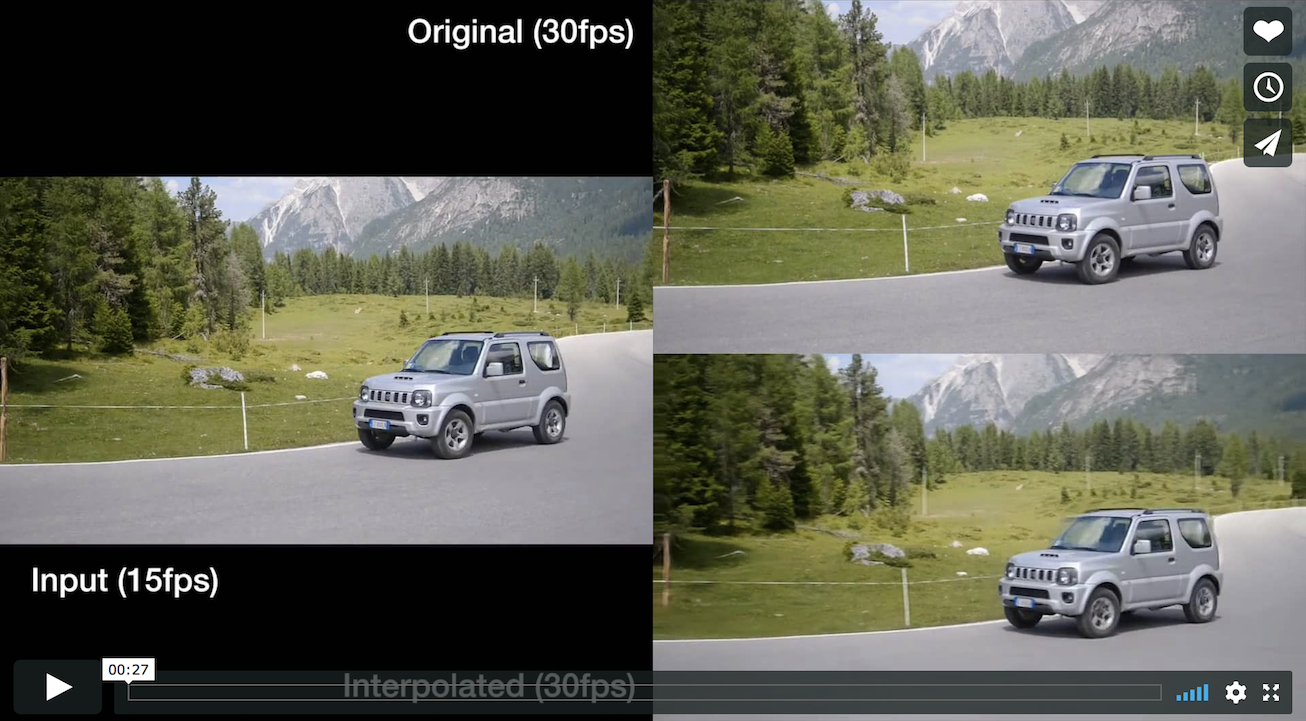

The video below is an example of the capabilities of this implementation. Our pretrained model (used in this instance) can be downloaded from here.

Note that the following instructions apply to a fresh Ubuntu 17 machine with a CUDA-enabled GPU. In other scenarios (e.g., if you want to work in a virtual environment or prefer to use the CPU), you may need to skip or modify some of the commands.

```bash
sudo apt-get install python3-setuptools
sudo easy_install3 pip
sudo apt-get install gcc libdpkg-perl python3-dev
sudo apt-get install ffmpeg
curl -O https://developer.download.nvidia.com/compute/cuda/repos/ubuntu1604/x86_64/cuda-repo-ubuntu1604_8.0.61-1_amd64.deb
sudo dpkg -i ./cuda-repo-ubuntu1604_8.0.61-1_amd64.deb
sudo apt-get update
sudo apt-get install cuda-8-0 -y
sudo apt-get install nvidia-cuda-toolkit
sudo pip3 install -r ./path/to/requirements.txt
```
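After the installation commands, a quick way to confirm that the expected tools ended up on the `PATH` is a small Python check like the one below. It is a hypothetical helper, not part of this project. The tool list is an assumption based on the packages installed above (`nvcc` is expected from `nvidia-cuda-toolkit`), and the GPU check only tests whether `nvidia-smi` is present and exits successfully.

```python
import shutil
import subprocess

# Executables the installation commands are expected to provide (an assumption).
REQUIRED_TOOLS = ["pip3", "gcc", "ffmpeg", "nvcc"]


def check_tools(tools=REQUIRED_TOOLS):
    """Return a mapping from tool name to whether it is found on PATH."""
    return {tool: shutil.which(tool) is not None for tool in tools}


def gpu_visible():
    """Return True if `nvidia-smi` exists and exits with status 0."""
    if shutil.which("nvidia-smi") is None:
        return False
    result = subprocess.run(["nvidia-smi"],
                            stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL)
    return result.returncode == 0


if __name__ == "__main__":
    for tool, found in check_tools().items():
        print(f"{tool:8s} {'ok' if found else 'MISSING'}")
    print(f"GPU visible: {gpu_visible()}")
```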

```bash
cd ./sepconv
echo -e "from src.default_config import *\r\n\r\n# ...custom constants here" > ./src/config.py
```
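The `echo -e` command relies on bash; under a POSIX shell such as dash, the literal `-e` may end up in the file. A Python equivalent that writes the same content with plain `\n` line endings is sketched below. `write_config` is a hypothetical helper, and unlike the `>` redirection it leaves an existing `src/config.py` untouched unless `overwrite=True`, so custom constants are not wiped by accident. Constants assigned below the wildcard import override the defaults from `src.default_config`; their names are defined in that module and none are assumed here.

```python
from pathlib import Path

# Same content as the echo command: import every default constant, then leave
# room for overrides. Plain "\n" line endings are used instead of "\r\n".
CONFIG_TEMPLATE = "from src.default_config import *\n\n# ...custom constants here\n"


def write_config(project_root=".", overwrite=False):
    """Create src/config.py under project_root; keep an existing file unless overwrite is True."""
    path = Path(project_root) / "src" / "config.py"
    if path.exists() and not overwrite:
        return path
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(CONFIG_TEMPLATE)
    return path


if __name__ == "__main__":
    # Run from inside the sepconv directory, like the shell command.
    print(f"Config at {write_config()}")
```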

```bash
python3 -m src.main
```

This project is released under the MIT license. See LICENSE for more information.

The following dependencies are bundled with this project but are distributed under the terms of a separate license:

[1] Video Frame Interpolation via Adaptive Separable Convolution, Niklaus 2017, arXiv:1708.01692