The purpose of this project is to explore gesture recognition, specifically hand gestures, and to apply it to controlling and interacting with a computer. It uses the Leap Motion device and supports both simple and complex hand gesture recognition.

- Python 2.7

- Leap Motion device

```sh
git clone <this_repo>
cd LEAP_MyMouse
pip install -r requirements.txt
python main.py
```

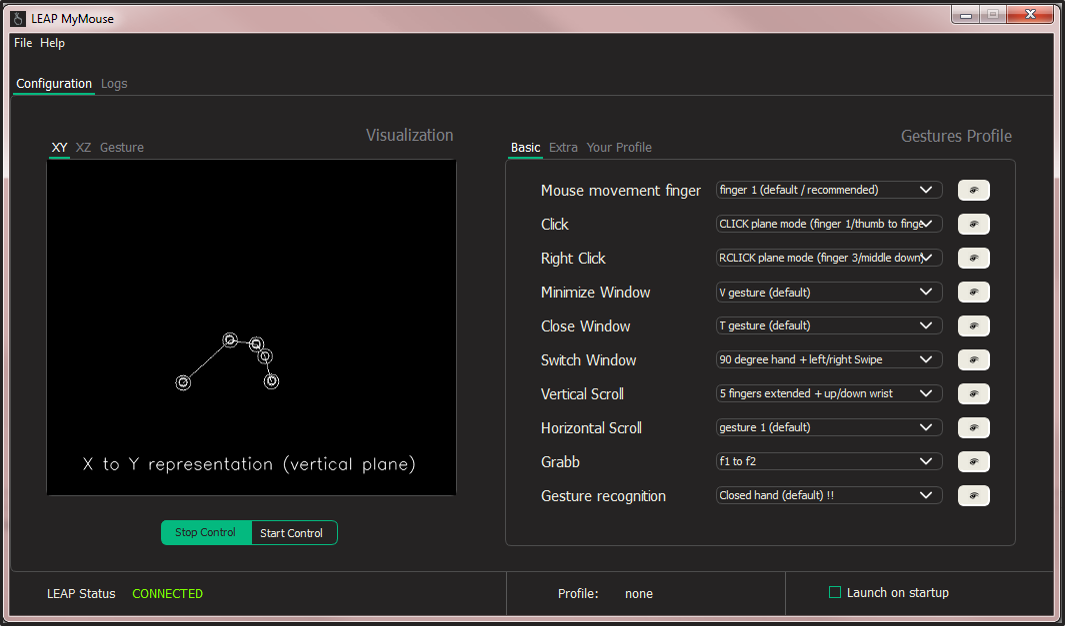

The interface has two main sections. The left section visualizes the data from your hands in both the XY and XZ planes, and the right section contains the configuration settings, where you can customize every gesture-action assignment.
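To illustrate the two views, here is a minimal sketch of projecting a 3-D palm position onto the XY (front) and XZ (top) canvases. It assumes positions in Leap Motion's millimetre coordinates (x to the right, y up, z towards the user); the interaction ranges `X_RANGE`, `Y_RANGE`, `Z_RANGE` and the helpers `to_canvas` and `project_palm` are hypothetical and not taken from the project code.

```python
# Hypothetical interaction box in millimetres (not taken from the project code).
X_RANGE = (-200.0, 200.0)  # left / right of the device
Y_RANGE = (50.0, 450.0)    # height above the device
Z_RANGE = (-200.0, 200.0)  # away from / towards the user


def to_canvas(a, b, a_range, b_range, width, height, flip_b=True):
    """Linearly map a point (a, b) in mm onto a width x height pixel canvas.

    Values outside the ranges are clamped to the canvas border. Canvas rows
    grow downwards, so flip_b=True draws larger b values higher up.
    """
    def unit(v, lo, hi):
        t = (v - lo) / float(hi - lo)
        return min(max(t, 0.0), 1.0)

    u = unit(a, *a_range)
    v = unit(b, *b_range)
    if flip_b:
        v = 1.0 - v
    return int(round(u * (width - 1))), int(round(v * (height - 1)))


def project_palm(position, width=400, height=400):
    """Return the palm's pixel position in the XY (front) and XZ (top) views."""
    x, y, z = position
    front = to_canvas(x, y, X_RANGE, Y_RANGE, width, height)
    # Top view: z grows towards the user, so let it grow down the canvas too.
    top = to_canvas(x, z, X_RANGE, Z_RANGE, width, height, flip_b=False)
    return front, top
```

For example, `project_palm((0, 250, 0), 401, 401)` places a palm at the centre of both 401 x 401 canvases. Clamping keeps a hand that leaves the assumed range pinned to the border instead of disappearing from the view.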

From the menubar you can save and load custom configuration files, among other options.
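A saved configuration could be as simple as a JSON object mapping each gesture to an action name. The sketch below assumes that format; the file layout, the `version` field, the function names and the action names in `KNOWN_ACTIONS` are all hypothetical, not the project's actual format.

```python
import json

# Hypothetical action identifiers; the real project may use different names.
KNOWN_ACTIONS = {"close_window", "minimize_window", "show_desktop", "none"}


def bindings_to_json(bindings):
    """Serialize a {gesture: action} mapping, e.g. {"Z": "close_window"}."""
    return json.dumps({"version": 1, "bindings": bindings}, indent=2, sort_keys=True)


def bindings_from_json(text, known_actions=KNOWN_ACTIONS):
    """Parse a configuration and reject actions the program does not know."""
    data = json.loads(text)
    bindings = data.get("bindings", {})
    unknown = sorted(set(bindings.values()) - set(known_actions))
    if unknown:
        raise ValueError("unknown actions in configuration: %s" % ", ".join(unknown))
    return bindings


def save_config(path, bindings):
    with open(path, "w") as f:
        f.write(bindings_to_json(bindings))


def load_config(path):
    with open(path) as f:
        return bindings_from_json(f.read())
```

Validating on load means a hand-edited file with a typo fails with a clear error instead of silently binding a gesture to nothing.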

- Simple gestures

- Complex gestures

Complex gestures are recognized with the $DollarRecognizer algorithm, which works with a set of predefined letter templates such as T, V, N, D, L, W and Z. The main planned improvement is to integrate the already implemented neural network, trained on the MNIST dataset, into this control, so that the user can draw the digits 1 to 9 in the air as an alternative to these letters, giving the user two ways to perform complex gestures.
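The $DollarRecognizer name matches the $1 Unistroke Recognizer (Wobbrock, Wilson and Li, 2007). As a rough idea of how that recognizer works, here is a simplified Python sketch of its pipeline: resample the stroke to a fixed number of points, rotate it so its indicative angle is zero, scale it to a reference square, move its centroid to the origin, and pick the template with the smallest average point-to-point distance. It omits the golden-section search over rotation angles that the full algorithm performs, it assumes the input is already a 2-D fingertip path (for example projected onto the XY plane), and the two toy templates in `TEMPLATES` are hypothetical stand-ins for the project's letter templates.

```python
import math

N_POINTS = 64        # resampling resolution suggested in the $1 paper
SQUARE_SIZE = 250.0  # reference square size suggested in the $1 paper


def _dist(p, q):
    return math.hypot(q[0] - p[0], q[1] - p[1])


def _centroid(pts):
    n = float(len(pts))
    return sum(p[0] for p in pts) / n, sum(p[1] for p in pts) / n


def resample(points, n=N_POINTS):
    """Resample a stroke into n points equally spaced along its path."""
    pts = [tuple(p) for p in points]
    interval = sum(_dist(a, b) for a, b in zip(pts, pts[1:])) / (n - 1)
    out, acc, i = [pts[0]], 0.0, 1
    while i < len(pts):
        d = _dist(pts[i - 1], pts[i])
        if d > 0 and acc + d >= interval:
            t = (interval - acc) / d
            q = (pts[i - 1][0] + t * (pts[i][0] - pts[i - 1][0]),
                 pts[i - 1][1] + t * (pts[i][1] - pts[i - 1][1]))
            out.append(q)
            pts.insert(i, q)  # q becomes the start of the next segment
            acc = 0.0
        else:
            acc += d
        i += 1
    while len(out) < n:  # floating-point rounding can drop the last point
        out.append(pts[-1])
    return out[:n]


def normalize(points):
    """Resample, rotate the indicative angle to 0, scale to a square, centre at 0."""
    pts = resample(points)
    cx, cy = _centroid(pts)
    theta = math.atan2(cy - pts[0][1], cx - pts[0][0])  # indicative angle
    c, s = math.cos(-theta), math.sin(-theta)
    pts = [((x - cx) * c - (y - cy) * s + cx, (x - cx) * s + (y - cy) * c + cy)
           for x, y in pts]
    xs, ys = [p[0] for p in pts], [p[1] for p in pts]
    w = (max(xs) - min(xs)) or 1.0  # guard against perfectly flat strokes
    h = (max(ys) - min(ys)) or 1.0
    pts = [(x * SQUARE_SIZE / w, y * SQUARE_SIZE / h) for x, y in pts]
    cx, cy = _centroid(pts)
    return [(x - cx, y - cy) for x, y in pts]


def recognize(stroke, templates):
    """Return (best template name, score) for a raw 2-D stroke; 1.0 is a perfect match.

    templates maps names to strokes already passed through normalize().
    """
    candidate = normalize(stroke)
    best_name, best_d = None, float("inf")
    for name, tpl in templates.items():
        d = sum(_dist(p, q) for p, q in zip(candidate, tpl)) / len(candidate)
        if d < best_d:
            best_name, best_d = name, d
    half_diagonal = 0.5 * math.hypot(SQUARE_SIZE, SQUARE_SIZE)
    return best_name, 1.0 - best_d / half_diagonal


# Hypothetical toy templates standing in for the project's letters.
TEMPLATES = {
    "V": normalize([(0, 0), (50, 100), (100, 0)]),
    "Z": normalize([(0, 0), (100, 0), (0, 100), (100, 100)]),
}
```

For example, `recognize([(10, 10), (62, 115), (110, 12)], TEMPLATES)` should pick `"V"`, because normalization removes differences in position and size. The score follows the $1 paper's `1 - d / (half the square's diagonal)`, so a threshold on it can reject strokes that resemble no letter.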

When you draw one of these letters in the air, its assigned action is performed, for example closing or minimizing a window or showing the desktop.
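Binding a recognized letter to its action can be a plain dictionary lookup. In this sketch the handlers only return strings, and `ACTIONS`, `BINDINGS`, `dispatch` and the `min_score` threshold of 0.8 are all hypothetical; a real handler would call the operating system or window manager.

```python
# Placeholder handlers: a real version would call the OS or window manager.
def close_window():
    return "closed window"


def minimize_window():
    return "minimized window"


def show_desktop():
    return "showed desktop"


ACTIONS = {
    "close_window": close_window,
    "minimize_window": minimize_window,
    "show_desktop": show_desktop,
}

# Hypothetical user configuration: recognized letter -> action name.
BINDINGS = {"Z": "close_window", "V": "minimize_window", "D": "show_desktop"}


def dispatch(letter, score, bindings=BINDINGS, min_score=0.8):
    """Run the action bound to a recognized letter.

    Returns the handler's result, or None when the match is too weak or the
    letter has no binding, so that a sloppy stroke triggers nothing.
    """
    if score < min_score:
        return None
    action = ACTIONS.get(bindings.get(letter))
    return action() if action else None
```

Ignoring low-scoring matches is a design choice: with air gestures, stray hand movements are common, and doing nothing is safer than closing the wrong window.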

Extra: some PowerPoint interactions.

Planned features include:

- Integrate the multilayer neural network into the interaction

- Add more templates

- Add gifs

- Pretty hand visualization :)

- The full project report (PDF, in Spanish) can be downloaded here: https://mega.nz/#!P0QgQIqa!cOsuy9KXdbuxyVKCUtuxyoTVq2GHXsHhX0H1f_9Bp2o

- Mario Varas: elasgard@hotmail.es