-

-

Notifications

You must be signed in to change notification settings - Fork 43

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Internals refactor + renewed focus on perf #17

Merged

Merged

Conversation

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

…e prime can field in uint32

…th - passes all tests (32-bit and 64-bit)

|

Comparing:

The issue doesn't seem to be in moveMem. Could it be an issue in the carry flags and ccopy interaction at constantine/constantine/arithmetic/limbs.nim Lines 258 to 286 in 25972a2

|

|

Fixed the 2 leftover bugs:

|

Sign up for free

to join this conversation on GitHub.

Already have an account?

Sign in to comment

Add this suggestion to a batch that can be applied as a single commit.

This suggestion is invalid because no changes were made to the code.

Suggestions cannot be applied while the pull request is closed.

Suggestions cannot be applied while viewing a subset of changes.

Only one suggestion per line can be applied in a batch.

Add this suggestion to a batch that can be applied as a single commit.

Applying suggestions on deleted lines is not supported.

You must change the existing code in this line in order to create a valid suggestion.

Outdated suggestions cannot be applied.

This suggestion has been applied or marked resolved.

Suggestions cannot be applied from pending reviews.

Suggestions cannot be applied on multi-line comments.

Suggestions cannot be applied while the pull request is queued to merge.

Suggestion cannot be applied right now. Please check back later.

This PR is a complete overhaul of Constantine internal.

Change of priorities

This stems for a renewed focus on performance.

Instead of focusing on constant-time, code size and performance in this order, the library will focus on

constant-time, performance and code size.

The new focus on performance is due to the following articles https://medium.com/loopring-protocol/zksnark-prover-optimizations-3e9a3e5578c0, https://hackmd.io/@zkteam/goff and the Cambrian explosion of Zero-Knowledge Proof protocols (ZKP).

In particular the first post shows that machines used for ZKP protocols start from $1000 (16 cores + 64GB of RAM) and in discussion at EthCC with Consensys ZKP team, I realised that clusters with ~100 of cores would be interesting to use.

At those scale, squeezing the most performance possible from the low-level implementation would significantly reduce the cost of the hardware, and might even make assembly (and it's auditing) worthwhile in the future.

Performance

Here are the performance figures, before/after.

GCC abysmal performance

Note that GCC generates very inefficient and also bloated code for multiprecision arithmetic, even when using addcarry and subborrow intrinsics.

This is so bad that GMP has a dedicated web page: https://gmplib.org/manual/Assembly-Carry-Propagation.html

Example in Godbolt: https://gcc.godbolt.org/z/2h768y

GCC

Clang

There are a couple of issues related in GCC tracker:

Benchmark & Compilation flags

Nim devel from Jan 13 2020

-d:danger

Benchmark is https://github.com/mratsim/constantine/blob/191bb771/benchmarks/bench_eth_curves.nim

which benchmarks the library on the 3 Ethereum 1 and 2 elliptic curves:

The most important item is the field Multiplication it's the building block that makes everything (exponentiation and inversion in particular) slow.

Important: My CPU is overclocked, the hardware clock is using the CPU nominal frequency instead of the overclocked frequency meaning the benchmark are only meaningful to compare between runs on my own PC

GCC before PR

Clang before PR

GCC after PR

Clang after PR

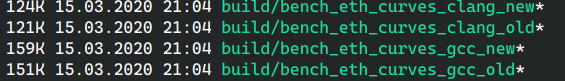

Code-size

The code-size increase to support 3 curves (with BN254 and secp256k1 using the same number of limbs) is very reasonable over the old code.

Explanation of the internals

The old internals were using the same representation as BearSSL BigInt, in particular for words uint64 words only 63-bit were used and the last one stored the carry flag as there is no easy access to the carry flag in C.

The issue caused by carries is visible in this code to handle carries in Nim compile-time VM:

constantine/constantine/arithmetic/precomputed.nim

Lines 34 to 95 in 3c9d070

vs the old representation:

constantine/constantine/arithmetic/precomputed.nim

Lines 37 to 55 in 191bb77

However the BearSSL representation has a couple of issues:

Lastly at least with Clang we have access to efficient add with carry intrinsics.

So the new representation uses the full 64-bit and uses intrinsics or uint128 to deal with add with carries.

Furthermore it uses the technique described in https://hackmd.io/@zkteam/modular_multiplication to improve speed while staying at a high-level.

Why no lazy carries or reduction

As mentioned #15 lazy carries and reductions seem to be popular. Those also have issues:

or substraction requires to reduce the Field Element

Further improvements:

CMOV / Ccopy

Currently conditional mov and copy use assembly and do a

testbefore.The test only needs to be done once when looping over bigints.

Thankfully the impact should be very small or invisible due to instruction level parallelism.

Squaring

This is planned

Multiplication

Further speed improvements are possible but will probably require either inflexible Assembly/inline assembly (i.e. always compiled-in and with predetermined number of limbs) or a mini-compiler.

An example for multi-precision addition is available in

constantine/constantine/primitives/research/addcarry_subborrow_compiler.nim

Lines 34 to 79 in cc75582

As explained in A Fast Implementation of the Optimal Ate Pairing over BN curve on Intel Haswell Processor, Shigeo Mitsunari, 2013, https://eprint.iacr.org/2013/362.pdf one of the main bottleneck on x86 is that the MUL instruction is very inflexible in terms of register and requires lots of mov before and after which significantly hinder throughput of Montgomery multiplication.

Furthermore, it pollutes the carry flags, by using MULX instead we can avoid that and even use ADCX and ADOX instructions to handle 2 independent carry chains and benefit from instruction-level parallelism as mentioned by Intel in https://www.intel.com/content/dam/www/public/us/en/documents/white-papers/ia-large-integer-arithmetic-paper.pdf