
feat(assistant): Assistant and AssistantFiles api #1803


This is still missing threads, messages and runs.


The assistant functionality is not complete. Maybe flag it as a draft?

@grazz this is the assistant/assistant file API itself, which is complete. For #1273 to be complete you'd need other APIs like threads, runs, etc. The assistant API itself is just a grouping of uploaded files and a model. You'd need to create a thread and a run in order for this feature to be mostly done: https://platform.openai.com/docs/api-reference/runs/createThreadAndRun. You'd then either stream the output or retrieve the message at a later point in time.
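The reply above describes an assistant as little more than a model plus a set of uploaded files. The Go sketch below models just that; the `Assistant` struct, its JSON field names (borrowed from the OpenAI Assistants v1 object shape) and the `AttachFile`/`DetachFile` helpers are illustrative assumptions, not LocalAI's actual types. Threads, messages and runs are deliberately absent, matching what this PR leaves out.

```go
package main

import (
	"encoding/json"
	"fmt"
	"time"
)

// Assistant is a hypothetical, simplified assistant object: a model name
// plus the IDs of previously uploaded files. Field names follow the OpenAI
// Assistants v1 object shape; LocalAI's real struct may differ.
type Assistant struct {
	ID           string   `json:"id"`
	Object       string   `json:"object"`
	CreatedAt    int64    `json:"created_at"`
	Name         string   `json:"name,omitempty"`
	Model        string   `json:"model"`
	Instructions string   `json:"instructions,omitempty"`
	FileIDs      []string `json:"file_ids"`
}

// AttachFile adds a file ID unless it is already attached and reports
// whether anything changed.
func (a *Assistant) AttachFile(fileID string) bool {
	for _, id := range a.FileIDs {
		if id == fileID {
			return false
		}
	}
	a.FileIDs = append(a.FileIDs, fileID)
	return true
}

// DetachFile removes a file ID and reports whether it was attached.
func (a *Assistant) DetachFile(fileID string) bool {
	for i, id := range a.FileIDs {
		if id == fileID {
			a.FileIDs = append(a.FileIDs[:i], a.FileIDs[i+1:]...)
			return true
		}
	}
	return false
}

func main() {
	a := &Assistant{
		ID:        "asst_example", // hypothetical ID
		Object:    "assistant",
		CreatedAt: time.Now().Unix(),
		Model:     "example-model", // hypothetical model name
		FileIDs:   []string{},
	}
	a.AttachFile("file-123")
	a.AttachFile("file-123") // duplicate, ignored
	out, err := json.Marshal(a)
	if err != nil {
		panic(err)
	}
	fmt.Println(string(out))
}
```

Keeping the assistant as plain data like this is what makes the later pieces (threads and runs) separable: a run would reference an assistant by ID rather than extend it.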

go.mod (outdated)

`replace github.com/M0Rf30/go-tiny-dream => /Users/schristou/Personal/LocalAI/sources/go-tiny-dream`

`replace github.com/mudler/go-piper => /Users/schristou/Personal/LocalAI/sources/go-piper`


I think this is added when building outside of Docker and should not be included.


Ah! Yeah, you're right. Will remove this.

Yup, LGTM.
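Local `replace` directives like the two in the `go.mod` excerpt above are easy to commit by accident when building outside Docker. As a hedged sketch (not part of LocalAI's tooling), a small stdlib-only Go check could flag `replace` targets that are local filesystem paths, which the Go modules reference defines as absolute paths or paths starting with `./` or `../`. It is line-based and only recognizes Unix-style absolute paths; a sturdier check would likely parse the file with `golang.org/x/mod/modfile` instead.

```go
package main

import (
	"bufio"
	"fmt"
	"os"
	"strings"
)

// localReplaces returns every replace directive whose target is a local
// path. It understands single-line "replace a => b" directives and
// gofmt-style "replace ( ... )" blocks; it is a sketch, not a full parser.
func localReplaces(gomod string) []string {
	var found []string
	inBlock := false
	sc := bufio.NewScanner(strings.NewReader(gomod))
	for sc.Scan() {
		line := strings.TrimSpace(sc.Text())
		// Drop trailing "//" comments.
		if i := strings.Index(line, "//"); i >= 0 {
			line = strings.TrimSpace(line[:i])
		}
		if line == "replace (" {
			inBlock = true
			continue
		}
		if inBlock && line == ")" {
			inBlock = false
			continue
		}
		var directive string
		if strings.HasPrefix(line, "replace ") {
			directive = strings.TrimSpace(strings.TrimPrefix(line, "replace "))
		} else if inBlock && line != "" {
			directive = line
		} else {
			continue
		}
		parts := strings.SplitN(directive, "=>", 2)
		if len(parts) != 2 {
			continue
		}
		fields := strings.Fields(parts[1])
		if len(fields) == 0 {
			continue
		}
		target := fields[0]
		if strings.HasPrefix(target, "/") || strings.HasPrefix(target, "./") || strings.HasPrefix(target, "../") {
			found = append(found, directive)
		}
	}
	return found
}

func main() {
	data, err := os.ReadFile("go.mod")
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(2)
	}
	if bad := localReplaces(string(data)); len(bad) > 0 {
		for _, d := range bad {
			fmt.Println("local replace directive:", d)
		}
		os.Exit(1) // non-zero exit so a CI step would fail
	}
}
```

Run from the repository root, it exits non-zero when a directive such as the `go-piper` one above is present, which would let a CI job catch it before review.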

`os.MkdirAll(appConfig.ModelPath, 0755)`

`// Load config jsons`

`utils.LoadConfig(appConfig.UploadDir, openai.UploadedFilesFile, &openai.UploadedFiles)`


I'm currently in the middle of refactoring things such that api.go is no longer responsible for general application startup tasks, so that we can support multiple transports. Would it be possible to move this config file handling code (and perhaps some of the related functionality) down to core/startup?

`return c.Status(fiber.StatusBadRequest).JSON(fiber.Map{"error": "Cannot parse JSON"})`

`}`

`if !modelExists(ml, request.Model) {`


This one is a bit pickier: my refactor splits things up "around hereish", so that the business logic implementing each piece of functionality would live down in core/services.

If this isn't clear enough, feel free to just reply to this comment saying so or close it - I can help with this or put it on the to-do list for later 👍

[Only flagging this site, but I mean this for all the endpoints]

`@@ -20,6 +20,7 @@ type ApplicationConfig struct {`

`ImageDir string`

`AudioDir string`

`UploadDir string`

`ConfigsDir string`


It may make more sense to call this `FilesDir`; at first glance I thought this was a directory for LocalAI config files.


Looks good here. The points raised by @dave-gray101 can be addressed in a follow-up; I don't think they should be blockers. There's also a small nit in https://github.com/mudler/LocalAI/pull/1803/files#r1536639901 that can be addressed later.

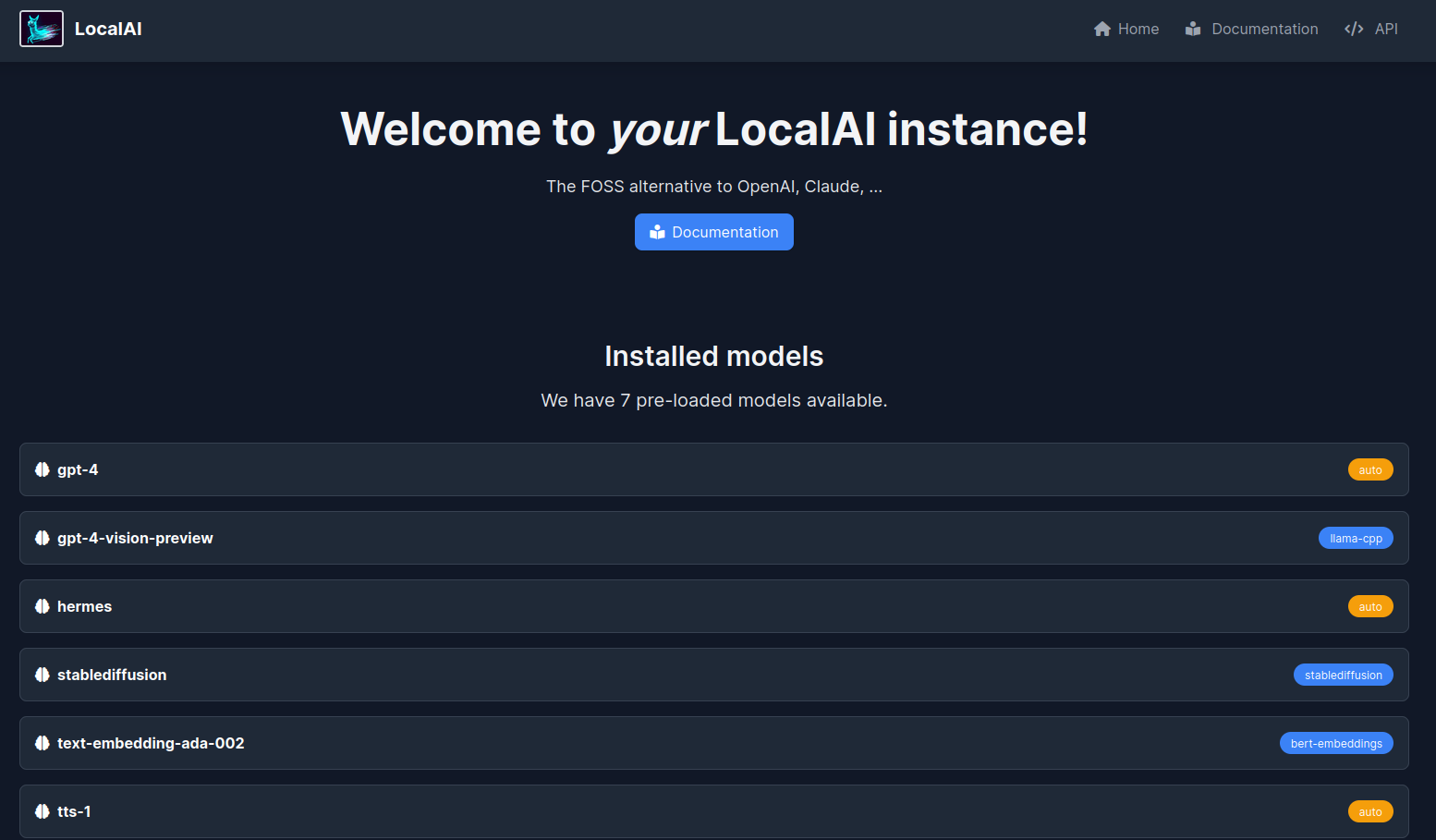

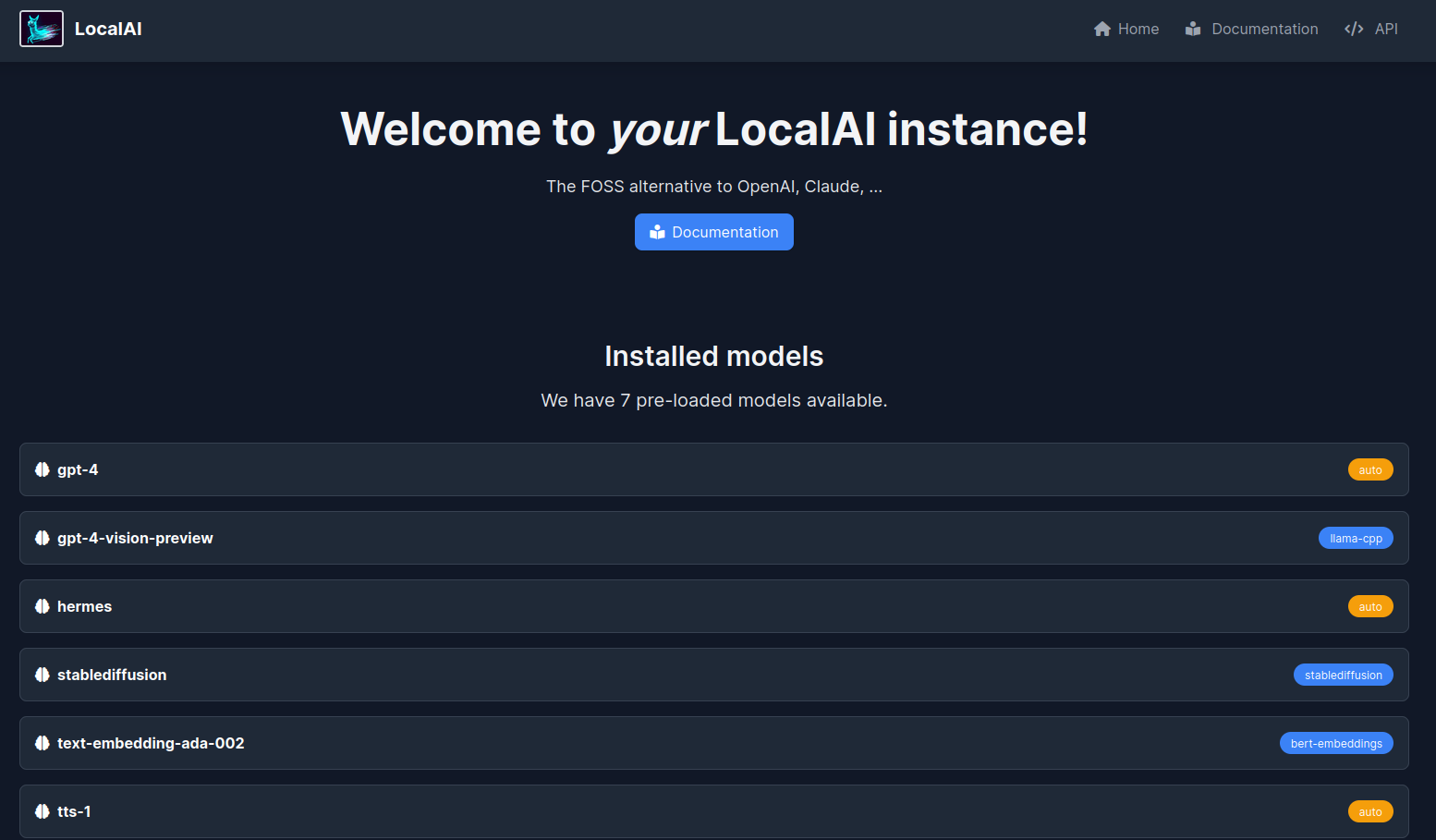


Description

This PR adds the Assistants and Assistant Files APIs.
