Preview3

This page is separated into a number of sections:

- Introduction

- Bootstrapping - Overview of cloudinit.d, the bootstrapping tool.

- Running System - Overview of the EPU system and epumgmt, the EPU management tool.

- Installation - Step by step instructions for installing the software.

Then Next Steps -- after installation, choose your adventure:

- A simple example: Sleeper Must Awaken

- Basic OOI services

- SQLStream example

- Example of using only cloudinit.d: Rabbit stress testing

***

The EPU system is used to control IaaS based services that:

- Do not disappear because failures are compensated with replacements.

- Can be configured to be "elastic" if the service can coordinate any number of participant instances being launched to join in the work -- and can tolerate instances dropping out.

The EPU system itself runs in virtual machines (in the future we hope to make a more standalone "lite" version) and is made up of two central software components:

- A central provisioner service that is used to launch IaaS instances and make sure they contextualize as desired.

- A dedicated group of EPU services for each service being "EPU-ified", responsible for making sure that the service has the capacity desired by policy for the situation at hand.

Something needs to bootstrap and monitor the base system itself from a pre-existing system, and that is cloudinit.d. This tool is not specific to the EPU architecture but it is integral to launching and monitoring it. It takes a "launch plan" as input, which is made up of bootlevel-style descriptions of what needs to run.

Once launched, the epumgmt tool can be used to inspect what is happening in the EPU system. Since cloudinit.d is not aware of VMs that are launched by the provisioner service (the provisioner service it launches is "just another service"), epumgmt is also what should be used to cleanly tear a running EPU system down.

***

cloudinit.d consumes a "launch plan" which is a description of services to run, broken out into bootlevels. After launching, the tool can then be used to monitor each configured service/VM.

cloudinit.d is IaaS-agnostic. Even within a single launch plan, you can launch different VMs on different clouds.

Each VM is launched/verified in the order necessary for dependencies to work out. The tool will not proceed past each level until a verification has been run (the verification step is particular to each service; it's also optional).

Each level can contain any number of service/VM configurations: it could be just one or it could be 100. As in the Unix runlevel concept (cloudinit.d's namesake), if there is more than one thing configured in a level then you are stating that they can be started simultaneously -- as long as it's after all the things in the previous levels.

Further, attributes can be provided from one level to the next. For example, the hostname of a dependency started in a previous level can be specified as a configuration input to a subsequent VM. In the EPU system, this is used to bootstrap things like RabbitMQ and Cassandra and feed their coordinates into ION service configurations (cf. this outline of the EPU bootstrap).
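The ordering and attribute passing described above can be modeled in a few lines of Python. This is only a sketch of the concept, not cloudinit.d's implementation: the function `run_launch_plan`, the plan structure (a list of lists of service names), and the `launch`/`verify` callables are all hypothetical names chosen for illustration.

```python
from concurrent.futures import ThreadPoolExecutor


def run_launch_plan(levels, launch, verify=None):
    """Run bootlevels in order; services inside one level start concurrently.

    levels: list of lists of service names (hypothetical plan structure).
    launch: callable(name, attrs) -> dict of attributes the service exports.
    verify: optional callable(name, exported) -> bool.
    """
    attrs = {}  # attributes exported by services in earlier levels
    for number, services in enumerate(levels, start=1):
        snapshot = dict(attrs)  # a service only sees earlier levels
        with ThreadPoolExecutor(max_workers=max(len(services), 1)) as pool:
            exported = list(pool.map(lambda name: launch(name, snapshot), services))
        # Do not proceed to the next level until every service is verified.
        for name, result in zip(services, exported):
            if verify is not None and not verify(name, result):
                raise RuntimeError("level %d: %s failed verification" % (number, name))
            for key, value in result.items():
                attrs["%s.%s" % (name, key)] = value
    return attrs


seen = {}  # records which attributes each service could see at launch time


def fake_launch(name, attrs):
    # Stand-in for starting a VM; a real launch would contact an IaaS.
    seen[name] = sorted(attrs)
    return {"hostname": name + ".example.org"}


plan = [["rabbitmq", "cassandra"], ["provisioner"]]
result = run_launch_plan(plan, fake_launch, verify=lambda name, out: "hostname" in out)
print(seen["provisioner"])
```

In this model the second-level "provisioner" receives the hostnames exported by both first-level services, while the first-level services see nothing from each other because they may start simultaneously.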

To learn the details of the configuration and verification systems, see the README. Below we will also give instructions to start sample launch plans, which is a good way to learn the details as well.

***

Once cloudinit.d initiates an EPU-specific launch plan and confirms that each bootlevel was successful using its verification mechanisms, the EPU is off and running.

For each EPU-ified service, a non-zero "minimum instances" configuration might be in place in your launch plan. That will cause worker instances to be launched immediately via the EPU provisioner service. At this point, there are now VM instances running under your credential that cloudinit.d does not know about.

This is where epumgmt comes into play. epumgmt is a tool that inspects a cloudinit.d launch (via API) and looks for something named "provisioner" as well as any name with a prefix of "epu-".

Using this convention, it can then inspect the EPU system. For example, it can analyze log files for specific events. That helps for information purposes and troubleshooting as well as for running system-level tests.
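The naming convention is simple enough to show directly. The following is a sketch of the matching rule only, assuming a plain list of service names as input; how epumgmt actually obtains the names from cloudinit.d is not shown, and `select_epu_services` is a hypothetical helper.

```python
def select_epu_services(service_names):
    """Split launch-plan service names by the convention described above.

    Returns (provisioner_names, controller_names): the service named exactly
    "provisioner" and every service whose name starts with "epu-".
    """
    provisioner = [n for n in service_names if n == "provisioner"]
    controllers = [n for n in service_names if n.startswith("epu-")]
    return provisioner, controllers


# Hypothetical service names from a launch plan.
names = ["rabbitmq", "provisioner", "epu-sleeper", "cassandra", "epu-worker2"]
provisioner, controllers = select_epu_services(names)
print(provisioner, controllers)
```

Anything that does not match the convention (here "rabbitmq" and "cassandra") is ignored, which is why the names in a launch plan matter if you want epumgmt to find the EPU pieces.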

Also, it is equipped with a mechanism to tear down the system (it can send a special signal to the provisioner service to shut down all the workers). That is important to note because if you tear down with cloudinit.d, then you would leave the EPU worker instances running. We will learn the details below when it's time to launch and manage sample launch plans.

In the following overview diagram, note for now the major sections.

- Section #2: cloudinit.d and launch-plans. The database here is based on SQLite: it's just a file, no need to worry about setting up any DB on the node.

- Section #3: cloudinit.d is itself configured with credentials to interact with one or more IaaS systems. It's here that the base system instances are started which is section #4.

- Section #4: any supporting infrastructure (like message brokers, context broker, and Cassandra instances for example) and the EPU infrastructure itself (the provisioner and EPU controllers etc).

- Section #5: When policy dictates, the EPU controllers use the provisioner to instantiate worker nodes (Section #6) using its own configured credentials and its own configured IaaS systems. In the samples below these will be the same IaaS and IaaS credentials as cloudinit.d used, though.

- Section #1: Use epumgmt to inspect the EPU infrastructure or terminate the entire system (including the worker nodes launched by the provisioner).

The "support nodes" in this diagram are the "dedicated group of EPU services" mentioned in the introduction. Each EPU-ified service in the system needs to have these "watching" services (an instance of "EPU controller" and "Sensor Aggregator") that make decisions about that particular service's worker node pool.

Decisions about the number of instances (both to compensate failures and to expand and contract to handle workloads/deadlines) are based on sensor information and configured policies. The heart of the decision making process takes place in a "decision engine".

There are default implementations of the decision engine with many configurations but it is fully customizable if you drop to writing Python. There is an abstraction in place that splits the EPU controller service into two parts.

- (1) The "outer shell" that takes care of all the messaging and persistence work to make the EPU behave as it should in the context of the EPU system as a whole.

- (2) The decision engine itself is a configurable Python class that follows a certain fairly simple API and semantics for getting information about the system or carrying out changes in the system. The actual decision making logic can be as complex as you want for custom engines.

That would be for some pretty advanced cases: in the samples below we are going to work with the default decision engine: npreserving.

The npreserving decision engine maintains a certain "N" number of worker instances. If one exhibits an issue, it will be replaced. You configure this number in the initial configuration but there is also a remote operation that lets you reconfigure it (the SQLStream/ANF dynamic controller integrates with this).
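To make the "maintain N" idea concrete, here is a minimal sketch of the decision such an engine has to make on each cycle. It is not the real npreserving code or the real decision engine API: the function name, the instance-state strings, and the choice to terminate failed instances are assumptions for illustration.

```python
def npreserving_decide(preserve_n, instances):
    """Return (number_to_launch, ids_to_terminate) for an N-preserving policy.

    instances maps an instance id to a state string. The state names
    ("running", "pending", "failed") are hypothetical.
    """
    healthy = sorted(i for i, s in instances.items() if s in ("running", "pending"))
    failed = sorted(i for i, s in instances.items() if s == "failed")
    to_terminate = list(failed)  # assumption: broken instances are cleaned up
    surplus = len(healthy) - preserve_n
    if surplus > 0:
        # N was lowered: shed the extra healthy instances.
        to_terminate.extend(healthy[-surplus:])
        return 0, to_terminate
    # At or below N: launch replacements to make up the difference.
    return -surplus, to_terminate


pool = {"i-1": "running", "i-2": "failed", "i-3": "running"}
print(npreserving_decide(3, pool))  # one failure, so one replacement is needed
print(npreserving_decide(1, pool))  # N reconfigured down to 1
```

The second call mimics the remote reconfigure operation: the same pool is evaluated against a new N, and the engine sheds instances instead of launching them.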

An alternative is the queue-length inspecting decision engine, which will not be used in the sample launches below. With it, the "queuelen_high_water" and "queuelen_low_water" settings cause the infrastructure to look at pending messages in the service's message queue to make decisions.

- When the high water is passed, the queue is considered backed-up and a compensating node is launched.

- When the queue's pending message count drops below the low water configuration, a node is taken away.

- If there is still work on the queue, the node count will not drop to zero.

- Also, you can force the minimum node count (regardless of queuelen).

Enabling this will be part of a future documentation section.
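Until that section exists, the four rules above can be read as the following sketch. It is a model of the stated rules, not the actual engine: the function and parameter names are hypothetical (only the high/low water idea comes from the text), and it assumes one node is added or removed per decision cycle.

```python
def queuelen_decide(queue_length, node_count, high_water, low_water, min_nodes=0):
    """Return the desired node count after one decision cycle."""
    desired = node_count
    if queue_length > high_water:
        desired = node_count + 1  # queue is backed up: add a compensating node
    elif queue_length < low_water:
        desired = node_count - 1  # queue is nearly drained: take a node away
    floor = min_nodes  # forced minimum, regardless of queue length
    if queue_length > 0:
        floor = max(floor, 1)  # never drop to zero while work remains
    return max(desired, floor)


print(queuelen_decide(20, 2, high_water=10, low_water=3))  # above high water
print(queuelen_decide(1, 1, high_water=10, low_water=3))   # work still pending
print(queuelen_decide(0, 1, high_water=10, low_water=3))   # queue is empty
```

One side effect of writing the floor this way is that a pool with zero nodes and pending work is raised to one node; whether the real engine behaves like that is not stated here.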

***

OK, you have some background, time to get started with some sample launch plans.

There are prerequisites, though: there are things you must install on a preexisting system and credentials you must obtain for preexisting services.

For the samples we will use the EC2 IaaS service, but the software is compatible with Nimbus IaaS as well.

For cloudinit.d (there is a sample below that only uses cloudinit.d):

- (1) Python 2.5+ (but not Python 3.x)

- (2) virtualenv installation

- (3) git installation

- (4) cloudinit.d software and dependencies

- (5) EC2 credentials

For all of the other samples:

- (1) Python 2.5+ (but not Python 3.x)

- (2) virtualenv installation

- (3) git installation

- (4) cloudinit.d software and dependencies

- (5) EC2 credentials

- (6) EC2 credentials for EPU infrastructure

- (7) A default security group in EC2 that allows incoming connections to port 22 and port 5672

- (8) Context Broker credentials

- (9) epumgmt software and dependencies

***

- (1) Python 2.5+ (but not Python 3.x)

Installed to your system via package manager. This has all only been tested on Linux.

$ python --version
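If you prefer to check the requirement from a script, the rule "2.5 or later, but not 3.x" can be written as a small predicate. This is just an illustrative helper (the name `is_supported_python` is made up here), written so it runs on any Python version.

```python
import sys


def is_supported_python(version_info):
    """True for Python 2.5 through 2.x, False for older 2.x and for 3.x."""
    major, minor = version_info[0], version_info[1]
    return major == 2 and minor >= 5


if not is_supported_python(sys.version_info):
    print("Unsupported interpreter: %d.%d" % (sys.version_info[0], sys.version_info[1]))
```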

- (2) virtualenv installation

Installed to your system via package manager. Alternatively try:

$ easy_install virtualenv

Visit this page for more information.

Run the following commands to set up and activate one:

$ cd /tmp # sample directory

$ virtualenv --no-site-packages --python=python2.6 venv

$ cd /tmp/venv

$ source bin/activate

After that last command you are now in a sandboxed Python environment and can install libraries at will (without needing a system account).

- (3) git installation

You temporarily need a git installation to get the software (as a last resort there should be a tarball available at those GitHub links).

$ git

- (4) cloudinit.d software and dependencies

$ mkdir deps

$ cd deps/

$ pwd

/tmp/venv/deps

$ git clone https://github.com/nimbusproject/cloudinit.d.git

$ cd cloudinit.d/

$ git checkout -b preview3 ceipreview3

$ which python # Checking that virtualenv is active.

/tmp/venv/bin/python

$ python setup.py install

$ cd ../../

$ which cloudinitd

/tmp/venv/bin/cloudinitd

$ cloudinitd -h

$ cloudinitd commands

- (5) EC2 credentials

You will need to register with Amazon Web Services and create an account to launch EC2 virtual machines. In the future we will provide instructions for using Nimbus IaaS as well.

In your account you will find values for AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY. The access key is not your account ID # but is a key listed in the credentials section.

You will also need to register an SSH key with EC2 (in the US West region but go ahead and do both East and West for good measure). You can name it anything you like if you are just using cloudinit.d but convention for EPU things is to use the name 'ooi'.

Keep these handy: you will install them into the "main.conf" file of a launch plan (this is what cloudinit.d uses to start VMs).

(TODO: At this point perhaps ask the reader to try a simple cloudinit.d only launch?)

***

For launch plans that include launching the EPU framework (that is most of them below), you will do all of the steps in the previous section but also the following:

- (6) EC2 credentials for EPU infrastructure

The EC2 credentials you used for cloudinit.d can also be used for the provisioner service. They will go into the "provisioner/cei_environment" of a launch plan if you are using the EPU infrastructure (this is what the provisioner service uses to start VMs).

That could be different than the cloudinit.d configuration but probably don't get too fancy too quickly :-)

An EC2 SSH key named 'ooi' (convention)

You will need to register an SSH key with EC2 (in the US West region but go ahead and do both East and West for good measure) with the name 'ooi'.

-

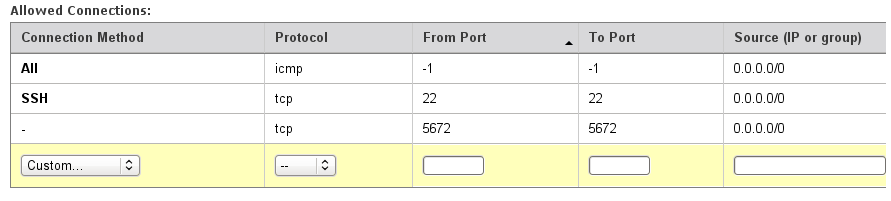

(7) A default security group in EC2 that allows incoming connections to port 22 and port

You will need to use a default "security group" that opens port 22 (for SSH) and port 5672 (for RabbitMQ connections). Here is what the tested one looks like in the AWS control panel:
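Once an instance is running, one way to confirm that the security group really admits both ports is to attempt a TCP connection to each. The sketch below uses only the Python standard library; the helper names and the `REQUIRED_PORTS` table are hypothetical, and a successful connection only shows that something is listening and reachable, not that the service behind it is healthy.

```python
import socket

# Ports named in the text: SSH and RabbitMQ.
REQUIRED_PORTS = {"ssh": 22, "rabbitmq": 5672}


def port_is_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        sock = socket.create_connection((host, port), timeout=timeout)
    except (socket.error, socket.timeout):
        return False
    sock.close()
    return True


def check_host(host, timeout=3.0):
    """Map each required port name to whether it is reachable on host."""
    return dict((name, port_is_open(host, port, timeout))
                for name, port in REQUIRED_PORTS.items())
```

Calling `check_host` with the public hostname of a launched VM returns a dictionary such as `{"ssh": True, "rabbitmq": False}`, which points at either the security group or the service itself.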

- (8) Context Broker credentials

The context broker is used to configure images that are started via the provisioner service.

In the near future these launch plans will run a context broker for you and configure the credentials automatically, but for now you should get an account on the public, standalone context broker (email nimbus@mcs.anl.gov for credentials).

These will go into the "provisioner/cei_environment" of a launch plan as well.

(Basically, think of the "cei_environment" file as a repository of credentials the provisioner needs to do its work with IaaS and Context Broker services).

- (9) epumgmt software and dependencies

$ which python # Checking that virtualenv is active.

/tmp/venv/bin/python

$ easy_install simplejson

$ cd /tmp/venv/deps

$ git clone https://github.com/nimbusproject/cloudyvents.git

$ git clone https://github.com/nimbusproject/cloudminer.git

$ (cd cloudyvents && python setup.py install)

$ (cd cloudminer && python setup.py install)

$ cd /tmp/venv

$ git clone https://github.com/nimbusproject/epumgmt.git

$ cd epumgmt

$ git checkout -b preview3 ceipreview3

$ ./sbin/check-dependencies.sh

OK, looks like the dependencies are set up.

$ ./bin/epumgmt.sh -h

***

Now you need to install the sample launch plans.

$ cd /tmp/venv

$ git clone https://github.com/ooici/launch-plans.git

$ cd launch-plans

$ git checkout -b preview3 ceipreview3

$ ls sandbox/burned-sleeper/

Follow the simple steps in "/tmp/venv/launch-plans/sandbox/burned-sleeper/README.txt"

Those steps will install the provisioner credentials (see above).

For cloudinit.d, you will export the following environment variables:

- CLOUDBOOT_IAAS_ACCESS_KEY

- CLOUDBOOT_IAAS_SECRET_KEY

They can also be installed directly as strings in "/tmp/venv/launch-plans/sandbox/burned-sleeper/main.conf"
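If you go the environment variable route, it can save a failed launch to confirm both variables are set first. The following is a small sketch using the two variable names above; the helper `missing_credentials` is hypothetical and is not part of cloudinit.d.

```python
import os

REQUIRED = ("CLOUDBOOT_IAAS_ACCESS_KEY", "CLOUDBOOT_IAAS_SECRET_KEY")


def missing_credentials(environ=None):
    """Return the names of required variables that are unset or empty."""
    environ = os.environ if environ is None else environ
    return [name for name in REQUIRED if not environ.get(name)]


missing = missing_credentials()
if missing:
    print("Export these before launching: " + ", ".join(missing))
```

The check deliberately reports only the variable names, never their values, so it is safe to paste the output into a bug report.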

(Still in progress): Choose your adventure:

- A simple EPU example: Sleeper Must Awaken

- Basic OOI services

- SQLStream example

- Example of using only cloudinit.d: Rabbit stress testing