-

Notifications

You must be signed in to change notification settings - Fork 728

Add test for InverseMelScale #448

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

|

@vincentqb Once we settle on the test, we can do;

|

|

For the record; code for plot |

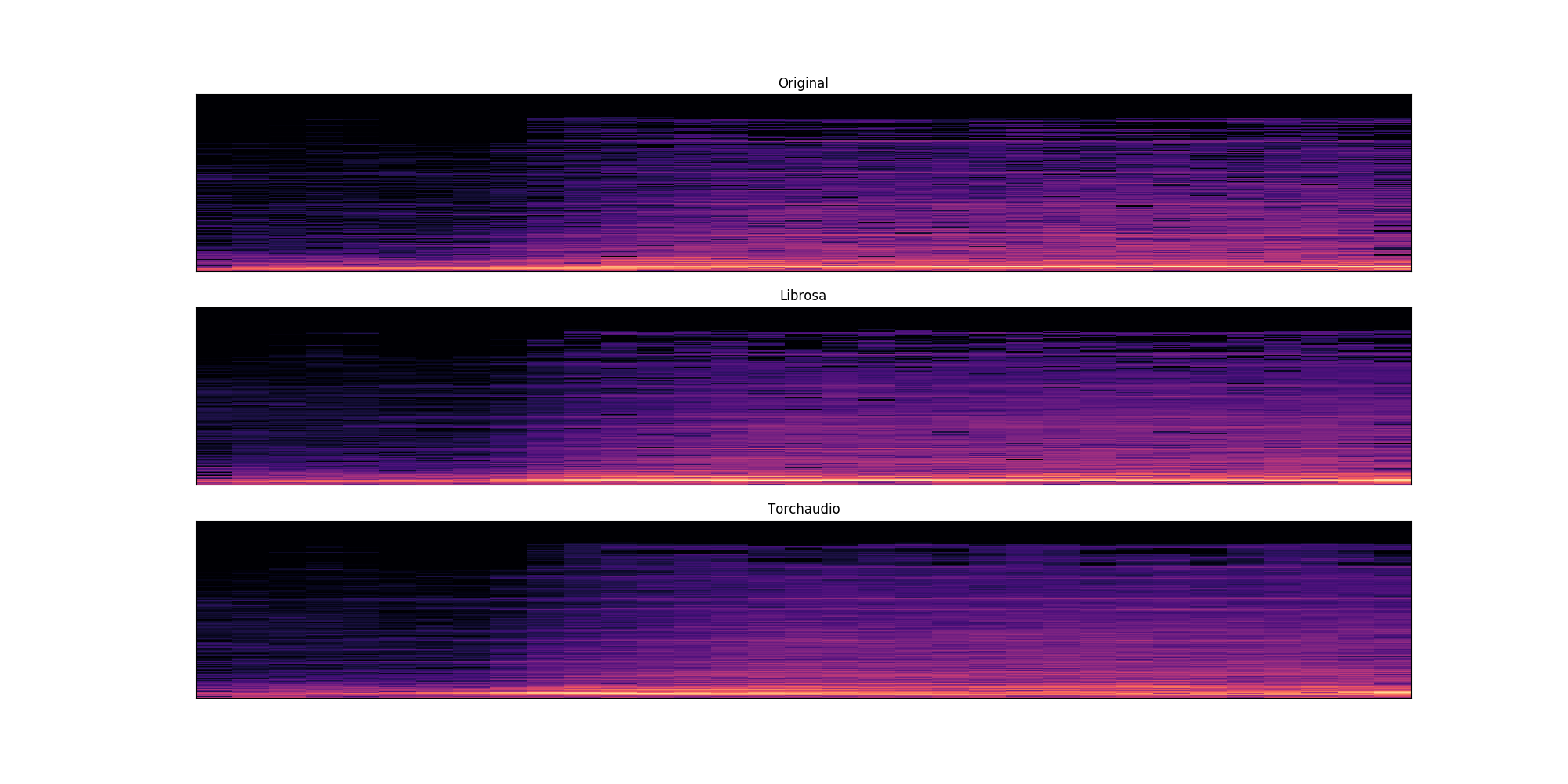

This PR follows up pytorch#366 and adds test for `InverseMelScale` (and `MelScale`) for librosa compatibility. For `MelScale` compatibility test; 1. Generate spectrogram 2. Feed the spectrogram to `torchaudio.transforms.MelScale` instance 3. Feed the spectrogram to `librosa.feature.melspectrogram` function. 4. Compare the result from 2 and 3 elementwise. Element-wise numerical comparison is possible because under the hood their implementations use the same algorith. For `InverseMelScale` compatibility test, it is more elaborated than that. 1. Generate the original spectrogram 2. Convert the original spectrogram to Mel scale using `torchaudio.transforms.MelScale` instance 3. Reconstruct spectrogram using torchaudio implementation 3.1. Feed the Mel spectrogram to `torchaudio.transforms.InverseMelScale` instance and get reconstructed spectrogram. 3.2. Compute the sum of element-wise P1 distance of the original spectrogram and that from 3.1. 4. Reconstruct spectrogram using librosa 4.1. Feed the Mel spectrogram to `librosa.feature.inverse.mel_to_stft` function and get reconstructed spectrogram. 4.2. Compute the sum of element-wise P1 distance of the original spectrogram and that from 4.1. (this is the reference.) 5. Check that resulting P1 distance are in a roughly same value range. Element-wise numerical comparison is not possible due to the difference algorithms used to compute the inverse. The reconstructed spectrograms can have some values vary in magnitude. Therefore the strategy here is to check that P1 distance (reconstruction loss) is not that different from the value obtained using `librosa`. For this purpose, threshold was empirically chosen ``` print('p1 dist (orig <-> ta):', torch.dist(spec_orig, spec_ta, p=1)) print('p1 dist (orig <-> lr):', torch.dist(spec_orig, spec_lr, p=1)) >>> p1 dist (orig <-> ta): tensor(1482.1917) >>> p1 dist (orig <-> lr): tensor(1420.7103) ``` This value can vary based on the length and the kind of the signal being processed, so it was handpicked.

|

As you noticed, test is failing. |

| self.assertTrue(torch.allclose(computed, expected)) | ||

|

|

||

| def test_batch_InverseMelScale(self): | ||

| n_fft = 8 |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I'm wondering if it makes sense to vary some of these parameters a bit in a few subsequent tests. In particular to also include edge cases to see what the error behavior is. Unless this is verified through other tests.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I agree with running test on multiple parameters.

However, I think that it can be accomplished better with reorganizing the whole test suite.

Right now Tester class contains all kinds of test, (like batch test, torch script test, librosa compatibility test etc...) and it was hard to tell what type of test I should add and where to add.

By creating separate test suite for different test, it will be much easier.

So I think, I would add that kind parameterized test later, and add similar things to the existing ones.

| S=spec_lr, sr=sample_rate, n_fft=n_fft, hop_length=hop_length, | ||

| win_length=n_fft, center=True, window='hann', n_mels=n_mels, htk=True, norm=None) | ||

| # Note: Using relaxed rtol instead of atol | ||

| assert torch.allclose(melspec_ta, torch.from_numpy(melspec_lr[None, ...]), rtol=1e-3) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

using self.assertTrue might yield a nicer message if this fails.

In the future and in a separate PR we might want to look into introducing some of the Unittest extensions that PyTorch implements that'll enable things such as self.assertAllClose and also does torch.Tensor specific checks such as dtype,memory layout etc. . allclose might do upcasting, broadcasting etc., but actually we care that those properties match. cc @vincentqb

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

using self.assertTrue might yield a nicer message if this fails.

I found it opposite. Using assertTrue on torch.allclose only says

> self.assertTrue(torch.allclose(spec_ta, spec_lr, atol=threshold))

E AssertionError: False is not true

test/test_transforms.py:618: AssertionError

whereas assert says

(although this is still hard to read due to combination of multiple line messages and pytest's annotation)

> assert torch.allclose(spec_ta, spec_lr, atol=threshold)

E AssertionError: assert False

E + where False = <built-in method allclose of type object at 0x121552eb0>(tensor([[[0.8752, 0.8655, 0.6858, ..., 0.7232, 0.3609, 0.2115],\n [0.7756, 1.1142, 0.9477, ..., 0.8303, 1.985...0338, 0.0434, 0.0437, ..., 0.0581, 0.0294, 0.0445],\n [0.4310, 0.7263, 0.4167, ..., 0.1131, 0.5628, 0.8183]]]), tensor([[[0.0000e+00, 0.0000e+00, 0.0000e+00, ..., 0.0000e+00,\n 0.0000e+00, 0.0000e+00],\n [7.7557e-0...e-04, 2.5366e-04],\n [3.0709e-11, 5.1357e-12, 3.0357e-12, ..., 0.0000e+00,\n 4.2634e-11, 1.0426e-10]]]), atol=1.0)

E + where <built-in method allclose of type object at 0x121552eb0> = torch.allclose

test/test_transforms.py:618: AssertionError

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Combined with your comment on parameterized test, I think reorganizing test structure and using PyTorch's helper functions to show a good example of how to write a test will be great benefit for all developers.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

The greater discussion about testing is good to have. I'd say we should definitely reorganizing the tests, and use standard pytorch tools for this, and open issues upstream to improve them too.

Given the original scope of this PR, this is ready to merge. Thanks for working on this @jaeyeun97 and @mthrok!

Fix typo in word_embeddings_tutorial.py. Thanks Zhiqiang.

This PR follows up #366 and adds test for

InverseMelScale(andMelScale) for librosa compatibility.For

MelScalecompatibility test;torchaudio.transforms.MelScaleinstancelibrosa.feature.melspectrogramfunction.Element-wise numerical comparison is possible because under the hood their implementations use the same algorith.

For

InverseMelScalecompatibility test, it is more elaborated than that.torchaudio.transforms.MelScaleinstance3.1. Feed the Mel spectrogram to

torchaudio.transforms.InverseMelScaleinstance and get reconstructed spectrogram.3.2. Compute the sum of element-wise P1 distance of the original spectrogram and that from 3.1.

4.1. Feed the Mel spectrogram to

librosa.feature.inverse.mel_to_stftfunction and get reconstructed spectrogram.4.2. Compute the sum of element-wise P1 distance of the original spectrogram and that from 4.1. (this is the reference.)

Element-wise numerical comparison is not possible due to the difference algorithms used to compute the inverse. The reconstructed spectrograms can have some values vary in magnitude.

Therefore the strategy here is to check that P1 distance (reconstruction loss) is not that different from the value obtained using

librosa. For this purpose, threshold was empirically chosenThis value can vary based on the length and the kind of the signal being processed, so it was handpicked.

Closes #366