New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Apply functional batch consistency tests to batches of different items #1315

Apply functional batch consistency tests to batches of different items #1315

Conversation

Issues that occur when different items in a batch influence one another will not present when a batch is composed of identical items. When checking the consistency of batched behavior, in order to catch these issues items should be different. Thus, use different items for the `functional` batch consistency tests wherever possible.

Don't duplicate a single channel multiple times. Since this is used for testing, generate different noise in each channel so data leakage between channels can be detected.

Rather than creating a batch of 3 items in each test and slicing it to test two different batch sizes at once, parameterize the batch size on the TestFunctional class itself. This will generate a separate set of tests for each batch size (better isolating failures) and removes a leaky abstraction where the test calling `assert_batch_consistencies` had to know to give it a batch size greater than 1.

Check inputs to the batch consistency operations too, to ensure any in-place operations operate the same on items as batches - not just that they output the same result.

Using a 5-second signal for the phaser test takes a long time on CPU, much longer than the other batch consistency tests. Use a shorter signal instead.

What do you think about the single-item test? I know this is what was there since the early time and I kept it in the first major test refactoring, but I cannot convince myself that it is serving well as a base case, and I feel something like 2 as a base case and 32 as a common use case might suit better for the purpose of the test. of course 32 increases computational cost for running the test.

Sounds good. nice to know that it does not disturb other tests.

Nice!

Testing multiple frequency sounds a good suggestion and improvement.

I am open for updating the other batch_consistency_test as well, but let's keep it a separate PR. Smaller PRs are easier to review, thus faster approval. |

| self.assert_batch_consistency( | ||

| F.sliding_window_cmn, waveforms, center=False, norm_vars=False) | ||

|

|

||

| def test_vad_from_file(self): | ||

| common_utils.set_audio_backend('default') | ||

| filepath = common_utils.get_asset_path("vad-go-mono-32000.wav") | ||

| waveform, sample_rate = torchaudio.load(filepath) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

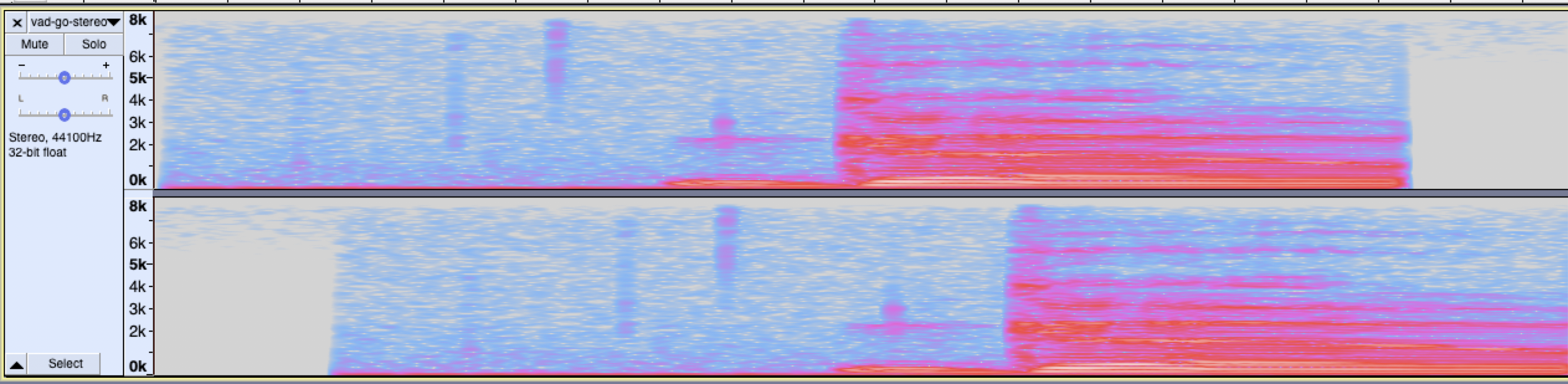

How about using vad-go-stereo-44100.wav? Its second channel is slightly delayed, so we can consider it different waveform from the first channel.

Also could you replace torchaudio.load with common_utils.load_wav, then remove backend='default' from the class? Using I/O function makes this test depend on both F.vad and torchaudio.load, which is not ideal for unit test as it makes harder to grasp what caused test failure.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

That's a good idea. I'll use that instead (and switch the loading method).

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I've updated this to use the dual-channel wav. For now I'm ignoring the parameterized batch dimension and passing a two-item batch, but that means the test is generated twice, so it might be worth extracting it. (I could also manipulate the two-item batch depending on the parameterized dimension.)

The stereo wav has two channels, slightly offset, so they'll count as different items.

I assumed that was there to isolate when issues came specifically from adding a dimension, rather than from processing multiple items. I could see the distinction coming up (e.g. reshaping issues) but I can't imagine dropping it would really impede diagnosis.

That's up to you guys. Just ran a quick test and it pushes the run time for the

Awesome. In that case I'll get this done first. |

The pitch frequency batch test was using the same frequency for each item, which may not catch data leakage between items within a batch. Use different frequencies so these kinds of issues would be triggered, just like the other batch consistency tests.

| self.assert_batch_consistencies( | ||

| F.contrast, waveform, enhancement_amount=80.) | ||

| torch.random.manual_seed(0) | ||

| waveforms = torch.rand(self.batch_size, 2, 100) - 0.5 |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Could you also take this opportunity to replace these waveform generation with get_whitenoise?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Sure thing

No need, since it's now using common_utils.load_wav

|

Sorry about that - I just posted a comment that had a couple of things wrong. I've deleted it while I double check everything. |

|

Thanks for the update. I will go ahead and merge this PR so that you can start working on the other batch consistency test. If you come up with an update on top of this PR, you can add that in the new PR. Regarding my comment about replacing |

The

batch_consistency_test.pyfiles check that behavior is consistent on batches vs. items, but the current infrastructure does this with batches of identical items. This approach may miss bugs related to data leakage between items within a batch, for example #994.This PR makes changes

functional/batch_consistency_test.pyso (most) batches are generated with items that are not identical, then the entire batch is passed to the consistency check. Batch sizes are parameterized so tests are generated for single-item and three-item batches. I've also changed the white noise generator to generate different data in each channel (rather than duplicating one wave), and the consistency check now checks both inputs and outputs, to detect inconsistencies with in-place operations.I am still repeating a single item in a couple of places - with a one-channel signal loaded from disk, and for pitch frequency. Using different frequencies within the same batch may be a more robust way to test pitch frequency.

I haven't touched the root

batch_consistency_test.pyyet, but it seems that this approach could be extended to that file too. Let me know if that sounds sensible.Closes #1294