The Neurally-Guided Shape Parser: Grammar-based Labeling of 3D Shape Regions with Approximate Inference

By R. Kenny Jones, Aalia Habib, Rana Hanocka and Daniel Ritchie

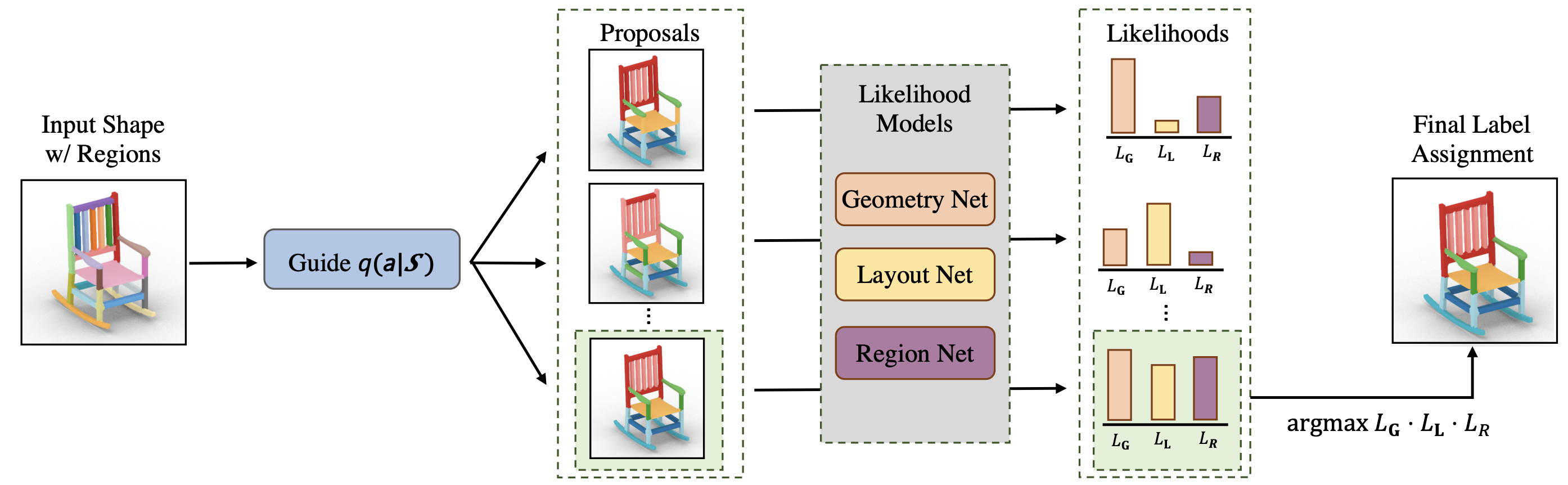

We propose the Neurally-Guided Shape Parser (NGSP), a method that learns how to assign fine-grained semantic labels to regions of a 3D shape. NGSP solves this problem via MAP inference, modeling the posterior probability of a label assignment conditioned on an input shape with a learned likelihood function. To make this search tractable, NGSP employs a neural guide network that learns to approximate the posterior. NGSP finds high-probability label assignments by first sampling proposals with the guide network and then evaluating each proposal under the full likelihood.
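The propose-then-score search described above can be illustrated with a minimal sketch. Everything here is a toy stand-in and an assumption for illustration only: the label set, the fixed `GUIDE` table (in place of the guide network), and the hand-written `log_likelihood` (in place of the learned likelihood) are hypothetical and are not taken from the NGSP code.

```python
import math
import random

LABELS = ["seat", "back", "leg"]  # hypothetical label set for illustration

# Toy stand-in for the guide network output: one label distribution per region.
GUIDE = [
    [0.6, 0.3, 0.1],
    [0.2, 0.7, 0.1],
    [0.1, 0.1, 0.8],
]


def sample_proposal(rng):
    """Draw one label per region from the guide's per-region distribution."""
    return tuple(rng.choices(LABELS, weights=probs, k=1)[0] for probs in GUIDE)


def log_likelihood(assignment):
    """Toy stand-in for the learned likelihood of a full label assignment."""
    score = sum(
        math.log(GUIDE[i][LABELS.index(label)])
        for i, label in enumerate(assignment)
    )
    # Hypothetical global term: prefer assignments with exactly one seat.
    if assignment.count("seat") != 1:
        score -= 5.0
    return score


def map_inference(num_proposals=50, seed=0):
    """Sample proposals from the guide, then keep the highest-scoring one."""
    rng = random.Random(seed)
    proposals = {sample_proposal(rng) for _ in range(num_proposals)}
    return max(proposals, key=log_likelihood)


best = map_inference()
print(best, round(log_likelihood(best), 3))
```

The point of the split is that the guide is cheap to sample from but only approximate, while the full likelihood is evaluated only on the small set of sampled proposals.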

Paper: https://rkjones4.github.io/pdf/ngsp.pdf

Presented at CVPR 2022.

Project Page: https://rkjones4.github.io/ngsp.html

@article{jones2022NGSP,
  title={The Neurally-Guided Shape Parser: Grammar-based Labeling of 3D Shape Regions with Approximate Inference},
  author={Jones, R. Kenny and Habib, Aalia and Hanocka, Rana and Ritchie, Daniel},
  journal={The IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
  year={2022}
}

This repo contains code + data for the experiments presented in our paper. We run fine-grained semantic segmentation experiments on CAD shapes from PartNet. We include training + inference logic for NGSP, along with a suite of baseline methods (PartNet, BAE-Net, LEL, and LHSS).

Unless otherwise stated, all commands should be run from the code/ directory.

To start up any new runs, the following steps must be taken. This repo has been tested with python3.8, cuda 11, PyTorch 1.10 and Ubuntu 20.04.

- Create a new conda environment sourced from environment.yml

- Verify that the binaries for the PointNet++ backbone models are working; details are in the README in the code/pointnet2 folder.

- Change the DATA_DIR variable in make_dataset.py to point to a version of PartNet. For instance, the path might look like: '/home/{USER}/partnet/data_v0/'. Then run:

python3 all_data_script.py

- After all_data_script.py has finished, download the area data from this link, put it in the data/ directory, and unzip it. Alternatively, this data can be regenerated by running the following:

python3 make_areas.py

- Download pretrained models from this link. The MD5Sum of ngsp_model_weights.zip is 85fe117003db7ffd59519a4b91d8eef9. Place ngsp_model_weights.zip in the code directory, then unzip it. This will place models into the code/model_output and code/lik_mods/model_output directories. The zip file contains all models trained for 400 labeled training shapes, for all methods we consider.

- Finally, unzip pc_models.zip in the code/pc_enc/ directory, and unzip query_points.zip in the code/tmp/ directory (for LHSS and BAE-Net).
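Before unzipping the pretrained weights, the MD5 sum listed in the setup steps can be checked with a short script. This is a minimal sketch using only the Python standard library; the helper names `md5_of_file` and `archive_is_intact` are hypothetical and are not part of this repo.

```python
import hashlib

# MD5 sum of ngsp_model_weights.zip, as stated in the setup steps.
EXPECTED_MD5 = "85fe117003db7ffd59519a4b91d8eef9"


def md5_of_file(path, chunk_size=1 << 20):
    """Return the hex MD5 digest of a file, reading it in chunks."""
    digest = hashlib.md5()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def archive_is_intact(path, expected=EXPECTED_MD5):
    """Check whether the file at `path` matches the expected MD5 sum."""
    return md5_of_file(path) == expected
```

For example, calling `archive_is_intact("ngsp_model_weights.zip")` from the code directory should return `True` for an uncorrupted download.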

To run MAP inference with NGSP, you must first choose a category to evaluate. This category must be one of [chair / table / lamp / storagefurniture / knife / vase].

Then you can spawn an evaluation run for the pretrained NGSP models with the following command:

python3 ngsp_eval.py -en {exp_name} -c {CATEGORY}

Results will be written to code/model_output/{exp_name}/log.txt
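To evaluate every category in turn, the command above can be generated programmatically. The sketch below only builds and prints the command lines; the `eval_command` helper and the `eval_{category}` experiment naming scheme are hypothetical choices for illustration, and only the `-en` and `-c` flags shown above are used.

```python
import shlex

# Categories listed above as valid values for {CATEGORY}.
CATEGORIES = ["chair", "table", "lamp", "storagefurniture", "knife", "vase"]


def eval_command(exp_name, category):
    """Build the ngsp_eval.py argument list for one category."""
    if category not in CATEGORIES:
        raise ValueError(f"unknown category: {category!r}")
    return ["python3", "ngsp_eval.py", "-en", exp_name, "-c", category]


for category in CATEGORIES:
    # Hypothetical naming scheme: one experiment name per category.
    cmd = eval_command(f"eval_{category}", category)
    print(shlex.join(cmd))
```

To launch the runs instead of printing them, each list can be passed to `subprocess.run(cmd, check=True)` from the code/ directory.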

Before training a new NGSP run, you must first create a set of artificially perturbed proposals with the following command:

python3 make_arti_data.py -sdp lik_exps/arti_props/

Alternatively these can be downloaded from this link, put into the code/lik_mods/arti_props/ directory, and unzipped.

Once the perturbations have been generated, you can train the guide network, the semantic label likelihood networks, and the region group likelihood networks, and then run MAP inference on the new models, with a command like:

python3 train_ngsp.py {exp_name} {CATEGORY} {TS}

where TS is the size of the training set (we use 10, 40, and 400 for the paper experiments).

The output models / logs will be saved under the model_output/{exp_name}/* directories and lik_exps/model_output/{exp_name}/* directories.

We provide code that reimplements the bottom-up semantic segmentation network proposed by PartNet.

To run inference with PartNet, run a command like:

python3 train_partnet.py -en {exp_name} -emp model_output/partnet_{CATEGORY}_400/base/models/base_net.pt -evo y -c {CATEGORY}

To train PartNet, run a command like:

python3 train_partnet.py -en {exp_name} -c {CATEGORY} -ts {TS}

We provide code that reimplements BAE-Net.

BAE-Net requires mesh voxelizations and inside/outside information for query points.

Generating this data relies on two binaries: manifold and fastwinding.

manifold can be rebuilt from https://github.com/hjwdzh/Manifold if the binary does not run on your machine.

fastwinding can be rebuilt from https://github.com/GavinBarill/fast-winding-number-soups.git if the binary does not run on your machine.

To generate and cache this data before a training or inference run, run a command like:

python3 make_bae_data.py -c {CATEGORY}

To run inference with BAE-Net, run a command like:

python3 train_bae_net.py -en {exp_name} -emp model_output/bae_{CATEGORY}_400_u1000/bae/models/bae_net.pt -evo y -c {CATEGORY}

If you want to train a new BAE-Net model, run a command like:

python3 train_bae_net.py -en {exp_name} -c {CATEGORY} -ts {TS} -us {US}

where US is the maximum number of unlabeled example shapes that will be used (we set this to 1000).

We provide a method that makes use of the self-supervised learning objective proposed by the LEL method.

To run inference with LEL, run a command like:

python3 train_lel_net.py -en {exp_name} -emp model_output/lel_{CATEGORY}_400_u1000/lel/models/lel_net.pt -evo y -c {CATEGORY}

If you want to train a new LEL model, run a command like:

python3 train_lel_net.py -en {exp_name} -c {CATEGORY} -ts {TS}

We provide a reimplementation of the LHSS method.

Training or inference with LHSS requires additional code; please follow the README in lhss/feat_code/ to get the extra code from the author's implementation.

Then, like BAE-Net, LHSS requires additional feature computation per shape instance. This computation can be done as a preprocessing step with commands like:

python3 make_lhss_data.py -c {CATEGORY} -sn train

python3 make_lhss_data.py -c {CATEGORY} -sn val

python3 make_lhss_data.py -c {CATEGORY} -sn test

Please note:

- successfully creating LHSS features requires Julia

- we set the lambda in the MRF formulation to 0.1, following a grid search, but this can be changed on line 17 of code/train_lhss.py
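For orientation, a pairwise MRF labeling objective is commonly written in the generic form below, where a weight like lambda trades off per-region evidence against agreement between neighboring regions. This is a textbook form given as an assumption, not the exact formulation used by LHSS or by code/train_lhss.py; the symbols $\phi_i$, $\psi_{ij}$, and $\mathcal{N}$ are illustrative.

```latex
% Generic pairwise MRF energy (assumed form, for illustration only):
%   y_i        label assigned to region i
%   \phi_i     unary cost of assigning label y_i to region i
%   \psi_{ij}  pairwise cost for neighboring regions i and j
%   \mathcal{N} set of neighboring region pairs
%   \lambda    weight of the pairwise term (0.1 in this repo)
E(\mathbf{y}) = \sum_{i} \phi_i(y_i)
  + \lambda \sum_{(i,j) \in \mathcal{N}} \psi_{ij}(y_i, y_j)
```

Under this reading, a larger lambda pushes neighboring regions toward consistent labels, while a smaller lambda lets each region follow its own unary evidence.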

To run inference with LHSS, run a command like:

python3 infer_lhss.py -en {exp_name} -c {CATEGORY}

To train a new LHSS model, you can run a command like:

python3 train_lhss.py -en {exp_name} -c {CATEGORY} -ts {TS}

- The code directory contains all code needed to run our experiments and recreate our dataset. Further details are provided in the code/README.md file.

- The data folder is where datasets go, after creation.

- The hier folder contains the semantic shape grammars from PartNet that we use for our experiments.