-

Notifications

You must be signed in to change notification settings - Fork 1.6k

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Hotfix skipping the first chunks of the artifacts #4989

Conversation

|

@matklad sorry for that, for some reason this doesn't error out on my laptop, we should publish a hotfix for this |

|

That code is so weird. Does attaching the I would have thought that either |

|

The first chunks of the file are received asynchronously after the current call stack is empty. stream.on("data", chunk => { reportProgress(chunk.length) });

await withTempFile(async x => await stream.pipeline(x));

// Inside of `withTempFile() we await for async operations with the file system,

// whilst we have already put the first handler for `data` event, this means

// that the stream starts being consumed and while we are waiting for async file system operations

// the handler with `reportProgress()` starts consuming the first chunks of the stream

// after the async operations for creating the temp file are done `stream.pipeline()` internally puts

// its own `data` handler but this happens only after the part of the stream has already been consumed.After the hotfix: await withTempFile(x => {

// we have put our own handler, the stream will be consumed starting from the next spin of the event loop

stream.on("data", chunk => { reportProgress(chunk.length) });

// Now stream.pipeline() has put its own handler (now there are 2 handlers total)

// But no bytes have been consumed, since handlers are called only on the next spin of the event loop

await stream.pipeline(x);

}) |

Ohh.. |

|

In pseudocode: - registrer on("data", chunk => reportProgress(chunk.length))

- await withTempFile() -> await mkdirTemp() // empties the current async callstack

- reportPorgess() handler consumes the first chunk

- mkdirTemp() is finished, await is resumed

- await stream.pipeline() // registers own on("data") handler, empties the current async callstack

- reportProgress() and internal stream.pipeline's handler consume the **second** chunk |

|

I got it. I was missing the "stream starts on the next event loop after the first handler is attached" part. |

|

bors r+ Thanks for looking into this @Veetaha ! Seems like a hell of a bug 😱 :( I'll try to make a patch release... |

|

Yup, this hotfix is sufficient as a fix, but the core problem was in mixing temp dir logic with the download logic, I'll submit a PR to decouple them to further prevent such mistakes |

4989: Hotfix skipping the first chunks of the artifacts r=matklad a=Veetaha Quick hotfix. fixes: #4986, #4987 The stream starts being consumed once we put a handler for `data` event. When extracting `stream.pipeline()` under `withTempFile` in #4963 I didn't move it into the scope too, which due to preliminary awaiting for async operations with the file system allowed for the first chunks of the file to be skipped Co-authored-by: Veetaha <veetaha2@gmail.com>

|

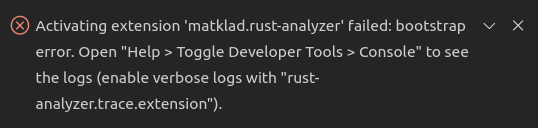

Release is published! |

4992: Never disable error logging on the frontend r=matklad a=Veetaha 4993: Make bootstrap error message more informative and better-fitting r=matklad a=Veetaha Now this better fits standard vscode extension activation failure message and suggests enabling verbose logs.  4994: Decouple http file stream logic from temp dir logic r=matklad a=Veetaha Followup for #4989 4997: Update manual.adoc r=matklad a=gwutz GNOME Builder (Nightly) supports now rust-analyzer 4998: Disrecommend trace.server: "verbose" for regular users r=matklad a=Veetaha This option has never been useful for me, I wonder if anyone finds regular users can use this for sending logs Co-authored-by: Veetaha <veetaha2@gmail.com> Co-authored-by: Günther Wagner <info@gunibert.de>

Quick hotfix.

fixes: #4986, #4987

The stream starts being consumed once we put a handler for

dataevent.When extracting

stream.pipeline()underwithTempFilein #4963 I didn't move it into the scope too, which due to preliminary awaiting for async operations with the file system allowed for the first chunks of the file to be skipped