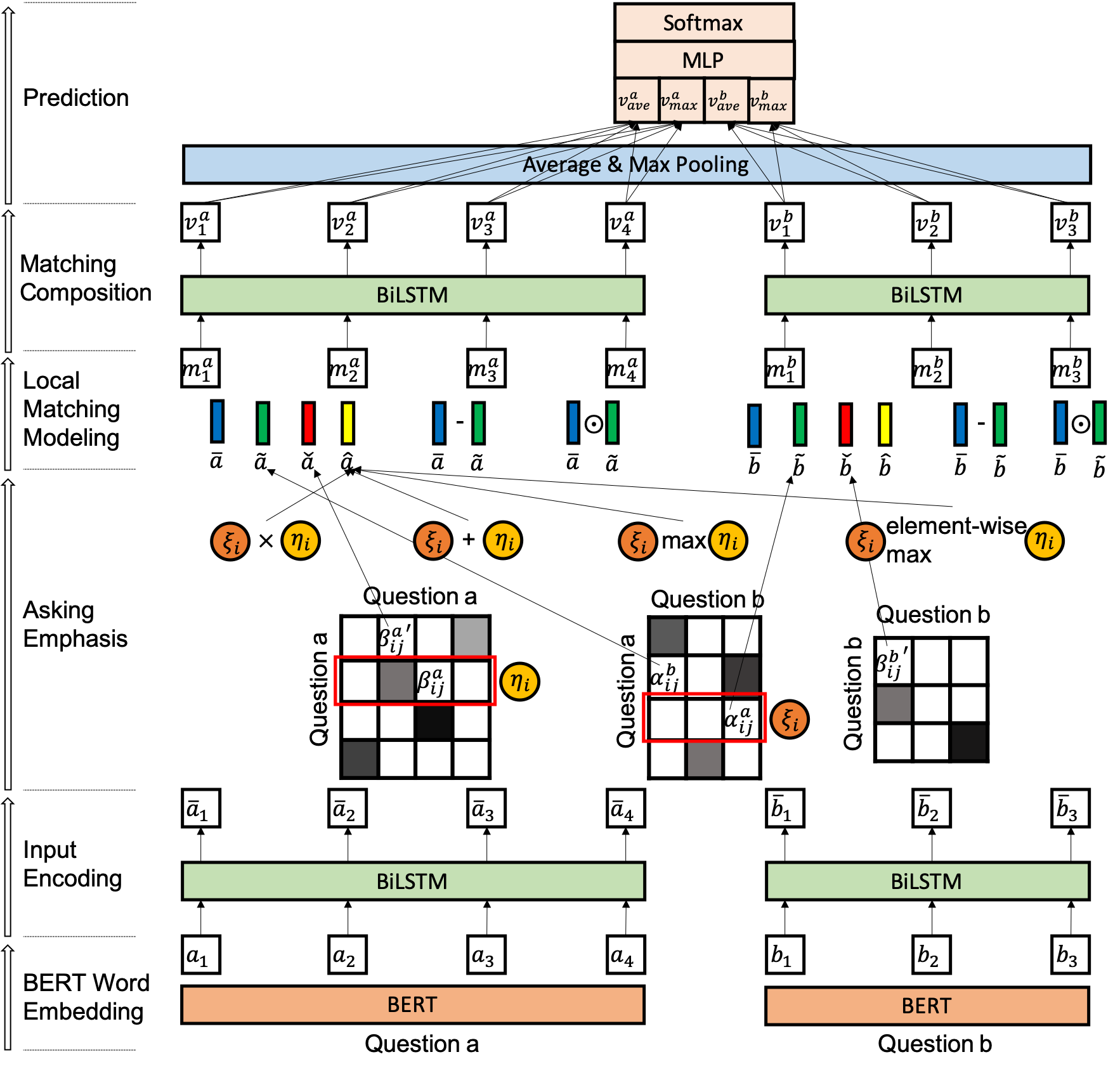

What Do Questions Exactly Ask? MFAE: Duplicate Question Identification with Multi-Fusion Asking Emphasis

This repository includes the source code of the paper "What Do Questions Exactly Ask? MFAE: Duplicate Question Identification with Multi-Fusion Asking Emphasis". Please cite our paper when you use this program! 😍 This paper has been accepted to the conference "SIAM International Conference on Data Mining (SDM20)". The paper can be downloaded here.

@inproceedings{zhang2020questions,

title={What Do Questions Exactly Ask? MFAE: Duplicate Question Identification with Multi-Fusion Asking Emphasis},

author={Zhang, Rong and Zhou, Qifei and Wu, Bo and Li, Weiping and Mo, Tong},

booktitle={Proceedings of the 2020 SIAM International Conference on Data Mining},

pages={226--234},

year={2020},

organization={SIAM}

}

python3

pip install -r requirements.txt

The codes support four datasets for Duplicate Sentence Identification.

- Quora Question Pairs

- CQADupStack

- SNLI

- MultiNLI

After the datasets have been downloaded, you can preprocess the data.

cd scripts/preprocessing

python process_quora_bert.py

python preprocess_cqadup_bert.py

python preprocess_snli_bert.py

python process_mnli_bert.py

cd scripts/preprocessing

python process_quora.py

python preprocess_snli.py

python preprocess_mnli.py

If you want to train models with BERT word embedding, please use the bert-as-service, and then run the following scripts.

sh -x run.sh

python bert_quora.py >> log/quora/quora_bert.log

python bert_cqadup.py >> log/cqadup/cqadup_bert.log

python bert_snli.py >> log/snli/snli_bert.log

python bert_mnli.py >> log/mnli/mnli_bert.log

python train_quora_elmo.py >> log/quora/quora_elmo.log

python train_snli_elmo.py >> log/snli/snli_elmo.log

python train_mnli_elmo.py >> log/mnli/mnli_elmo.log

After the models have been trained, you can test the models.

python test_bert_quora.py

python test_bert_cqadup.py

python test_bert_snli.py

python test_bert_mnli.py

python test_elmo_quora.py

python test_elmo_snli.py

python test_elmo_mnli.py

Please let us know, if you encounter any problems.

The contact email is rzhangpku@pku.edu.cn