
Hindsight Experience Replay as a replay buffer #753


Codecov Report

```diff
@@            Coverage Diff             @@
##           master     #753      +/-   ##
==========================================
- Coverage   91.77%   91.24%   -0.53%
==========================================
  Files          70       71       +1
  Lines        4934     5082     +148
==========================================
+ Hits         4528     4637     +109
- Misses        406      445      +39
```

Flags with carried forward coverage won't be shown.

The Ubuntu GPU test runs on gym==0.25.2. I'll give some feedback later today.

I see. I'll fix it after the feedback, then.

```diff
@@ -39,6 +39,7 @@
  - [Generalized Advantage Estimator (GAE)](https://arxiv.org/pdf/1506.02438.pdf)
  - [Posterior Sampling Reinforcement Learning (PSRL)](https://www.ece.uvic.ca/~bctill/papers/learning/Strens_2000.pdf)
  - [Intrinsic Curiosity Module (ICM)](https://arxiv.org/pdf/1705.05363.pdf)
+ - [Hindsight Experience Replay (HER)](https://arxiv.org/pdf/1707.01495.pdf)
```


Add it to docs/index.rst as well.


updated: 9d52936

tianshou/data/buffer/her.py


```
`acheived_goal`, `desired_goal`, `info` and returns the reward(s).
Note that the goal arguments can have extra batch_size dimension and in that \
case, the rewards of size batch_size should be returned
```


Can you mention the size/shape here? Is it for single or batch input?


updated: 63e65c0


Here I also removed the third argument, `info`. Previously this argument was manually set to an empty dict `{}` during sampling because its shape was complicated. I originally included it because the Fetch env's `compute_reward` function also takes an `info` argument, although it was never used.
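As an illustration of the two-argument, batched contract (goals of shape `(batch_size, *goal_shape)` in, rewards of shape `(batch_size,)` out), here is a minimal `compute_reward_fn` sketch. The sparse reward and the 0.05 success radius are assumptions modeled on the usual Fetch convention (0 on success, -1 otherwise), not code from this PR.

```python
import numpy as np


def compute_reward_fn(achieved_goal: np.ndarray, desired_goal: np.ndarray) -> np.ndarray:
    """Hypothetical sparse goal-reaching reward.

    Both inputs have shape (batch_size, *goal_shape); the result has shape (batch_size,).
    """
    threshold = 0.05  # assumed success radius, not taken from the PR
    # Flatten the goal dimensions so one distance is computed per batch element
    diff = (achieved_goal - desired_goal).reshape(achieved_goal.shape[0], -1)
    dist = np.linalg.norm(diff, axis=-1)
    # 0 when the goal is reached, -1 otherwise
    return np.where(dist <= threshold, 0.0, -1.0).astype(np.float32)


achieved = np.zeros((4, 3))
desired = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.01, 0.0], [0.0, 0.0, 2.0]])
rewards = compute_reward_fn(achieved, desired)  # one reward per batch element
print(rewards.shape)
```

Keeping the function batched means the buffer can relabel a whole sampled batch with a single call instead of looping over transitions.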

| """ | ||

| if indices.size == 0: | ||

| return | ||

| # Construct episode trajectories |


I do think you should sort `indices` here. An example:

indices = [9, 7, 8, 6, 4, 5, 3, 1, 2, 0]

done = [0, 0, 1, 0, 0, 1, 0, 0, 1, 1]

After some processing with `horizon=8`, `unique_ep_open_indices = [7, 4, 1, 0]` and `unique_ep_close_indices = [3, 0, -1, 9]`, which makes no sense.
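To see why sorting matters, the sketch below groups buffer indices into episodes using the `indices` and `done` arrays from the example above. It assumes `done` is indexed by buffer position (the comment does not say) and that the buffer has not wrapped around, so ascending order is chronological order. `split_episodes` is a hypothetical helper, not part of the PR.

```python
from typing import List

import numpy as np


def split_episodes(indices: np.ndarray, done: np.ndarray) -> List[np.ndarray]:
    """Group buffer indices into episodes (hypothetical helper).

    Assumes no wrap-around, so sorting puts the indices in chronological order.
    """
    ordered = np.sort(indices)
    # An episode ends right after every index whose done flag is set
    cut_points = np.flatnonzero(done[ordered]) + 1
    episodes = np.split(ordered, cut_points)
    # np.split yields an empty trailing chunk when the last index is done
    return [ep for ep in episodes if ep.size > 0]


indices = np.array([9, 7, 8, 6, 4, 5, 3, 1, 2, 0])
done = np.array([0, 0, 1, 0, 0, 1, 0, 0, 1, 1], dtype=bool)
for ep in split_episodes(indices, done):
    print(ep.tolist())  # [0, 1, 2], then [3, 4, 5], [6, 7, 8], [9]
```

Without the `np.sort`, the runs between `done` flags mix indices from different episodes, which is how nonsensical open/close indices like the ones above can appear.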


Previously I sorted the indices in the `sample_indices` method (since it is the only caller of `rewrite_transitions`), but I agree that the sorting should happen inside `rewrite_transitions`.

fixed: 63e65c0


My bad. I didn't see L92 previously, but it also has an issue: if the replay buffer's start index is not 0 (i.e., previous data points have been overwritten in the circular buffer), the sort approach will eventually cause bugs. The order should start with x, where x is the smallest index greater than or equal to the start index.

ref:

tianshou/tianshou/data/buffer/base.py

Lines 285 to 289 in 0181fe7

```python
elif batch_size == 0:  # construct current available indices
    return np.concatenate(
        [np.arange(self._index, self._size),
         np.arange(self._index)]
    )
```


You are right. This case somehow slipped my mind.

In `rewrite_transitions`, sorting the indices serves as a way to group indices from the same episode together and order them chronologically. To account for the circular property, I'm thinking of sorting the indices, then shifting them so that the oldest index is at the front.

I will fix this later, maybe today or tomorrow.
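The sort-then-shift idea above can be sketched with `np.sort` followed by `np.roll`. `chronological_order` is a hypothetical helper; `start` stands for the slot holding the oldest transition, which for a full circular buffer is the next write position (`self._index`). For a buffer that is not yet full, every index is below `start`, so no rotation happens and plain ascending order is kept.

```python
import numpy as np


def chronological_order(indices: np.ndarray, start: int) -> np.ndarray:
    """Order circular-buffer indices from oldest to newest (hypothetical helper).

    `start` is the slot holding the oldest transition.
    """
    ordered = np.sort(indices)
    # Position of the first index at or after the oldest slot
    split = np.searchsorted(ordered, start)
    # Rotate left so indices >= start come first, followed by the wrapped-around ones
    return np.roll(ordered, -split)


idx = np.array([9, 7, 8, 6, 4, 5, 3, 1, 2, 0])
print(chronological_order(idx, 4).tolist())  # [4, 5, 6, 7, 8, 9, 0, 1, 2, 3]
```

Rotating the sorted array avoids temporarily adding an offset to the indices and undoing it afterwards.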


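Just a few lines, I think:

```python
mask = indice >= self._index
indice[~mask] += self._length  # move wrapped-around (newer) indices past the end
indice.sort()  # ascending order is now chronological order
indice[indice >= self._length] -= self._length  # map back to real buffer slots
```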


Updated and added some tests: 6ee7d08

```python
# episode indices that will be altered
her_ep_indices = np.random.choice(
    len(unique_ep_open_indices),
    size=int(len(unique_ep_open_indices) * self.future_p),
```


Could you please briefly explain why we need self.future_p here, instead of all indices?

If I understand correctly, these few lines apply a random HER update to only part of the trajectories instead of all of them.


Yes, you are correct. HER only performs goal re-writing on some random episodes (the amount depends on the ratio parameter `future_k`, or here, the probability `future_p`).
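Reading the `future_k` docstring literally (at most k re-written episodes for every unaltered one), the matching re-write probability would be p = k / (k + 1). The sketch below encodes that reading; the formula is an assumption derived from the docstring, and the PR's actual conversion may differ. The episode selection mirrors the `np.random.choice` call quoted earlier in this thread, with `replace=False` and an explicit `Generator` added as assumptions.

```python
import numpy as np


def future_p_from_k(future_k: float) -> float:
    """Assumed conversion: k re-written episodes per unaltered one gives k / (k + 1)."""
    return future_k / (future_k + 1.0)


def choose_her_episodes(n_episodes: int, future_p: float, rng: np.random.Generator) -> np.ndarray:
    """Randomly pick which episodes get their goals re-written (hypothetical helper)."""
    n_rewrite = int(n_episodes * future_p)  # truncates, like the quoted snippet
    return rng.choice(n_episodes, size=n_rewrite, replace=False)


rng = np.random.default_rng(0)
p = future_p_from_k(4.0)
chosen = choose_her_episodes(10, p, rng)
print(p, len(chosen))  # 0.8 8
```

Only the selected episodes are relabeled; the rest keep their original goals, which is what keeps some real (non-hindsight) experience in every batch.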

tianshou/data/buffer/her.py


```python
    return
self._meta[self._altered_indices] = self._original_meta
# Clean
del self._original_meta, self._altered_indices
```


No need to manually delete them, since they are overwritten in the next line?


fixed: 63e65c0

Btw, I tried verifying the documentation page by using … The error, just in case:

tianshou/data/buffer/her.py


```
`HERReplayBuffer` is to be used with goal-based environment where the \
observation is a dictionary with keys `observation`, `achieved_goal` and \
`desired_goal`. Currently support only HER's future strategy, online sampling.
```


Suggested change:

```diff
- `HERReplayBuffer` is to be used with goal-based environment where the \
- observation is a dictionary with keys `observation`, `achieved_goal` and \
- `desired_goal`. Currently support only HER's future strategy, online sampling.
+ HERReplayBuffer is to be used with goal-based environment where the
+ observation is a dictionary with keys ``observation``, ``achieved_goal`` and
+ ``desired_goal``. Currently support only HER's future strategy, online sampling.
```


updated: 7802451

tianshou/data/buffer/her.py


```
:param compute_reward_fn: a function that takes 2 `np.array` arguments, \
`acheived_goal` and `desired_goal`, and returns rewards as `np.array`.
The two arguments are of shape (batch_size, *original_shape) and the returned \
rewards must be of shape (batch_size,).
:param int horizon: the maximum number of steps in an episode.
:param int future_k: the 'k' parameter introduced in the paper. In short, there \
will be at most k episodes that are re-written for every 1 unaltered episode \
during the sampling.
```


Suggested change:

```diff
- :param compute_reward_fn: a function that takes 2 `np.array` arguments, \
- `acheived_goal` and `desired_goal`, and returns rewards as `np.array`.
- The two arguments are of shape (batch_size, *original_shape) and the returned \
- rewards must be of shape (batch_size,).
- :param int horizon: the maximum number of steps in an episode.
- :param int future_k: the 'k' parameter introduced in the paper. In short, there \
- will be at most k episodes that are re-written for every 1 unaltered episode \
- during the sampling.
+ :param compute_reward_fn: a function that takes 2 ``np.array`` arguments,
+ ``acheived_goal`` and ``desired_goal``, and returns rewards as ``np.array``.
+ The two arguments are of shape (batch_size, *original_shape) and the returned
+ rewards must be of shape (batch_size,).
+ :param int horizon: the maximum number of steps in an episode.
+ :param int future_k: the 'k' parameter introduced in the paper. In short, there
+ will be at most k episodes that are re-written for every 1 unaltered episode
+ during the sampling.
```


updated: 7802451

Have you tried reinstalling the deps by

I have tried

tianshou/data/buffer/her.py


```python
mask = indices >= self._index
indices[~mask] += self.maxsize
```


```python
indices[indices < self._index] += self.maxsize
```

bump version to 0.4.10 (thu-ml#757)

Sorry for being away for a few weeks; I had to clear up some work at hand. In the latest update, 72b4ab4, I have:

LGTM. Great work, @Juno-T!

@Trinkle23897 I have fixed the test failures. Feel free to approve or suggest more changes.


LGTM! Thanks for the great work!

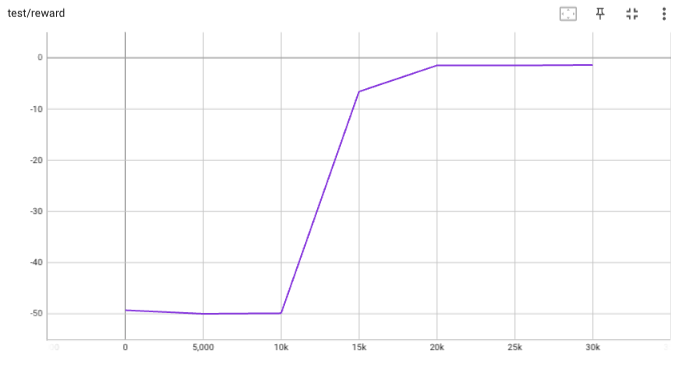


- make format (required)
- make commit-checks
- make check-codestyle (required)

Related thread: pr#510

Hi, I've implemented Hindsight Experience Replay (HER) as a replay buffer and would like to contribute to tianshou.

I saw the discussion in the mentioned pr#510, and it seems to have been inactive for quite a while, so I decided to make a new, independent pull request.

## Implementation

I implemented HER solely as a replay buffer. It works by temporarily re-writing the transition storage (`self._meta`) directly during the `sample_indices()` call. The original transitions are cached and restored at the beginning of the next sampling or when any other method is called. This ensures that, for example, n-step return calculation can be done without altering the policy.

There is also a problem with the original index sampling: the sampled indices are not guaranteed to come from different episodes. So I decided to perform re-writing per episode, which guarantees that sampled transitions from the same episode share the same re-written goal. This also makes the re-writing ratio slightly different from the paper, but the difference should be small when there are many episodes in the buffer.
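The rewrite-then-restore pattern described above can be illustrated with a toy storage class. This is a minimal sketch under stated assumptions, not the PR's code: `ToyGoalStorage`, `rewrite` and `restore` are hypothetical names, only desired and achieved goals are stored, and the caller chooses which step's achieved goal becomes each episode's shared hindsight goal. A real buffer would also recompute rewards with `compute_reward_fn` after rewriting, and would call `restore()` before any other method touches the storage.

```python
from typing import List, Optional, Sequence

import numpy as np


class ToyGoalStorage:
    """Hypothetical stand-in for a buffer's goal storage (not tianshou's class)."""

    def __init__(self, desired_goal: np.ndarray, achieved_goal: np.ndarray) -> None:
        self.desired_goal = desired_goal.copy()
        self.achieved_goal = achieved_goal.copy()
        self._altered: Optional[np.ndarray] = None  # indices changed by the last rewrite
        self._original: Optional[np.ndarray] = None  # their cached desired goals

    def restore(self) -> None:
        """Put back the desired goals cached by the previous rewrite, if any."""
        if self._altered is not None:
            self.desired_goal[self._altered] = self._original
            self._altered = None
            self._original = None

    def rewrite(self, episodes: List[np.ndarray], goal_steps: Sequence[int]) -> None:
        """Relabel each episode's sampled indices with one shared hindsight goal.

        `episodes[i]` holds buffer indices from one episode, and `goal_steps[i]`
        is the index whose achieved goal becomes that episode's desired goal.
        Assumes no index appears in more than one episode.
        """
        self.restore()  # never stack rewrites on top of each other
        altered = np.concatenate(episodes)
        # Fancy indexing returns a copy, so this snapshot is safe to keep
        self._original = self.desired_goal[altered]
        self._altered = altered
        for ep, step in zip(episodes, goal_steps):
            self.desired_goal[ep] = self.achieved_goal[step]


storage = ToyGoalStorage(np.zeros((6, 2)), np.arange(12.0).reshape(6, 2))
storage.rewrite([np.array([0, 1]), np.array([3, 4])], goal_steps=[2, 5])
print(storage.desired_goal[:2])  # both rows now equal achieved_goal[2]
storage.restore()
```

Caching only the touched rows keeps the extra memory proportional to the batch rather than to the whole buffer.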

In the current commit, the HER replay buffer only supports the 'future' strategy and online sampling. This is the best HER variant in terms of performance and memory efficiency.
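One way to keep a single shared goal per episode consistent with the 'future' strategy is to draw the goal step at or after the latest sampled transition of that episode, so it lies in the future of every sampled transition. This is a per-episode variant of the paper's per-transition 'future' sampling. The sketch assumes chronologically ordered, non-wrapped indices; `future_goal_index` is a hypothetical helper, and the PR may choose the goal step differently.

```python
import numpy as np


def future_goal_index(sampled: np.ndarray, episode_end: int, rng: np.random.Generator) -> int:
    """Pick one hindsight-goal step for an episode (hypothetical helper).

    `sampled` holds buffer indices sampled from the episode, and `episode_end`
    is the index of its last transition. Drawing t' from [max(sampled), episode_end]
    keeps the shared goal in the future of every sampled transition.
    """
    low = int(sampled.max())
    # Generator.integers excludes the upper bound, hence the +1
    return int(rng.integers(low, episode_end + 1))


rng = np.random.default_rng(0)
print(future_goal_index(np.array([3, 5]), 8, rng))  # some step in [5, 8]
```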

I also added a few more convenience replay buffers (`HERVectorReplayBuffer`, `HERReplayBufferManager`), a test env (`MyGoalEnv`), a gym wrapper (`TruncatedAsTerminated`), unit tests, and a simple example (examples/offline/fetch_her_ddpg.py).

## Verification

I have added unit tests for almost everything I have implemented.

The HER replay buffer was also tested using DDPG on the [`FetchReach-v3` env](https://github.com/Farama-Foundation/Gymnasium-Robotics). I used the default DDPG parameters from the mujoco example and didn't tune anything further to get this good result! (train script: examples/offline/fetch_her_ddpg.py)

## Todo

make doc