
backtest(): Retrain every n steps

#135

Comments


Thanks for pointing this out, @aschl. We are currently reworking the backtesting functionality quite substantially, and indeed our plan is to introduce a …


Awesome! Looking forward to seeing this feature. The idea of incorporating the moving window is also great and very useful.


Hello @aschl, we don't have a release date for this feature yet. If this is something you would like to try as soon as possible, the stride parameter has already been added to the …


I checked … The idea behind scikit-learn's … (I see that you implemented a …


Hi @aschl, and sorry for the delay in answering your last comment. If I understand correctly, your suggestion would be to replace … If that's the case, I think you make a good point. Feel free to contribute if you can! Otherwise, I'll put it on our radar.



Yes. Exactly.


Can I take this one up if it is still open?


@pravarmahajan, sorry for the late reply. We would be very happy to receive a PR that introduces a "retrain every N steps" feature to historical forecasting / backtesting!
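To make the requested "retrain every N steps" behaviour concrete, here is a minimal plain-Python sketch, not the darts implementation: a one-step forecast is produced at every time step, but the model is refitted only every `retrain_every` steps and reused in between. The function name, the `retrain_every` parameter and the toy mean "model" are all hypothetical, chosen only for illustration.

```python
from typing import List, Sequence, Tuple


def fit_mean(history: Sequence[float]) -> float:
    """Toy 'model' (hypothetical): fitting just stores the mean of the training data."""
    return sum(history) / len(history)


def historical_forecasts_retrain_every(
    series: Sequence[float], start: int, retrain_every: int
) -> Tuple[List[Tuple[int, float]], int]:
    """Hypothetical sketch: forecast at every step from `start`, but refit
    the model only every `retrain_every` steps and reuse it in between.

    Returns (time index, one-step forecast) pairs and the number of fits.
    """
    if retrain_every < 1:
        raise ValueError("retrain_every must be a positive integer")
    if start < 1:
        raise ValueError("start must leave at least one training point")
    forecasts: List[Tuple[int, float]] = []
    fitted_mean = 0.0  # always overwritten on the first iteration (i == 0)
    n_fits = 0
    for i, t in enumerate(range(start, len(series))):
        if i % retrain_every == 0:
            fitted_mean = fit_mean(series[:t])  # refit on all data before t
            n_fits += 1
        forecasts.append((t, fitted_mean))  # reuse the most recent fit
    return forecasts, n_fits


if __name__ == "__main__":
    series = [float(i) for i in range(20)]
    for n in (1, 4):
        forecasts, n_fits = historical_forecasts_retrain_every(series, start=10, retrain_every=n)
        print(f"retrain_every={n}: {n_fits} fits for {len(forecasts)} forecasts")
```

On a 20-point series with forecasts from index 10, `retrain_every=1` fits the model 10 times, while `retrain_every=4` fits it only 3 times (at indices 10, 14 and 18). The price is that forecasts between refits come from a slightly stale model.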


Just looping back to this. As I understand it, #1139 addressed the concern about retraining every n steps in the …


You're right, @deltamacht. Gridsearch is still pretty basic... There is, however, a …


Implemented in v0.22.0 🚀

I recently found your library, and it is really great and handy!

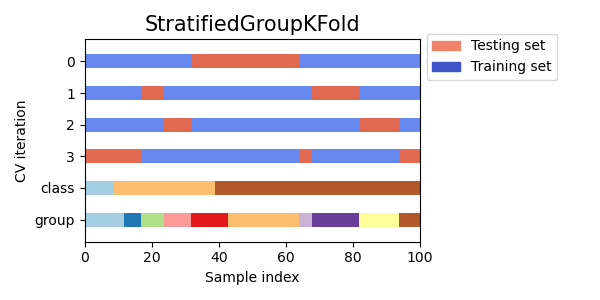

The evaluation of forecasting performance is best done with a cross-validation approach. The backtest_forecasting() function does that, although it currently iterates over the series and retrains the model at every single time step. In my application, I am training tens of thousands of different time series, and retraining at every time step becomes computationally infeasible. Another approach, already implemented in scikit-learn, is TimeSeriesSplit(), which generates k-fold cross-validation splits designed for time series: https://scikit-learn.org/stable/modules/generated/sklearn.model_selection.TimeSeriesSplit.html
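For reference, the expanding-window layout that TimeSeriesSplit produces with its defaults (no gap, no max_train_size) can be sketched in a few lines of plain Python. This is an illustrative reimplementation with a hypothetical function name, not scikit-learn's code; the point is that the model is fitted once per fold rather than once per time step.

```python
from typing import List, Tuple


def expanding_window_splits(n_samples: int, n_splits: int) -> List[Tuple[range, range]]:
    """Hypothetical helper: (train_indices, test_indices) pairs for an
    expanding-window split, following the default TimeSeriesSplit layout.

    The last n_splits * test_size samples are cut into n_splits test blocks;
    each fold trains on every observation before its test block.
    """
    if n_splits < 1:
        raise ValueError("n_splits must be at least 1")
    test_size = n_samples // (n_splits + 1)
    if test_size == 0:
        raise ValueError("too few samples for the requested number of splits")
    first_test_start = n_samples - n_splits * test_size
    splits = []
    for test_start in range(first_test_start, n_samples, test_size):
        train = range(0, test_start)  # all past observations
        test = range(test_start, test_start + test_size)  # the next block
        splits.append((train, test))
    return splits


if __name__ == "__main__":
    for train, test in expanding_window_splits(n_samples=12, n_splits=3):
        print(f"train={list(train)} test={list(test)}")
```

With 12 samples and 3 splits this yields the test blocks [3, 4, 5], [6, 7, 8] and [9, 10, 11], each trained on all earlier observations, so only three fits are needed.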

One solution would be to add support for the different cross-validation techniques implemented in scikit-learn (such as TimeSeriesSplit()). Another solution could be to add a stride parameter to backtest_forecasting() that specifies how many time steps to skip while backtesting.
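A stride parameter of this kind could look roughly like the following sketch. It assumes a one-step-ahead forecaster and uses a hypothetical `backtest_with_stride` function with a toy mean model; it is not the library's API. With `stride=1` it retrains at every time step, as described above, while a larger stride skips forecast points and therefore fits.

```python
from typing import Callable, List, Sequence, Tuple

# Hypothetical "fit" function: takes the training history and returns the
# one-step-ahead forecast the fitted model would produce.
FitFn = Callable[[Sequence[float]], float]


def mean_forecaster(history: Sequence[float]) -> float:
    """Toy model: forecast the next value as the mean of the history."""
    return sum(history) / len(history)


def backtest_with_stride(
    series: Sequence[float], start: int, stride: int, fit: FitFn
) -> Tuple[List[Tuple[int, float]], int]:
    """Hypothetical backtest: retrain and forecast only every `stride` steps.

    Returns (time index, one-step forecast) pairs and the number of fits.
    """
    if stride < 1:
        raise ValueError("stride must be a positive integer")
    if start < 1:
        raise ValueError("start must leave at least one training point")
    forecasts: List[Tuple[int, float]] = []
    n_fits = 0
    for t in range(start, len(series), stride):
        prediction = fit(series[:t])  # train only on data before time t
        n_fits += 1
        forecasts.append((t, prediction))
    return forecasts, n_fits


if __name__ == "__main__":
    series = [float(i) for i in range(20)]
    for stride in (1, 5):
        forecasts, n_fits = backtest_with_stride(series, start=10, stride=stride, fit=mean_forecaster)
        print(f"stride={stride}: {n_fits} fits, first forecasts {forecasts[:2]}")
```

On a 20-point series with the backtest starting at index 10, `stride=1` performs 10 fits, whereas `stride=5` performs only 2 (at indices 10 and 15). Unlike periodic retraining, a stride also reduces the number of evaluated forecast points.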