This is the central repository for all the resources produced by my work on DBpedia during Google Summer of Code 2018.

The `gh-pages` branch contains the sources of the blog.

The `scripts` folder contains several scripts to generate training datasets, along with other utilities.

- vfrico/mapping-predictor-backend

- vfrico/mapping-predictor-webapp

- vfrico/dbpedia-gsoc-18/inconsistents-mappings

This section links to each product. Each product's repository should contain a more detailed explanation of how it works.

The expected output is a CSV file that the Web interface (both backend and frontend) can read.

An annotation relates a template and attribute in one language to a template and attribute in another language; after a transformation process, it is linked to an RDF property. The CSV file contains the template, attribute and property of each annotation, plus all computed classifier features, named TB{1-11}, C{1-4} and M{1-5} (that is, TB1 to TB11, C1 to C4 and M1 to M5).
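Before loading an annotation CSV file into the Web interface, it can help to confirm that all 20 feature columns are present. The following is a minimal sketch of such a check, not part of the project: `check_feature_columns` and `FEATURES` are hypothetical names, and it assumes a comma-separated file with an unquoted header on the first line whose feature columns are named exactly TB1 to TB11, C1 to C4 and M1 to M5. Note that Bash brace expansion writes ranges as `{1..11}` rather than `{1-11}`.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of the project): checks that an annotation
# CSV header contains all classifier feature columns.
# ASSUMPTIONS: comma-separated, unquoted header on the first line, and
# feature columns named exactly TB1..TB11, C1..C4 and M1..M5.

# Bash brace expansion turns the TB{1..11}-style ranges into 20 column names
FEATURES=(TB{1..11} C{1..4} M{1..5})

check_feature_columns() {
  local csv="$1" header col missing=0
  # Read only the header line; drop a trailing CR from CRLF files
  IFS= read -r header < "$csv"
  header="${header%$'\r'}"
  for col in "${FEATURES[@]}"; do
    # Surround with commas so that C1 does not match C10 or TC1
    if [[ ",${header}," != *",${col},"* ]]; then
      echo "missing feature column: ${col}" >&2
      missing=1
    fi
  done
  # 0 when every feature column was found, 1 otherwise
  return "$missing"
}
```

For example, `check_feature_columns annotations.csv && echo "all feature columns present"` (with `annotations.csv` standing in for a real output file) prints each missing column to standard error and fails if any is absent. Matching on whole comma-delimited fields rather than substrings avoids false positives between names such as C1 and C10.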

To generate the annotation CSV file, two kinds of resources are available:

- SPARQL and Bash scripts that build N-Triples files to be loaded into a triple store (such as Virtuoso): vfrico/dbpedia-gsoc-18

- Java project that generates the CSV files with the annotations: vfrico/dbpedia-gsoc-18. Note: this product is derived from Nandana's previous work, as stated in the source code files. It is licensed under the Apache 2.0 License.

This part of the work takes the annotation CSV files as input and provides a Web interface that lets users vote on annotations and manage classification results.

- Web App: built with Node.js, using React.js for the user interface.
  - Source code: vfrico/mapping-predictor-webapp
  - Docker image: vfrico/dbpedia-mappings-webapp
- Backend API: built with Java EE (GlassFish) and the Jersey REST framework.
  - Source code: vfrico/mapping-predictor-backend
  - Docker image: vfrico/dbpedia-mappings-backend
  - API documentation: GitHub Pages
  - Docker Compose file to deploy the whole client application in one step. You may need to edit the two environment variables that point to the backend API and the SPARQL endpoint. Available on: vfrico/mapping-predictor-backend

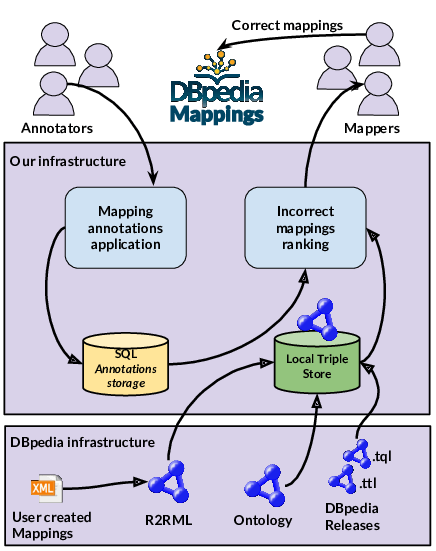

This image shows the architecture of all the modules.

Run the Bash script that downloads the Spanish and English datasets and reifies them:

```shell
cd scripts/
./launch_reif.sh
```

Put all of the generated data in the folder `/opt/datos` on the host system. If permission problems arise in later steps, give full read and write permissions to that folder. This path can be changed in the `volumes` section of the `docker-compose.yml` file.
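The staging step can be scripted roughly as follows. This is a sketch under stated assumptions, not a script shipped with the project: `stage_data` is a hypothetical helper, the directory where `launch_reif.sh` leaves its output is passed as the first argument because the README does not name it, and the generated files are assumed to use the `.ttl` extension that the Virtuoso loader matches later. It applies the README's permission advice directly.

```shell
#!/usr/bin/env bash
# Minimal sketch (hypothetical helper, not shipped with the project):
# stage generated Turtle files in the folder mounted into Virtuoso.
# ASSUMPTIONS: $1 is the output directory of launch_reif.sh (not named in
# the README) and the files use the .ttl extension matched by ld_dir_all.
stage_data() {
  local src_dir="$1" data_dir="${2:-/opt/datos}" restore
  # Enable nullglob temporarily so an empty match yields an empty array
  restore="$(shopt -p nullglob)"
  shopt -s nullglob
  local files=("$src_dir"/*.ttl)
  eval "$restore"
  if (( ${#files[@]} == 0 )); then
    echo "no .ttl files found in ${src_dir}" >&2
    return 1
  fi
  mkdir -p "$data_dir" || return 1
  cp -- "${files[@]}" "$data_dir"/ || return 1
  # Full read/write access for everyone; capital X adds execute only to
  # directories (and already-executable files), so folders stay traversable
  chmod -R a+rwX "$data_dir"
}
```

Writing to `/opt/datos` usually requires root privileges. Using `a+rwX` instead of `a+rwx` keeps the data files non-executable while still letting the container traverse the directory.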

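Open a shell in the container from the host:

```shell
docker exec -it virtuosodb /bin/bash
```

Inside the container, start the isql interpreter:

```shell
isql-v -U dba -P $DBA_PASSWORD
```

Load all triples in the container's `data/` folder (mapped from the host's `/opt/datos` when following the `docker-compose.yml` configuration):

```sql
-- Register every *.ttl file under data/ (recursively); the third argument is the target graph IRI
ld_dir_all('data/', '*.ttl', NULL);

-- Inspect the files queued for loading
select * from DB.DBA.load_list;

-- Run the bulk loader over the queued files
rdf_loader_run();
```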
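The same load can also be run non-interactively by piping the statements into isql. The sketch below uses a hypothetical helper, `loader_sql`, that prints the registration and loading statements above, followed by two follow-up steps described in Virtuoso's bulk loader documentation: listing files whose `ll_error` is set, and running `checkpoint` to persist the loaded data. It assumes `isql-v` reads statements from standard input when it is not attached to a terminal.

```shell
#!/usr/bin/env bash
# Hypothetical helper: prints the bulk-load statements for isql-v so they can
# be piped in instead of typed interactively.
# Arguments (optional): directory to scan and file mask, defaulting to the
# values used above. The heredoc does not glob, so '*.ttl' stays literal.
loader_sql() {
  local dir="${1:-data/}" mask="${2:-*.ttl}"
  cat <<EOF
ld_dir_all('${dir}', '${mask}', NULL);
rdf_loader_run();
select ll_file, ll_error from DB.DBA.load_list where ll_error is not null;
checkpoint;
EOF
}
```

On the host, `loader_sql | docker exec -i virtuosodb bash -c 'isql-v -U dba -P "$DBA_PASSWORD"'` would then run the whole load in one step. The single quotes make `$DBA_PASSWORD` expand inside the container, as in the interactive steps, rather than on the host.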