This project implements a real-time interactive rasterizer with 4 BRDFs, adaptive level of detail, implicit/explicit shape rendering, advanced lighting, interactive camera control, FBOs, FXAA, etc. This project is associated with the Brown CSCI 2230 Computer Graphics course, and all project handouts can be found here.

The handout for this part can be found here.

Run the program, open the specified .json file, follow the instructions to set the parameters, and save the image with the specified file name using the "Save image" button in the UI. It should automatically suggest the correct directory - again, be sure to follow the instructions in the left column to set the file name. Once you save the images, they will appear in the table below.

If your program can't find certain files or you aren't seeing your output images appear, make sure to:

- Set your working directory to the project directory

- Clone the `scenefiles` submodule. If you forgot to do this when initially cloning this repository, run `git submodule update --init --recursive` in the project directory

- Camera data: Calculation of the view matrix and projection matrix is implemented in `render/camera.cpp`

- Shape implementations: Shape implementations are in `shapes/`. The implementation has been run in lab 8 to validate the correctness of discretization and normal calculation.

- Shaders: Shader implementation and variable passing are implemented. One design choice to note is that only initializations are put in `initializeGL()`, while value passing and updates are put in `sceneChanged()`, to enable correct updating of scenefiles.

- Tessellation: Shape discretization changes with parameter toggles in all situations (including in adaptive level of detail).

- Software engineering, efficiency, & stability:

- Repeated code is factored into functions.

- When updating scenes or parameters, only necessary changes are computed.

- When changing scenes, cleanup functions are called to clear scene objects.

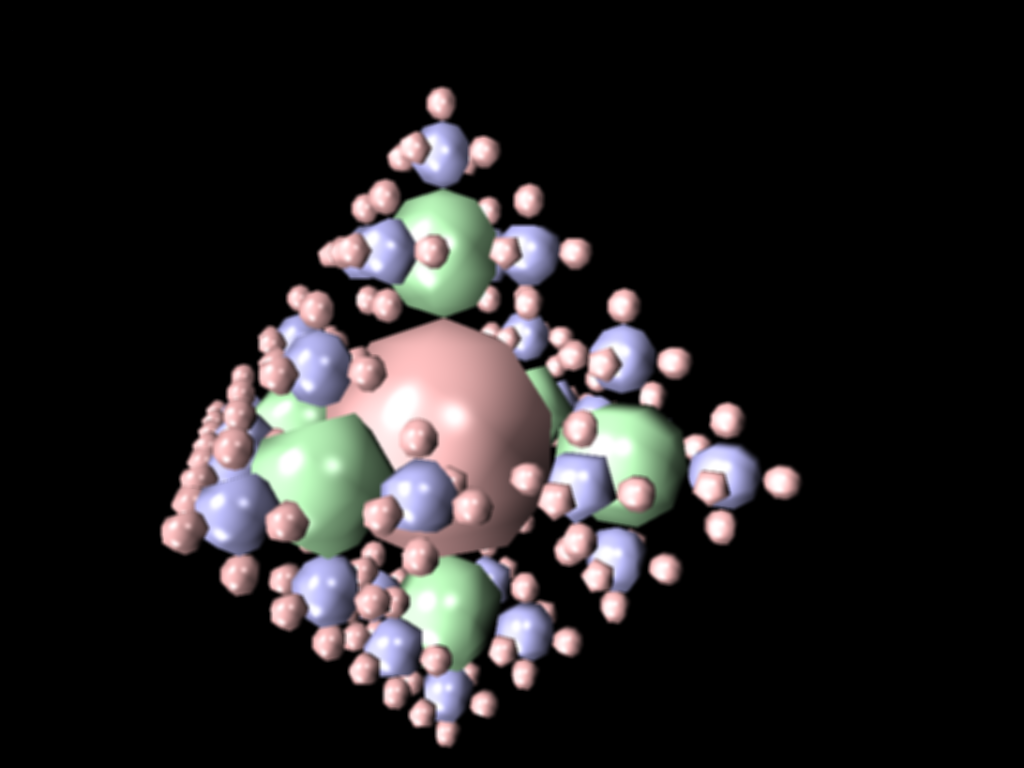

- The program can quickly render complex scenes like `recursive_sphere_7.json`

- Number of objects in the scene

When the number of objects in the scene exceeds 10, the discretization level is scaled by the factor `1.0 / (log(0.1 * (renderScene.sceneMetaData.shapes.size() - 10) + 1) + 1)`, so that the discretization level decreases smoothly as the number of objects in the scene changes.

Note that the lower bound of 10 can be changed, in which case the scaling factor becomes `1.0 / (log(0.1 * (renderScene.sceneMetaData.shapes.size() - lower_bound) + 1) + 1)`. In addition, a fixed factor of 0.5 is left commented out in the code for users who need this simplified version.
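The scaling factor described above can be sketched as a small standalone function. This is a minimal illustration, not the project's code: the name `objectCountFactor` and its signature are assumptions, and the early return for small scenes is added here because `shapes.size()` is unsigned and subtracting the lower bound from a smaller count would wrap around.

```cpp
#include <cmath>
#include <cstddef>

// Hypothetical helper (name and signature are assumptions, not the project's API).
// Returns the multiplier applied to the discretization level.
double objectCountFactor(std::size_t numShapes, std::size_t lowerBound = 10) {
    if (numShapes <= lowerBound) {
        return 1.0; // at or below the lower bound: no reduction
    }
    // Number of shapes beyond the lower bound (safe: numShapes > lowerBound here).
    double excess = static_cast<double>(numShapes - lowerBound);
    // 1 / (log(0.1 * excess + 1) + 1): equals 1 at excess = 0 and decays slowly.
    return 1.0 / (std::log(0.1 * excess + 1.0) + 1.0);
}
```

Because the logarithm grows slowly, the factor starts at 1 right at the lower bound and shrinks gradually, which avoids a visible jump in tessellation when one more object is added.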

- Distance from the object to the camera

The discretization parameters are scaled by `calculateDistanceFactors()`, which computes the minimum distance `minDistance` over all shapes in the scene and scales the discretization parameters of all other primitives by `1 / (distance / minDistance)`. After scaling, the discretization parameters are checked against a lower bound to ensure that distant primitives keep reasonable shapes.
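A minimal sketch of this distance-based scaling is shown below. The real `calculateDistanceFactors()` is not reproduced in this README, so `distanceFactors` and `scaledParam` are hypothetical stand-ins, and the lower-bound value is whatever the caller passes in.

```cpp
#include <algorithm>
#include <cmath>
#include <vector>

// Hypothetical stand-in for calculateDistanceFactors(): takes one camera
// distance per shape and returns one scale factor per shape.
std::vector<double> distanceFactors(const std::vector<double>& distances) {
    if (distances.empty()) {
        return {};
    }
    double minDistance = *std::min_element(distances.begin(), distances.end());
    std::vector<double> factors;
    factors.reserve(distances.size());
    for (double d : distances) {
        // 1 / (distance / minDistance); the nearest shape gets factor 1.
        factors.push_back(d > 0.0 ? minDistance / d : 1.0);
    }
    return factors;
}

// Scale a discretization parameter and clamp it to a lower bound
// (the bound itself is an assumed, caller-supplied value).
int scaledParam(int param, double factor, int lowerBound) {
    int scaled = static_cast<int>(std::lround(param * factor));
    return std::max(scaled, lowerBound);
}
```

For example, with distances 2, 4, and 8 the factors are 1, 0.5, and 0.25, so a parameter of 25 would drop to about 6 for the farthest shape before the lower bound is applied.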

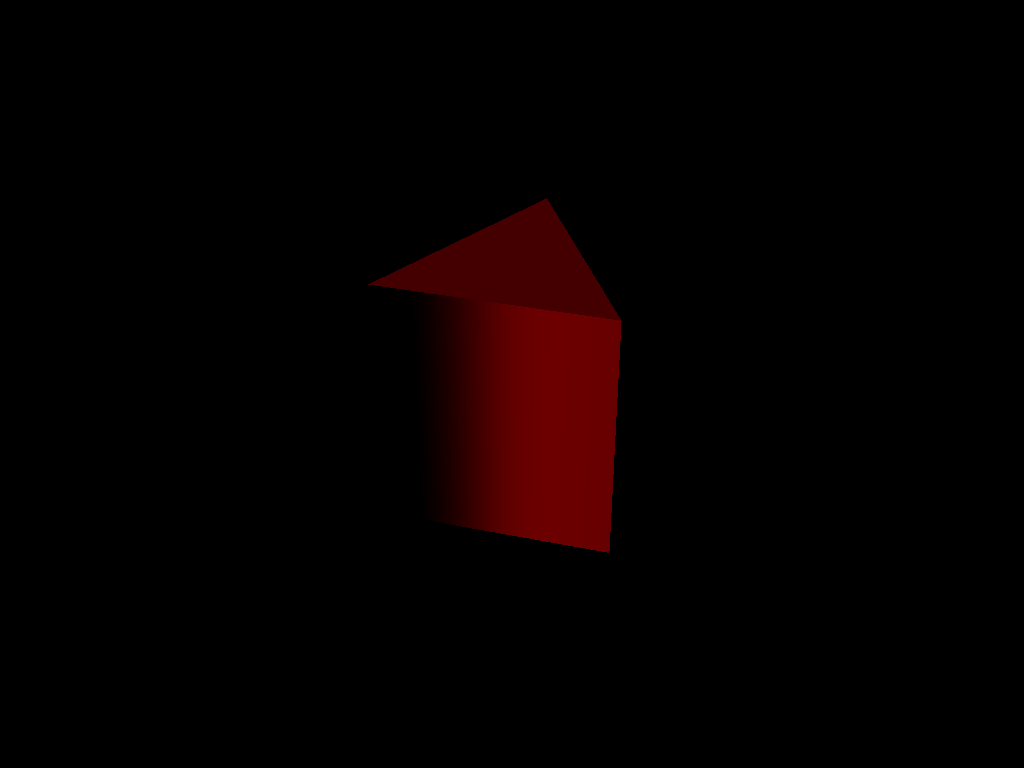

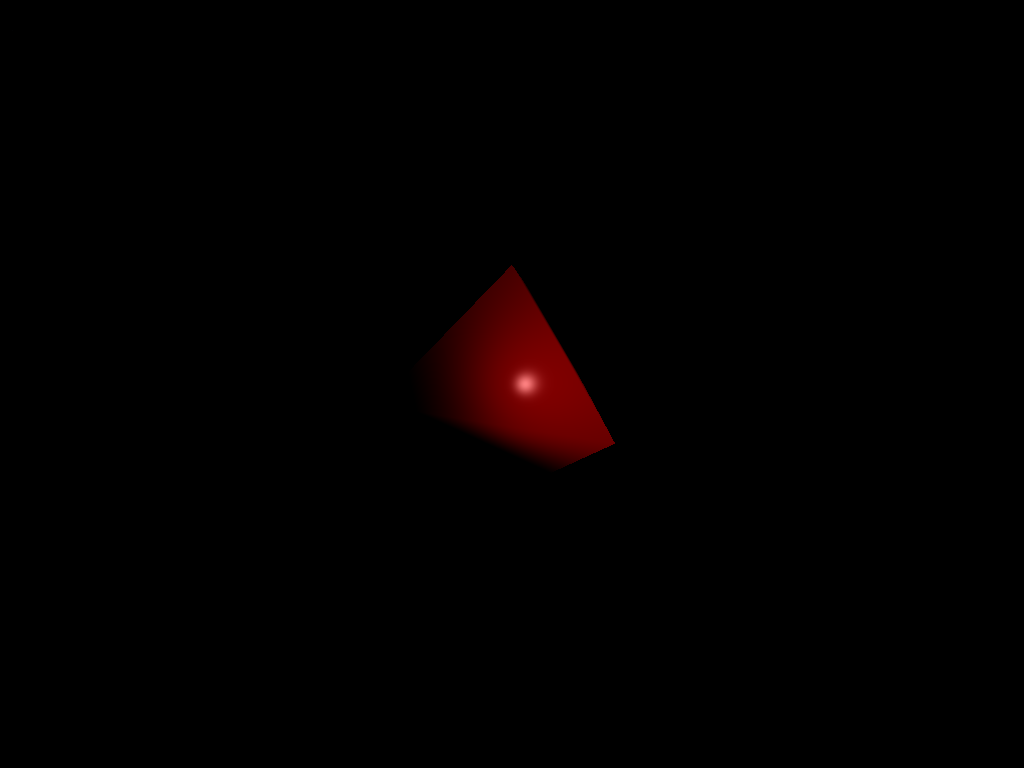

The table below compares the output with extra_credit2 turned off and on. When it is turned on, more distant spheres are discretized more coarsely than nearer ones. When it is turned off, all spheres have the same discretization level.

| File/Method To Produce Output | Turned off | Turned on |
|---|---|---|
| Input: `recursive_sphere_5.json`; Output: `recursive_sphere_5.png`; Parameters: (25, 25, 0.1, 100) |  |  |

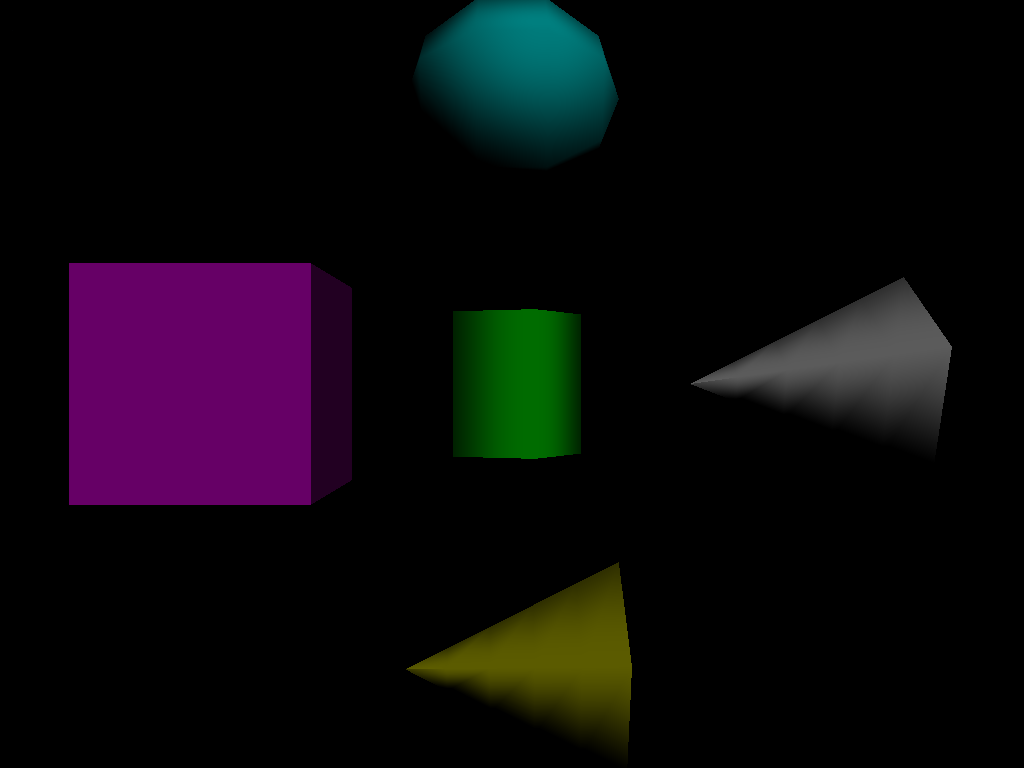

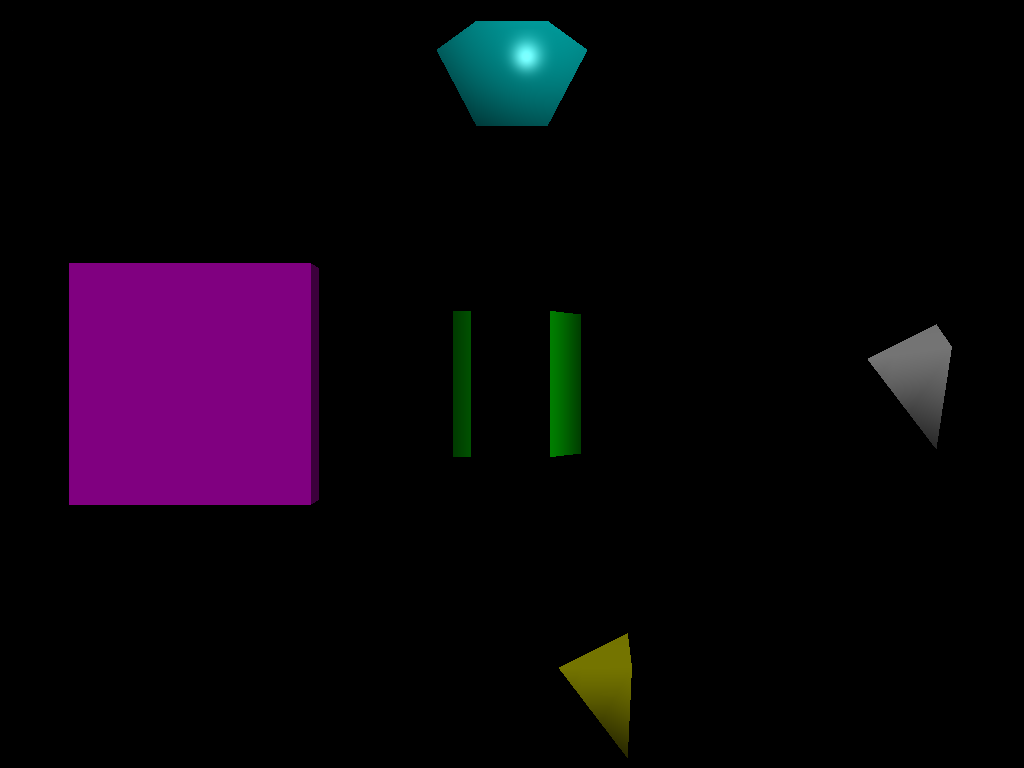

This custom scene file resembles a robot car with sensors like eyes.

| File/Method To Produce Output | Your Output |
|---|---|
| Input: `custom_scene_file.json`; Output: `custom_scene_file.png`; Parameters: (25, 25, 0.1, 100) |  |

- Implemented my own .obj file reader from scratch.

- Can handle .obj files that explicitly specify `vn` info (e.g., the `dragon_mesh.obj`) and files that don't explicitly provide it (e.g., the `bunny_mesh.obj`). In the latter situation, the .obj reader function automatically calculates the vertex normals.
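When a file provides no `vn` records, vertex normals have to be derived from the faces. The README does not say which scheme the reader uses, so the sketch below assumes one common choice: sum the (area-weighted) face normals at each vertex and normalize. `Vec3` and `computeVertexNormals` are illustrative names, not the project's types.

```cpp
#include <array>
#include <cmath>
#include <cstddef>
#include <vector>

struct Vec3 { double x, y, z; };

static Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }

static Vec3 cross(Vec3 a, Vec3 b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}

// Hypothetical helper: per-vertex normals for an indexed triangle mesh.
// Assumes counter-clockwise winding and valid indices.
std::vector<Vec3> computeVertexNormals(
        const std::vector<Vec3>& positions,
        const std::vector<std::array<std::size_t, 3>>& triangles) {
    std::vector<Vec3> normals(positions.size(), Vec3{0.0, 0.0, 0.0});
    for (const auto& t : triangles) {
        // Unnormalized face normal; its length is twice the triangle area,
        // so larger faces contribute more to the vertex normal.
        Vec3 n = cross(sub(positions[t[1]], positions[t[0]]),
                       sub(positions[t[2]], positions[t[0]]));
        for (std::size_t i : t) {
            normals[i].x += n.x;
            normals[i].y += n.y;
            normals[i].z += n.z;
        }
    }
    for (Vec3& n : normals) {
        double len = std::sqrt(n.x * n.x + n.y * n.y + n.z * n.z);
        if (len > 0.0) { // leave unreferenced vertices at zero
            n.x /= len;
            n.y /= len;
            n.z /= len;
        }
    }
    return normals;
}
```

Averaging over adjacent faces gives smooth shading on meshes like the bunny; a reader that wants faceted shading would instead duplicate vertices per face.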

| File/Method To Produce Output | Your Output |
|---|---|
| Input: `bunny_mesh.json`; Output: `bunny_mesh.png`; Parameters: (5, 5, 0.1, 100) |  |
| Input: `dragon_mesh.json`; Output: `dragon_mesh.png`; Parameters: (5, 5, 0.1, 100) |  |

The handout for this part can be found here.

Important

Before generating expected outputs, make sure to:

- Set your working directory to the project directory

- From the project directory, run `git submodule update --recursive --remote` to update the `scenefiles` submodule.

- Change all instances of `"action"` in `mainwindow.cpp` to `"action"` (there should be 2 instances, one in `MainWindow::onUploadFile` and one in `MainWindow::onSaveImage`).

Run the program, open the specified .json file and follow the instructions to set the parameters.

Note that in some of the output images the camera appears to have moved slightly, but the on-screen results are exactly the same as the expected output. This can be checked in the demonstration.

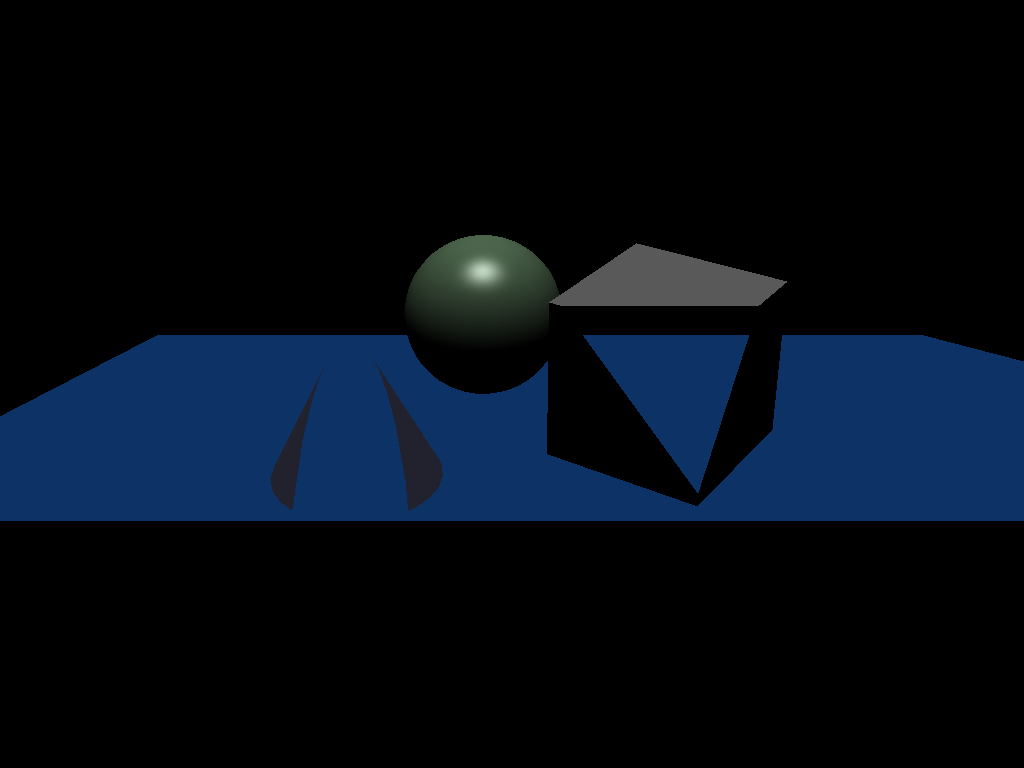

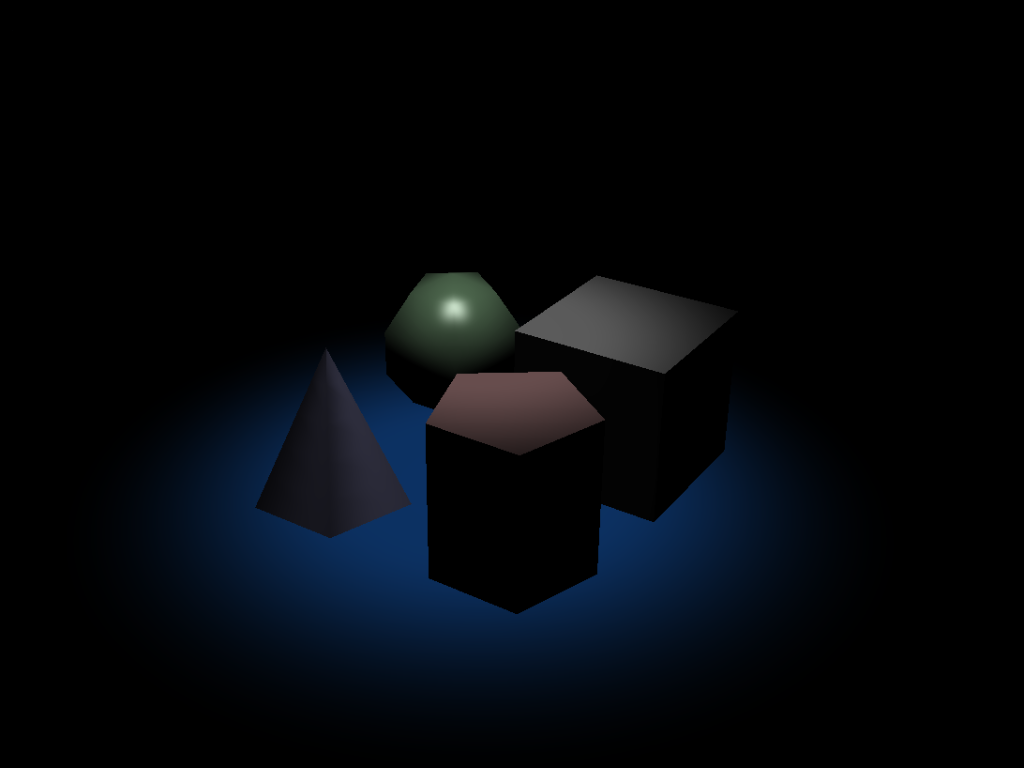

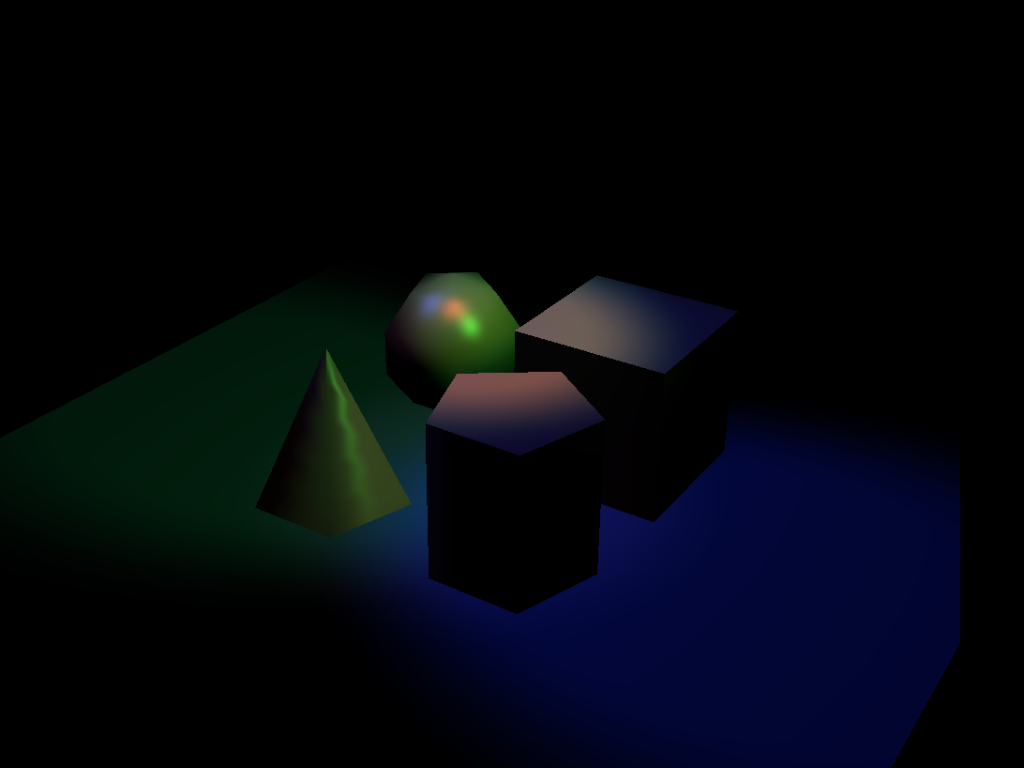

| File/Method To Produce Output | Expected Output | Your Output |

|---|---|---|

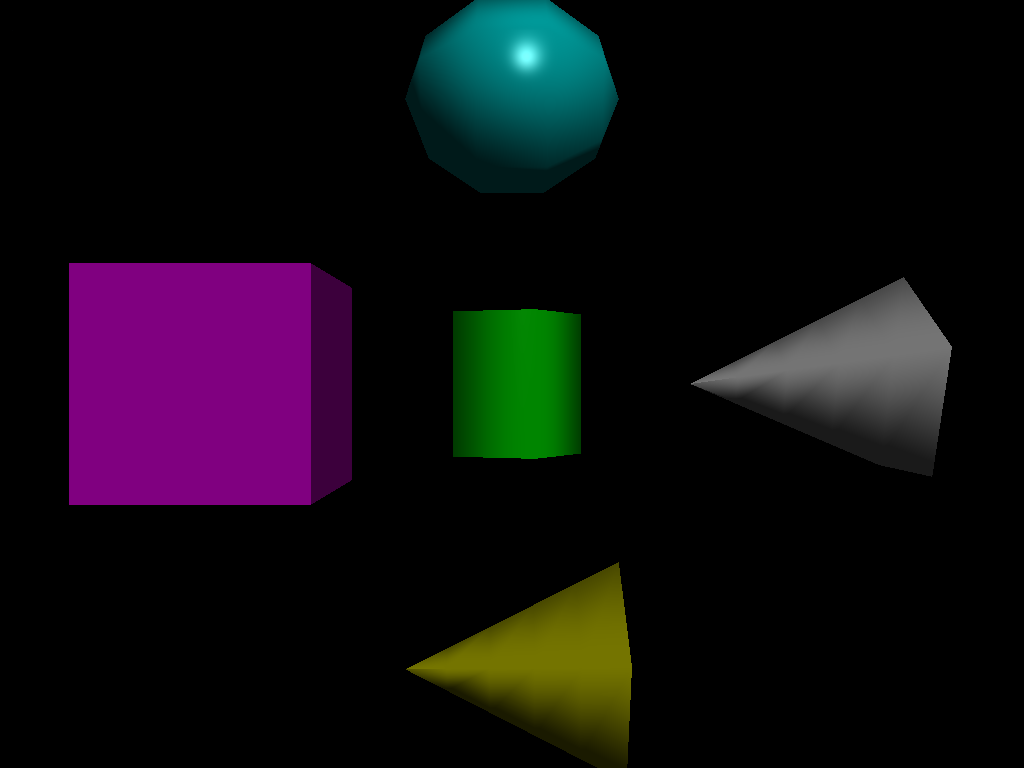

Input: primitive_salad_1.jsonApply invert filter Output: primitive_salad_1_invert.pngParameters: (5, 5, 0.1, 100) |

|

|

| File/Method To Produce Output | Expected Output | Your Output |
|---|---|---|
| Input: `primitive_salad_1.json`; Apply grayscale filter; Output: `primitive_salad_1_grayscale.png`; Parameters: (5, 5, 0.1, 100) |  |  |

| File/Method To Produce Output | Expected Output | Your Output |
|---|---|---|
| Input: `recursive_sphere_4.json`; Apply blur filter; Output: `recursive_sphere_4_blur.png`; Parameters: (5, 5, 0.1, 100) |  |  |

Instructions: Load chess.json. For about 1 second each in this order, press:

- W, A, S, D to move in each direction by itself

- W+A to move diagonally forward and to the left

- S+D to move diagonally backward and to the right

- Space to move up

- Cmd/Ctrl to move down

Screen.Recording.2023-11-29.at.4.34.35.AM.mov

camera_translation.mov

Instructions: Load chess.json. Take a look around!

Screen.Recording.2023-11-29.at.4.33.06.AM.mov

camera_rotation.mov

- Scene objects are regenerated only when Param1 and Param2 are changed.

- No inverse or transpose is calculated in the vertex shader. The normal matrix is precomputed on the CPU and passed to the vertex shader as a uniform.

- Texture images are reloaded only when the texture image filepath is changed.

- Error conditions (e.g., nonexistent texture files) are properly handled, and the program still produces results without crashing.

- Common code is wrapped into functions to reduce redundancy.

- Code is properly annotated with explanations of key steps.

The main known bug is in texture mapping. Fragments near the edges of a texture image are sampled at inaccurate uv locations, which results in some high-frequency aliasing. I have ensured that all clamping is applied properly, but it seems that rounding error in the vertex positions may cause this issue.

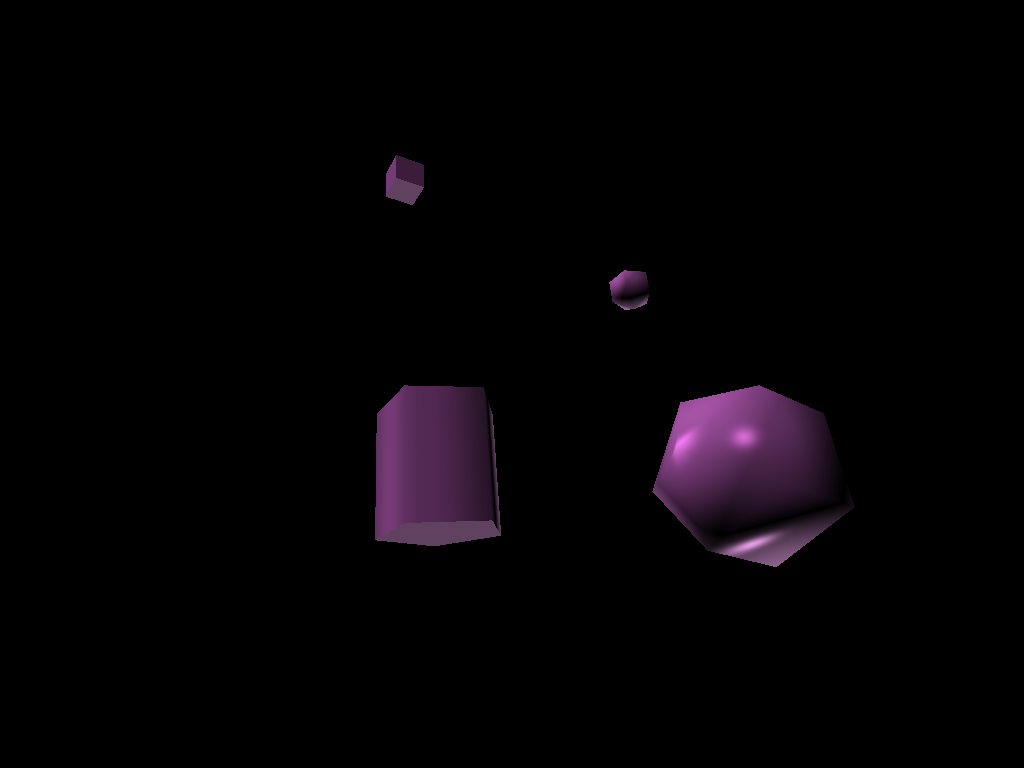

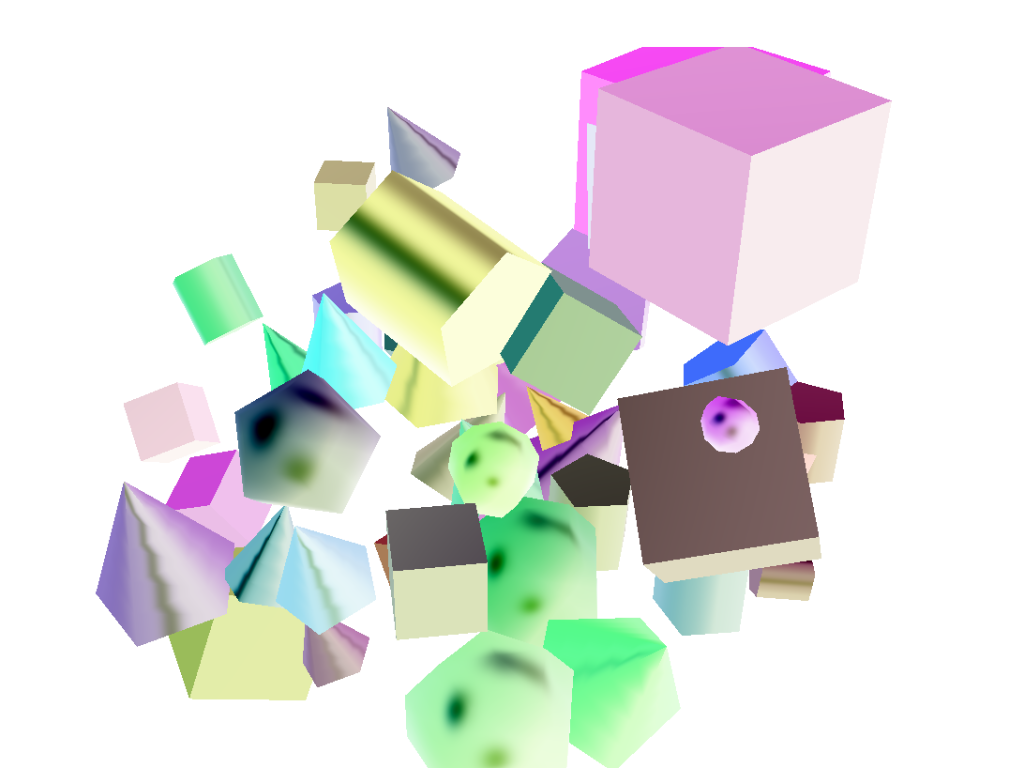

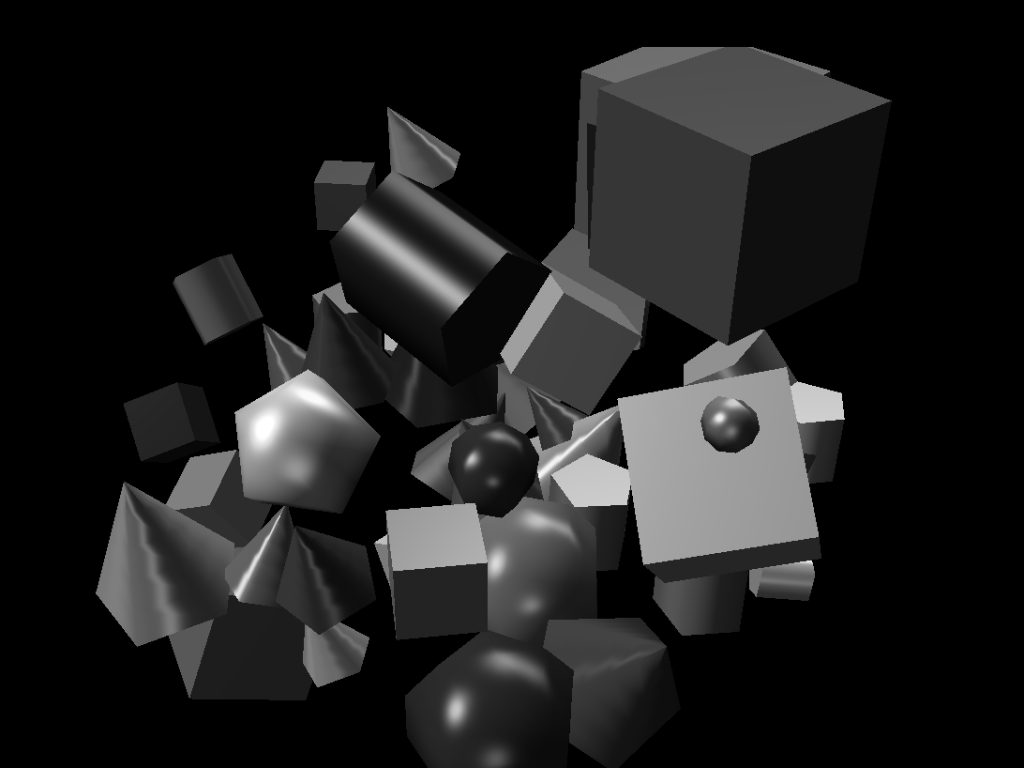

A grayscale filter and a Sobel filter are implemented as the extra per-pixel and multi-stage filters, respectively.

| Per-pixel - Grayscale | Multi-Stage Filter - Sobel filter |
|---|---|
|  |  |
|  |  |
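The two filters can be illustrated on the CPU as follows. In the project they run in shaders, so this is only a sketch of the underlying arithmetic: the luminance weights, the border clamping, and the single-pass structure are assumptions, and `toGray` and `sobelMagnitude` are hypothetical names.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <vector>

struct RGB { float r, g, b; };

// Per-pixel filter: weighted luminance (assumed Rec. 601 weights).
float toGray(RGB c) {
    return 0.299f * c.r + 0.587f * c.g + 0.114f * c.b;
}

// Sobel gradient magnitude on a row-major grayscale image.
// Out-of-range neighbors are clamped to the nearest border pixel.
std::vector<float> sobelMagnitude(const std::vector<float>& gray, int width, int height) {
    auto at = [&](int x, int y) {
        x = std::min(std::max(x, 0), width - 1);
        y = std::min(std::max(y, 0), height - 1);
        return gray[static_cast<std::size_t>(y) * width + x];
    };
    std::vector<float> out(gray.size(), 0.0f);
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            // Horizontal gradient: right column minus left column.
            float gx = (at(x + 1, y - 1) + 2.0f * at(x + 1, y) + at(x + 1, y + 1))
                     - (at(x - 1, y - 1) + 2.0f * at(x - 1, y) + at(x - 1, y + 1));
            // Vertical gradient: bottom row minus top row.
            float gy = (at(x - 1, y + 1) + 2.0f * at(x, y + 1) + at(x + 1, y + 1))
                     - (at(x - 1, y - 1) + 2.0f * at(x, y - 1) + at(x + 1, y - 1));
            out[static_cast<std::size_t>(y) * width + x] = std::sqrt(gx * gx + gy * gy);
        }
    }
    return out;
}
```

A flat region produces zero response, while a step between neighboring columns or rows produces a large magnitude, which is what makes the filter highlight edges.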

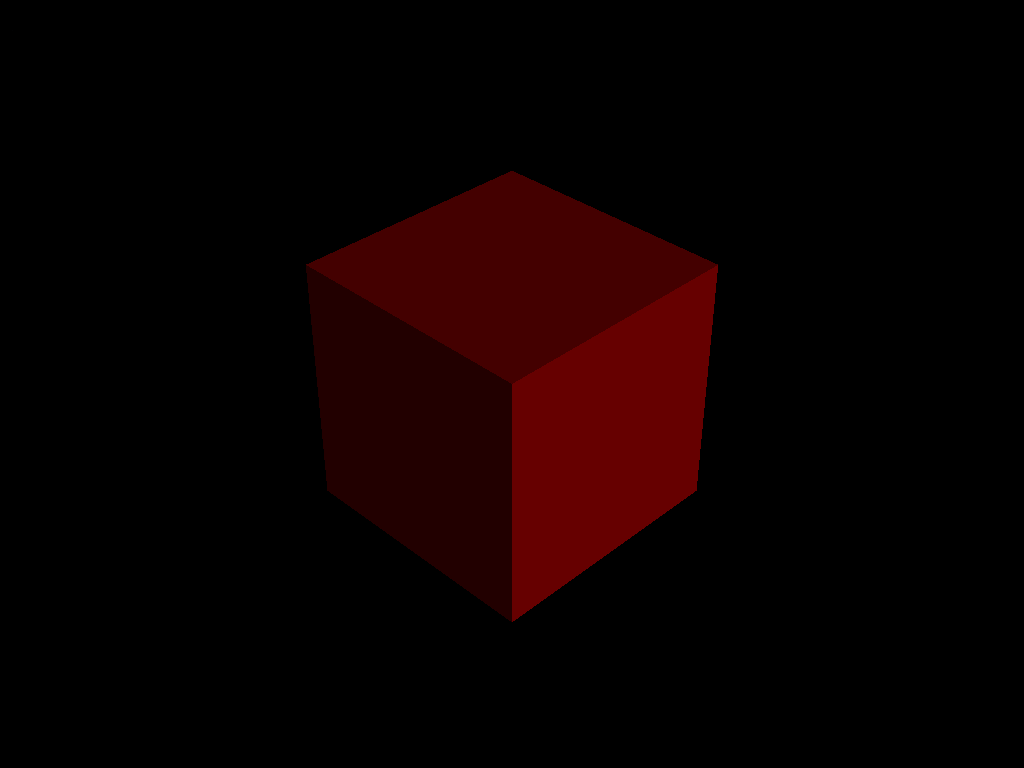

Texture mapping is implemented by adding uv coordinates to the VBO and sampling the texture image color in the Phong illumination model.

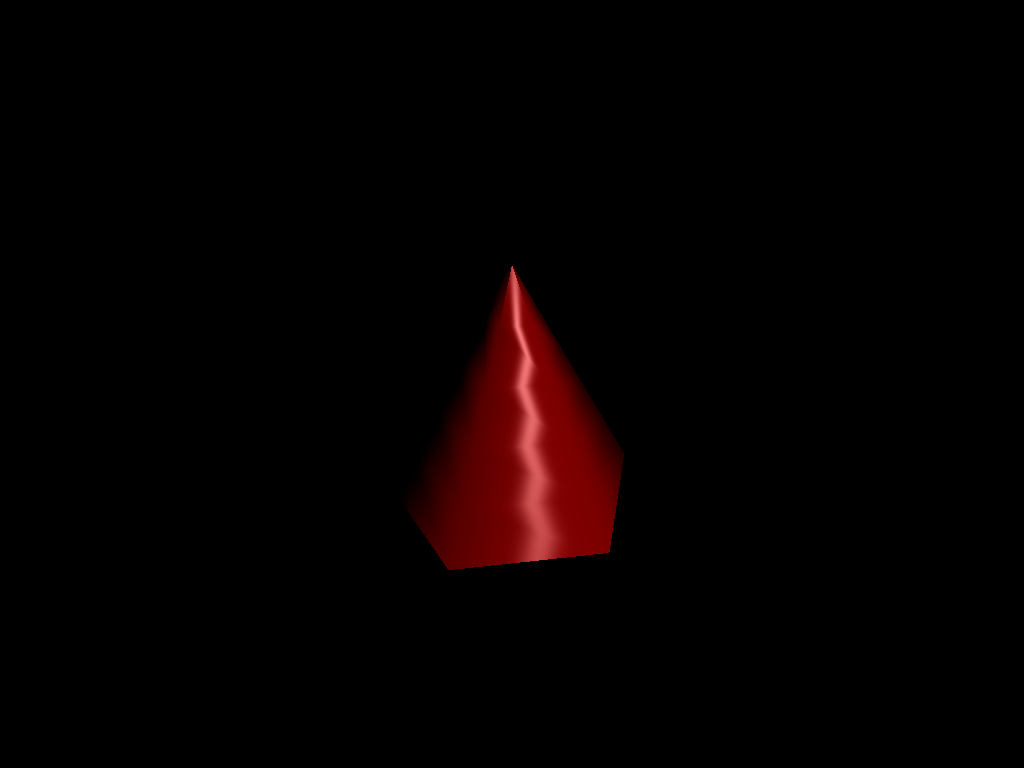

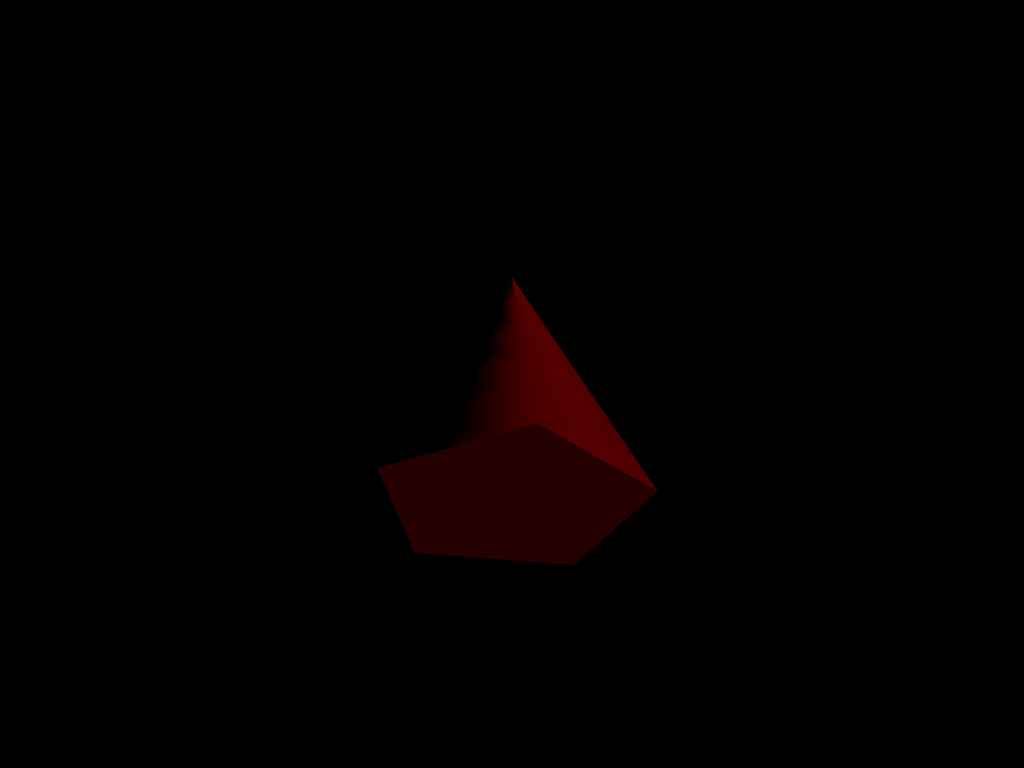

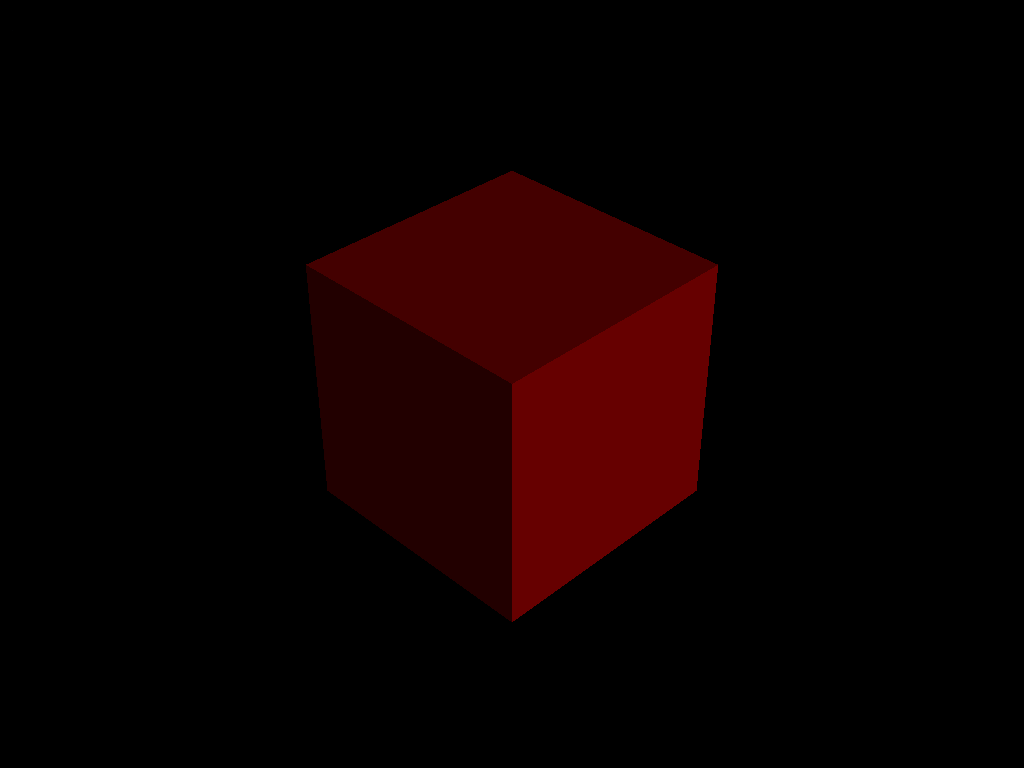

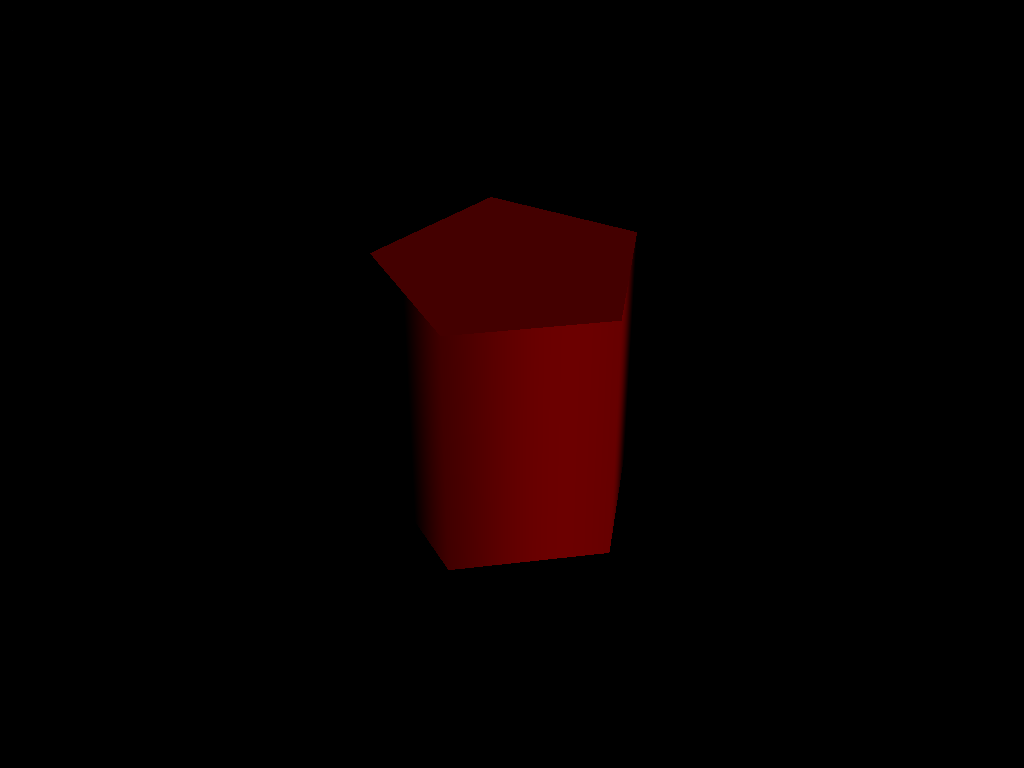

| Primitive Type | Single | Multiple |
|---|---|---|
| Sphere |  |  |
| Cube |  |  |
| Cylinder |  |  |
| Cone |  |  |

The FXAA is implemented with the following steps:

- Luminance Calculation: Compute the luminance of the current pixel and its neighbors.

- Edge Detection: Detect edges by comparing luminance differences.

- Sub-Pixel Anti-Aliasing: Incorporate finer details by considering sub-pixel variances.

- Edge Direction Determination: Calculate the gradient to determine edge direction.

- Anti-Aliasing Blend: Blend colors along the detected edges based on the edge direction and strength.
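The first steps of the list above (luminance, edge detection, edge direction) can be sketched on the CPU as follows. The project performs these in a shader; the names here are hypothetical, and the threshold values are commonly used FXAA defaults that are assumed rather than taken from the project.

```cpp
#include <algorithm>
#include <cmath>

struct Color { float r, g, b; };

// Luminance calculation (assumed Rec. 601 weights).
float luma(Color c) {
    return 0.299f * c.r + 0.587f * c.g + 0.114f * c.b;
}

// Luminance of the current pixel and its four direct neighbors.
struct LumaNeighborhood { float center, north, south, east, west; };

// Edge detection: the local contrast must exceed both an absolute
// threshold and a threshold relative to the brightest sample.
bool isEdge(const LumaNeighborhood& l,
            float absThreshold = 0.0312f, float relThreshold = 0.125f) {
    float lo = std::min({l.center, l.north, l.south, l.east, l.west});
    float hi = std::max({l.center, l.north, l.south, l.east, l.west});
    return (hi - lo) >= std::max(absThreshold, relThreshold * hi);
}

// Edge direction: a horizontal edge shows a stronger luminance change
// along the vertical axis than along the horizontal axis.
bool isHorizontalEdge(const LumaNeighborhood& l) {
    float vertical = std::fabs(l.north + l.south - 2.0f * l.center);
    float horizontal = std::fabs(l.east + l.west - 2.0f * l.center);
    return vertical >= horizontal;
}
```

Pixels that fail `isEdge` are left untouched, so the more expensive blending work is only spent where aliasing is likely to be visible.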

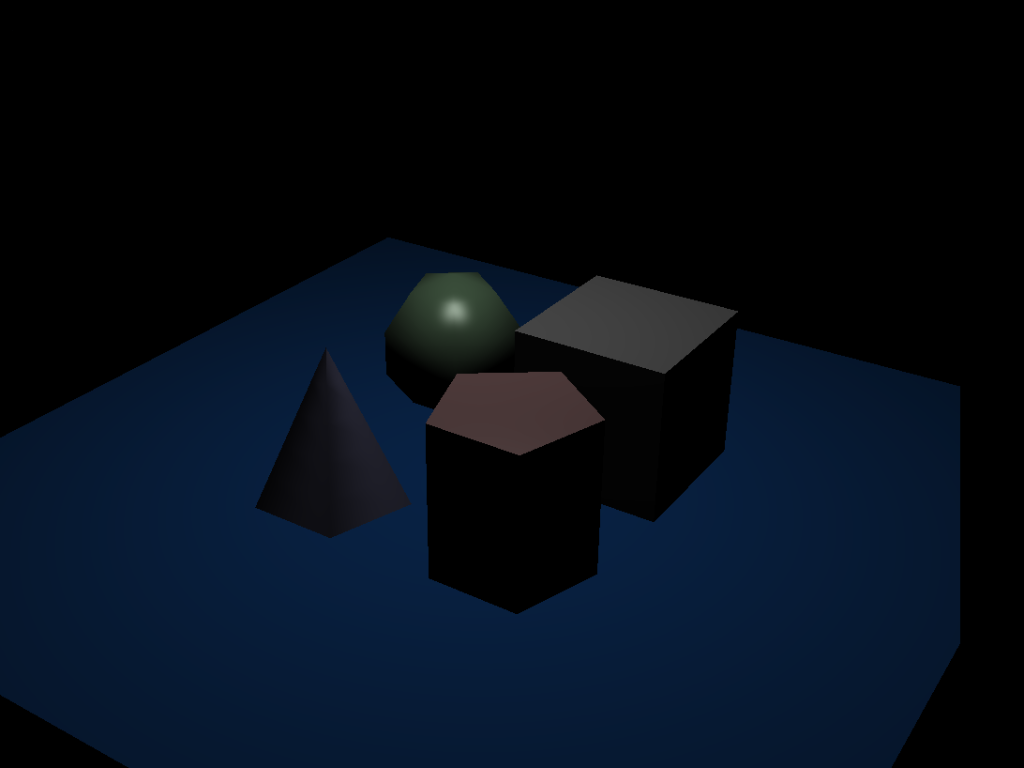

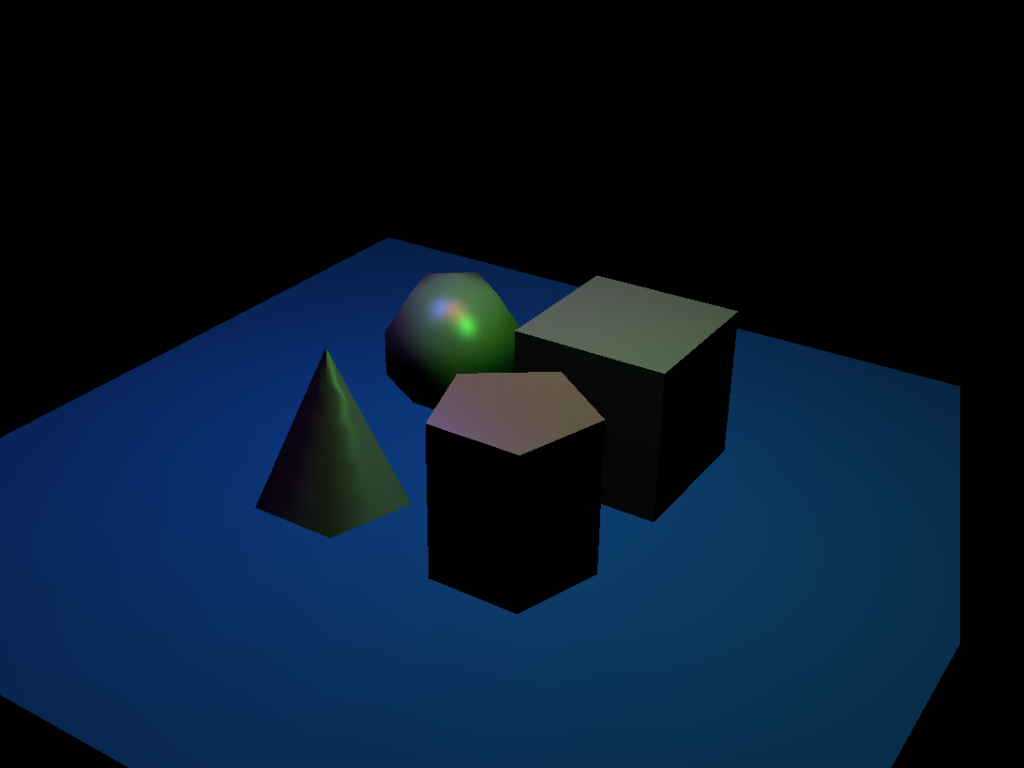

The difference is clearly visible when zoomed in, and also in the live demonstration.

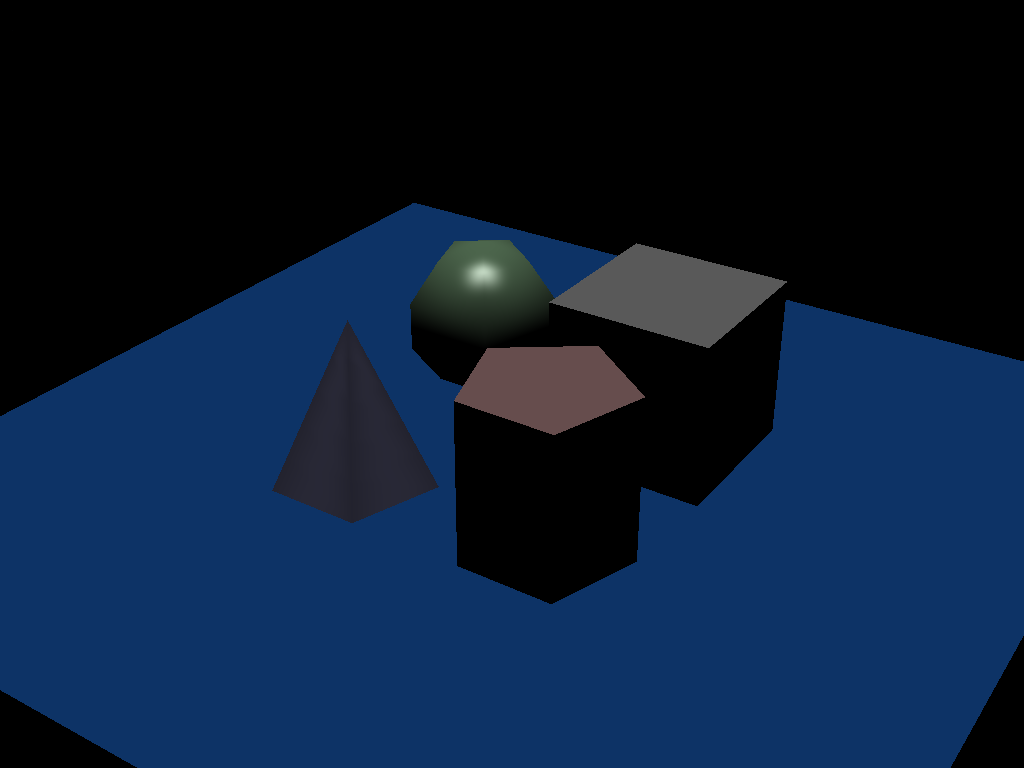

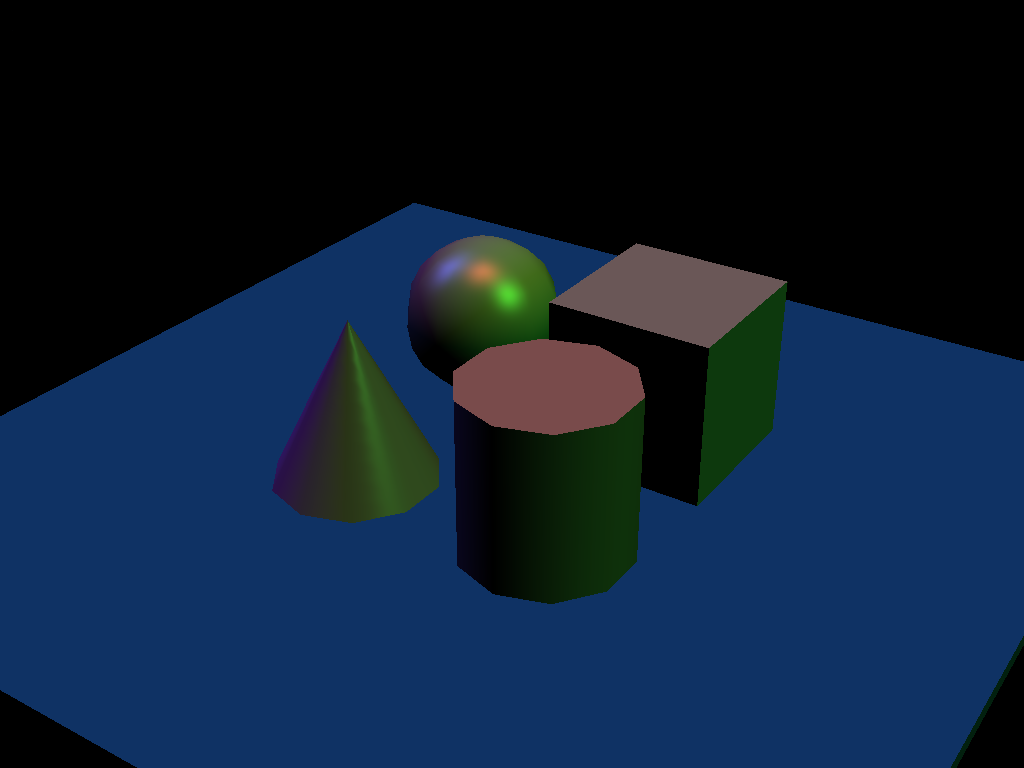

| File | No FXAA | With FXAA |
|---|---|---|
| `primitive_salad_1.json` |  |  |
| `recursive_sphere_4.json` |  |  |
