
Closed

Description

Hi Great Team,

I would like to know the right way to use the NVLAMB optimizer. I looked at the test code here:

    loss = torch.nn.CrossEntropyLoss(ignore_index=-1)
    model = TestMod()
    model.cuda()
    model.half()
    model.train()
    model = torch.jit.script(model)
    param_optimizer = list(model.named_parameters())
    no_decay = ['bias', 'gamma', 'beta', 'LayerNorm']
    optimizer_grouped_parameters = [
        {'params': [p for n, p in param_optimizer if not any(nd in n for nd in no_decay)], 'weight_decay': 0.01},
        {'params': [p for n, p in param_optimizer if any(nd in n for nd in no_decay)], 'weight_decay': 0.0}]
    grad_scaler = torch.cuda.amp.GradScaler(enabled=True)
    optimizer = FusedLAMBAMP(optimizer_grouped_parameters)
    x, y = make_classification(n_samples=N_SAMPLES, n_features=N_FEATURES, random_state=0)
    x = StandardScaler().fit_transform(x)
    inputs = torch.from_numpy(x).cuda().half()
    targets = torch.from_numpy(y).cuda().long()
    del x, y
    dataset = torch.utils.data.TensorDataset(inputs, targets)
    loader = torch.utils.data.DataLoader(dataset, batch_size=BATCH_SIZE)
    for epoch in range(20):
        loss_values = []
        for i, (x, y) in enumerate(loader):
            with torch.cuda.amp.autocast():
                out1 = model(x)
                # Might be better to run `CrossEntropyLoss` in
                # `with torch.cuda.amp.autocast(enabled=False)` context.
                out2 = loss(out1, y)
            grad_scaler.scale(out2).backward()
            grad_scaler.step(optimizer)
            grad_scaler.update()
            optimizer.zero_grad(set_to_none=True)
            loss_values.append(out2.item())
        print(f"Epoch: {epoch}, Loss avg: {np.mean(loss_values)}")
    print_param_diff(optimizer)
    print("state dict check")
    optimizer.load_state_dict(optimizer.state_dict())
    print_param_diff(optimizer)

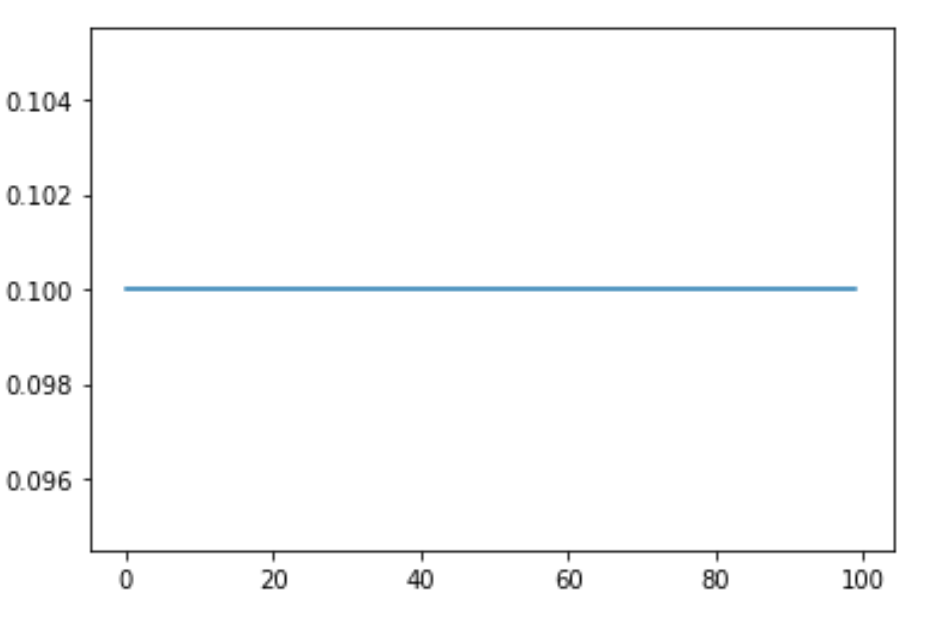

I also wrote a test example with PolyWarmUpScheduler, but something seems to be wrong with the combination of the optimizer and the lr_scheduler.

    from lamb_amp_opt.fused_lamb import FusedLAMBAMP
    from schedulers import PolyWarmUpScheduler
    import torch
    import matplotlib.pyplot as plt

    device = torch.device("cuda")
    lr = 1
    total_steps = 100
    warmup_proportion = 0.1

    model = torch.nn.Linear(10, 1).to(device)
    # optimizer = torch.optim.Adam(model.parameters(), lr=lr)
    optimizer = FusedLAMBAMP(model.parameters(), lr=lr)
    lr_scheduler = PolyWarmUpScheduler(optimizer,
                                       warmup=warmup_proportion,
                                       total_steps=total_steps,
                                       base_lr=lr,
                                       device=device)

    lrs = []
    loss_fct = torch.nn.MSELoss()
    for _ in range(total_steps):
        output = model(torch.rand(2, 10).to(device))
        labels = torch.rand(2, 1).to(device)
        loss = loss_fct(output, labels)
        loss.backward()
        optimizer.step()
        optimizer.zero_grad()
        lr_scheduler.step()
        # Record the learning rate of the first parameter group after each step.
        lrs.append(optimizer.param_groups[0]['lr'].item())

    plt.plot(lrs)

I also tried the official Adam optimizer in place of FusedLAMBAMP. Nothing changed.
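For reference, the curve I would expect from a polynomial warmup schedule can be sketched in plain Python. This is only a rough sketch under my own assumptions (linear warmup over the first `warmup` fraction of steps, then polynomial decay with a degree of 0.5); `poly_warmup_lr` is a hypothetical helper, not the actual PolyWarmUpScheduler implementation, which may differ.

```python
def poly_warmup_lr(step, total_steps, warmup, base_lr, degree=0.5):
    """Hypothetical helper: linear warmup followed by polynomial decay.

    The decay degree of 0.5 is an assumption for illustration only.
    """
    progress = step / total_steps
    if progress < warmup:
        # Warmup phase: scale linearly from 0 up towards base_lr.
        return base_lr * progress / warmup
    # Decay phase: shrink towards 0 as progress approaches 1.
    return base_lr * (1.0 - progress) ** degree


# Same settings as the test script: lr = 1, 100 steps, 10% warmup.
expected_lrs = [poly_warmup_lr(s, 100, 0.1, 1.0) for s in range(1, 101)]
```

Plotting `expected_lrs` next to the recorded `lrs` should make any mismatch between the two curves easy to see.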

Am I misunderstanding something about the LAMB optimizer and PolyWarmUpScheduler? Thanks so much!
