-

Notifications

You must be signed in to change notification settings - Fork 13.4k

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Landing Target Estimator refactor #20653

base: main

Are you sure you want to change the base?

Conversation

|

Wow very thorough explanation! 👏 |

|

I was hoping we could combine the follow-target estimator and the precision landing estimator. Both are estimating the position of a possibly moving object, and we shouldn't have two separate estimators for that. |

In general I would be a more comfortable with this broken up for more specific use cases and common pieces could be shared in libraries. I'm concerned that this is a bit of a monolith that's going to be difficult to maintain due to no one having access to all of these things. Separately I think it would help to capture more specific use cases we can commit to maintaining.

More of this could be composable with purpose built "tracking" modules that based on configuration the output would be published as a moving target or perhaps landing target. |

src/modules/landing_target_estimator/LandingTargetEstimator.cpp

Outdated

Show resolved

Hide resolved

src/modules/landing_target_estimator/LandingTargetEstimator.cpp

Outdated

Show resolved

Hide resolved

src/modules/landing_target_estimator/LandingTargetEstimator.cpp

Outdated

Show resolved

Hide resolved

src/modules/landing_target_estimator/python_derivation/SymForceKF_coupled_static.py

Outdated

Show resolved

Hide resolved

|

Latest commit updates acaef0f: New filter without the GPS bias in the state [x, y, z, vx, vy, vz]. The system automatically chooses between

The main motivations are:

Note: a simpler alternative would be to modify the GPS observation:

It is much simpler, however the number of states is not reduced, leading to un-necessary computations (e.g. 9 states instead of 6 for stationary targets). Simulation (

TODO: reduce the number of Kalman filters by chosing between coupled and decoupled. In the end we would have 4 filters: |

|

@JonasPerolini nice job! There's no need to compensate gravity, current kalman filter only had 4 states, xy axis's position and velocity; But your kalman had z axis's position and velocity, so need compensate gravity. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I'm surprised by the number of different source files inside landing_target_estimator/ 😅

msg/landing_target_orientation.msg

Outdated

| @@ -0,0 +1,11 @@ | |||

| # Relative orientation of precision land target in navigation (body fixed, north aligned, NED) and inertial (world fixed, north aligned, NED) frames | |||

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Shouldn't the orientation be part of the landing_target_pose message?

https://en.wikipedia.org/wiki/Pose_(computer_vision)

Then again the velocity should not be part of the pose...

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Yes @potaito , in theory it should. LTEstOrientation.cpp and LTEstPosition.cpp handle the orientation and position filters respectively. So the orientation and the position/ velocity should be in different messages. Do you want me to rename landing_target_pose to landing landing_target_est_state? Note that the files and msgs should eventually be renamed to remove the word "landing" as the estimator can be used for different tasks.

msg/landing_target_orientation.msg

Outdated

| float32 theta # Orientation of the target relative to navigation frame [rad] [0 ; 2Pi] | ||

| float32 cov_theta # orientation uncertainty | ||

|

|

||

| float32 v_theta # orientation speed of the target relative to navigation frame [rad/s] |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This should be called rate :)

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Changed v_theta and cov_v_theta it to rate and cov_rate respectively @potaito .

msg/landing_target_orientation.msg

Outdated

|

|

||

| bool orientation_valid # Flag showing whether relative position is valid | ||

|

|

||

| float32 theta # Orientation of the target relative to navigation frame [rad] [0 ; 2Pi] |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Do you think a single angle is enough? In a different PR I also wanted to add the target orientation for tracking:

https://github.com/PX4/PX4-Autopilot/pull/20487/files#diff-1a356aa7922c342bc9fea340b3b616a3695f223d3abe8bb65035b3eef5d3e3e1R27

I added a Quaternion, because that's what most CV libraries for fiducial markers spit out. In the end I only cared about the orientation angle around the z-axis though, in order to adjust the drone's heading.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I'm not against including the full orientation in the future @potaito , but I think that we should start with a single angle (which already covers most of the use cases)

| irlock_report_s orb_report{}; | ||

|

|

||

| // Get the current attitude, will be used to rotate the sensor observation into navigation frame | ||

| matrix::Quaternionf quat_att(&vehicle_attitude.q[0]); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This is exactly the problem of the irlock_report message and why we need to get away from it.

An actual IR beacon does not have a measurable orientation. I do understand that in this case the message is needed because it's "the message that pipes marker data into PX4's landing target estimator".

Should we just get over it and rename it to something else? Fortunately uORB messages are not mavlink 😂

| @@ -47,6 +47,8 @@ | |||

| #include <px4_platform_common/module.h> | |||

| #include <px4_platform_common/i2c_spi_buses.h> | |||

| #include <uORB/Publication.hpp> | |||

| #include <uORB/Subscription.hpp> | |||

| #include <uORB/topics/vehicle_attitude.h> | |||

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Generally I agree with the sentiment of taking care of tasks such as rotating a measurement into the right frame at the lowest level where it's feasible. It's the same thought that I also suggested for the ultrawide bandwidth driver, where these transformations are done in the landing target estimator instead: https://github.com/PX4/PX4-Autopilot/pull/21124/files#diff-915a40c8957689cf8e6fbcf6f7168e03d831b549e381956165f0fc3c4451ce23R278-R318

@bresch @dagar what's your opinion on this? Should a driver already take care of rotating a measurement into the appropriate coordinate frame for PX4? I think it should. I'm just struggling with the nomenclature of a "driver", since it's then doing a bit more than a regular device driver would.

src/modules/landing_target_estimator/KF_xyzb_v_coupled_moving.cpp

Outdated

Show resolved

Hide resolved

src/modules/landing_target_estimator/KF_xyzb_v_coupled_moving.h

Outdated

Show resolved

Hide resolved

src/modules/landing_target_estimator/KF_xyzb_v_decoupled_moving.cpp

Outdated

Show resolved

Hide resolved

src/modules/landing_target_estimator/KF_xyzb_v_decoupled_moving.h

Outdated

Show resolved

Hide resolved

| @@ -74,7 +74,13 @@ void LoggedTopics::add_default_topics() | |||

| add_topic("input_rc", 500); | |||

| add_optional_topic("internal_combustion_engine_status", 10); | |||

| add_optional_topic("irlock_report", 1000); | |||

| add_optional_topic("landing_target_pose", 1000); | |||

| add_optional_topic("fiducial_marker_pos_report", 100); | |||

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Looking at the size of these individual messages, the logging rates might be too high for some systems.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Which values do you recommend @potaito ?

Yess @potaito because there are 6 different position filters (which I'm currently comparing). Don't worry, once I've compared them, I will post the results and we can decide on which ones to keep (one for moving targets and one for static targets). Here's an example where I've only kept the decoupled filters https://github.com/JonasPerolini/PX4-Autopilot/tree/pr-land-target-refractor-decoupled/src/modules/landing_target_estimator |

|

Hi @JonasPerolini, I'm jumping in. I need to warmup. Is it ok to discuss the algorithm here? For the precision landing, is it worth combining very accurate variables with variables with big error? Does the EKF perfectly filter out noisy variables? For example, a GPS or positioning system can have Of course, there is more latency, but I believe that vision is only used for adjusting the EKF model. My scope is fast smooth precision landing on moving targets with initially an error of max r = |

|

Hi @kripper I will upload a detailed update early next week which will clarify the implementation and the reasoning behind it. (Also removing half of the filters making the code much more understandable). The main goal here is to be robust to sensor failures by using redundant observations. (We estimate the GNSS bias from the vision sensor which is indeed more accurate). Nevertheless, there is a parameter to enable/disable the fusion of the different measurements. If your target is moving and you don't have a GNSS on it, you can solely rely on vison (and drone velocity). Note that to land on moving targets it is best to anticipate where the target will be using the states of the filter e.g. |

|

Great! I would love to see this update and play with the code. Yesterday I was checking the implemented KF that uses acceleration + visual pose. Ok, I thought maybe in the case of moving targets, the absolute measurement variables (GNSS, IMU, etc) could be useful to better distinguish the motion of the drone vs the target. I thought (based on your nice graphs) that maybe the averages of the GNSS (law of large numbers) somehow contribute with accurate absolute measurements to the relative vision estimation and that this could somehow help to better estimate the drone and target trajectories (even when we only need precise relative information). I like the fact that you fused all variables into a general estimator and that you can enable/disable them depending on the scenario. Will you completely disable the GPS during precision landing? My DJI drone seems to provide The other idea I had to address the visual delay, was to use an EKF with only visual pose estimation + "FC commands" (set_velocities or virtual stick commands). I saw a video of a guy hacking electronically the RC to save and replay the RC actions and the drone moved incredibly consistent comparing both videos side by side. Sorry if I'm still brainstorming at this point. I will converge soon :-) |

|

:-) I made some tests with my DJI Mini SE:

Thus, on our case, we will have to estimate the drone's position only based on set velocities and use the visual estimated pose to adjust the model. I believe your model was using acceleration, so we will adapt it for using velocities instead for our case. |

|

Hi @kripper I'm happy to discuss your specific case over in Discord (Jonas Perolini#5582), this PR is intended to run on a Pixhawk in which the acceleration input will be available. As mentioned, I will provide a big update early next week so if you plan on modifying the code, you should probably wait until then. |

|

I have been following this PR for quite a while now, great that you are thoroughly improving the system @JonasPerolini ! What is currently blocking this PR? Perhaps the MAVLink PR for the landing target message? |

Hi @JonasPerolini, I finally came up with this two algorithms based solely on the (delayed observations of) the relative position of the target: |

|

Hey @kripper |

…dy to nav frame in the LTEst

…nts with the drone's attitude

…n or target GPS), fix target gps velocity bug

… and targetAbosulte msgs)

4a358a9

to

4274a33

Compare

|

Can you comment on what's actually been tested here? |

|

Hi @dagar, sorry for the late answer. In simulation with particularly high measurement uncertainties (x10 the Gazebo default), I have tested all sensors except UWB for both static and moving targets. In real life, I have extensively tested (300+ flights) the static target filter for different tasks requiring precision. I'm fusing vision (640x480p, 10fps) + GNSS velocity + GNSS position from the mission. For example, I have been consistently using the KF for precision landing for the past months and it works great. I’m eager to get it merged. Note that I do have some improvements that I still want to port to this PR when I find the time. I have also tested the moving target filter (see video attached) but not as much (20 flights) and only with a small quadcopter. The filter works as expected, however, there is still work required to use its output for precision landing. E.g. for the video I’ve combined the filter’s position and velocity estimations to anticipate the location of the target. This is not implemented in the PR because I do not consider it stable and ready for customer use. It's rather a proof of concept for potential future use. Upload.from.GitHub.for.iOS.MOVAs discussed in Discord with @dagar @potaito and @bresch the next steps will be:

|

|

Is this branch destined for release? Sad to see it not implemented into 1.14.0. |

@rizvanovic to simplify the merging process, we've created a new PR in which we create a new target estimator instead of modifying the old one. #22008 The PR is almost ready, I'm now starting the testing campaign on a real drone. |

Goal: Extend the current landing target estimator to:

Use case

Main changes:

1.

LTEST_AID_MASK: chose which measurements to sequentially fuse3.

LTEST_MODE: chose between coupled and decoupled dynamics (trade off between speed and accuracy. Since we perform the fusion in vehicle-carried NED frame, the x,y,z directions are coupled)4.

LTEST_MODEL: chose the KF model between static and moving targets. (see documentation/KF_models.pdf)5.

LTEST_BTOUT: timeout after which the landing target is considered lost without any new position measurements. Before the filter times out, the prediction (obtained with the mathematical models described below) is used to estimate the target position.6. Estimate the full target pose (x,y,z,theta).

7. Extend the state to estimate the bias between the GPS unit on the drone and the GPS on the target (or the mission land pose).

8. Properly log observations and innovations (using

estimator_aid_source_3d.msg)9. Deal with time sync issues (useful for vision measurements which require image processing). To compute the innovation, the filter state is moved back in time to be synced to the measurement time of validity

10. Complete messages to include uncertainties

Mathematical models

1. Compensate for gravity in the acceleration

2. Publish the target using the prediction step if no measurement is available (until timeout is reached)

3. Use proper timestamp

TODO

- Reduce allocated stack (for now 6000)

- Stop the target estimator module once disarmed

- Complete the landing target MAVLINK message to include measurement covariance matrix

- Diagonalize the measurement matrix to properly use sequential update

- Get drone's acceleration uncertainty

- Orientation (the precision landing algorithm doesn't take the orientation into consideration yet).Define initial values && Complete MAVLINK landing target message to include theta

- Add (x,y,z) filters when no bias is required.

Structure overview:

Target estimator class:

--- target_estimator: Defines the decoupled target estimator class. Contains the virtual funct. that each KF model will inherit

--- target_estimator_coupled: same as target_estimator but for coupled dynamics

Mathematical model of the filters derived using SymForce (see documentation/Python derivation):

--- KalmanFilter

--- KFxyzCoupledMoving

--- KFxyzDecoupledMoving

--- KFxyzCoupledStatic

--- KFxyzDecoupledStatic

Main module: LandingTargetEstimator

1. Initializes the KF instances e.g. for a static target and decoupled dynamics: init 3 filters (for x,y,z directions) with the same KF model (KFxyzDecoupledStatic).

2. Sub to relevant topics to get the available measurements

3. Handles the Kalman filter sensor fusion (init, prediction and update step)

5. Publishes the landing target and innovations

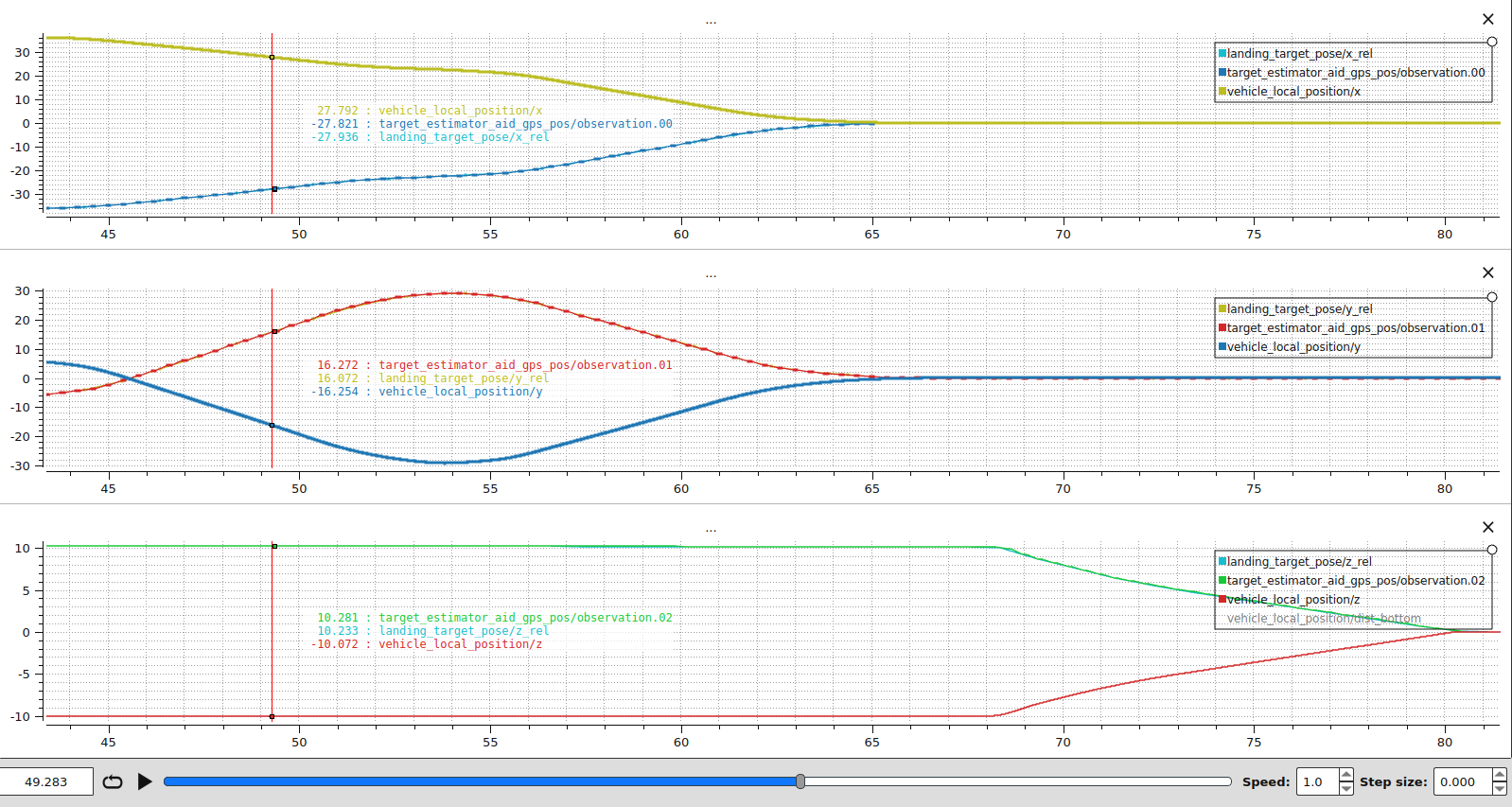

Preliminary results

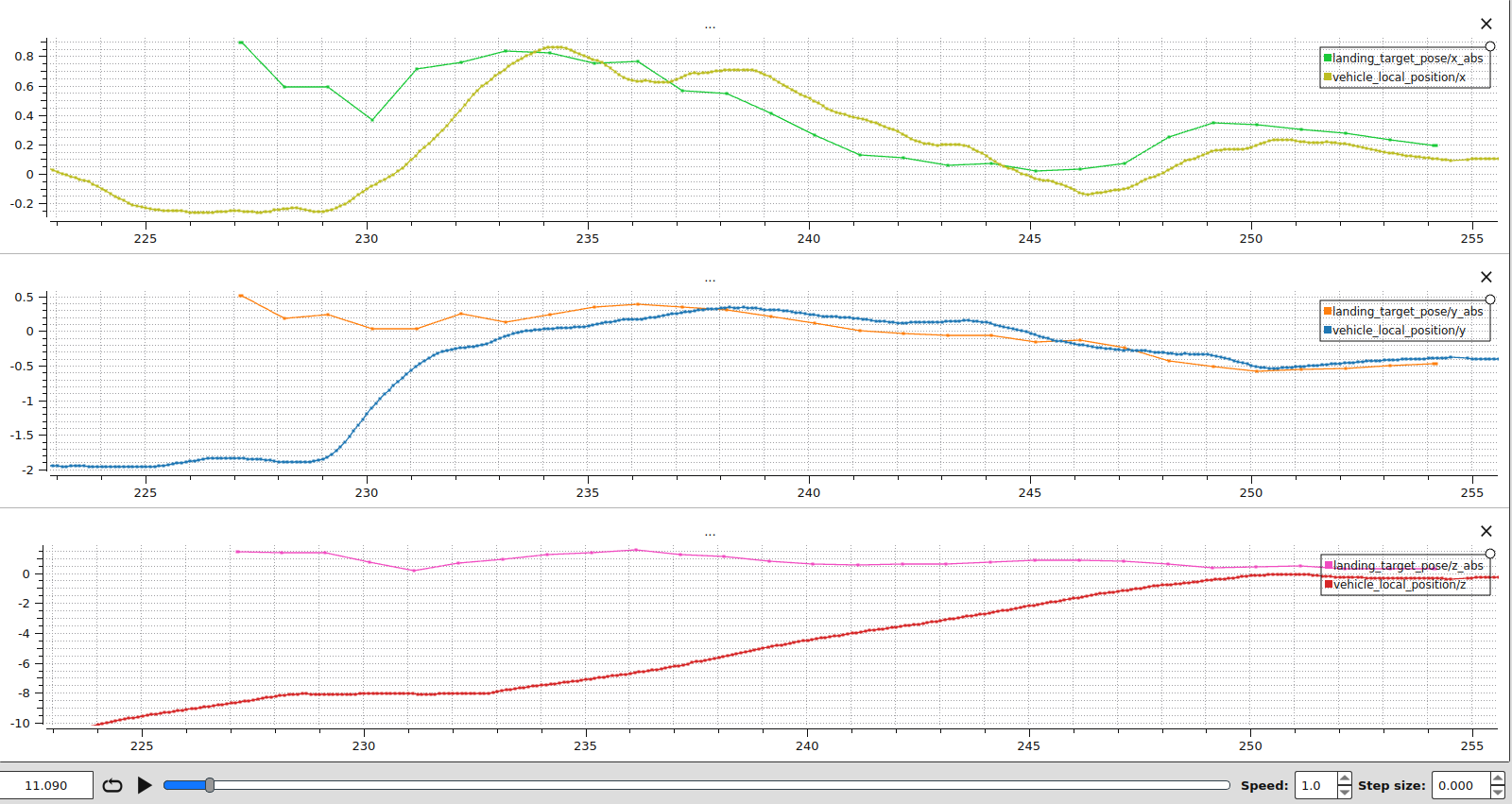

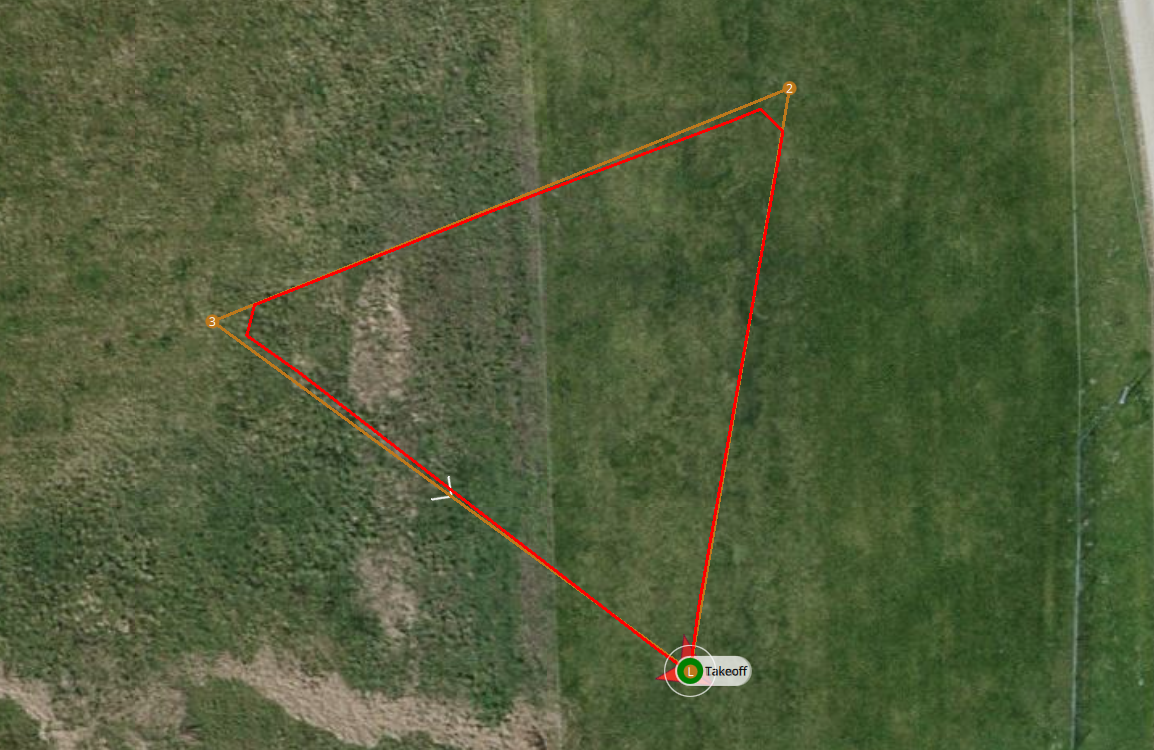

(0.8, 2.8, 7). The drone then corrects horizontally before descending above target and land.Flight_test_12m.mp4

The filter smooth-ens the target estimation (see top plot). It also allows to detect outliers in the vision measurements (most likely due to time sync issues) and still use the prediction model to properly estimate the target position.

Once again, the velocity estimation is much smoother than the observation.

The drone correctly navigated towards the target. Since the drone landed on the target (see video above) we can conclude that the target was correctly estimated.

CPU usage: the sensor fusion (matrix multiplication) starts once the next waypoint is of type land. As the fusion starts, we don't see any significant increase in CPU usage despite using coupled dynamics.

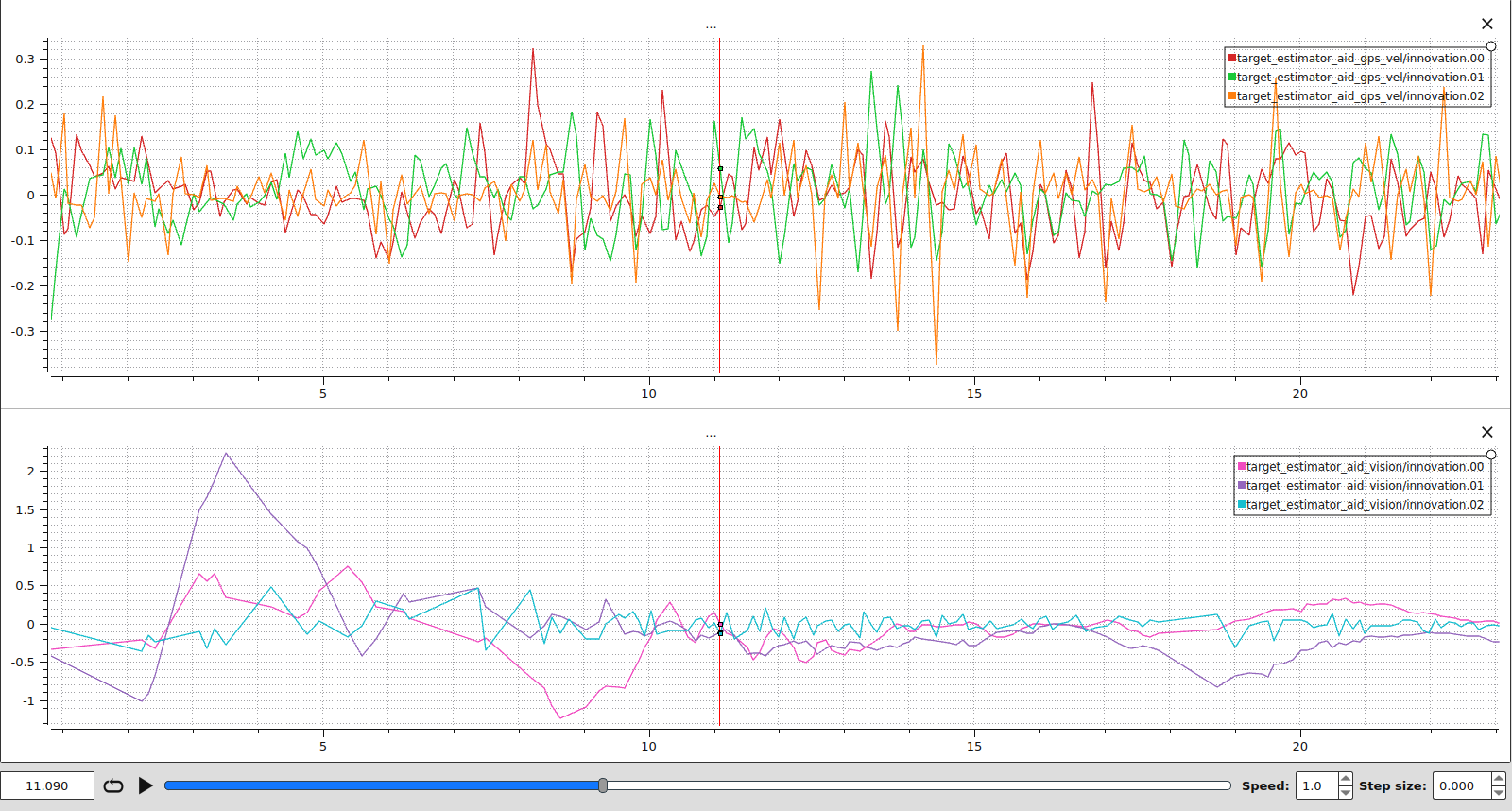

The innovations resemble white noise indicating good filter performances.

LTEST_BTOUT) and restarts. As the target is still visible, the drone is able to correct and land on the target.Flight_test_moved_correction.mp4

Flight_test_moved_no_correction.mp4

The drone takes off (origin of local frame) and lands at the same location, therefore we expect to see the local position of the drone and the local position of the target converge to zero.

The relative position to the target is obtained using the drone's GPS position and the mission position. This plot shows that the relative position is properly computed.

The target estimator timeout is set to 10 seconds i.e. the filter will estimate the target position using only the initial position and the velocity measurement during 10 seconds. In the plots we cannot determine where the target estimator times out / restarts showing that the KF model works well.

Note, if the gravity is not compensated, the prediction is not correct as shown in the bottom plot below.

It might be worth correcting the current implementation https://github.com/PX4/PX4-Autopilot/tree/main/src/modules/landing_target_estimator by adding: