The WaCoDiS Core Engine component provides core functionalities such as job scheduling, evaluation and execution.

Table of Contents

- WaCoDiS Project Information

- Overview

- Installation / Building Information

- User Guide

- Developer Information

- Contact

- Credits and Contributing Organizations

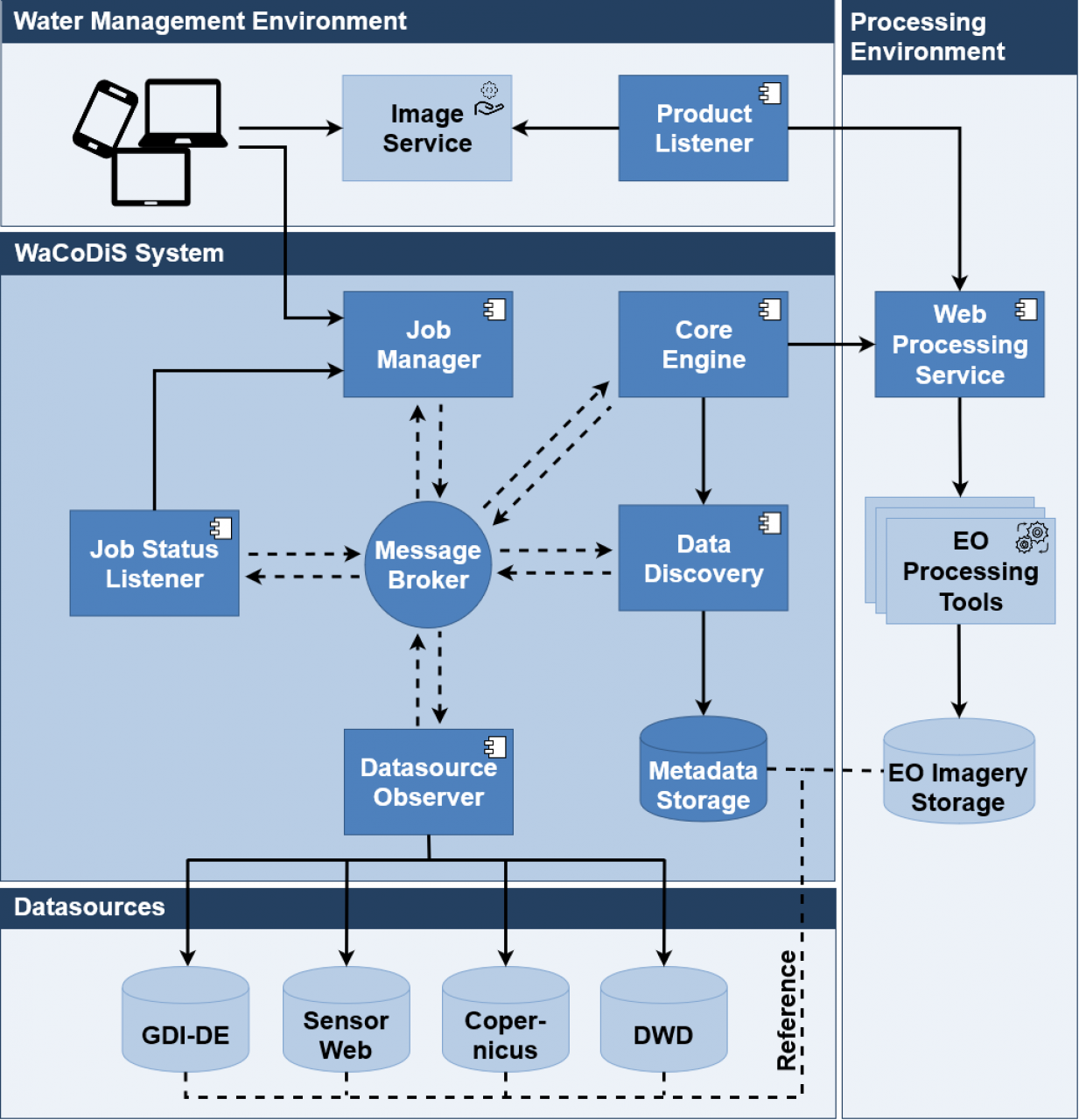

The WaCoDiS project aims to exploit the great potential of Earth Observation (EO) data (e.g. as provided by the Copernicus Programme) for the development of innovative water management analytics services and the improvement of hydrological models in order to optimize monitoring processes. Existing SDI-based geodata and in-situ data from sensors that monitor water bodies will be combined with Sentinel-1 and Sentinel-2 data. To this end, the WaCoDiS monitoring system is designed as a modular and extensible software architecture based on interoperable interfaces such as the Open Geospatial Consortium (OGC) Web Processing Service. This allows different EO processing algorithms to be integrated in a sustainable and flexible way. In addition, we consider architectural aspects such as publish/subscribe patterns and messaging protocols that increase the effectiveness of processing big EO data sets. Currently, the WaCoDiS monitoring system comprises the following components:

Job Manager

A REST API enables users to define job descriptions for planning the execution of analysis processes.

Core Engine

The Core Engine schedules jobs for planned process executions based on the job descriptions. In addition, it is responsible for triggering WPS processes as soon as all required process input data is available.

Datasource Observer

Several observing routines query specific data stores for newly available data, such as in-situ measurements, Copernicus satellite data, SDI-based geodata and services, or meteorological data that are required for process executions.

Data Discovery

Data Discovery comprises two components: the WaCoDiS Data Access API and the WaCoDiS Metadata Connector.

Metadata about all incoming, available datasets discovered by the Datasource Observer is handled and bundled by the WaCoDiS Data Access API. For the purpose of defining process inputs, the WaCoDiS Data Access API generates references to the required datasets from the metadata and provides these references to the Core Engine via a REST API. The WaCoDiS Data Access API uses an instance of the Elasticsearch search engine to store the metadata about all available datasets.

To provide an asynchronous publish/subscribe pattern, the WaCoDiS Metadata Connector listens for new datasets and then interacts with the REST API of the WaCoDiS Data Access API.

Web Processing Service

The execution of analysis processes provided by EO Tools is encapsulated by an OGC Web Processing Service (WPS), which provides a standardized interface for this purpose. To this end, a custom backend for the 52°North javaPS implementation provides specific preprocessing and execution features.

Product Listener

A Product Listener is notified as soon as any analysis process has finished and a new earth observation product is available. The component fetches the product from the WPS and routes it to one or more specific backends (e.g. GeoServer, ArcGIS Image Server) that provide a service for the user to access the product.

Product Importer

For each product service backend, a dedicated helper component imports the earth observation product into that backend's datastore and may set up a service on top of it. The Product Importer can be provided as part of the Product Listener or as an external component (e.g. a Python script for importing products into the ArcGIS Image Server).

Job Status Listener

The WaCoDiS Job Status Listener is an intermediate component that consumes messages (published by the Core Engine) on the execution progress of processing jobs. According to the job's progress, the Job Status Listener updates the status in the job's description by interacting with the REST API of the Job Manager.

The WaCoDiS monitoring system architecture is designed in a modular fashion and follows a publish/subscribe pattern. The different components are loosely connected to each other via messages that are passed through a message broker. Each module subscribes to messages of interest at the message broker. This approach enables an independent and asynchronous handling of specific events.
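To illustrate the loose coupling, here is a minimal in-memory sketch of topic-based publish/subscribe in Java. It is not how WaCoDiS is implemented (the system uses RabbitMQ via Spring Cloud Stream); the class names `InMemoryBroker` and `PubSubDemo` are hypothetical, and the topic name is merely the example destination from the configuration section.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

/** Hypothetical in-memory stand-in for a message broker that routes messages by topic. */
class InMemoryBroker {
    private final Map<String, List<Consumer<String>>> subscribers = new HashMap<>();

    /** Registers a handler for all messages later published to the given topic. */
    void subscribe(String topic, Consumer<String> handler) {
        subscribers.computeIfAbsent(topic, t -> new ArrayList<>()).add(handler);
    }

    /** Delivers the message to every subscriber of the topic and returns the number of deliveries. */
    int publish(String topic, String message) {
        List<Consumer<String>> handlers =
                subscribers.getOrDefault(topic, Collections.<Consumer<String>>emptyList());
        for (Consumer<String> handler : handlers) {
            handler.accept(message);
        }
        return handlers.size();
    }
}

class PubSubDemo {
    public static void main(String[] args) {
        InMemoryBroker broker = new InMemoryBroker();
        // A consumer (e.g. the job evaluation) subscribes to the topic it is interested in...
        broker.subscribe("wacodis.test.jobs.data.accessible",
                msg -> System.out.println("evaluator received: " + msg));
        // ...and a producer publishes without knowing who is listening.
        broker.publish("wacodis.test.jobs.data.accessible", "new dataset available");
        // prints "evaluator received: new dataset available"
    }
}
```

Neither side references the other directly; they only share a topic name, which is what allows components to be added or replaced independently.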

The messages exchanged via the message broker follow a domain model that has been defined using the OpenAPI specification. You can find these definitions and other documentation in the apis-and-workflows repo.

The WaCoDiS Core Engine is the system component that implements the core processing workflow. This processing workflow comprises the following three steps:

- scheduling of processing jobs (job scheduling)

- evaluation of whether all necessary input data is available to start a processing job (job evaluation)

- initiation of the execution of the processing job by sending a request to the processing environment, i.e. the web processing service (job execution)

The Core Engine is also responsible for keeping other WaCoDiS components updated about the progress of processing jobs. The Core Engine publishes messages via the system's message broker if a processing job is started, fails or is executed successfully.
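The three steps and the progress messages can be sketched as follows. This is a simplified, hypothetical illustration (`WorkflowSketch` and `JobEvent` are not part of the Core Engine's code): evaluation and execution are passed in as functions, and publishing a message via the broker is represented by a plain callback.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.BooleanSupplier;
import java.util.function.Consumer;

/** Hypothetical progress events: job started, failed or executed successfully. */
enum JobEvent { STARTED, FAILED, FINISHED }

/** Minimal sketch of one scheduled run: evaluate, then execute, publishing progress events. */
class WorkflowSketch {
    /**
     * @param inputsAvailable job evaluation, true if all required inputs are available
     * @param execution       job execution, returns true on success and false on failure
     * @param publisher       stands in for publishing a message via the message broker
     * @return true if the job was executed (successfully or not), false if it has to wait
     */
    static boolean runScheduledJob(BooleanSupplier inputsAvailable,
                                   BooleanSupplier execution,
                                   Consumer<JobEvent> publisher) {
        if (!inputsAvailable.getAsBoolean()) {
            return false; // job keeps waiting until new data triggers a re-evaluation
        }
        publisher.accept(JobEvent.STARTED);
        boolean ok;
        try {
            ok = execution.getAsBoolean();
        } catch (RuntimeException e) {
            ok = false; // treat unexpected errors as a failed execution
        }
        publisher.accept(ok ? JobEvent.FINISHED : JobEvent.FAILED);
        return true;
    }

    public static void main(String[] args) {
        List<JobEvent> events = new ArrayList<>();
        runScheduledJob(() -> true, () -> true, events::add);
        System.out.println(events); // [STARTED, FINISHED]
    }
}
```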

- Job

A WacodisJobDefinition (Job) describes a processing task that is to be executed automatically according to a defined schedule. Among other attributes, the WacodisJobDefinition contains the input data required for execution as well as the time frame and area of interest.

- DataEnvelope

The metadata about an existing dataset is described by the AbstractDataEnvelope (DataEnvelope) data format. There are different subtypes for different data sources (e.g. SensorWebDataEnvelope or CopernicusDataEnvelope).

- Resource

Access to the actual data records is described by the AbstractResource (Resource) data format. There are two subtypes, GetResource and PostResource. A GetResource contains only a URL, while a PostResource contains a URL and a body for an HTTP POST request.

- SubsetDefinition

The required inputs of a job are described by the AbstractSubsetDefinition (SubsetDefinition) data format. There are different subtypes for different types of input data (e.g. SensorWebSubsetDefinition or CopernicusSubsetDefinition). Usually, each subtype of AbstractSubsetDefinition has a corresponding subtype of AbstractDataEnvelope.
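The pairing between SubsetDefinition and DataEnvelope subtypes can be illustrated with a strongly simplified Java sketch. The classes below only mimic the names of the OpenAPI-generated model and carry no attributes; `ModelCorrespondence` and its lookup table are hypothetical.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical, attribute-free stand-ins for the generated model classes.
abstract class AbstractDataEnvelope { }
class CopernicusDataEnvelope extends AbstractDataEnvelope { }
class SensorWebDataEnvelope extends AbstractDataEnvelope { }

abstract class AbstractSubsetDefinition { }
class CopernicusSubsetDefinition extends AbstractSubsetDefinition { }
class SensorWebSubsetDefinition extends AbstractSubsetDefinition { }

class ModelCorrespondence {
    // Each SubsetDefinition subtype is paired with the DataEnvelope subtype that can satisfy it.
    private static final Map<Class<? extends AbstractSubsetDefinition>,
            Class<? extends AbstractDataEnvelope>> PAIRS = new HashMap<>();

    static {
        PAIRS.put(CopernicusSubsetDefinition.class, CopernicusDataEnvelope.class);
        PAIRS.put(SensorWebSubsetDefinition.class, SensorWebDataEnvelope.class);
    }

    /** True if the envelope's type corresponds to the type of the subset definition. */
    static boolean sameSourceType(AbstractSubsetDefinition subset, AbstractDataEnvelope envelope) {
        Class<? extends AbstractDataEnvelope> expected = PAIRS.get(subset.getClass());
        return expected != null && expected.isInstance(envelope);
    }

    public static void main(String[] args) {
        System.out.println(sameSourceType(new CopernicusSubsetDefinition(), new CopernicusDataEnvelope())); // true
        System.out.println(sameSourceType(new CopernicusSubsetDefinition(), new SensorWebDataEnvelope()));  // false
    }
}
```

A type check like this only tells whether a dataset could be relevant for an input; the real model additionally compares attributes such as time frame and area of interest.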

The formal definition of these data types is done with OpenAPI and is available in the apis-and-workflows repo.

The WaCoDiS Core Engine comprises the following Maven modules:

- WaCoDiS Core Engine Data Models

This module contains Java classes that reflect the basic data model, including the data types specified with OpenAPI in the WaCoDiS apis-and-workflows repository. All model classes were generated by the OpenAPI Generator, which is integrated into this module as a Maven plugin.

- WaCoDiS Core Engine Job Scheduling

This module is responsible for initiating the evaluation (and execution) of a processing job according to the cron pattern provided in the job's definition (WacodisJobDefinition). The scheduling is implemented with the Quartz scheduling library.

- WaCoDiS Core Engine Evaluator

This module is responsible for checking whether a scheduled job is actually executable, i.e. whether all necessary input data is available. If not, the evaluator waits until new datasets become available and then re-evaluates whether the processing job is executable.

- WaCoDiS Core Engine Executor

If a processing job is executable, the executor module is responsible for assembling an execution request (containing all input parameters) and submitting this request to the processing environment. The Core Engine Executor keeps track of the job's execution progress and publishes messages via the system's message broker when a processing job has been executed successfully or processing has failed.

- WaCoDiS Core Engine App

Since WaCoDiS Core Engine is implemented as a Spring Boot application, the App module provides the application runner as well as default externalized configurations.

- WaCoDiS Core Engine Utils

The Core Engine Utils module contains classes that implement routines used in several of the above modules.
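The core check of the Evaluator module can be sketched as below. The sketch assumes that each required input is identified by a string and that a lookup (for example a search against the Data Access API) has already returned the matching resources per input; `ExecutabilityCheck` and the input identifiers are hypothetical.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Minimal sketch of the evaluator's executability check (hypothetical names, not the module's API). */
class ExecutabilityCheck {
    /**
     * @param requiredInputs identifiers of the job's inputs (one per SubsetDefinition)
     * @param foundResources resources found for each input, e.g. URLs of GetResources
     * @return true only if every required input has at least one resource
     */
    static boolean isExecutable(List<String> requiredInputs, Map<String, List<String>> foundResources) {
        for (String input : requiredInputs) {
            List<String> resources = foundResources.getOrDefault(input, Collections.<String>emptyList());
            if (resources.isEmpty()) {
                return false; // at least one input is missing, so the job has to wait for new data
            }
        }
        return true;
    }

    public static void main(String[] args) {
        Map<String, List<String>> found = new LinkedHashMap<>();
        found.put("optical-images", Arrays.asList("https://example.org/scene-1"));
        found.put("precipitation", Collections.<String>emptyList());
        System.out.println(isExecutable(Arrays.asList("optical-images", "precipitation"), found)); // false
    }
}
```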

- Java

WaCoDiS Core Engine is tested with Oracle JDK 8 and OpenJDK 8. Unless stated otherwise, later Java versions can be used as well.

- Maven

This project uses the build-management tool Apache Maven.

- Spring Boot

WaCoDiS Core Engine is a standalone application built with the Spring Boot framework. Therefore, it is not necessary to deploy WaCoDiS Core Engine manually on a web server.

- Spring Cloud

Spring Cloud is used to exploit some ready-to-use features for implementing an event-driven workflow. In particular, Spring Cloud Stream is used for subscribing to asynchronous messages within the WaCoDiS system.

- RabbitMQ

The message broker RabbitMQ is used for communication with the other components of the WaCoDiS system. RabbitMQ is not part of WaCoDiS Core Engine and therefore must be deployed separately.

- OpenAPI

OpenAPI is used for the specification of the data models used within this project.

- Quartz

Quartz is a Java library for executing recurring tasks, e.g. based on cron expressions (crontab). Quartz is used for scheduling the processing jobs.

In order to build the WaCoDiS Core Engine from source, a Java Development Kit (JDK) must be available. Currently, WaCoDiS Core Engine (or its dependencies) can only be built with JDK 8. There is no guarantee that the build process works with other Java versions. Since this is a Maven project, Apache Maven must be available for building it.

Before you build the Core Engine project, it is necessary to clone and build the WaCoDiS fork of the 52°North WPS Client Lib.

- Clone the project:

git clone https://github.com/WaCoDiS/wps-client-lib.git

- Run mvn clean install in the WPS Client Lib project's root folder to build the project and install the artifacts in your local Maven repository.

As mentioned above, the build process of the WPS Client Lib might fail with Java versions other than JDK 8.

After you have built the WPS Client Lib project, you can build the Core Engine project by running mvn clean install from the Core Engine root directory.

The project contains a Dockerfile for building a Docker image. Simply run docker build -t wacodis/core-engine:latest . in order to build the image. You will find detailed information about running the Core Engine as a Docker container in the deployment section.

This section describes deployment scenarios, options and preconditions.

- (without using Docker) In order to run WaCoDiS Core Engine, a Java Runtime Environment (JRE) (version >= 8) must be available. In order to build Core Engine from source, a Java Development Kit (JDK) must be available (see the build requirements above). Core Engine is tested with Oracle JDK 8 and OpenJDK 8.

- In order to receive messages about newly available datasets (job evaluation) and to publish messages about processing progress (job execution), a running instance of the RabbitMQ message broker must be available.

- A running instance of WaCoDiS Job Manager must be available because during the job scheduling the Core Engine retrieves detailed job information by consuming the Job Manager's REST API.

The server addresses are configurable.

- If configuration should be fetched from Configuration Server a running instance of WaCoDiS Config Server must be available.

Just start the application by running mvn spring-boot:run from the root of the core-engine-app module. Make sure you have installed all dependencies with mvn clean install from the project root.

- Build Docker Image from Dockerfile that resides in the project's root folder.

- Run created Docker Image.

Alternatively, the latest available Docker image (automatically built from the master branch) can be pulled from Docker Hub. See the WaCoDiS Docker repository for pre-configured Docker Compose files to run WaCoDiS system components and backend services (RabbitMQ and Elasticsearch).

Configuration is fetched from the WaCoDiS Config Server. If the Config Server is not available, the configuration values located at src/main/resources/application.yml within the Core Engine App submodule are applied instead.

The following section contains descriptions for configuration parameters structured by configuration section.

configuration of message channel for receiving messages on newly available datasets

| value | description | note |
|---|---|---|
| destination | topic used to receive messages on newly available datasets, must be aligned with Data Access API config | e.g. wacodis.test.jobs.data.accessible |
| binder | defines the binder (message broker) | |
| content-type | content type of DataEnvelope acknowledgement messages (MIME type) | should always be application/json |

configuration of message channel for receiving messages when a new job is created

| value | description | note |
|---|---|---|
| destination | topic to which messages about created WaCoDiS jobs are published | e.g. wacodis.test.jobs.new |
| binder | defines the binder (message broker) | |
| content-type | content type of the messages | should always be application/json |

configuration of message channel for receiving messages when an existing job is deleted

| value | description | note |
|---|---|---|
| destination | topic to which messages about deleted WaCoDiS jobs are published | e.g. wacodis.test.jobs.deleted |

configuration of message channel for publishing messages on successfully executed processing jobs

| value | description | note |
|---|---|---|
| destination | topic used to publish messages about successfully executed processing jobs | e.g. wacodis.test.tools.finished |

configuration of message channel for publishing messages when a processing job is started (submitted to the processing environment)

| value | description | note |
|---|---|---|
| destination | topic used to publish messages about started processing jobs | e.g. wacodis.test.tools.execute |

parameters related to the WaCoDiS message broker

| value | description | note |
|---|---|---|
| host | RabbitMQ host (WaCoDiS message broker) | e.g. localhost |
| port | RabbitMQ port (WaCoDiS message broker) | e.g. 5672 |
| username | RabbitMQ username (WaCoDiS message broker) | |
| password | RabbitMQ password (WaCoDiS message broker) | |

configuration parameters related to the job scheduling module

| value | description | note |
|---|---|---|
| timezone | time zone used for job scheduling | e.g. Europe/Berlin |
| jobrepository/uri | base URL of the required WaCoDiS Job Manager instance | e.g. http://localhost:8081 |
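Because jobs are scheduled by wall-clock time, the `timezone` setting determines the instant at which a run actually fires. The following sketch uses `java.time` rather than Quartz to compute the next run of a hypothetical daily job at 02:00; `DailyTrigger` is an illustrative name and not part of the Core Engine.

```java
import java.time.LocalTime;
import java.time.ZoneId;
import java.time.ZoneOffset;
import java.time.ZonedDateTime;

/** Illustrative helper (not Quartz): next run of a job that fires daily at a fixed local time. */
class DailyTrigger {
    /** Returns the next occurrence of 'at' in 'zone' that lies strictly after 'now'. */
    static ZonedDateTime nextRun(ZonedDateTime now, LocalTime at, ZoneId zone) {
        ZonedDateTime local = now.withZoneSameInstant(zone);
        ZonedDateTime candidate = ZonedDateTime.of(local.toLocalDate(), at, zone);
        if (!candidate.isAfter(local)) {
            // today's slot has already passed in this zone, so use tomorrow's
            candidate = ZonedDateTime.of(local.toLocalDate().plusDays(1), at, zone);
        }
        return candidate;
    }

    public static void main(String[] args) {
        ZonedDateTime now = ZonedDateTime.of(2020, 6, 1, 23, 30, 0, 0, ZoneOffset.UTC);
        LocalTime twoAm = LocalTime.of(2, 0);
        // Same instant, same time of day, different scheduling time zones:
        System.out.println(nextRun(now, twoAm, ZoneId.of("Europe/Berlin")).toInstant()); // 2020-06-02T00:00:00Z
        System.out.println(nextRun(now, twoAm, ZoneOffset.UTC).toInstant());             // 2020-06-02T02:00:00Z
    }
}
```

The same "daily at 02:00" schedule fires two hours apart depending on the configured zone (during summer time), which is why the zone should match the one the job definitions assume.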

configuration parameters related to the job evaluation module

| value | description | note |
|---|---|---|
| matching/preselectCandidates | if true, a waiting processing job (missing input data) is only re-evaluated if a new dataset with a matching source type becomes available | boolean; if set to false, requests to the Data Access API will increase because every new dataset triggers a re-evaluation of every waiting job |
| dataaccess/uri | URL of the resources/search endpoint of the required Data Access API instance | e.g. http://localhost:8082/resources/search |
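The effect of `matching/preselectCandidates` can be sketched as a simple filter over waiting jobs. The sketch assumes that each waiting job knows the source types of its required inputs; `WaitingJob`, `CandidatePreselection` and the source type labels are hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

/** Hypothetical waiting job: an id plus the source types of its required inputs. */
class WaitingJob {
    final String id;
    final Set<String> requiredSourceTypes;

    WaitingJob(String id, String... sourceTypes) {
        this.id = id;
        this.requiredSourceTypes = new HashSet<>(Arrays.asList(sourceTypes));
    }
}

class CandidatePreselection {
    /**
     * Returns the ids of waiting jobs to re-evaluate when a dataset of the given source type arrives.
     * With preselectCandidates == false, every waiting job is re-evaluated.
     */
    static List<String> jobsToReevaluate(List<WaitingJob> waiting, String newSourceType,
                                         boolean preselectCandidates) {
        List<String> result = new ArrayList<>();
        for (WaitingJob job : waiting) {
            if (!preselectCandidates || job.requiredSourceTypes.contains(newSourceType)) {
                result.add(job.id);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        List<WaitingJob> waiting = Arrays.asList(
                new WaitingJob("job-1", "Copernicus"),
                new WaitingJob("job-2", "SensorWeb"));
        System.out.println(jobsToReevaluate(waiting, "SensorWeb", true));  // [job-2]
        System.out.println(jobsToReevaluate(waiting, "SensorWeb", false)); // [job-1, job-2]
    }
}
```

Each re-evaluated job typically costs at least one request to the Data Access API, so the filter directly reduces that load.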

configuration parameters related to the job executor module, specifically the interaction with the web processing service (WPS, processing environment)

| value | description | note |
|---|---|---|
| uri | base URL of the WaCoDiS (OGC) WPS | e.g. http://localhost:8083/wps |
| version | version of the OGC WPS standard that is implemented by the WaCoDiS WPS | e.g. 2.0.0 |
| defaultResourceMimeType | (optional) MIME type applied to every input resource | default is text/xml |
| defaultResourceSchema/name | (optional) schema name applied to every input resource | default is GML3; always provide both name and schemaLocation |
| defaultResourceSchema/schemaLocation | (optional) schema location applied to every input resource | default is http://schemas.opengis.net/gml/3.1.1/base/feature.xsd |
| maxParallelWPSProcessPerJob | maximum number of parallel WPS processes started per WaCoDiS processing job | only applicable if the WaCoDiS job is split into multiple WPS processes (see WacodisJobDefinition.executionSettings.executionMode), e.g. 3 |
| processInputsDelayed | if true, the start of multiple WPS processes per WaCoDiS processing job is delayed | only applicable if the WaCoDiS job is split into multiple WPS processes (see WacodisJobDefinition.executionSettings.executionMode), boolean, e.g. false |
| initialDelay_Milliseconds | delay before the first WPS process is started | applicable if processInputsDelayed is true, e.g. 0 |
| delay_Milliseconds | delay between the starts of separate WPS processes | applicable if processInputsDelayed is true, e.g. 900000 |
| delay_Milliseconds | if true, multiple WPS processes per WaCoDiS processing job are processed sequentially | overrides maxParallelWPSProcessPerJob (effectively the same as maxParallelWPSProcessPerJob = 1), only applicable if the WaCoDiS job is split into multiple WPS processes (see WacodisJobDefinition.executionSettings.executionMode), boolean |
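The interplay of the parallelism and delay settings can be sketched with a `ScheduledThreadPoolExecutor` whose fixed pool size caps concurrency. This assumes that `delay_Milliseconds` is a fixed gap between consecutive process starts; the class `WpsExecutionSettings` and its field names are hypothetical and only mirror the configuration keys above (`sequentialProcessing` stands for the boolean sequential-processing option listed last).

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ScheduledThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

/** Hypothetical holder for the executor settings; defaults follow the example values above. */
class WpsExecutionSettings {
    int maxParallelWPSProcessPerJob = 3;
    boolean processInputsDelayed = false;
    long initialDelayMillis = 0;
    long delayMillis = 900_000;
    boolean sequentialProcessing = false;

    /** Sequential processing overrides the parallelism limit (same as a limit of 1). */
    int effectiveParallelism() {
        return sequentialProcessing ? 1 : Math.max(1, maxParallelWPSProcessPerJob);
    }

    /** Start offset in ms of each of n WPS processes, assuming a fixed gap between starts. */
    List<Long> startOffsets(int n) {
        List<Long> offsets = new ArrayList<>();
        for (int i = 0; i < n; i++) {
            offsets.add(processInputsDelayed ? initialDelayMillis + i * delayMillis : 0L);
        }
        return offsets;
    }

    /** Schedules the processes; the fixed pool size caps how many of them run concurrently. */
    ScheduledThreadPoolExecutor submit(List<Runnable> wpsProcesses) {
        ScheduledThreadPoolExecutor pool = new ScheduledThreadPoolExecutor(effectiveParallelism());
        List<Long> offsets = startOffsets(wpsProcesses.size());
        for (int i = 0; i < wpsProcesses.size(); i++) {
            pool.schedule(wpsProcesses.get(i), offsets.get(i), TimeUnit.MILLISECONDS);
        }
        pool.shutdown(); // by default, already scheduled delayed tasks still run after shutdown
        return pool;
    }

    public static void main(String[] args) {
        WpsExecutionSettings settings = new WpsExecutionSettings();
        settings.processInputsDelayed = true;
        System.out.println(settings.startOffsets(3)); // [0, 900000, 1800000]
    }
}
```

If a process runs longer than the gap and all pool threads are busy, the next process starts only when a thread becomes free, so the delay acts as a minimum rather than an exact start time.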

Per default, the Quartz scheduler uses an in-memory job store. In order to configure a JDBC-based store, Core Engine provides a DataSource bean which can be configured using spring.datasource.core.quartz-data-source. To configure the DataSource, just follow the Spring Boot guide.

Quartz scheduler related beans are provided using spring-boot-starter-quartz. Hence, the scheduler can be configured via externalized configuration using spring.quartz.properties. Just set the usual Quartz configuration properties.

WaCoDiS Core Engine and WaCoDiS Data Access API must be modified if new types of DataEnvelope or SubsetDefinition are added to the WaCoDiS schemas, in order to support the newly introduced data types. See the Wiki for further information.

The Core Engine Executor is currently only able to submit GetResources to the WaCoDiS processing environment; it cannot handle PostResources yet.

The master branch provides sources for stable builds. The develop branch represents the latest (maybe unstable) state of development.

Apache License, Version 2.0

| Name | Organization | GitHub |
|---|---|---|
| Arne Vogt | 52° North GmbH | arnevogt |
| Sebastian Drost | 52° North GmbH | SebaDro |
| Matthes Rieke | 52° North GmbH | matthesrieke |

The WaCoDiS project is maintained by 52°North GmbH. If you have any questions about this or any other repository related to WaCoDiS, please contact wacodis-info@52north.org.

- Department of Geodesy, Bochum University of Applied Sciences, Bochum

- 52° North Initiative for Geospatial Open Source Software GmbH, Münster

- Wupperverband, Wuppertal

- EFTAS Fernerkundung Technologietransfer GmbH, Münster

The research project WaCoDiS is funded by the BMVI as part of the mFund programme.