In recent years, tremendous progress has been made in 3D machine learning, an interdisciplinary field that fuses computer vision, computer graphics, and machine learning. This repo is derived from my study notes and will be used as a place for triaging new research papers.

I'll use the following icons to differentiate 3D representations:

- 📷 Multi-view Images

- 👾 Volumetric

- 🎲 Point Cloud

- 💎 Polygonal Mesh

- 💊 Primitive-based
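
As a rough illustration of how four of these representations differ as data, here is a minimal pure-Python sketch. Everything in it is an assumption made for illustration: the `voxelize` helper, the unit-cube bounds, the resolution of 4, and the toy tetrahedron are not taken from any paper or dataset in this list. Primitive-based representations are left out.

```python
# Illustrative only: toy containers for four of the representations listed above.
# The helper name, the unit-cube bounds, and the resolution are assumptions.

def voxelize(points, resolution):
    """Turn points inside the unit cube [0, 1)^3 into a dense occupancy grid."""
    grid = [[[0] * resolution for _ in range(resolution)] for _ in range(resolution)]
    for x, y, z in points:
        # Map each coordinate to a cell index, clamping to the last cell.
        i, j, k = (min(int(c * resolution), resolution - 1) for c in (x, y, z))
        grid[i][j][k] = 1
    return grid

# Point cloud: an unordered list of (x, y, z) samples.
point_cloud = [(0.1, 0.1, 0.1), (0.9, 0.1, 0.1), (0.1, 0.9, 0.1), (0.1, 0.1, 0.9)]

# Polygonal mesh: vertices plus faces that index into them (a tetrahedron here).
mesh_vertices = point_cloud
mesh_faces = [(0, 1, 2), (0, 1, 3), (0, 2, 3), (1, 2, 3)]

# Volumetric: a dense 3D occupancy grid built from the same points.
voxels = voxelize(point_cloud, resolution=4)
occupied = sum(cell for plane in voxels for row in plane for cell in row)

# Multi-view images: one 2D image per camera; here, a single silhouette
# obtained by projecting the grid along the z axis.
silhouette = [[max(voxels[i][j]) for j in range(4)] for i in range(4)]
```

The same four points occupy four voxels but only three silhouette pixels, because two of them fall on the same ray along z. That loss of information under projection is one reason the representation matters when comparing the methods below.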

To make this a collaborative project, you may add content through pull requests or open an issue to let me know.

Courses

Stanford CS468: Machine Learning for 3D Data (Spring 2017)

MIT 6.838: Shape Analysis (Spring 2017)

Princeton COS 526: Advanced Computer Graphics (Fall 2010)

Princeton CS597: Geometric Modeling and Analysis (Fall 2003)

Paper Collection for 3D Understanding

- Datasets

- Single Object Classification

- Multiple Objects Detection

- Scene/Object Semantic Segmentation

- 3D Synthesis/Reconstruction

- Style Transfer

- Scene Synthesis

- Scene Understanding

Datasets

To see a survey of RGBD datasets, check out Michael Firman's collection as well as the associated paper, RGBD Datasets: Past, Present and Future. Point Cloud Library also has a good dataset catalogue.

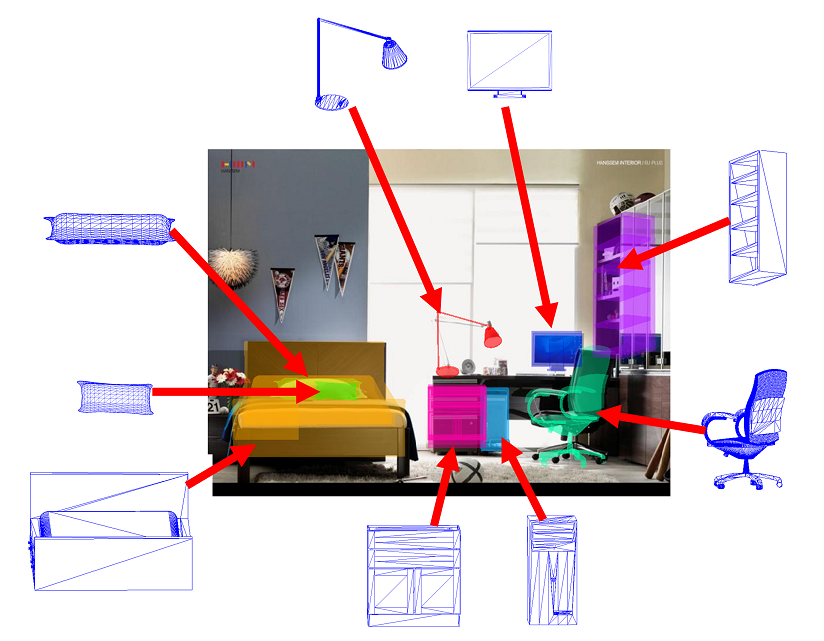

Dataset for IKEA 3D models and aligned images (2013) [Link]

759 images and 219 models including Sketchup (skp) and Wavefront (obj) files.

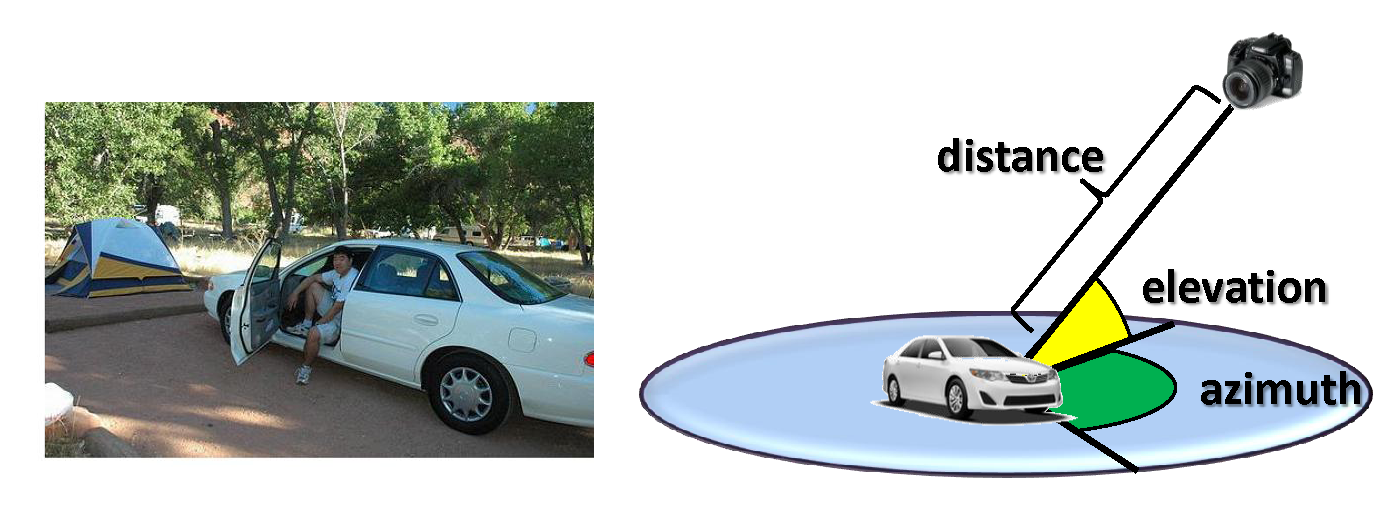

PASCAL3D+ (2014) [Link]

12 categories, on average 3k+ objects per category, for 3D object detection and pose estimation.

SHREC 2014 - Large Scale Comprehensive 3D Shape Retrieval (2014) [Link][Paper]

8,987 models, categorized into 171 classes for shape retrieval.

ModelNet (2015) [Link]

127,915 3D CAD models from 662 categories.

ModelNet10: 4,899 models from 10 categories.

ModelNet40: 12,311 models from 40 categories, all uniformly oriented.

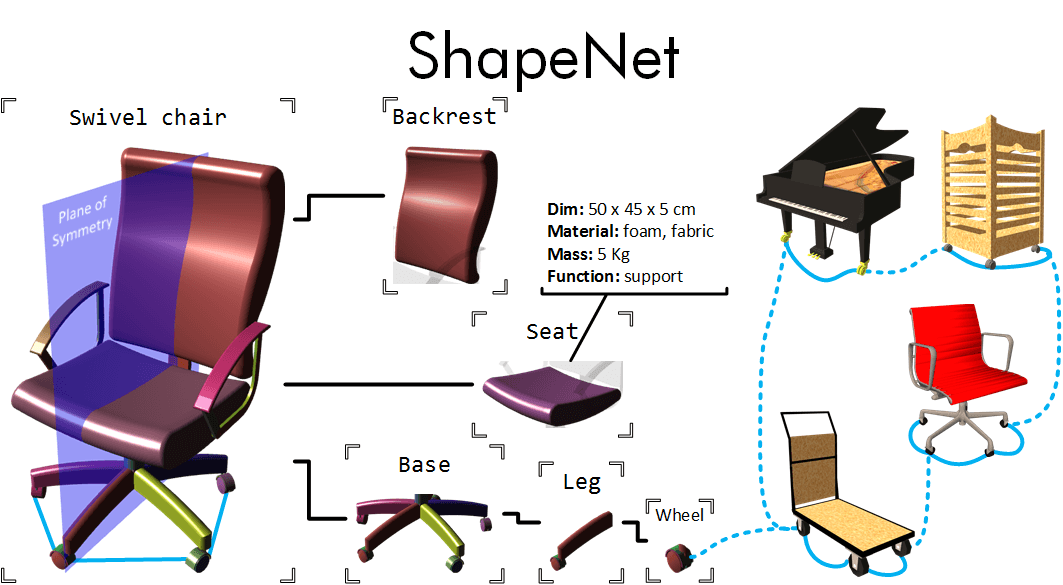

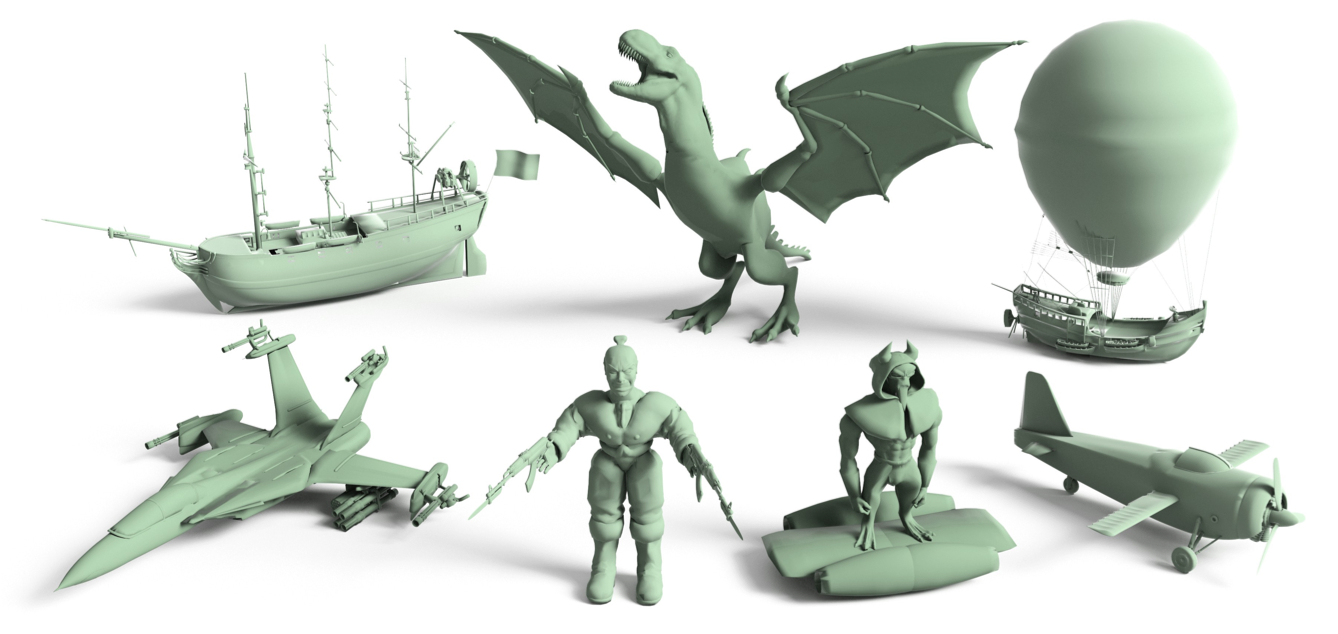

ShapeNet (2015) [Link]

3 million+ models and 4K+ categories. A dataset that is large in scale, well organized and richly annotated.

ShapeNetCore [Link]: 51,300 models in 55 categories.

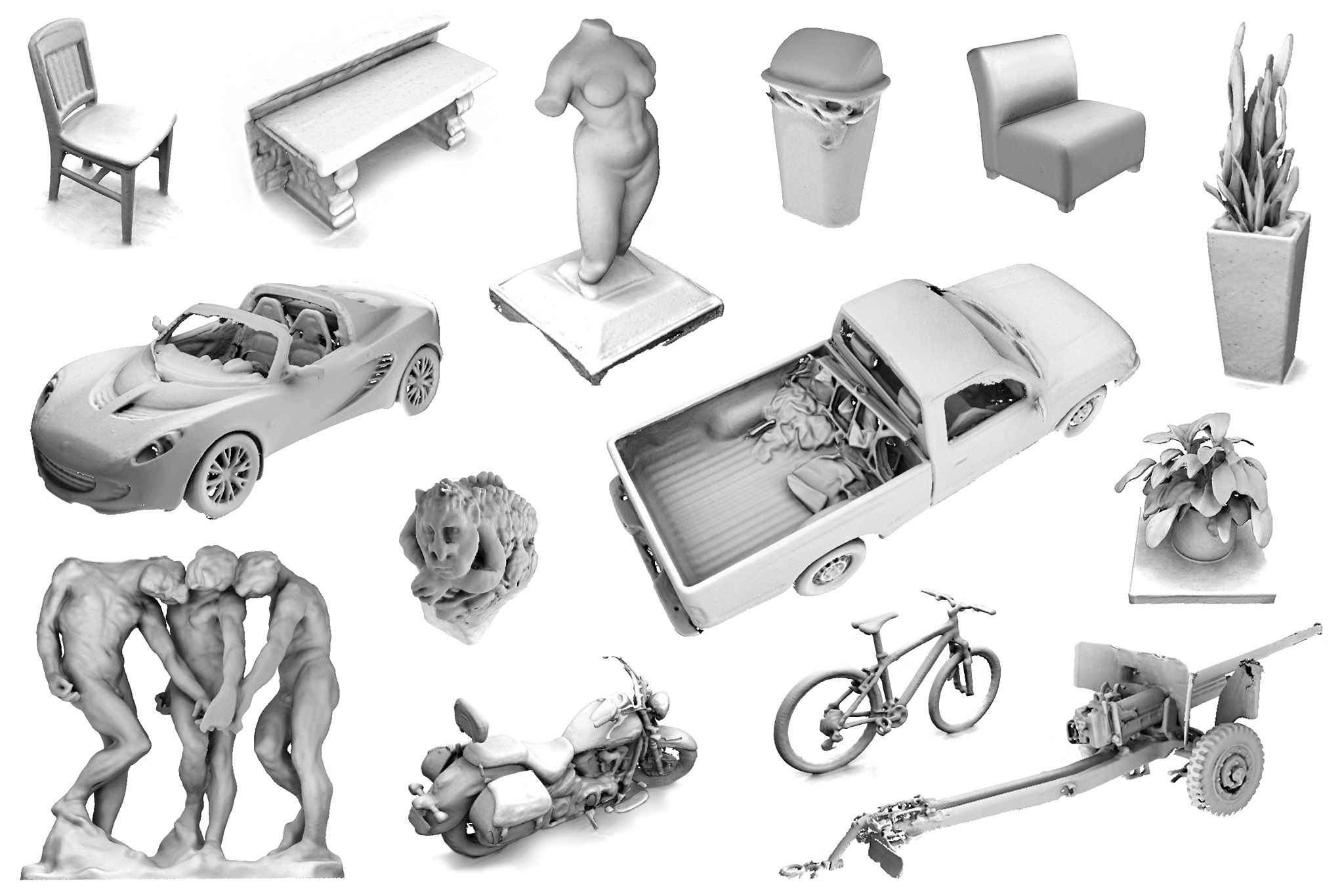

A Large Dataset of Object Scans (2016) [Link]

10K scans in RGBD + reconstructed 3D models in .PLY format.

ObjectNet3D: A Large Scale Database for 3D Object Recognition (2016) [Link]

100 categories, 90,127 images, 201,888 objects in these images and 44,147 3D shapes.

Tasks: region proposal generation, 2D object detection, joint 2D detection and 3D object pose estimation, and image-based 3D shape retrieval

SUNRGB-D 3D Object Detection Challenge [Link]

19 object categories for predicting a 3D bounding box in real-world dimensions.

Training set: 10,355 RGB-D scene images. Testing set: 2,860 RGB-D images.

SceneNN (2016) [Link]

100+ indoor scene meshes with per-vertex and per-pixel annotation.

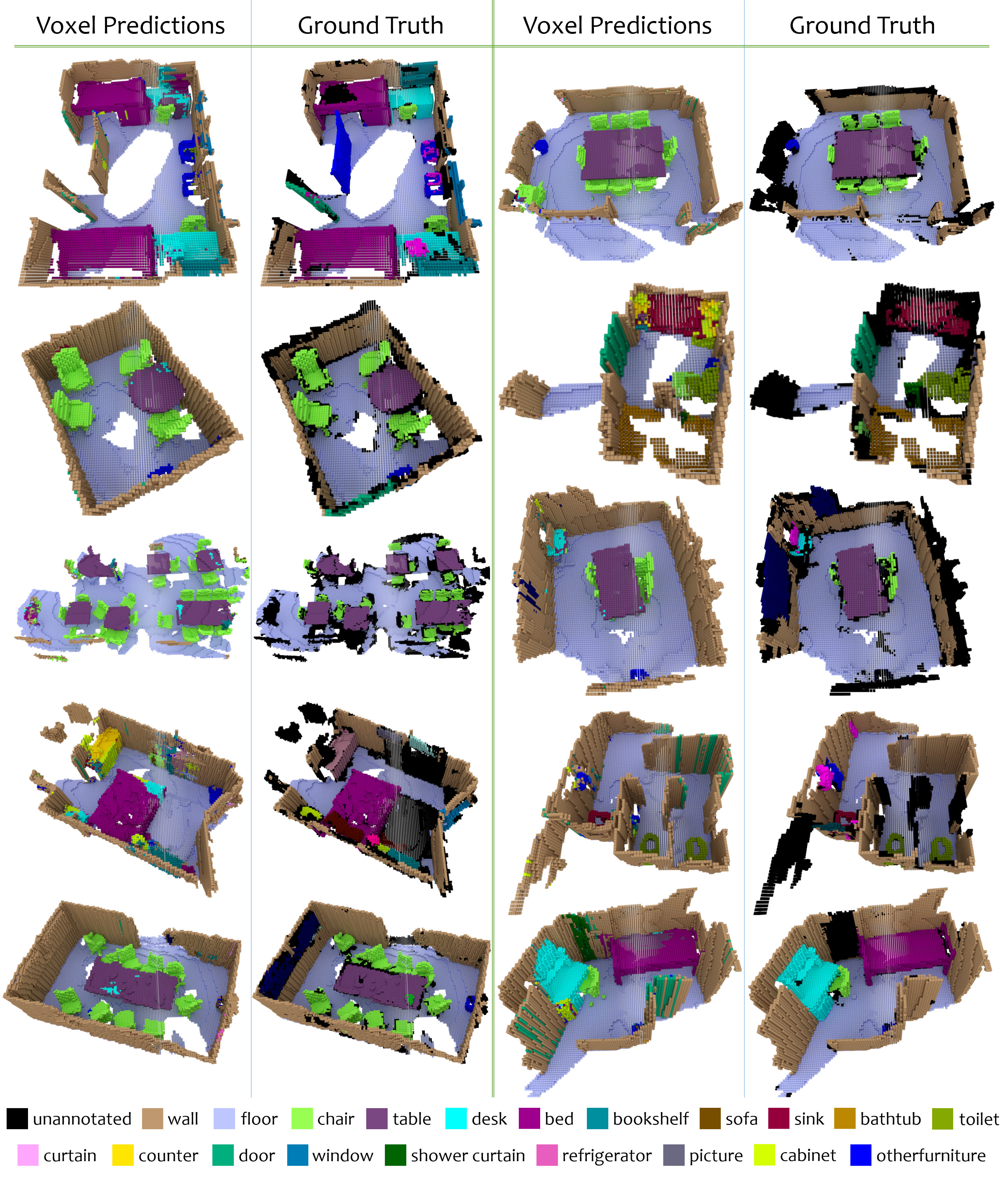

ScanNet (2017) [Link]

An RGB-D video dataset containing 2.5 million views in more than 1500 scans, annotated with 3D camera poses, surface reconstructions, and instance-level semantic segmentations.

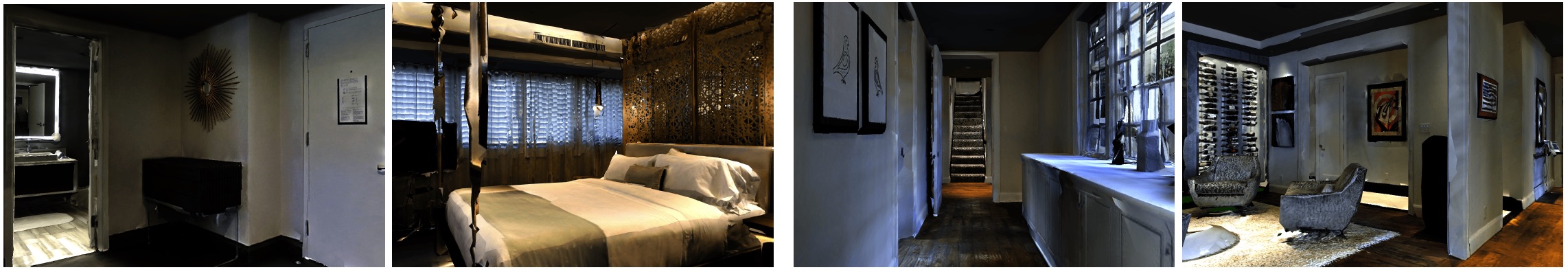

Matterport3D: Learning from RGB-D Data in Indoor Environments (2017) [Link]

10,800 panoramic views (in both RGB and depth) from 194,400 RGB-D images of 90 building-scale scenes of private rooms. Instance-level semantic segmentations are provided for region (living room, kitchen) and object (sofa, TV) categories.

SUNCG: A Large 3D Model Repository for Indoor Scenes (2017) [Link]

The dataset contains over 45K different scenes with manually created realistic room and furniture layouts. All of the scenes are semantically annotated at the object level.

MINOS: Multimodal Indoor Simulator (2017) [Link]

MINOS is a simulator designed to support the development of multisensory models for goal-directed navigation in complex indoor environments. MINOS leverages large datasets of complex 3D environments and supports flexible configuration of multimodal sensor suites. MINOS supports SUNCG and Matterport3D scenes.

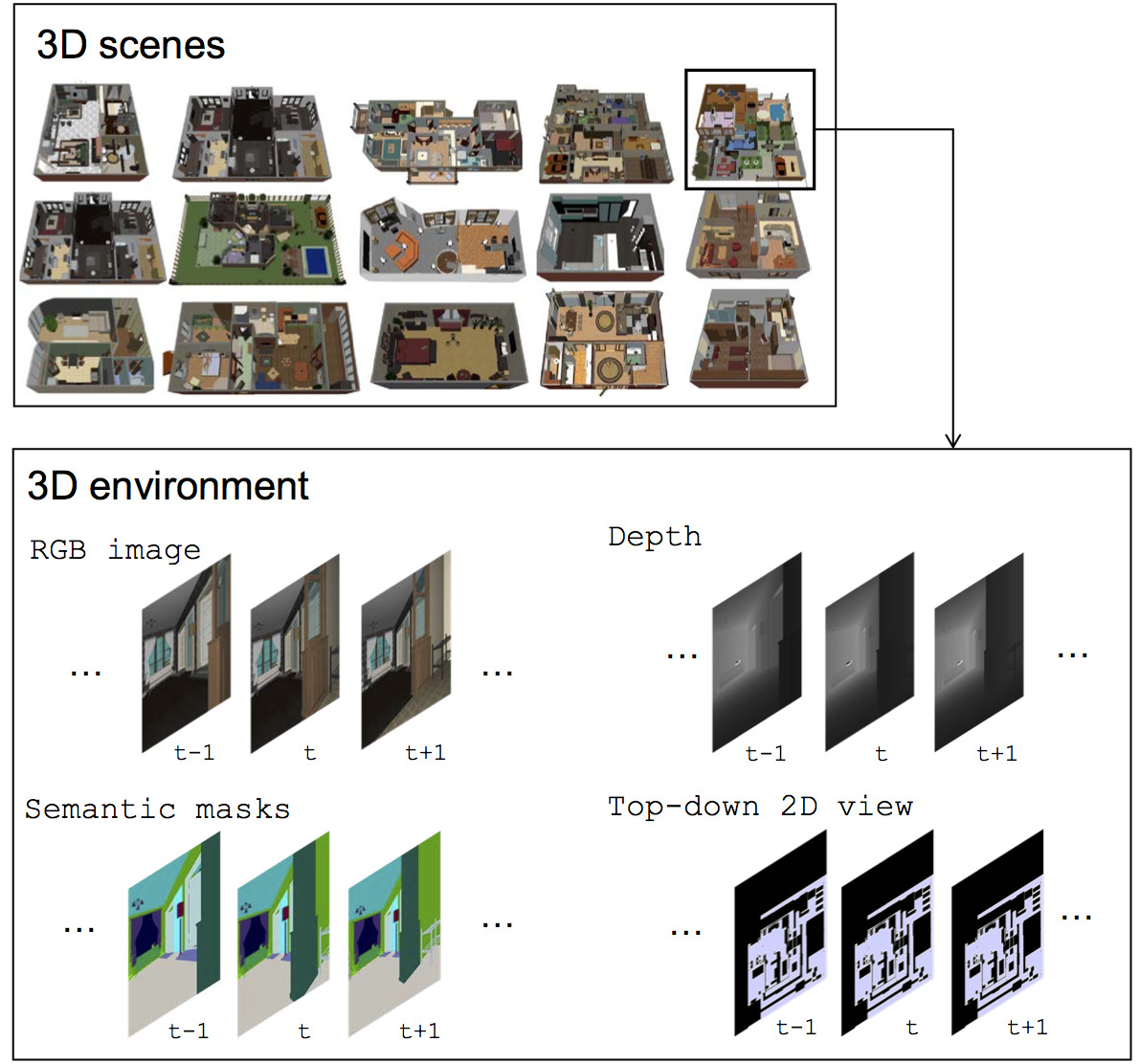

Facebook House3D: A Rich and Realistic 3D Environment (2017) [Link]

House3D is a virtual 3D environment which consists of 45K indoor scenes equipped with a diverse set of scene types, layouts and objects sourced from the SUNCG dataset. All 3D objects are fully annotated with category labels. Agents in the environment have access to observations of multiple modalities, including RGB images, depth, segmentation masks and top-down 2D map views.

HoME: a Household Multimodal Environment (2017) [Link]

HoME integrates over 45,000 diverse 3D house layouts based on the SUNCG dataset, a scale which may facilitate learning, generalization, and transfer. HoME is an open-source, OpenAI Gym-compatible platform extensible to tasks in reinforcement learning, language grounding, sound-based navigation, robotics, and multi-agent learning.

AI2-THOR: Photorealistic Interactive Environments for AI Agents [Link]

AI2-THOR is a photo-realistic, interactive framework for AI agents. There are a total of 120 scenes in version 1.0 of the THOR environment, covering four room categories: kitchens, living rooms, bedrooms, and bathrooms. Each room has a number of actionable objects.

UnrealCV: Virtual Worlds for Computer Vision (2017) [Link][Paper]

An open source project to help computer vision researchers build virtual worlds using Unreal Engine 4.

Single Object Classification

👾 3D ShapeNets: A Deep Representation for Volumetric Shapes (2015) [Paper]

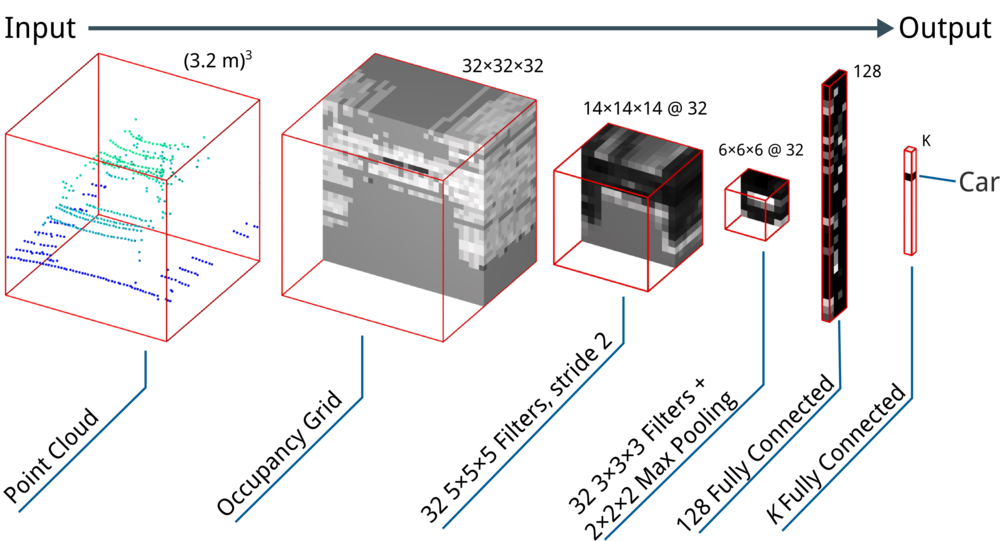

👾 VoxNet: A 3D Convolutional Neural Network for Real-Time Object Recognition (2015) [Paper] [Code]

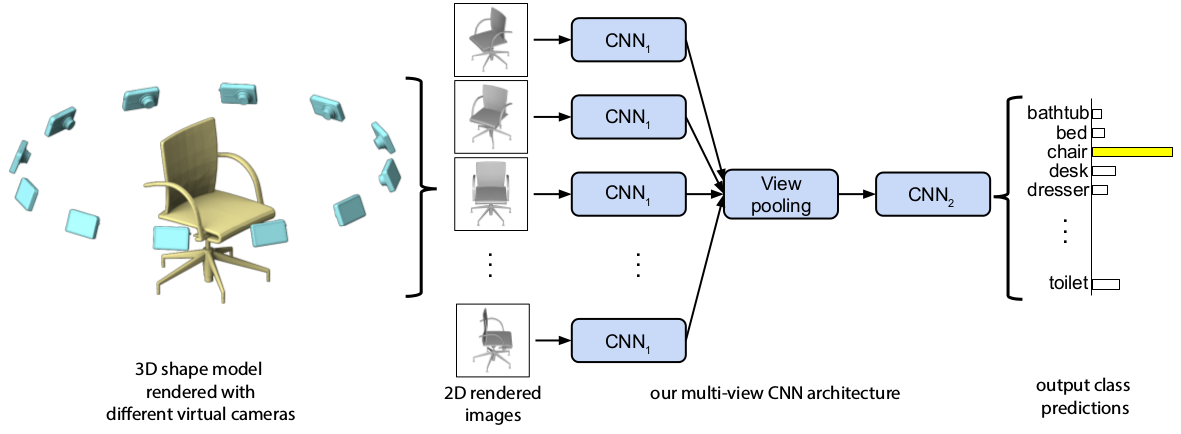

📷 Multi-view Convolutional Neural Networks for 3D Shape Recognition (2015) [Paper]

📷 DeepPano: Deep Panoramic Representation for 3-D Shape Recognition (2015) [Paper]

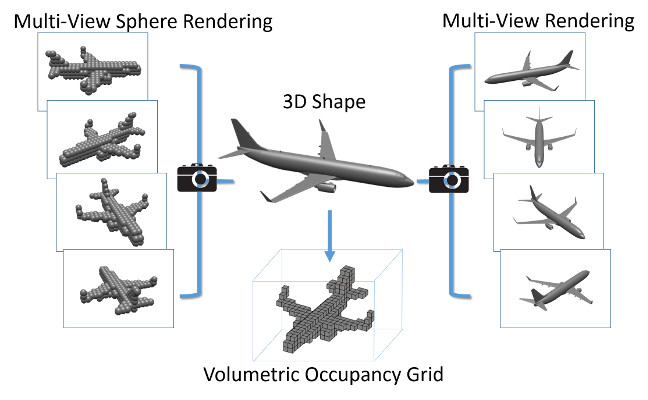

👾📷 FusionNet: 3D Object Classification Using Multiple Data Representations (2016) [Paper]

👾📷 Volumetric and Multi-View CNNs for Object Classification on 3D Data (2016) [Paper] [Code]

👾 Generative and Discriminative Voxel Modeling with Convolutional Neural Networks (2016) [Paper] [Code]

💎 Geometric deep learning on graphs and manifolds using mixture model CNNs (2016) [Link]

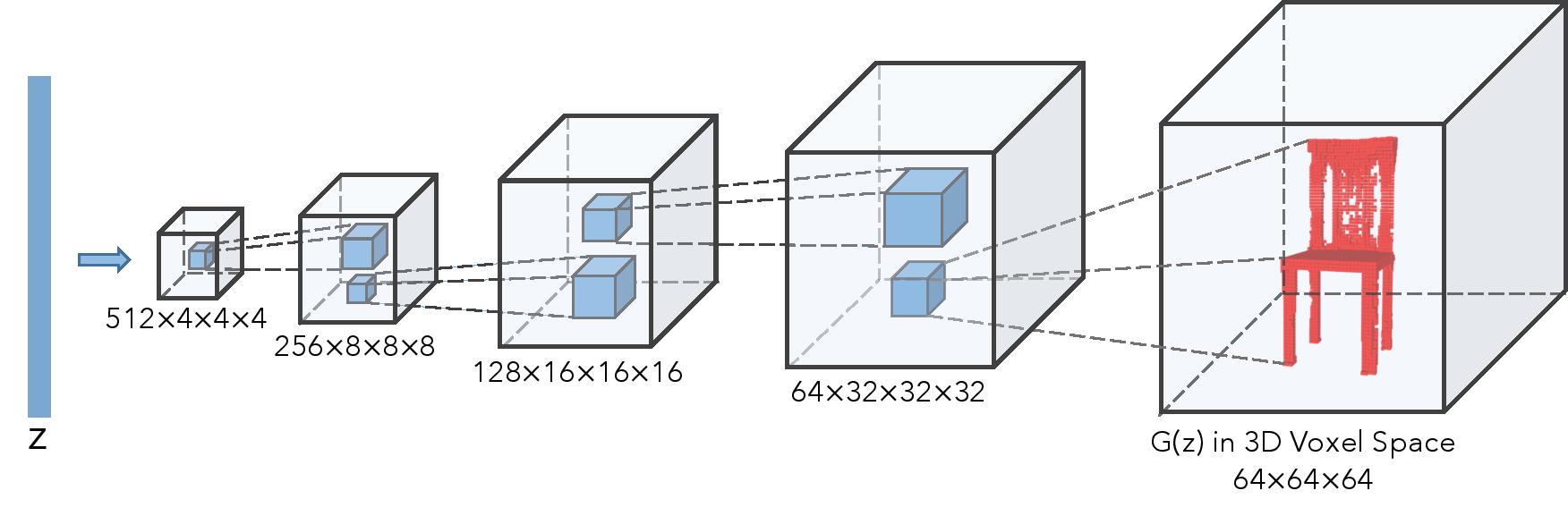

👾 3D GAN: Learning a Probabilistic Latent Space of Object Shapes via 3D Generative-Adversarial Modeling (2016) [Paper] [Code]

👾 FPNN: Field Probing Neural Networks for 3D Data (2016) [Paper] [Code]

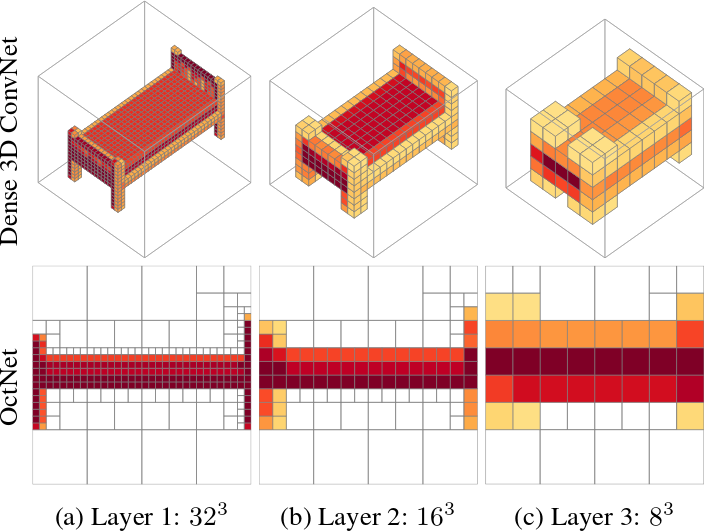

👾 OctNet: Learning Deep 3D Representations at High Resolutions (2017) [Paper] [Code]

👾 O-CNN: Octree-based Convolutional Neural Networks for 3D Shape Analysis (2017) [Paper] [Code]

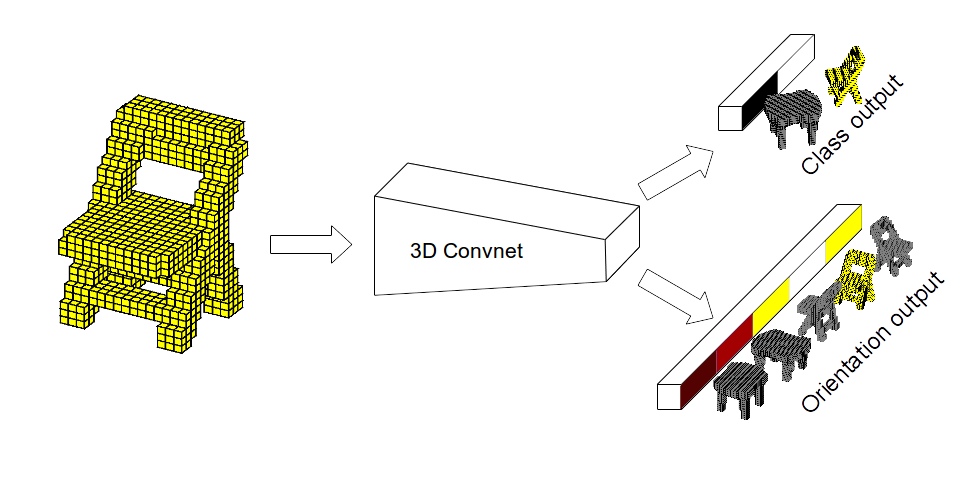

👾 Orientation-boosted voxel nets for 3D object recognition (2017) [Paper] [Code]

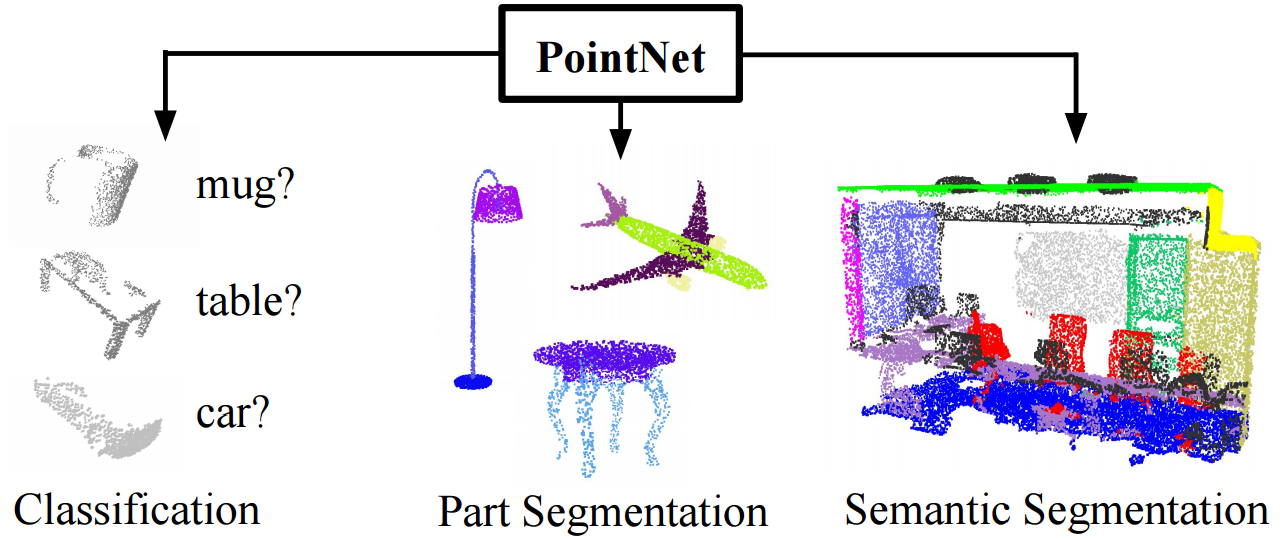

🎲 PointNet: Deep Learning on Point Sets for 3D Classification and Segmentation (2017) [Paper] [Code]

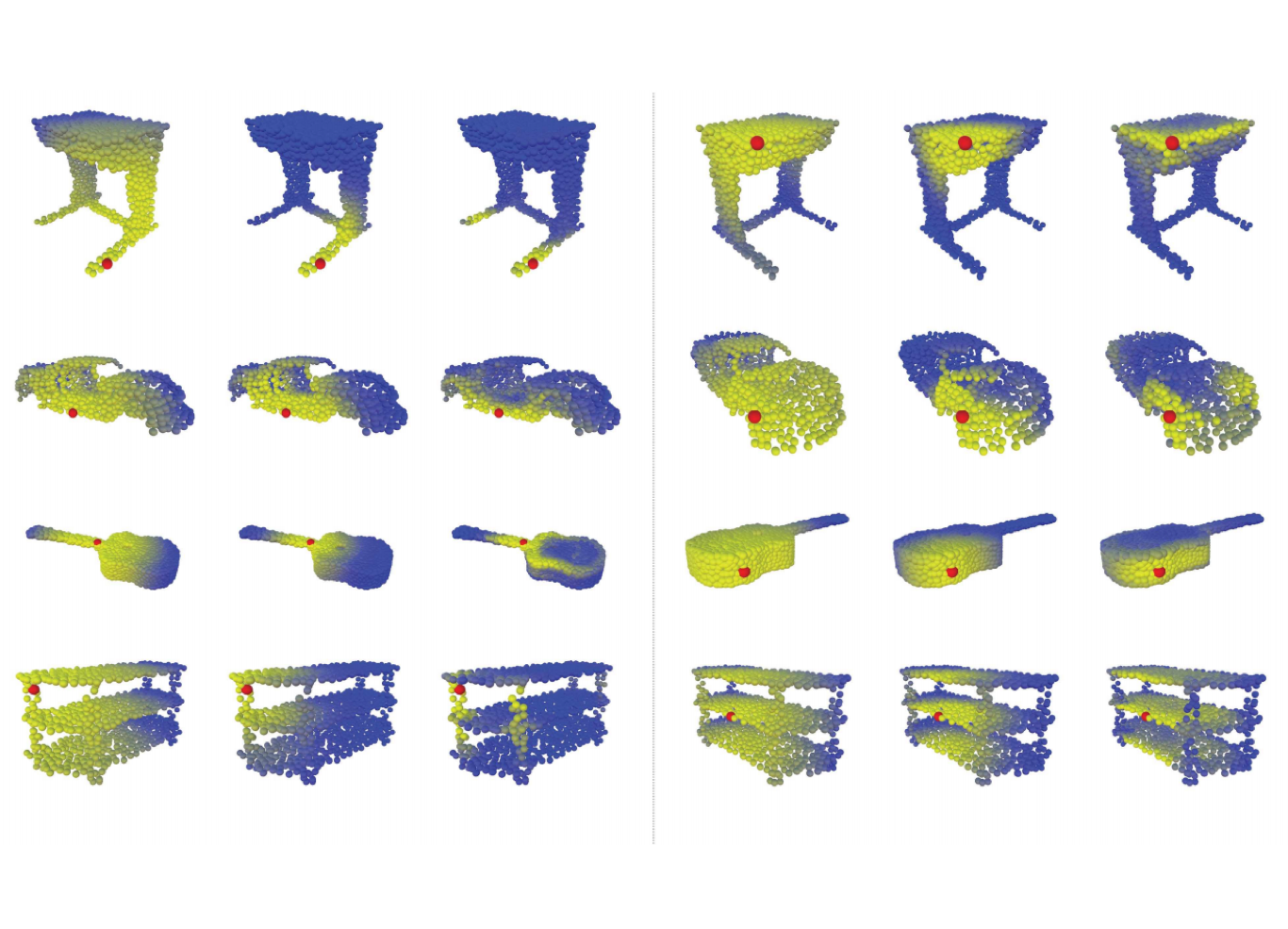

🎲 PointNet++: Deep Hierarchical Feature Learning on Point Sets in a Metric Space (2017) [Paper] [Code]

📷 Feedback Networks (2017) [Paper] [Code]

🎲 Escape from Cells: Deep Kd-Networks for The Recognition of 3D Point Cloud Models (2017) [Paper]

🎲 Dynamic Graph CNN for Learning on Point Clouds (2018) [Paper]

🎲 PointCNN (2018) [Paper]

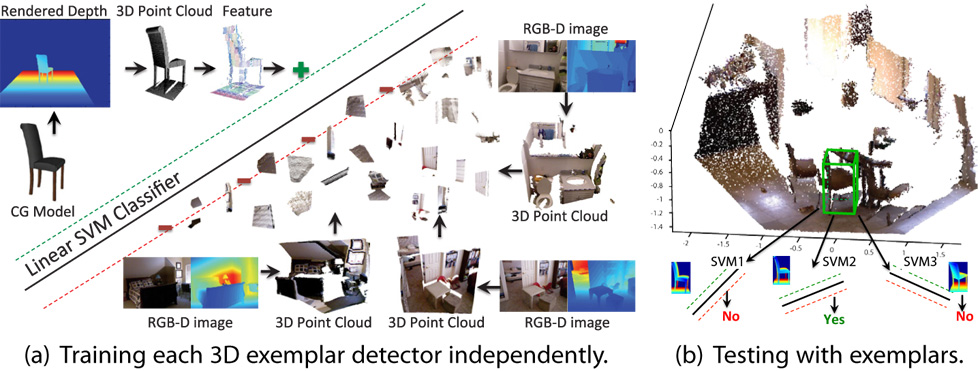

Multiple Objects Detection

Sliding Shapes for 3D Object Detection in Depth Images (2014) [Paper]

Object Detection in 3D Scenes Using CNNs in Multi-view Images (2016) [Paper]

Deep Sliding Shapes for Amodal 3D Object Detection in RGB-D Images (2016) [Paper] [Code]

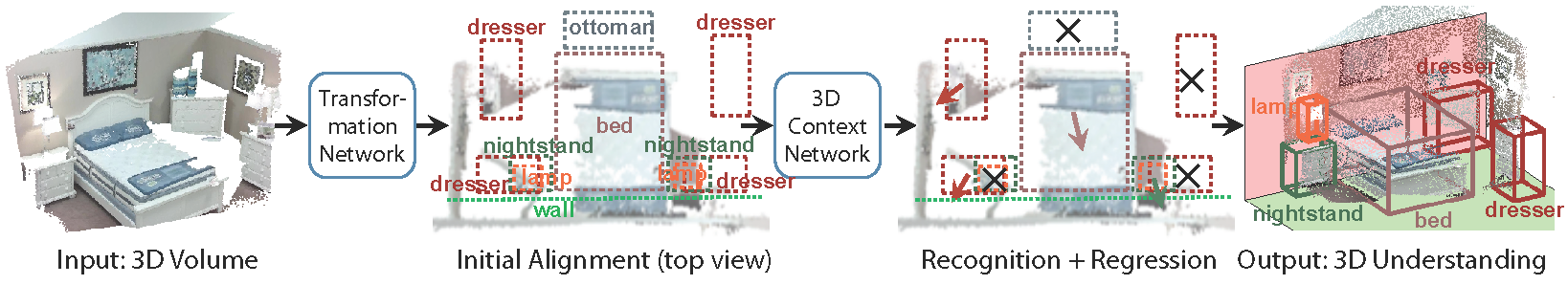

DeepContext: Context-Encoding Neural Pathways for 3D Holistic Scene Understanding (2016) [Paper]

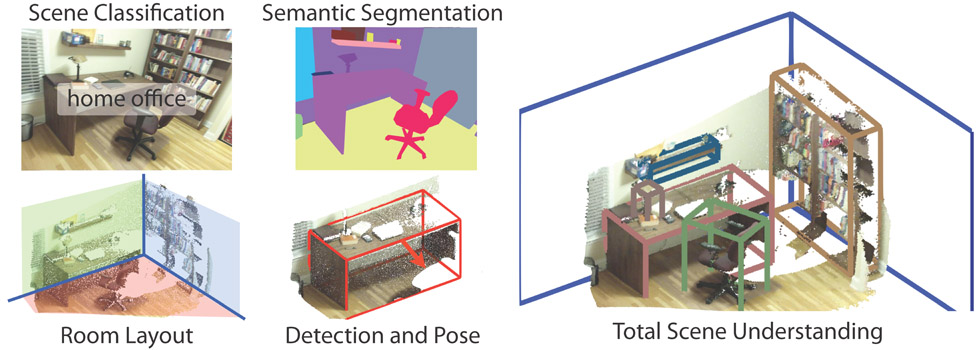

SUN RGB-D: A RGB-D Scene Understanding Benchmark Suite (2017) [Paper]

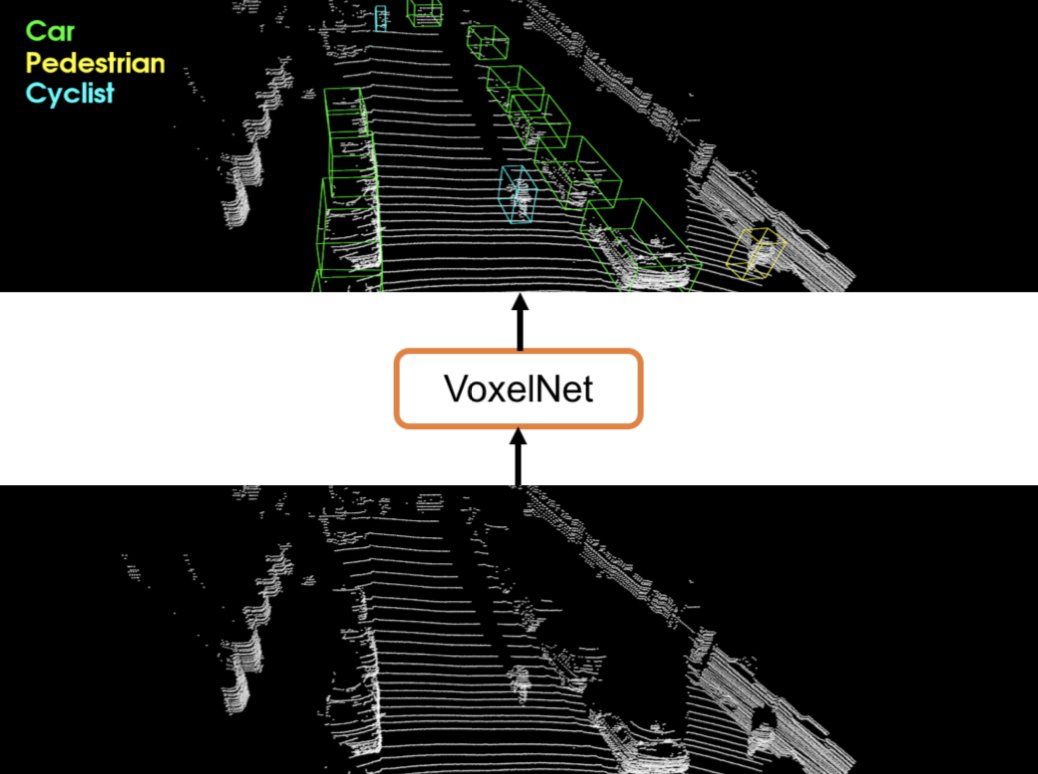

VoxelNet: End-to-End Learning for Point Cloud Based 3D Object Detection (2017) [Paper]

Frustum PointNets for 3D Object Detection from RGB-D Data (2017) [Paper]

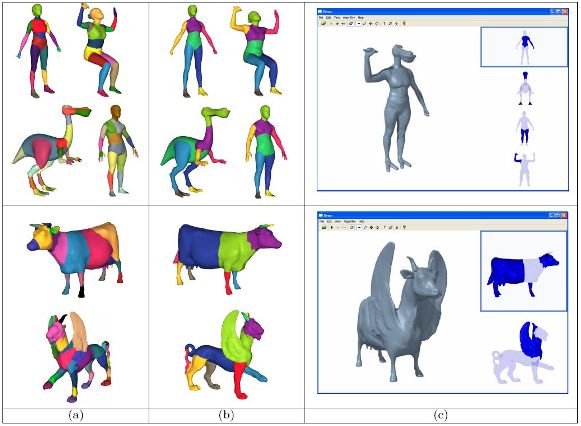

Scene/Object Semantic Segmentation

Learning 3D Mesh Segmentation and Labeling (2010) [Paper]

Unsupervised Co-Segmentation of a Set of Shapes via Descriptor-Space Spectral Clustering (2011) [Paper]

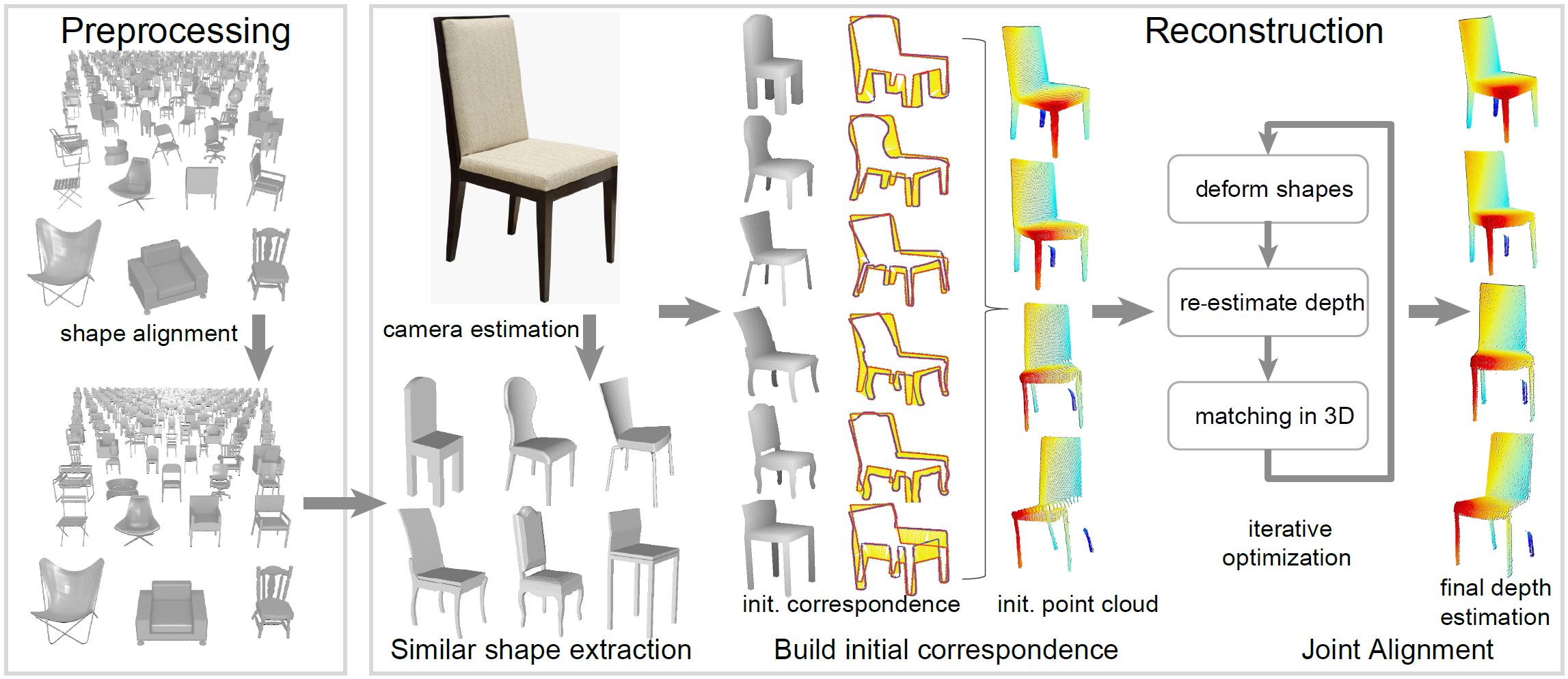

Single-View Reconstruction via Joint Analysis of Image and Shape Collections (2015) [Paper] [Code]

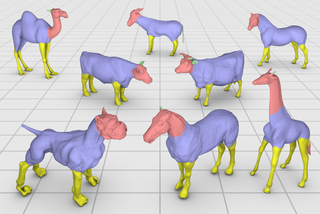

3D Shape Segmentation with Projective Convolutional Networks (2017) [Paper] [Code]

Learning Hierarchical Shape Segmentation and Labeling from Online Repositories (2017) [Paper]

🎲 PointNet: Deep Learning on Point Sets for 3D Classification and Segmentation (2017) [Paper] [Code]

🎲 PointNet++: Deep Hierarchical Feature Learning on Point Sets in a Metric Space (2017) [Paper] [Code]

🎲 3D Graph Neural Networks for RGBD Semantic Segmentation (2017) [Paper]

🎲 3DCNN-DQN-RNN: A Deep Reinforcement Learning Framework for Semantic Parsing of Large-scale 3D Point Clouds (2017) [Paper]

🎲👾 Semantic Segmentation of Indoor Point Clouds using Convolutional Neural Networks (2017) [Paper]

🎲👾 SEGCloud: Semantic Segmentation of 3D Point Clouds (2017) [Paper]

🎲👾 Large-Scale 3D Shape Reconstruction and Segmentation from ShapeNet Core55 (2017) [Paper]

🎲 Dynamic Graph CNN for Learning on Point Clouds (2018) [Paper]

🎲 PointCNN (2018) [Paper]

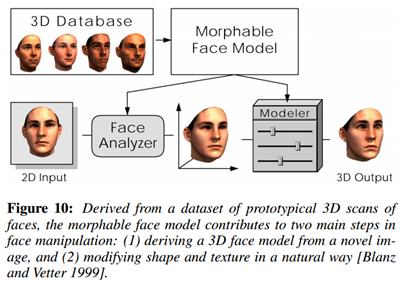

3D Synthesis/Reconstruction

Parametric Morphable Model-based methods

A Morphable Model For The Synthesis Of 3D Faces (1999) [Paper][Code]

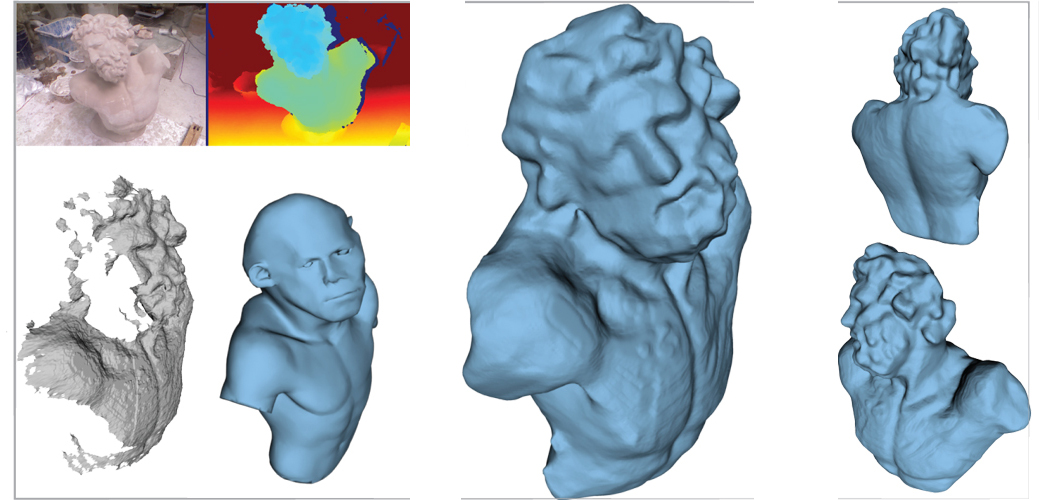

The Space of Human Body Shapes: Reconstruction and Parameterization from Range Scans (2003) [Paper]

Part-based Template Learning methods

Modeling by Example (2004) [Paper]

Model Composition from Interchangeable Components (2007) [Paper]

Data-Driven Suggestions for Creativity Support in 3D Modeling (2010) [Paper]

Photo-Inspired Model-Driven 3D Object Modeling (2011) [Paper]

Probabilistic Reasoning for Assembly-Based 3D Modeling (2011) [Paper]

A Probabilistic Model for Component-Based Shape Synthesis (2012) [Paper]

Structure Recovery by Part Assembly (2012) [Paper]

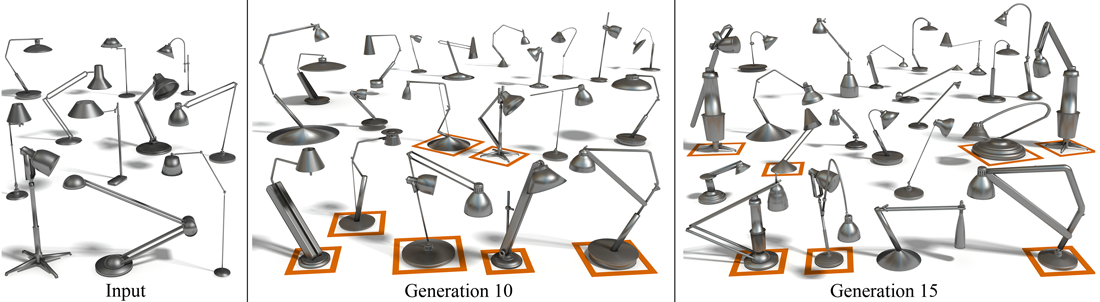

Fit and Diverse: Set Evolution for Inspiring 3D Shape Galleries (2012) [Paper]

AttribIt: Content Creation with Semantic Attributes (2013) [Paper]

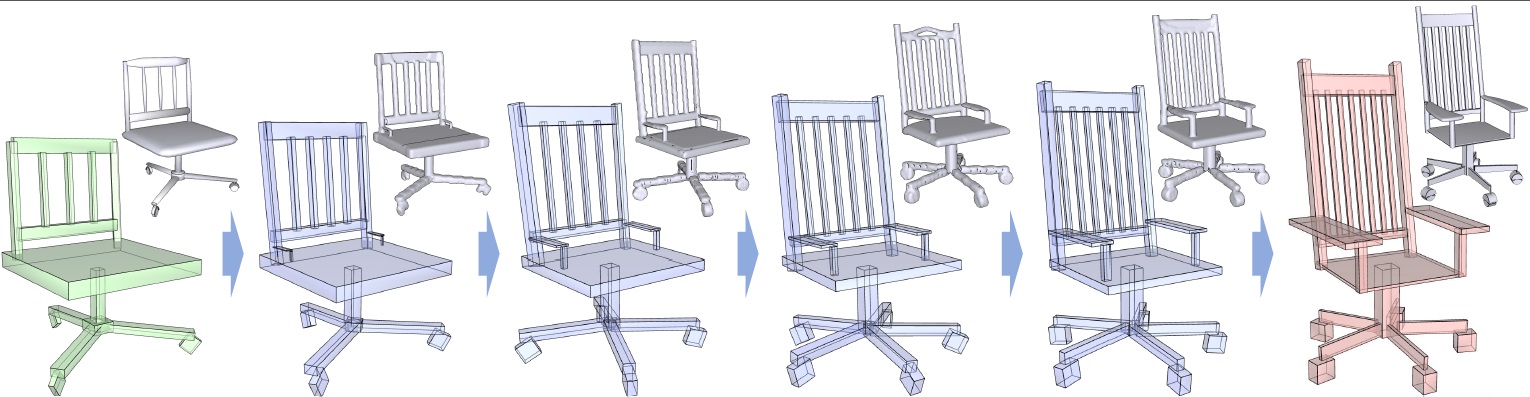

Learning Part-based Templates from Large Collections of 3D Shapes (2013) [Paper]

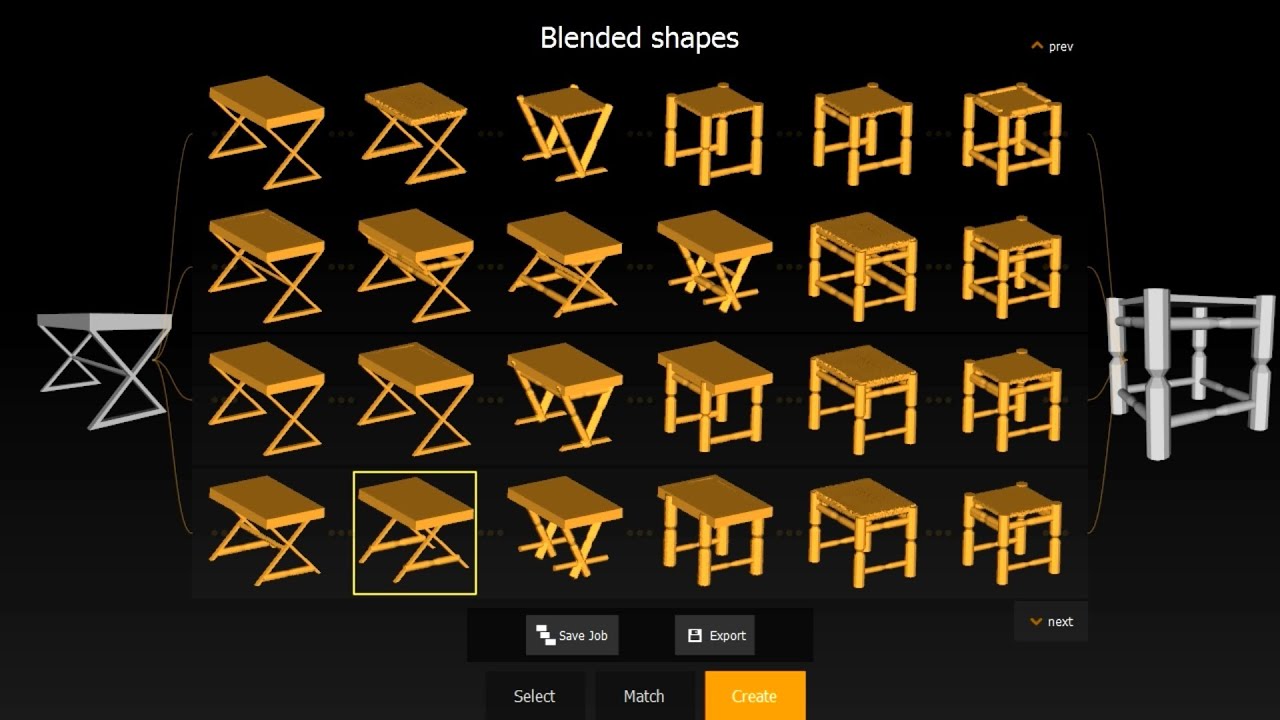

Topology-Varying 3D Shape Creation via Structural Blending (2014) [Paper]

Estimating Image Depth using Shape Collections (2014) [Paper]

Single-View Reconstruction via Joint Analysis of Image and Shape Collections (2015) [Paper]

Interchangeable Components for Hands-On Assembly Based Modeling (2016) [Paper]

Shape Completion from a Single RGBD Image (2016) [Paper]

Deep Learning Methods

📷 Learning to Generate Chairs, Tables and Cars with Convolutional Networks (2014) [Paper]

📷 Weakly-supervised Disentangling with Recurrent Transformations for 3D View Synthesis (2015, NIPS) [Paper] [Code]

🎲 Analysis and synthesis of 3D shape families via deep-learned generative models of surfaces (2015) [Paper]

📷 Multi-view 3D Models from Single Images with a Convolutional Network (2016) [Paper] [Code]

📷 View Synthesis by Appearance Flow (2016) [Paper] [Code]

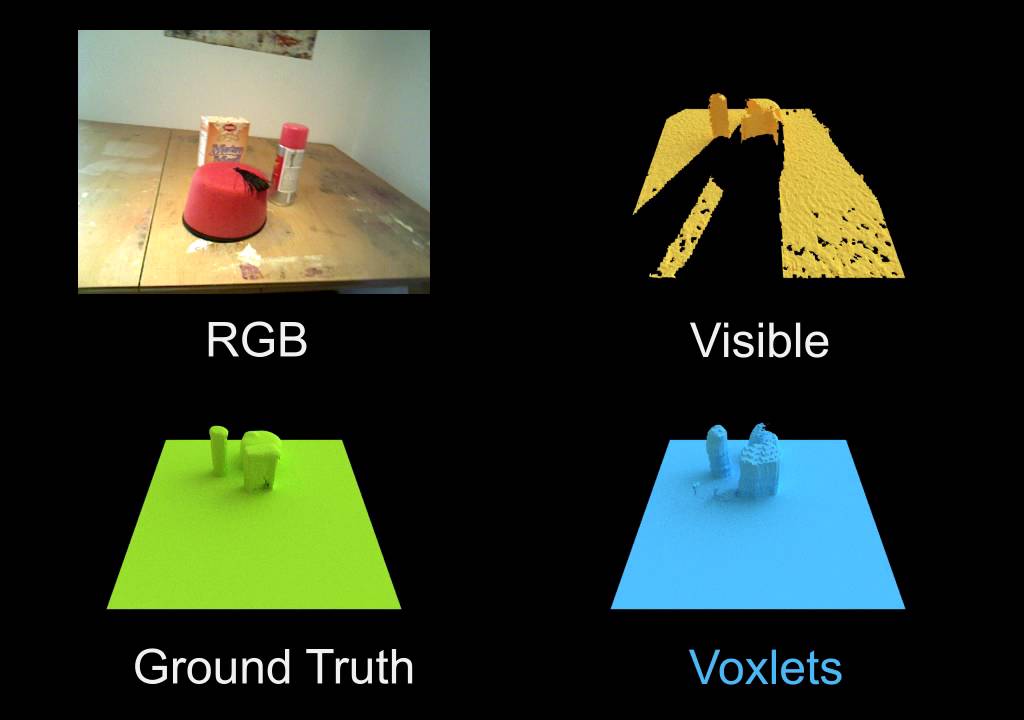

👾 Voxlets: Structured Prediction of Unobserved Voxels From a Single Depth Image (2016) [Paper] [Code]

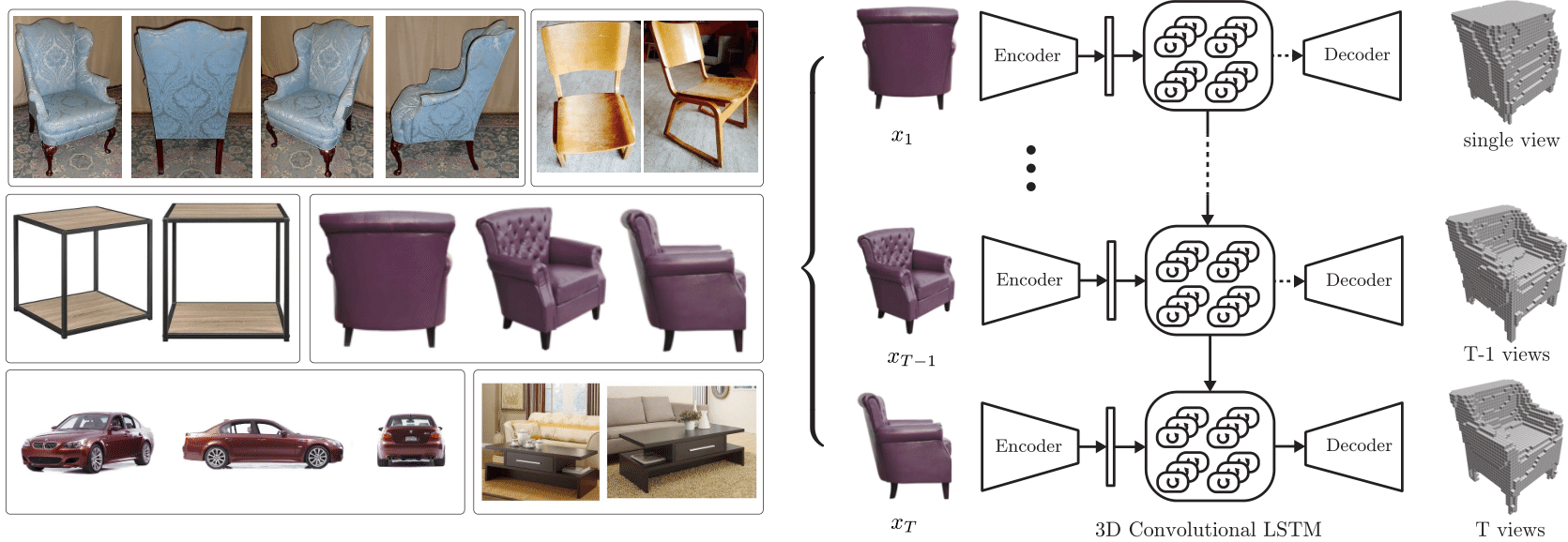

👾 3D-R2N2: 3D Recurrent Reconstruction Neural Network (2016) [Paper] [Code]

👾 Perspective Transformer Nets: Learning Single-View 3D Object Reconstruction without 3D Supervision (2016) [Paper]

👾 TL-Embedding Network: Learning a Predictable and Generative Vector Representation for Objects (2016) [Paper]

👾 3D GAN: Learning a Probabilistic Latent Space of Object Shapes via 3D Generative-Adversarial Modeling (2016) [Paper]

👾 3D Shape Induction from 2D Views of Multiple Objects (2016) [Paper]

📷 Unsupervised Learning of 3D Structure from Images (2016) [Paper]

👾 Generative and Discriminative Voxel Modeling with Convolutional Neural Networks (2016) [Paper] [Code]

📷 Multi-view Supervision for Single-view Reconstruction via Differentiable Ray Consistency (2017) [Paper]

📷 Synthesizing 3D Shapes via Modeling Multi-View Depth Maps and Silhouettes with Deep Generative Networks (2017) [Paper] [Code]

👾 Octree Generating Networks: Efficient Convolutional Architectures for High-resolution 3D Outputs (2017) [Paper] [Code]

👾 Hierarchical Surface Prediction for 3D Object Reconstruction (2017) [Paper]

👾 OctNetFusion: Learning Depth Fusion from Data (2017) [Paper] [Code]

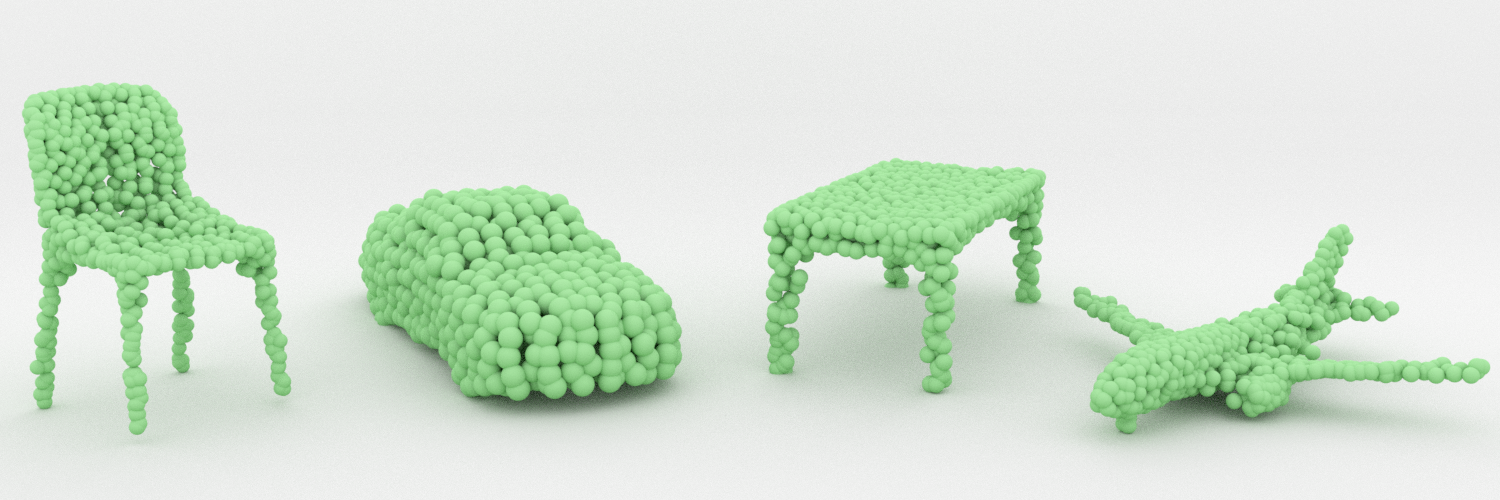

🎲 A Point Set Generation Network for 3D Object Reconstruction from a Single Image (2017) [Paper] [Code]

🎲 Learning Representations and Generative Models for 3D Point Clouds (2017) [Paper] [Code]

🎲 Shape Generation using Spatially Partitioned Point Clouds (2017) [Paper]

🎲 PCPNET: Learning Local Shape Properties from Raw Point Clouds (2017) [Paper]

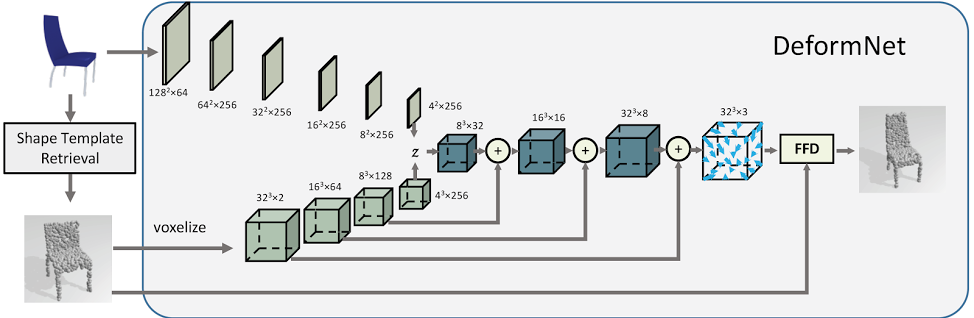

🎲 DeformNet: Free-Form Deformation Network for 3D Shape Reconstruction from a Single Image (2017) [Paper]

📷 Transformation-Grounded Image Generation Network for Novel 3D View Synthesis (2017) [Paper] [Code]

📷 Tag Disentangled Generative Adversarial Networks for Object Image Re-rendering (2017) [Paper]

📷 3D Shape Reconstruction from Sketches via Multi-view Convolutional Networks (2017) [Paper] [Code]

👾 Interactive 3D Modeling with a Generative Adversarial Network (2017) [Paper]

📷👾 Weakly supervised 3D Reconstruction with Adversarial Constraint (2017) [Paper] [Code]

💎 Exploring Generative 3D Shapes Using Autoencoder Networks (Autodesk 2017) [Paper]

💊 GRASS: Generative Recursive Autoencoders for Shape Structures (SIGGRAPH 2017) [Paper] [Code] [Code]

💎 Mesh-based Autoencoders for Localized Deformation Component Analysis (2017) [Paper]

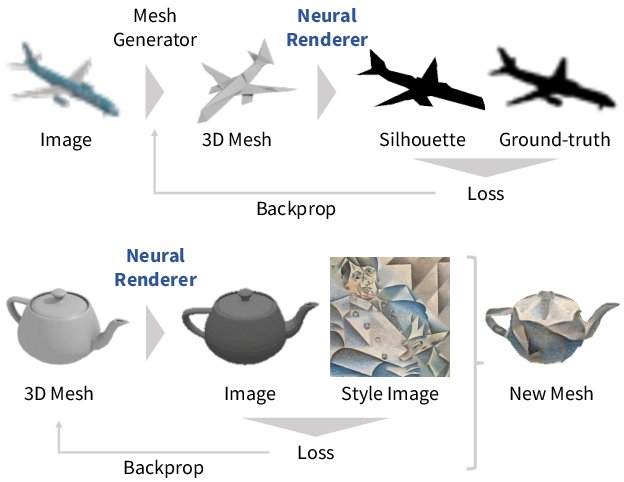

💎 Neural 3D Mesh Renderer (2017) [Paper] [Code]

🎲👾 Large-Scale 3D Shape Reconstruction and Segmentation from ShapeNet Core55 (2017) [Paper]

👾 Pix2vox: Sketch-Based 3D Exploration with Stacked Generative Adversarial Networks (2017) [Code]

📷👾 What You Sketch Is What You Get: 3D Sketching using Multi-View Deep Volumetric Prediction (2017) [Paper]

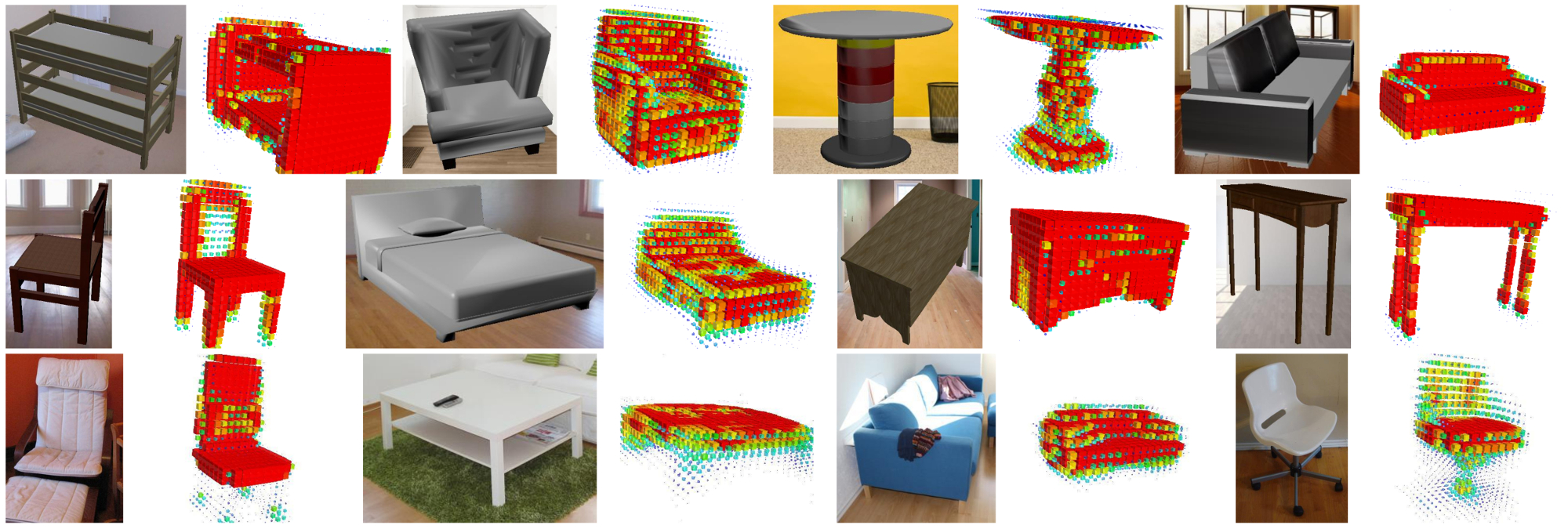

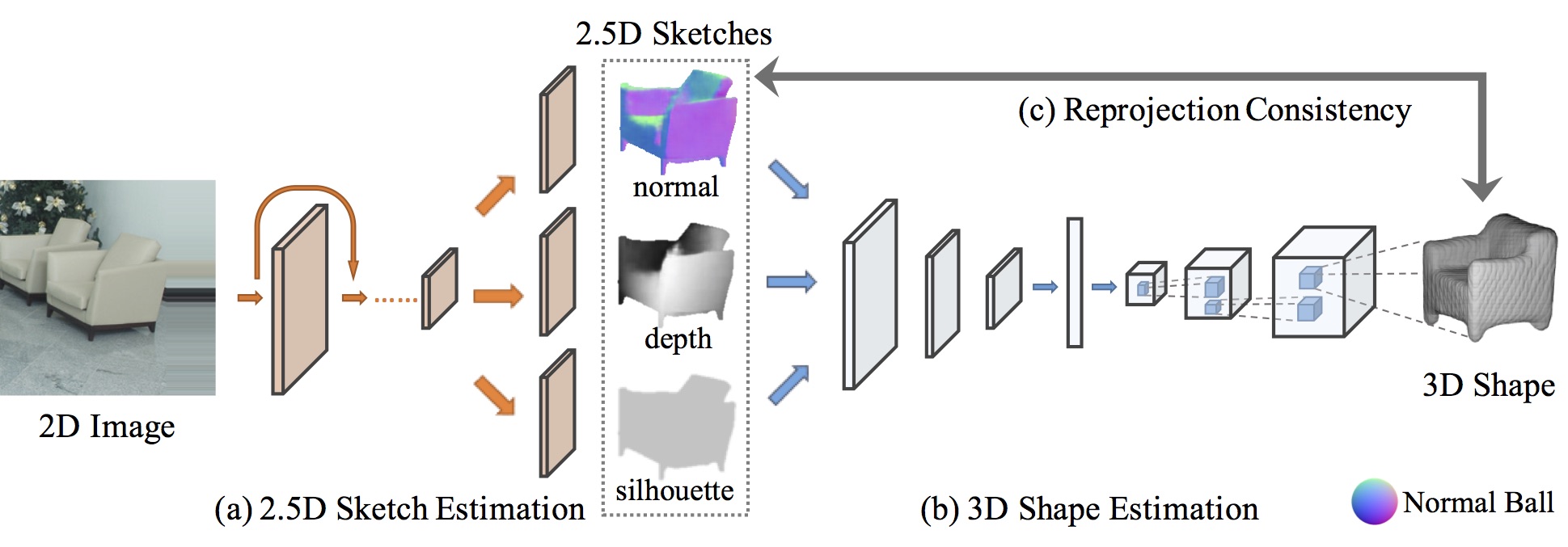

📷👾 MarrNet: 3D Shape Reconstruction via 2.5D Sketches (2017) [Paper]

🎲 PU-Net: Point Cloud Upsampling Network (2018) [Paper] [Code]

Style Transfer

Style-Content Separation by Anisotropic Part Scales (2010) [Paper]

Design Preserving Garment Transfer (2012) [Paper]

Analogy-Driven 3D Style Transfer (2014) [Paper]

Elements of Style: Learning Perceptual Shape Style Similarity (2015) [Paper] [Code]

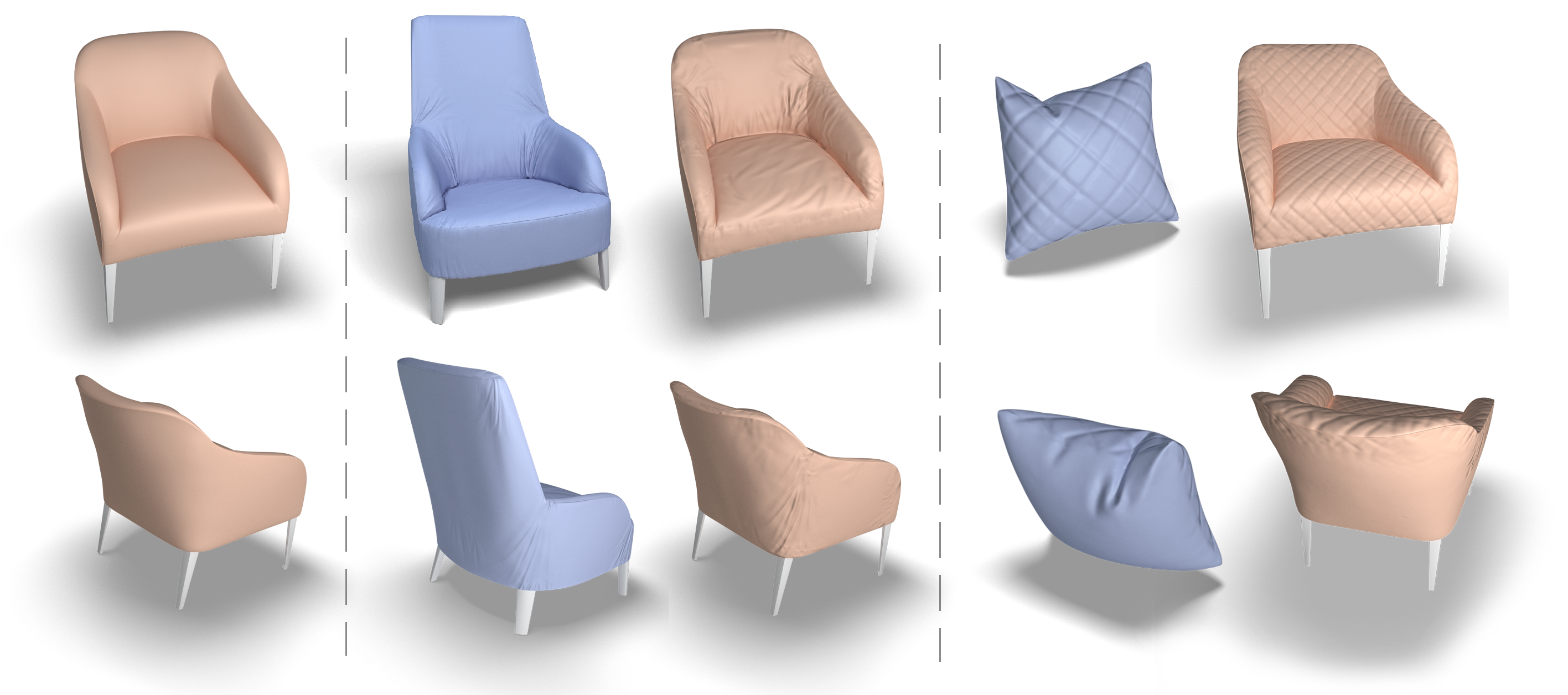

Functionality Preserving Shape Style Transfer (2016) [Paper] [Code]

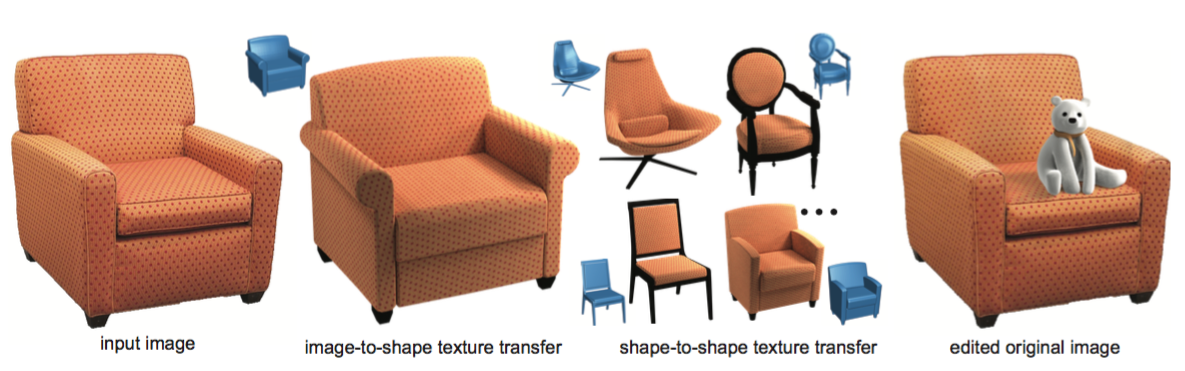

Unsupervised Texture Transfer from Images to Model Collections (2016) [Paper]

Learning Detail Transfer based on Geometric Features (2017) [Paper]

Neural 3D Mesh Renderer (2017) [Paper] [Code]

Scene Synthesis

Make It Home: Automatic Optimization of Furniture Arrangement (2011, SIGGRAPH) [Paper]

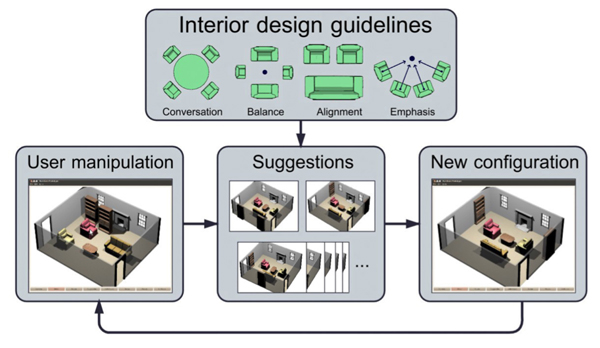

Interactive Furniture Layout Using Interior Design Guidelines (2011) [Paper]

Synthesizing Open Worlds with Constraints using Locally Annealed Reversible Jump MCMC (2012) [Paper]

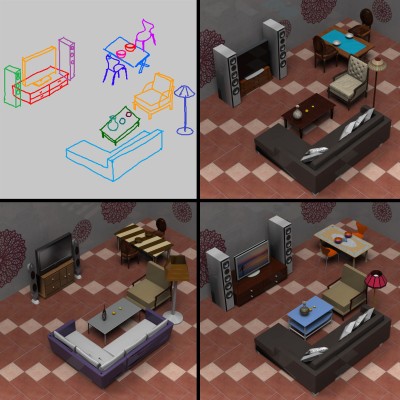

Sketch2Scene: Sketch-based Co-retrieval and Co-placement of 3D Models (2013) [Paper]

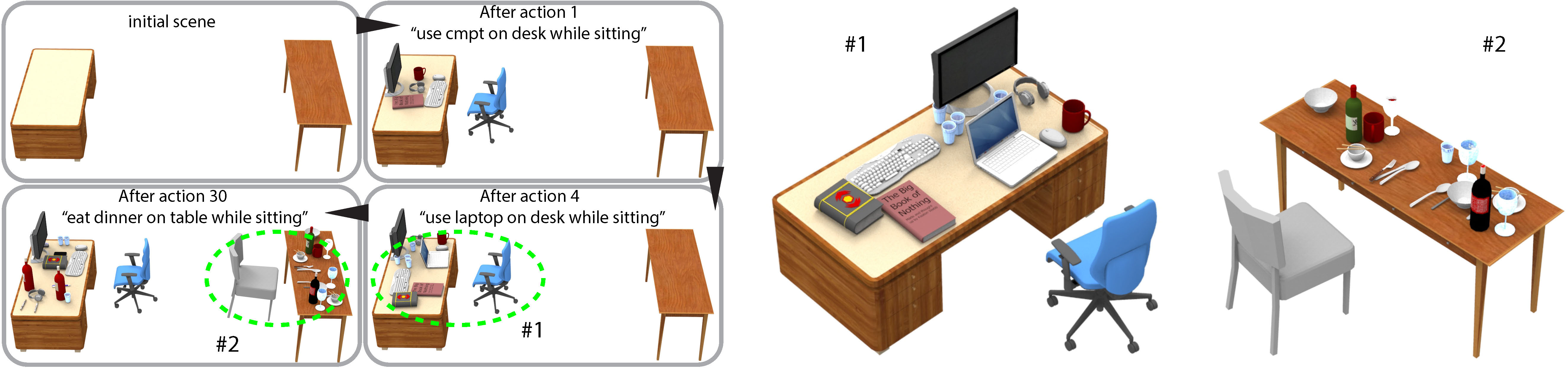

Action-Driven 3D Indoor Scene Evolution (2016) [Paper]

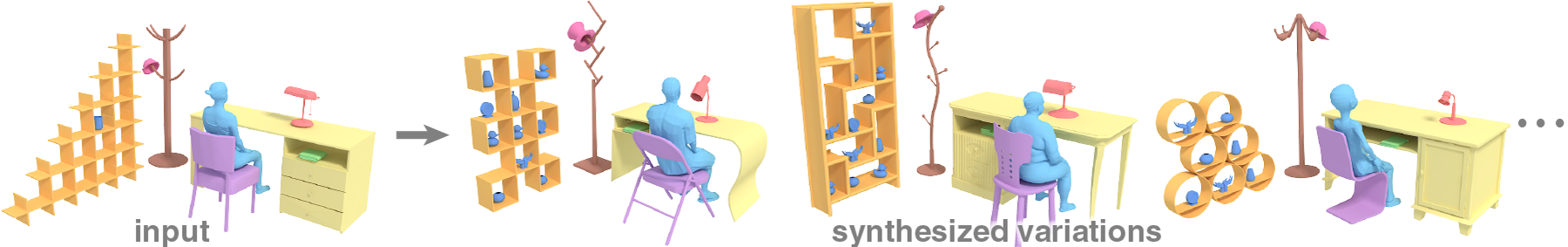

Relationship Templates for Creating Scene Variations (2016) [Paper]

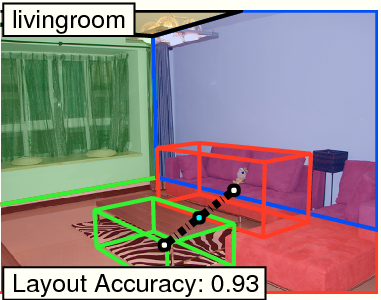

IM2CAD (2017) [Paper]

Raster-to-Vector: Revisiting Floorplan Transformation (2017, ICCV) [Paper] [Code]

Scene Understanding

Understanding Indoor Scenes Using 3D Geometric Phrases (2013) [Paper]

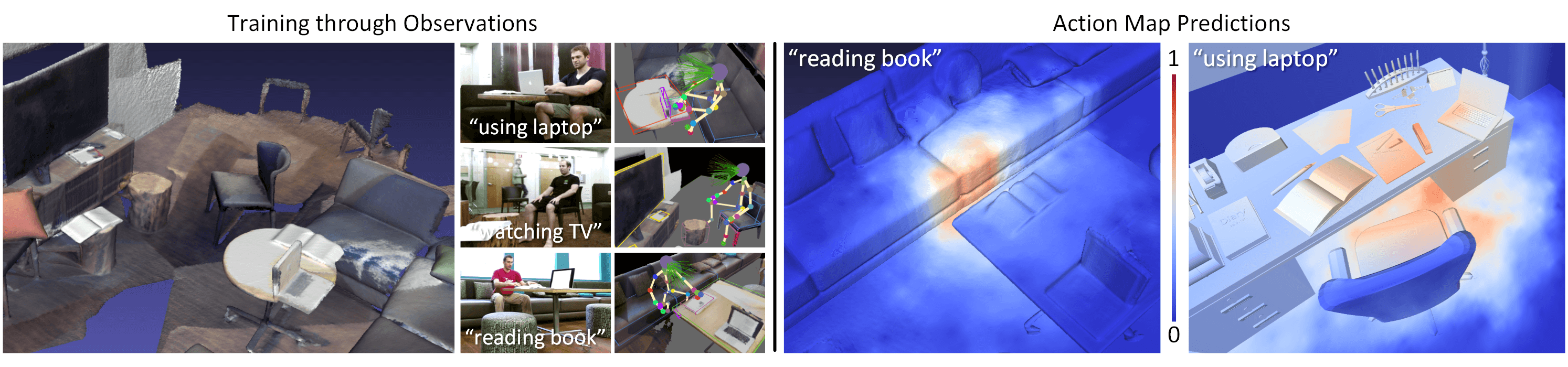

SceneGrok: Inferring Action Maps in 3D Environments (2014, SIGGRAPH) [Paper]

PanoContext: A Whole-room 3D Context Model for Panoramic Scene Understanding (2014) [Paper]

Learning Informative Edge Maps for Indoor Scene Layout Prediction (2015) [Paper]

Rent3D: Floor-Plan Priors for Monocular Layout Estimation (2015) [Paper]

A Coarse-to-Fine Indoor Layout Estimation (CFILE) Method (2016) [Paper]

DeLay: Robust Spatial Layout Estimation for Cluttered Indoor Scenes (2016) [Paper]

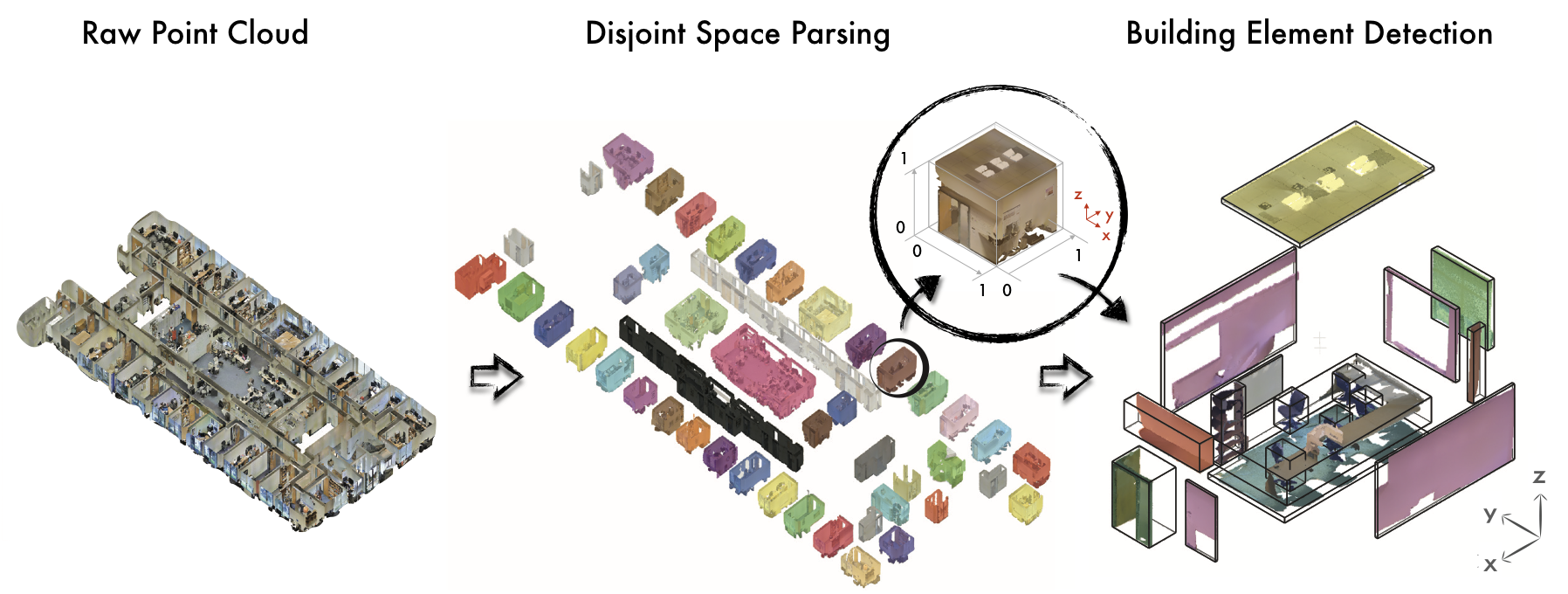

3D Semantic Parsing of Large-Scale Indoor Spaces (2016) [Paper] [Code]

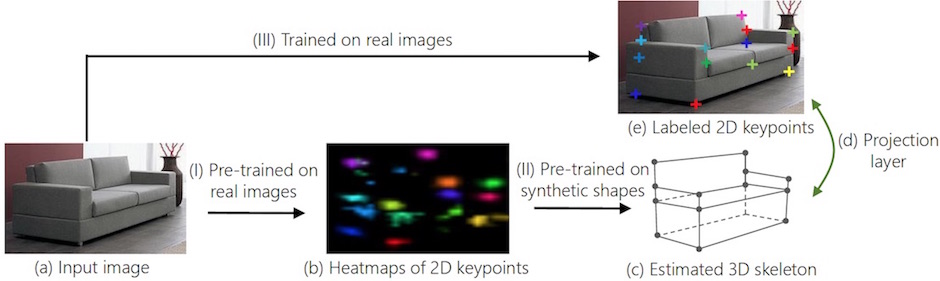

Single Image 3D Interpreter Network (2016) [Paper] [Code]

Deep Multi-Modal Image Correspondence Learning (2016) [Paper]

Physically-Based Rendering for Indoor Scene Understanding Using Convolutional Neural Networks (2017) [Paper] [Code] [Code] [Code] [Code]

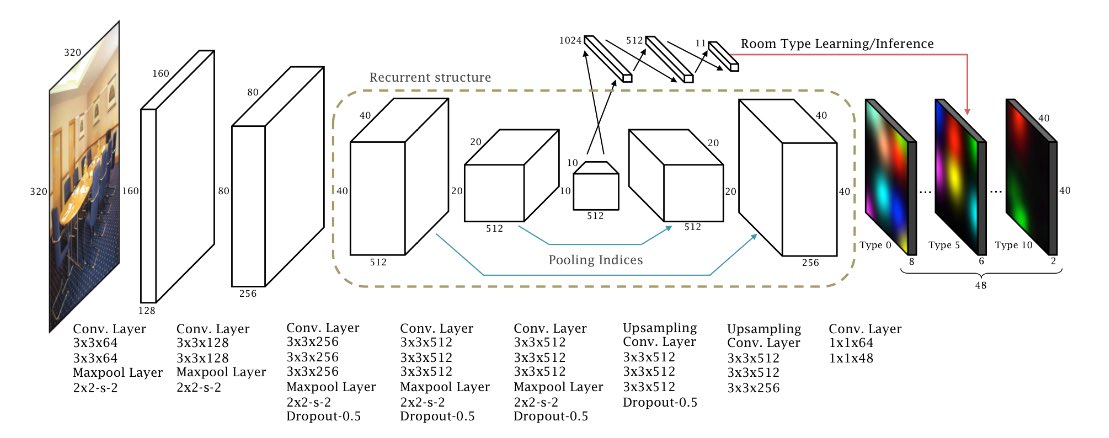

RoomNet: End-to-End Room Layout Estimation (2017) [Paper]

SUN RGB-D: A RGB-D Scene Understanding Benchmark Suite (2017) [Paper]

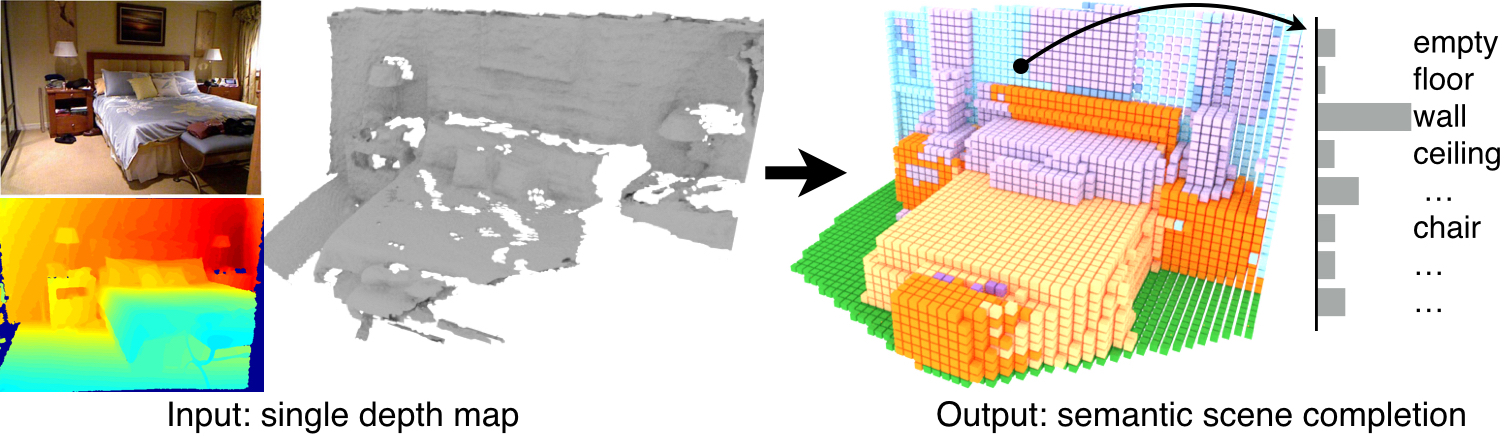

Semantic Scene Completion from a Single Depth Image (2017) [Paper] [Code]
